krizby

As I pointed out earlier, there will be no 10-15% extra performance. The Titan RTX is the biggest Turing chip Nvidia has, and it is not much faster than the 2080 Ti.

I used 1080p as a metric because it is the most reliable measure of a GPU's performance. At higher resolutions, additional bottlenecks come into play and video memory can become a limitation. It makes sense that a mid-range card would fall behind at higher resolutions; after all, the 2080 Ti has a wider memory bus, more memory, and faster memory. There's a 100% chance AMD will provide more and faster memory on their higher-end cards, and it's very likely they will widen the bus as well. In effect, the 2080 Ti's larger lead at 1440p and 4K comes from it being designed for those resolutions, with improved GPU and non-GPU features to handle them, and that is something AMD would not find hard to address. I would go as far as to say that comparing the two cards' 4K results is misleading if your point is to show GPU efficiency, because the RX 5700 XT isn't really designed for that resolution and doesn't have the expensive memory the 2080 Ti has (among other things).

This is nothing new, though: even the 2070 Super loses significant performance at 4K relative to the 2080 Ti, just like the RX 5700 XT does. The reason is obvious: midrange cards perform best at the resolutions they were designed for.

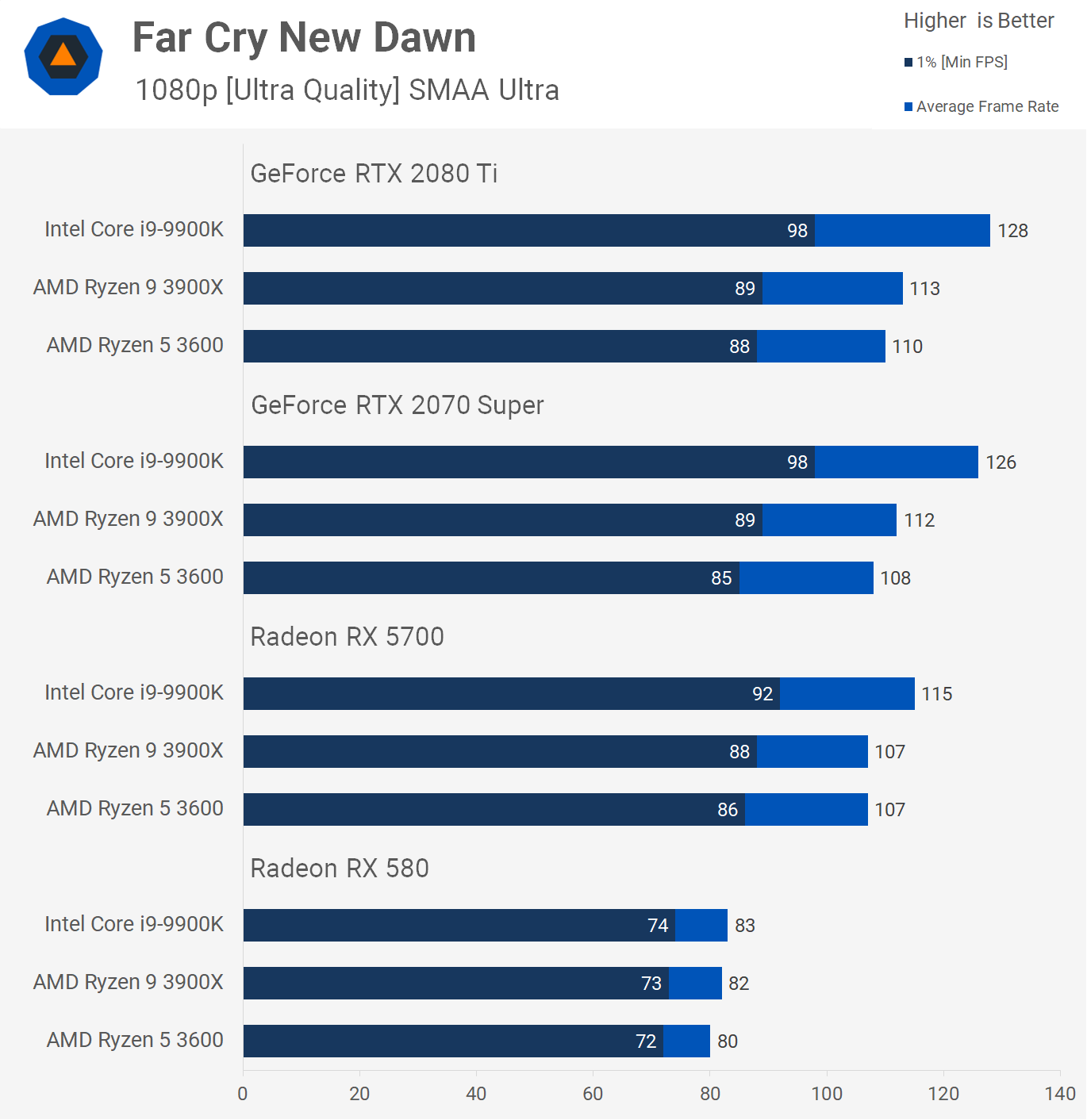

It is useless to benchmark a high-end GPU at 1080p because you run into a CPU bottleneck. That's basic knowledge, just like you don't benchmark a CPU at 4K because you will run into a GPU bottleneck.
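The bottleneck argument can be illustrated with a toy model: assume the frame rate you measure is roughly the minimum of what the CPU can prepare and what the GPU can render. The function name and every number below are hypothetical, made up only to show how two GPUs of different speed can look identical at 1080p and then separate at 1440p. Real games overlap CPU and GPU work imperfectly, so this is a simplification, not a benchmark.

```python
# Toy CPU-bottleneck model: a frame is only ready once both the CPU (game logic,
# draw calls) and the GPU (rendering) have done their part, so the slower of the
# two roughly sets the observed frame rate.

def observed_fps(cpu_fps_cap: float, gpu_fps: float) -> float:
    """Approximate measured frame rate, assuming the slower component sets the pace."""
    return min(cpu_fps_cap, gpu_fps)

# Hypothetical, made-up numbers for illustration only (NOT benchmark results).
# Assumption: the CPU's frame cap is roughly independent of resolution.
CPU_CAP = 150.0
gpu_limited_fps = {
    # GPU-limited frame rates drop as resolution rises.
    "1080p": {"fast GPU": 200.0, "slower GPU": 160.0},
    "1440p": {"fast GPU": 140.0, "slower GPU": 110.0},
}

for res, cards in gpu_limited_fps.items():
    fast = observed_fps(CPU_CAP, cards["fast GPU"])
    slow = observed_fps(CPU_CAP, cards["slower GPU"])
    print(f"{res}: fast={fast:.0f} fps, slower={slow:.0f} fps, gap={fast - slow:.0f} fps")
```

With these made-up inputs, both cards hit the same CPU cap at 1080p (no visible gap), while at 1440p the GPU becomes the limit and the difference between the cards shows up.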

Take this example from the 3900X review:

See how close the 2080 Ti and the 2070 Super are? How preposterous is that?

Now at 1440p, the 2 fps difference seen at 1080p becomes 15 fps (with the 9900K). You don't even want to pair a 2080 Ti with a 3900X at 1440p, lol (it's heavily bottlenecked).

The Titan RTX is already 10% faster than the 2080 Ti at the same TDP; coupled with 15.5 Gbps VRAM, 10-15% is not that far-fetched.
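For a sense of scale on the memory side, peak GDDR6 bandwidth is the per-pin data rate times the bus width, divided by 8 bits per byte. The stock figures below are the 2080 Ti (14 Gbps, 352-bit) and Titan RTX (14 Gbps, 384-bit). The 15.5 Gbps case assumes the 2080 Ti's 352-bit bus, which the post does not state. The helper name is hypothetical. Extra bandwidth does not translate one-to-one into frame rate, so treat this as a rough bound on the memory contribution only.

```python
# Peak memory bandwidth of a GDDR6 card:
#   bandwidth (GB/s) = per-pin data rate (Gb/s) * bus width (bits) / 8 (bits per byte)

def bandwidth_gb_s(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Theoretical peak bandwidth in GB/s (hypothetical helper for illustration)."""
    return data_rate_gbps * bus_width_bits / 8

stock_2080ti = bandwidth_gb_s(14.0, 352)  # RTX 2080 Ti: 14 Gb/s on a 352-bit bus
titan_rtx = bandwidth_gb_s(14.0, 384)     # Titan RTX: 14 Gb/s on a 384-bit bus
# Assumption: a hypothetical card keeping the 2080 Ti's 352-bit bus with 15.5 Gb/s memory.
faster_vram = bandwidth_gb_s(15.5, 352)

for name, bw in [
    ("2080 Ti", stock_2080ti),
    ("Titan RTX", titan_rtx),
    ("352-bit @ 15.5 Gb/s", faster_vram),
]:
    # Relative change in peak bandwidth compared with the stock 2080 Ti.
    print(f"{name}: {bw:.0f} GB/s ({bw / stock_2080ti - 1:+.1%} vs 2080 Ti)")
```

This works out to 616, 672, and 682 GB/s, i.e. roughly 9% and 11% more peak bandwidth than a stock 2080 Ti for the Titan RTX and the 15.5 Gbps case respectively.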