Rumor mill: We’ve been hearing rumors about the RTX 3080 Ti for quite a while now, most of which suggested it would arrive in January. But the latest word on the grapevine is that Nvidia is pushing that date back to February, allowing the company to focus on improving supply of its current cards. It also seems that concern over Big Navi's threat to the vanilla RTX 3080 has proved unfounded.

Last month brought rumors of an RTX 3080 Ti launching in January, and the card was one of several unreleased Ampere products spotted on HP’s OEM driver list last week. According to VideoCardz’s sources, that January launch has now been moved to February, a claim echoed by Igor Wallosek of Igor’s Lab.

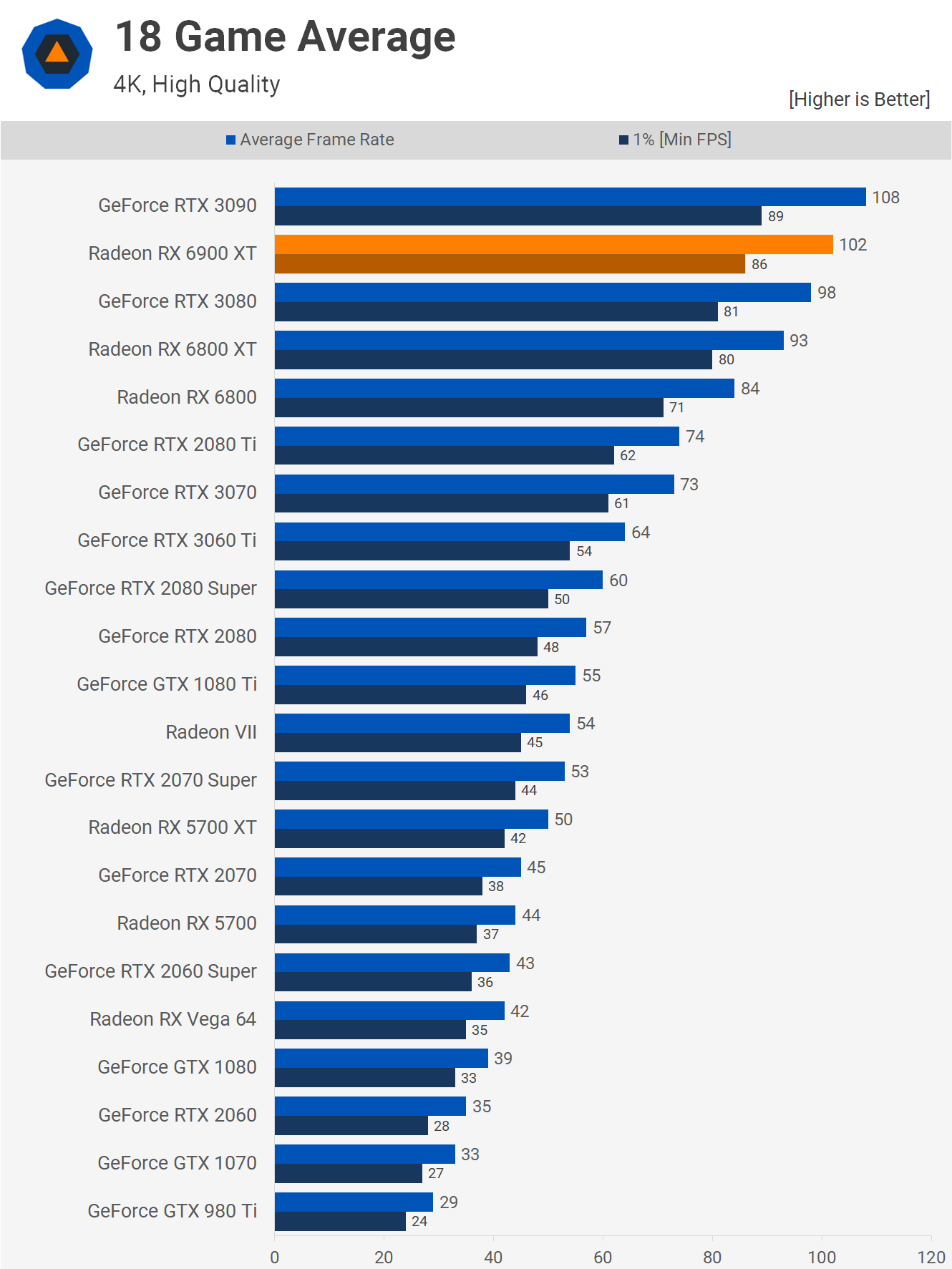

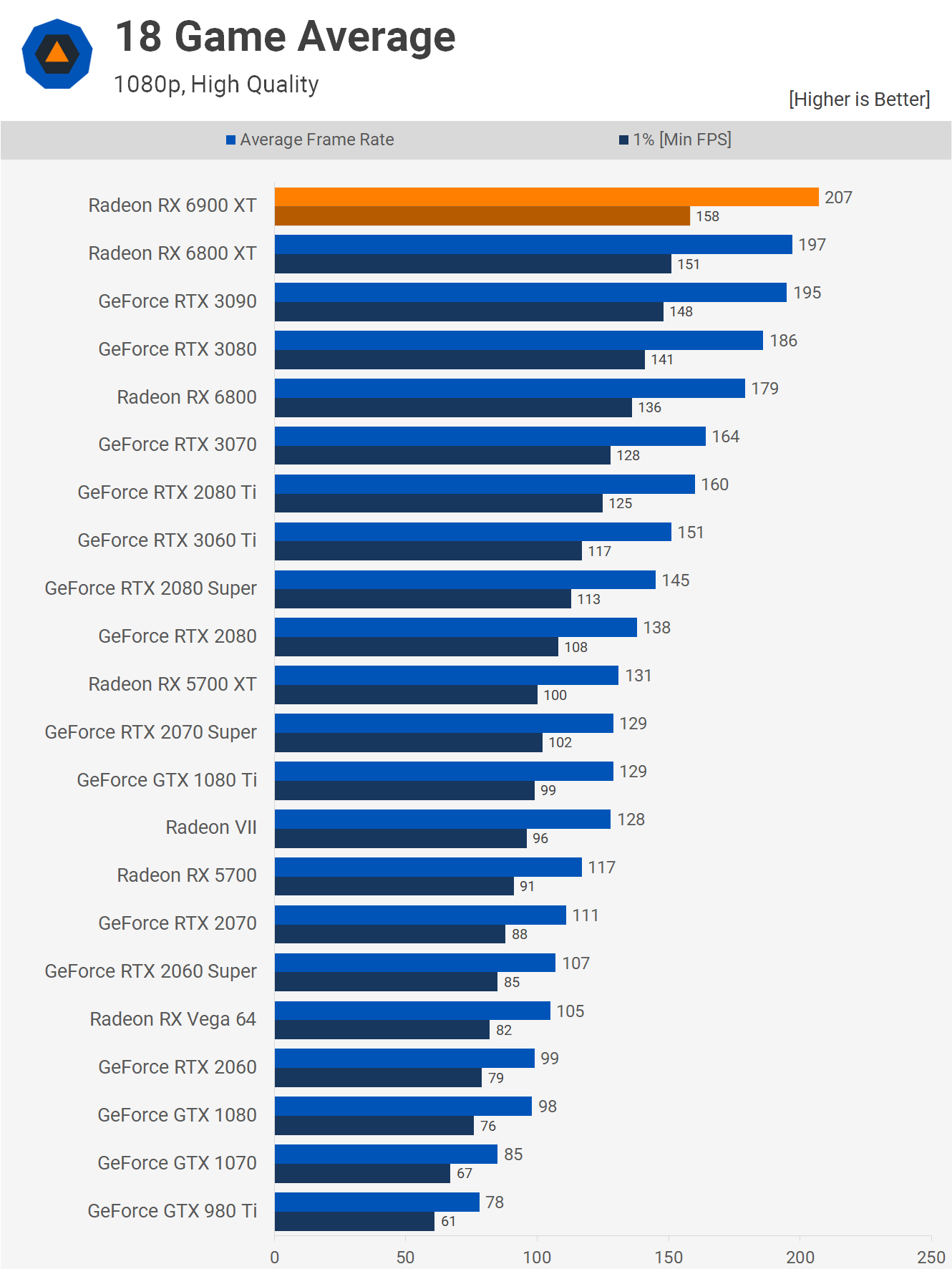

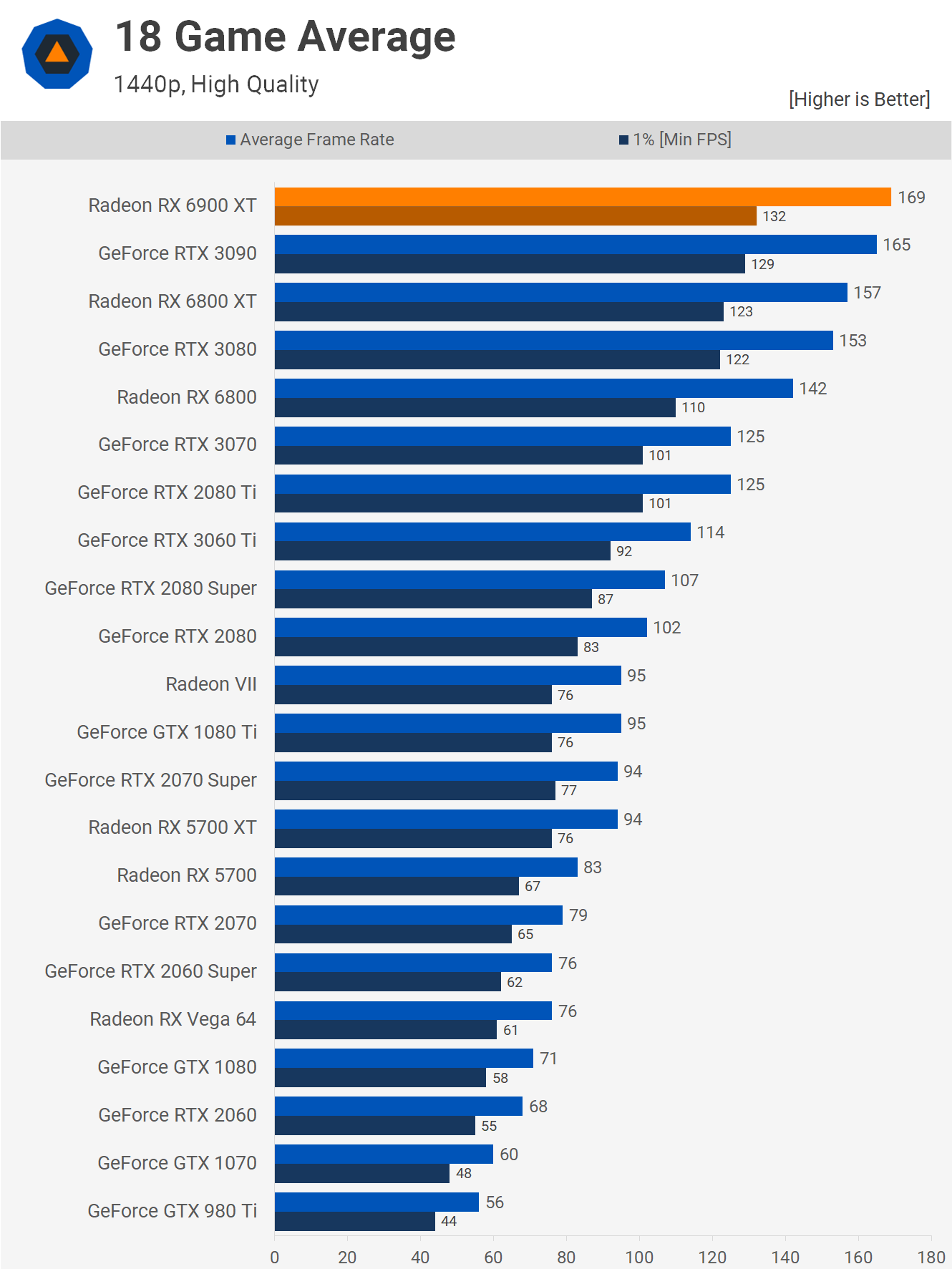

Part of the reasoning behind the postponement is that AMD’s Big Navi cards aren’t as much of a competitor to the RTX 3080 as Nvidia feared. In our testing across 18 games, the Radeon RX 6800 XT is only slightly ahead at 1440p, while the RTX 3080 is the better-performing card at 4K. Even the Radeon RX 6900 XT is only a few fps faster. As such, team green doesn’t feel the need to rush out an RTX 3080 Ti, which is said to double the standard version’s VRAM to 20GB of GDDR6X. For comparison, both the RX 6800 XT and 6900 XT have 16GB of GDDR6.

Another reason behind the move could be the availability issues plaguing the current crop of Ampere cards. Nvidia has said these shortages will continue until February 2021, the same month the RTX 3080 Ti is now rumored to arrive.

Additionally, both publications believe there will be two versions of the RTX 3060 (6GB and 12GB), both of which also appeared on HP’s list. The 12GB variant is due to arrive between January 11 and 14, around the same time as CES, while the 6GB card is said to land at the end of January or in early February. Interestingly, that 6GB RTX 3060 model is thought to have started life as the RTX 3050 Ti before Nvidia rebranded it.

https://www.techspot.com/news/87975-rumor-nvidia-moves-rtx-3080-ti-launch-february.html