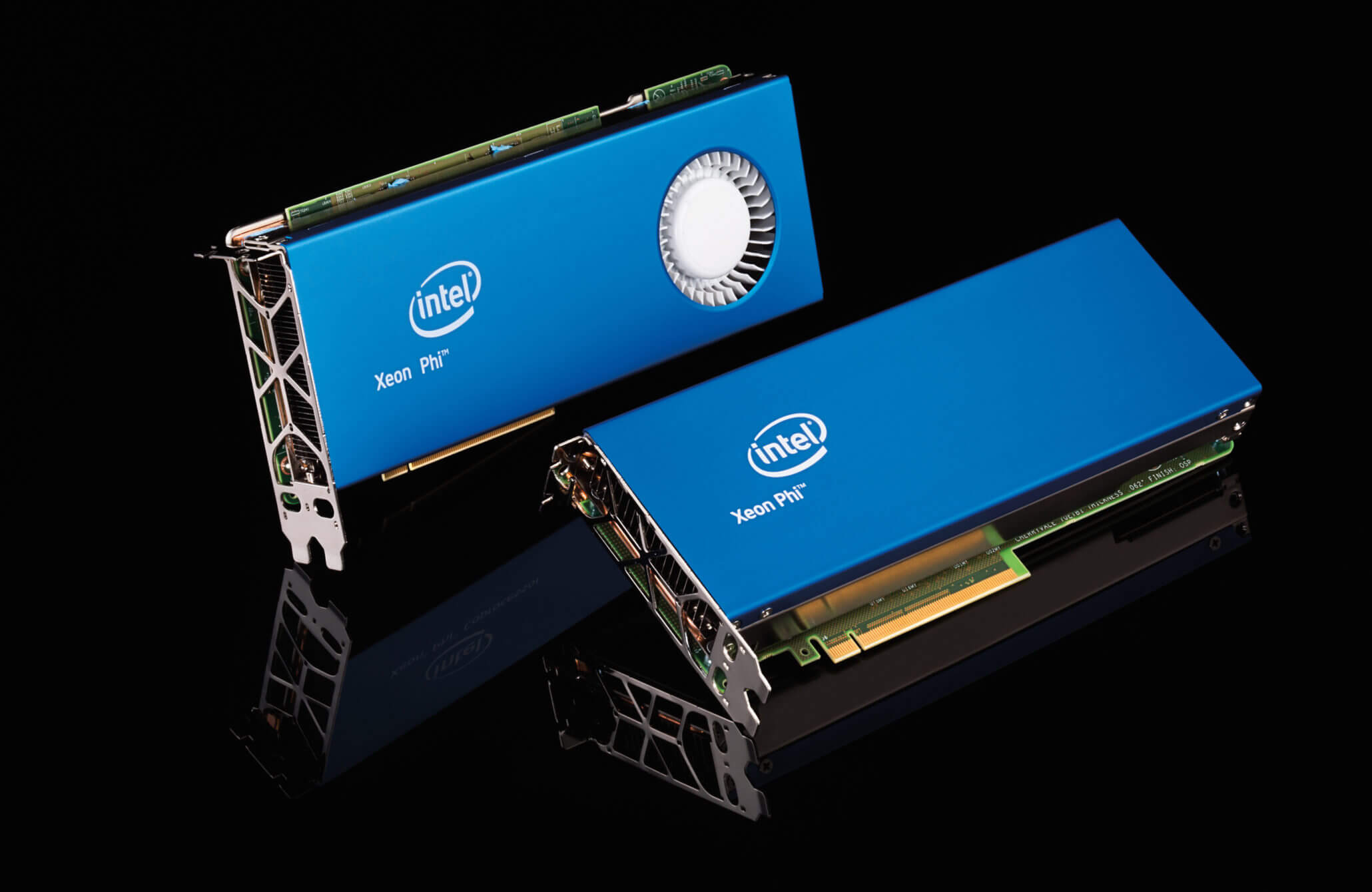

Why it matters: Intel hasn't been competitive in the GPU market for more than two decades, and the closest thing to a discrete GPU it currently has is the Xeon Phi compute card. But that's about to change.

Intel is expected to become a player in the consumer GPU market after hiring the head of AMD's Radeon Technologies Group last year. Raja Koduri's official title at Intel is Senior VP, and he oversees Intel's Core and Visual Computing Group. Nor is Koduri the only high-profile hire Intel has made lately.

Most recently, Intel confirmed that its first true dedicated graphics card in a long, long time will launch in 2020. But this is not the first time Intel has worked on its own graphics processor. About ten years ago, Intel was talking up Larrabee, its x86-derived GPGPU. On paper, it looked like a real threat to Nvidia and ATI (now AMD), but after several delays and complications, Intel put plans to market a consumer version of Larrabee on hold indefinitely.

Today we're finding out that one of the "fathers" of Larrabee, Tom Forsyth, is rejoining the company. Forsyth is a self-described graphics programmer and chip designer with recent stints at Oculus and Valve. He was on the original Larrabee team, and now he's back at Intel:

Personal job news - I start at Intel shortly as a chip architect in Raja Koduri's group. Not entirely sure what I'll be working on just yet. But do let me know if you have any suggestions.

— Tom Forsyth (@tom_forsyth) June 19, 2018


The Larrabee project has been the subject of much debate over the years and is often called a failure, but in Forsyth's own words it was actually a "pretty huge success." Larrabee never reached the market as a consumer product (you know, the kind we'd have loved to see compete with Radeon and GeForce), but out of that project Intel got Xeon Phi, an x86-based manycore processor used in supercomputers and high-end workstations.

Forsyth wrote in an older blog post that, in terms of engineering and focus, Larrabee was never primarily a graphics card. This time, however, Intel appears to be genuinely focused on a graphics product for consumers. According to the latest rumors, Intel has split its dedicated graphics project into two efforts: one focused on video streaming within data centers, and the other on gaming.

Whether Intel's ultimate target is gaming and consumer graphics or the other workloads modern GPUs handle, such as machine learning, AI, and self-driving cars, we'll find out soon enough.