Today I’m going to try to tackle a topic that seems to be causing a great deal of confusion, especially after our initial review of Ryzen and the gaming performance we observed when testing at 1080p. Results for Ryzen’s gaming performance vary widely, and a big part of that has to do with GPU bottlenecks.

Since our Ryzen review, we’ve been working on a gaming-specific follow-up that tests 16 games at 1080p and 1440p, and yes, I’m also testing with SMT both enabled and disabled. The results are very interesting, but this article is about GPU bottlenecks: when and where you are seeing them, and why.

So first, why is avoiding a GPU bottleneck so important for testing and understanding CPU performance?

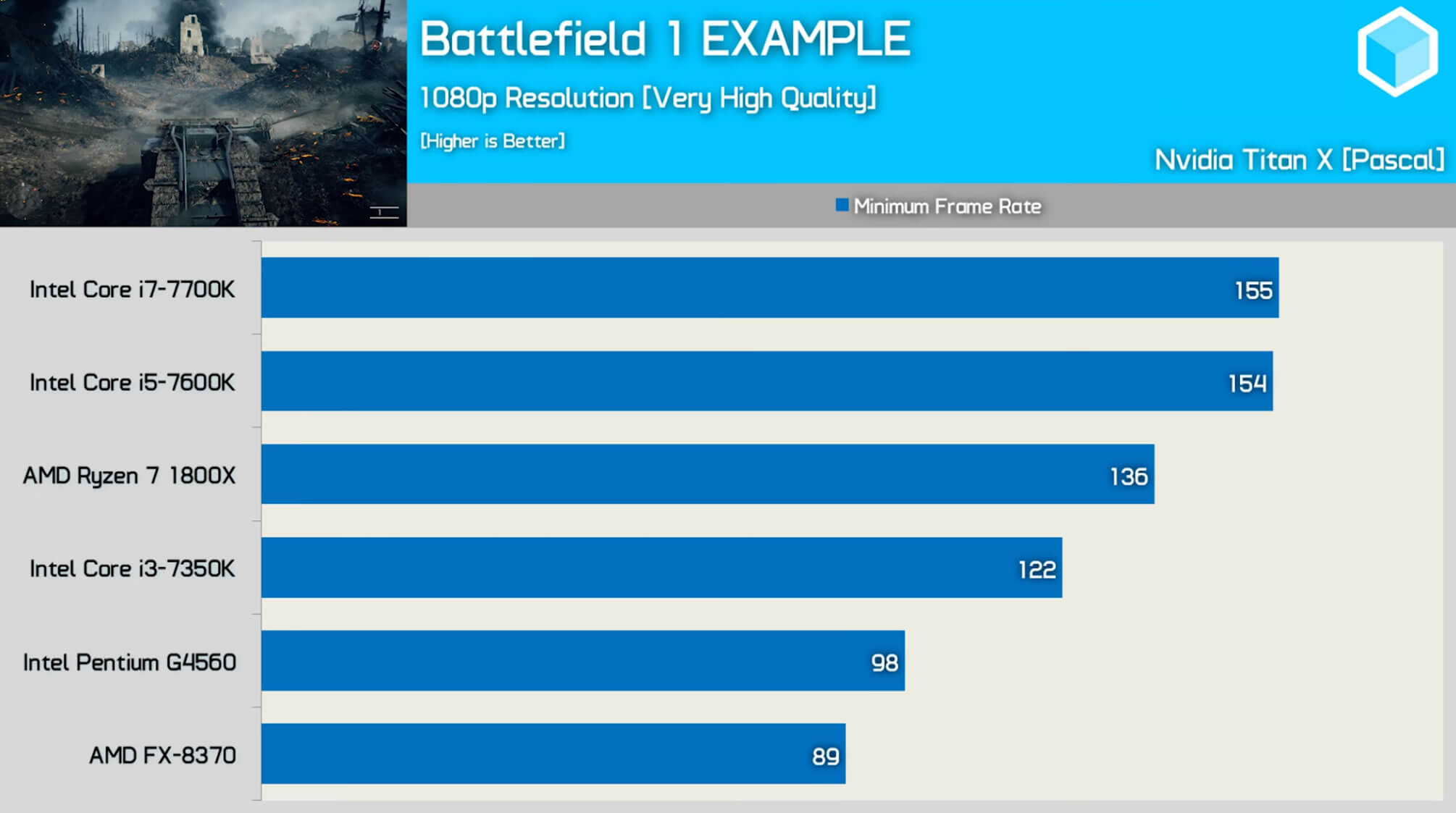

You could test at 4K like some reviewers have and show that Ryzen CPUs can indeed match high-end Skylake and Kaby Lake processors when paired with a GTX 1080 or even a Titan XP. It’s true: with a high-end GPU, the 1800X can match the 7700K at 4K in the latest games.

Does that mean the 1800X is as fast or possibly faster than the 7700K? No!

We know this isn’t true because when we test at a lower resolution, the 7700K is quite a bit faster in most cases. Now you might say "Steve, I don’t care about 1080p gaming, I have an ultrawide 1440p display so I only care how Ryzen performs here." That’s fine, but you’re sticking your head in the sand, and that can come back to bite you.


Before I explain why, let me just touch on why we test CPU gaming performance at 1080p and why 4K results, at least on their own, are completely useless. If you test at 1080p, technically you don’t need to show 4K results, whereas if you test at 4K you very much need to show 1080p performance.

Here’s a hypothetical example:

Let’s say the Titan XP is capable of rendering a minimum of 40 fps in Battlefield 1 at 4K. Now, if we benchmark half a dozen CPUs, including some Core i3, i5, i7 and Ryzen models, all of which allow the Titan XP to deliver its full 4K performance, does that mean they are all equal in terms of gaming performance?

It certainly appears so when looking exclusively at 4K performance, but all that really shows is that every one of those CPUs can keep the frame rate at or above 40 fps at all times.
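To make the "weakest link" idea concrete, here is a minimal sketch that assumes, as a simplification, that the frame rate you measure is just the lower of what the CPU can prepare and what the GPU can render. The CPU names and all fps figures below are hypothetical illustrative values, not benchmark results; only the 40 fps 4K limit comes from the example above.

```python
# A minimal sketch of the bottleneck model, assuming measured fps is simply
# min(CPU limit, GPU limit). Real games are messier (frame-time spikes,
# partial overlap of CPU and GPU work), but the min() captures the idea.

def observed_fps(cpu_fps_cap: float, gpu_fps_cap: float) -> float:
    """Frame rate delivered when the CPU can prepare cpu_fps_cap frames per
    second and the GPU can render gpu_fps_cap frames per second."""
    return min(cpu_fps_cap, gpu_fps_cap)


# Hypothetical CPU limits in fps (illustrative numbers, not measurements).
CPU_CAPS = {
    "Core i3": 70.0,
    "Core i5": 95.0,
    "Ryzen 7": 110.0,
    "Core i7": 130.0,
}

GPU_CAP_4K = 40.0      # the hypothetical Titan XP minimum at 4K from the text
GPU_CAP_1080P = 160.0  # hypothetical: same GPU rendering a quarter of the pixels


def results(gpu_fps_cap: float) -> dict[str, float]:
    """Measured fps for every CPU under a given GPU limit."""
    return {name: observed_fps(cap, gpu_fps_cap) for name, cap in CPU_CAPS.items()}


if __name__ == "__main__":
    print("4K:   ", results(GPU_CAP_4K))     # every CPU reports 40.0
    print("1080p:", results(GPU_CAP_1080P))  # the CPU differences reappear
```

At 4K every CPU hits the same 40 fps ceiling, so the chart looks flat; once the GPU limit is raised above the CPU limits, the real ordering shows up. That is the whole argument for testing CPUs where the GPU isn’t the limiting factor.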

By lowering the resolution and/or the game’s visual quality settings, which reduces GPU load, we start to expose the CPU as the weakest link. You could take this to extremes by testing at something like 720p with low quality settings, though that would be going a bit too far the other way. I’ve found that 1080p with high to ultra quality settings on a Pascal Titan X is a realistic configuration for measuring CPU gaming performance.
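As a rough guide to how much work resolution alone takes off the GPU, here is the number of pixels per frame relative to 1080p. GPU frame rates don’t scale perfectly with pixel count, so treat these ratios as an approximation of the extra load rather than a prediction of fps.

$$
\frac{3840 \times 2160}{1920 \times 1080} = \frac{8{,}294{,}400}{2{,}073{,}600} = 4,
\qquad
\frac{2560 \times 1440}{1920 \times 1080} = \frac{3{,}686{,}400}{2{,}073{,}600} \approx 1.78
$$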

So other than showing that there may actually be a difference between certain CPUs in games, why else should you ensure that your results aren't shaped by a GPU bottleneck? Or why is it a bad idea to stick your head in the sand by saying you only care about how they compare at ultrawide or 4K resolutions?

For the longest time, other respected tech reviewers and I claimed that all PC gamers need is a Core i5 processor, as you reach a point of diminishing returns with a Core i7 when gaming. This was true about a year ago, and there wasn’t much evidence to suggest otherwise. Of course, we often noted that things would change in the future; we just didn’t know when that change would happen.

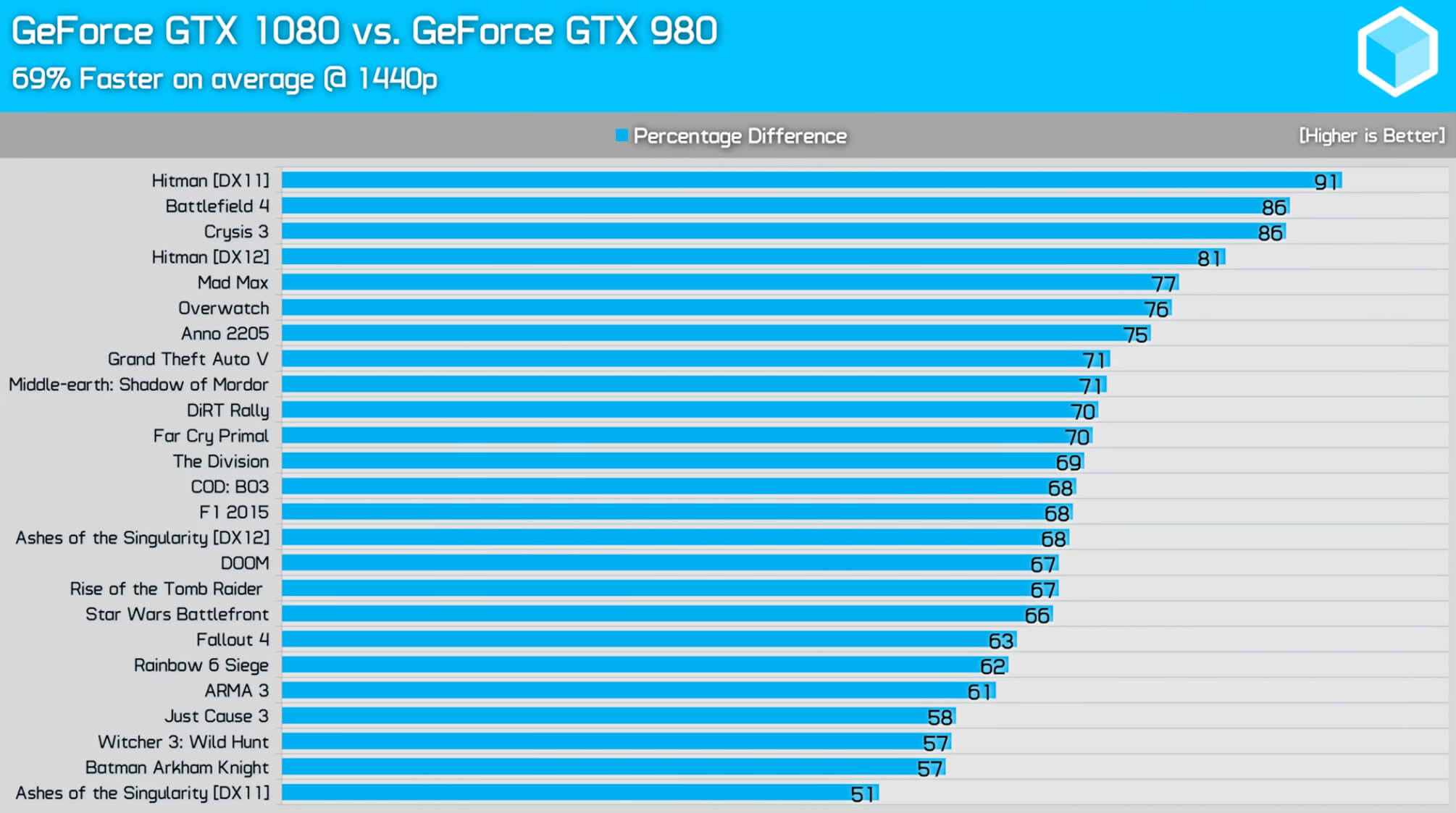

A year later, things have changed. A few games are indeed more demanding, but the biggest change has come from GPUs. The GTX 980 and Fury graphics cards were considered real weapons a year ago, but today they can be considered mid-range, with graphics cards such as the Titan XP and the soon-to-be-released GTX 1080 Ti delivering over twice as much performance in many cases.

If we compare the Core i5-7600K and Core i7-7700K in CPU-demanding games using the GTX 980 at 1080p, the eight-thread i7 isn’t much faster. Given those margins, I would say buy a Core i5, as the Core i7 isn’t worth the investment.

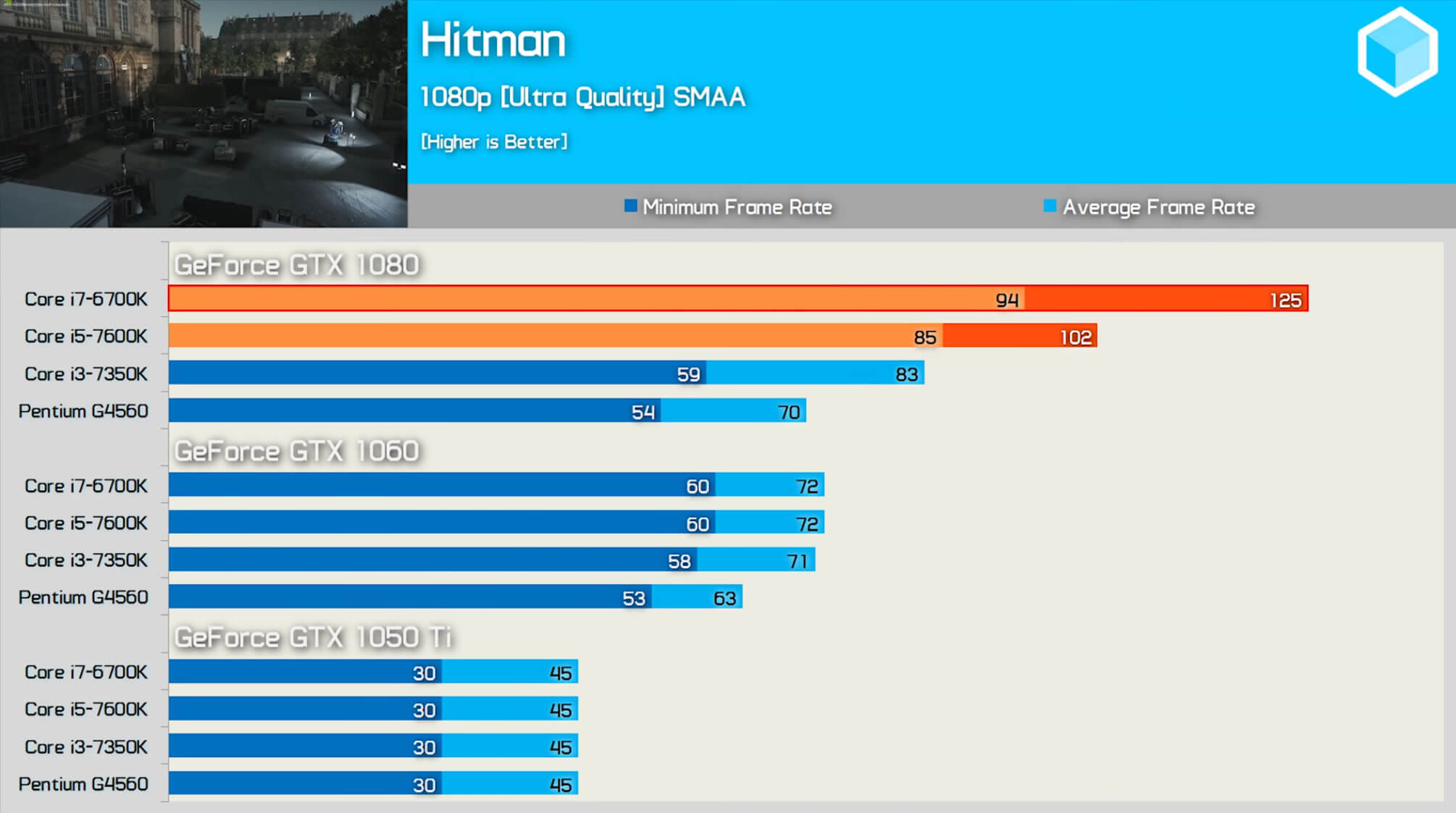

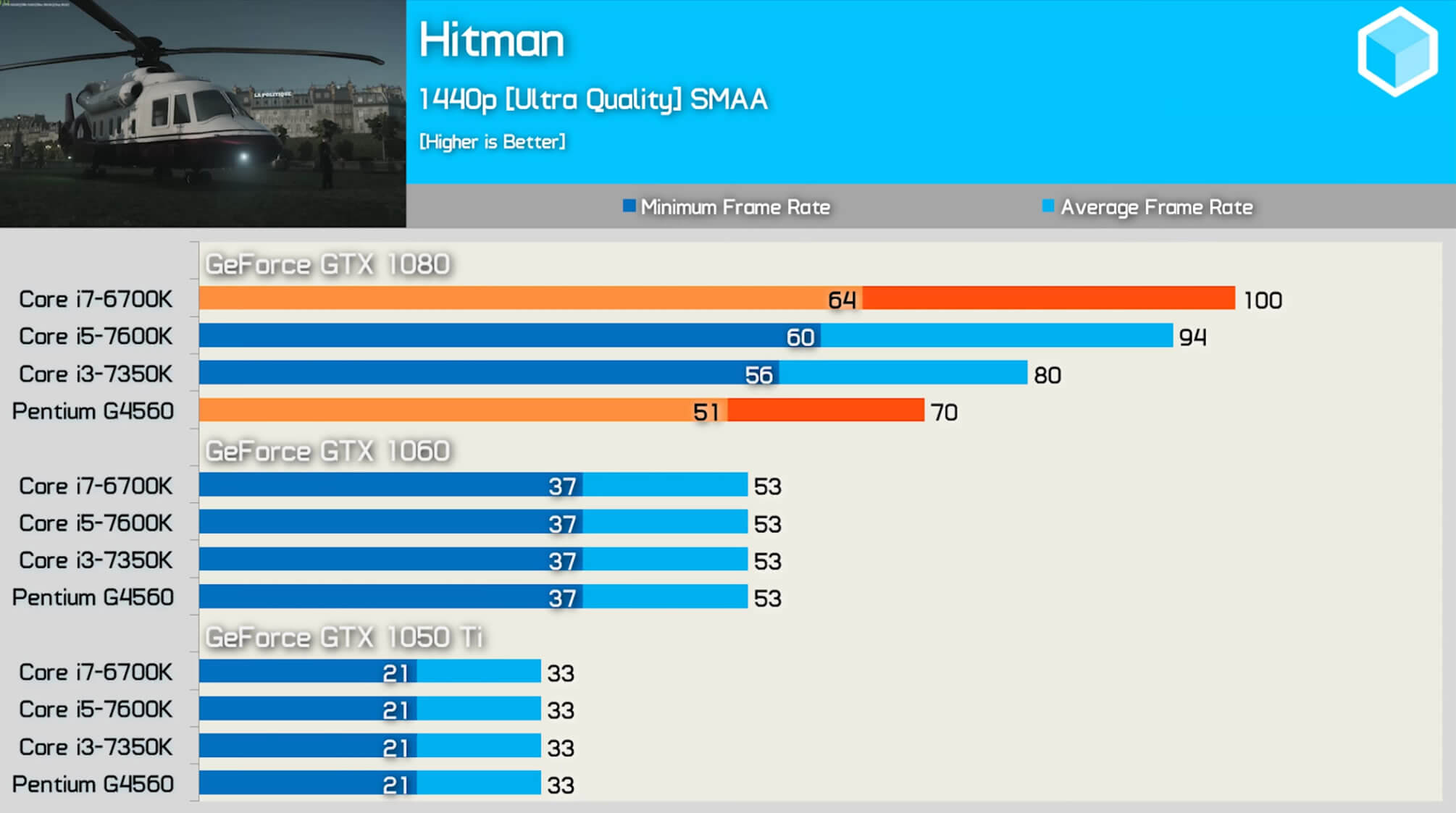

I recently compared the Pentium G4560, Core i3-7350K, Core i5-7600K and Core i7-6700K using the GTX 1050 Ti, GTX 1060 and GTX 1080. In a game such as Hitman, the i5 and i7 are on par when tested with the GTX 1060, which is roughly equivalent to the GTX 980 in performance. Look at those results alone and the Core i7 is clearly a bad investment. However, when we test with the GTX 1080, the Core i7 delivers over 20% more performance.

Increasing the resolution to 1440p, we see that with the GTX 1060 the dual-core Pentium delivers the same performance as the i7-6700K. The 6700K is obviously a much more powerful CPU, but the GPU bottleneck simply hides that fact. With the more powerful GTX 1080, however, the 6700K is 43% faster than the G4560.

At 1440p, the 6700K is just 6% faster than the 7600K, whereas it was 23% faster at 1080p. I found similar margins in games such as Mafia III, Overwatch, Total War: Warhammer, and many others.
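Percentages like these are relative gains, and a GPU limit compresses them. The sketch below assumes, as a simplification, that each measured result is the lower of the CPU limit and the GPU limit, then shows how a fixed gap between two CPUs shrinks as the GPU limit drops. The CPU and GPU limits are made-up values chosen so the uncapped gap is 23%; they are not figures from the tests above, and `measured_margin` is a hypothetical helper for illustration.

```python
def percent_faster(fps_a: float, fps_b: float) -> float:
    """How much faster result A is than result B, in percent."""
    return (fps_a / fps_b - 1.0) * 100.0


def measured_margin(cpu_a: float, cpu_b: float, gpu_cap: float) -> float:
    """Margin a benchmark would report between two CPUs, assuming each
    result is min(CPU limit, GPU limit)."""
    return percent_faster(min(cpu_a, gpu_cap), min(cpu_b, gpu_cap))


if __name__ == "__main__":
    # Hypothetical CPU limits: CPU A can drive 123 fps, CPU B 100 fps (a 23% gap).
    fast_cpu, slow_cpu = 123.0, 100.0
    # Hypothetical GPU limits, from a slower card to a faster one.
    for gpu_cap in (90.0, 110.0, 150.0):
        margin = measured_margin(fast_cpu, slow_cpu, gpu_cap)
        print(f"GPU limit {gpu_cap:.0f} fps -> CPU A looks {margin:.1f}% faster")
```

With the slowest GPU both CPUs report 90 fps and the gap disappears entirely; as the GPU limit rises, the reported margin climbs back toward the real 23%. The same thing happens when you swap a GTX 1060 for a GTX 1080, or drop from 1440p to 1080p.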

Keep those results in mind: the Titan XP and the soon-to-be-released GTX 1080 Ti are much faster again than the GTX 1080. So, based on the Ryzen gaming performance you saw in my review and in other well-researched reviews from outlets that tested correctly, such as Gamers Nexus and Tom’s Hardware, we can assume a few things:

First, if future games can’t make better use of Ryzen processors than current games do (in other words, if optimization doesn’t happen), then the misleading 4K results become an even bigger issue. In a couple of years, when the GTX 1080 Ti or AMD’s Vega GPUs go from high-end to mid-range, how will Ryzen look against the current Skylake and Kaby Lake processors? Presumably, 4K performance would start to look like what we are seeing at 1080p today.

The alternative is that games do get optimized for Ryzen, which is of course my hope, in which case we’ll start to see the 8-core, 16-thread AMD processors laying waste to Intel’s 4-core, 8-thread Core i7 Kaby Lake and Skylake CPUs.

The obvious problem is that we don’t know how this will play out. I remember AMD fans giving me a hard time back in 2011 when I said the FX-8150 didn’t deliver, especially in games. The argument was that games back then used one or two cores, and by the time they were using four or more, the FX series would prevail. Well, there’s no need to dredge that one up all over again, but we know how it played out. That said, I have much more hope for Ryzen, and I honestly do believe there is more performance still to come. I said the opposite about the FX processors many years ago, so that’s something.

I hope this helps those of you who were confused by the variance in results, and that you now have a better understanding of where and why GPU bottlenecks occur and, more importantly, why they should be avoided when showing CPU performance.