The big picture: The UK military is moving forward with plans to develop and deploy several thousand combat robots, some of which might be autonomous. So far, militaries worldwide have avoided using fully autonomous technologies in combat situations. Even semi-autonomous drones have a pilot who is always at the controls, so humans, not AI, make the final strike decisions.

British Army leaders think that by 2030 up to a quarter of the UK's ground troops could be robots. That is as many as 30,000 autonomous and remote-controlled fighting machines deployed within about a decade.

"I suspect we could have an army of 120,000, of which 30,000 might be robots, who knows?" General Sir Nick Carter told The Guardian in an interview.

Carter was careful to note that military leadership does not have any concrete goals in mind yet and that his estimates are only his opinion.

The main hurdle the Army currently faces is funding. Research into combat robots stalled in October when a cross-governmental spending initiative was postponed. Gen. Carter said that talks on the funding issue are now proceeding and are "going on in a very constructive way."

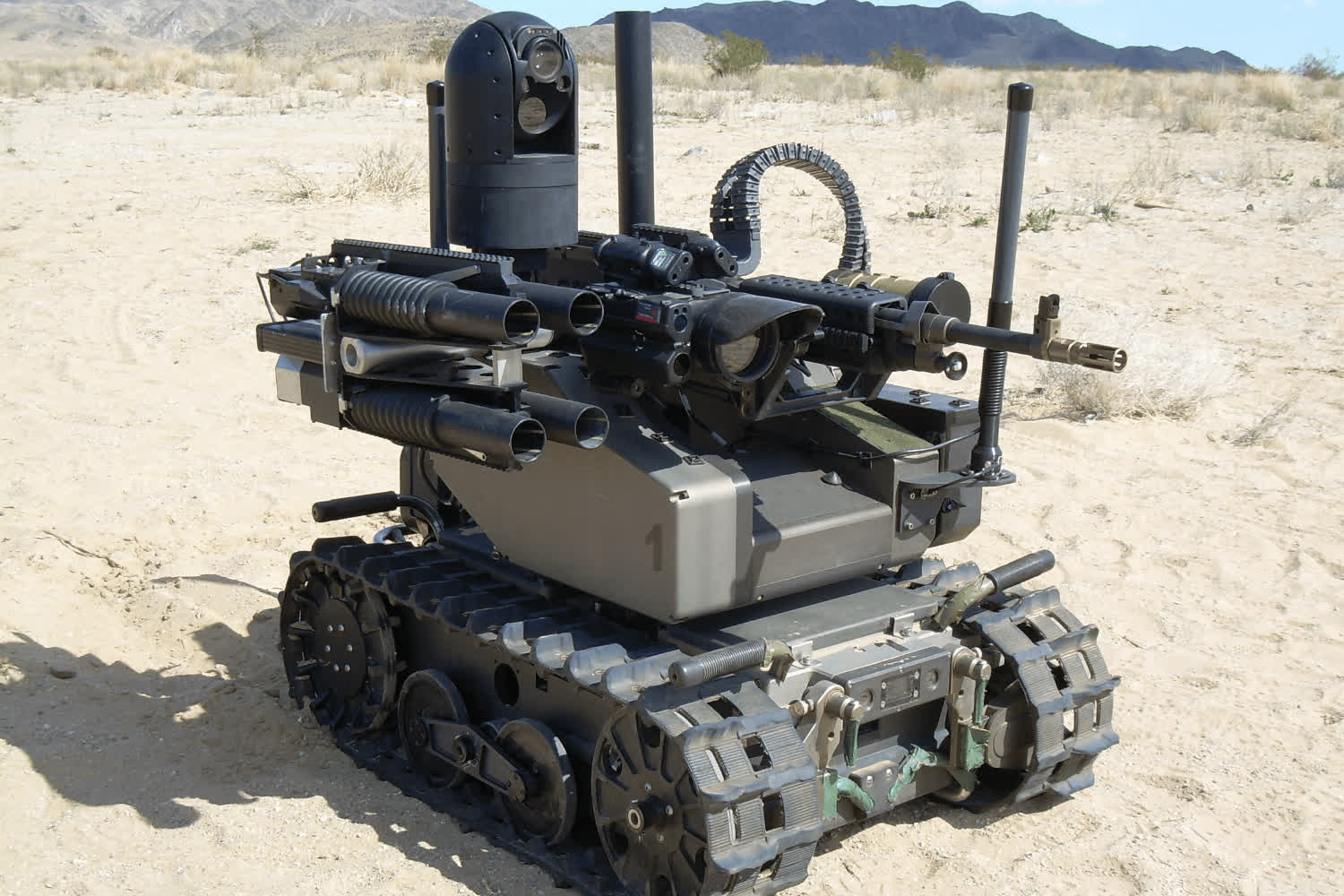

The US military's Modular Advanced Armed Robotic System (MAARS), a remote-controlled RSTA (reconnaissance, surveillance, and target acquisition) drone.

"Clearly, from our perspective, we are going to argue for something like that [a multi-year budget] because we need long-term investment because long-term investment gives us the opportunity to have confidence in modernization," Carter explained.

Deploying combat robots programmed to make split-second, potentially lethal decisions raises ethical red flags, but deployment and development are two different things. The UK military and others have been working on small autonomous or remote-controlled weapons for years.

One example is the UK's i9 drone, a small, human-operated six-rotor craft equipped with two shotguns. Its primary function is to take point when troops storm a building in urban combat. Sending the robot in first to draw fire and dispatch enemy combatants before they can fire on human troops could save soldiers' lives.

Currently, fully autonomous combat robots are not much more than a notion. While prototypes exist, there are no immediate plans to deploy them. World leaders would have to agree on when and how militaries could use these war machines. The last thing anybody wants is rogue AI running amok and killing innocents.

Image credit: Forces

https://www.techspot.com/news/87523-uk-army-could-25-percent-robotic-2030-british.html