In brief: Researchers have been studying a way to reconstruct areas surrounding a drone using sound waves. This process, known as echolocation, is used by bats and other animals to orient themselves in their environment and detect objects.

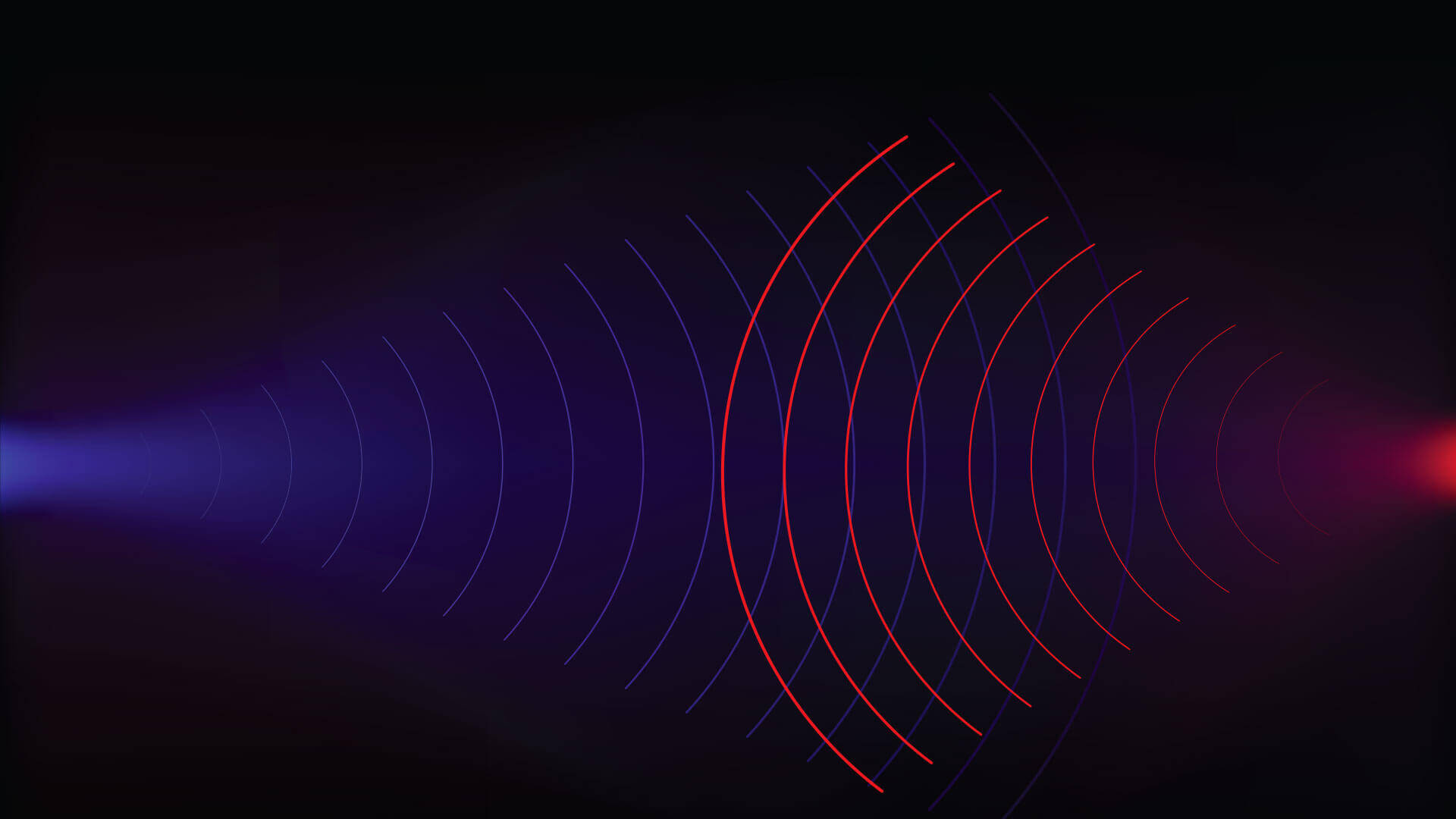

Mathematicians from Purdue and the Technical University of Munich (TUM) have been working on a signal processing method that uses four microphones and a speaker attached to a drone to “sense” the areas around the craft. The speaker emits a sound, and mics attached to the four corners of the drone pick up the echo. Using algebra and geometry, it is possible to calculate the distance to an object from the time delay of its echo.
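To make the delay-to-distance idea concrete, here is a minimal sketch in Python. It is not the researchers' algorithm: the speed of sound (343 m/s, roughly dry air at 20 °C) is an assumed value, the function names are hypothetical, and the second function additionally assumes the speaker and microphone sit at the same point, which is a simplification of the drone setup described above.

```python
# Assumed value: speed of sound in dry air at about 20 degrees Celsius.
SPEED_OF_SOUND_M_S = 343.0


def echo_path_length(delay_s: float, speed_m_s: float = SPEED_OF_SOUND_M_S) -> float:
    """Total distance the sound travelled (speaker -> wall -> microphone)."""
    if delay_s < 0:
        raise ValueError("echo delay cannot be negative")
    return speed_m_s * delay_s


def wall_distance_colocated(delay_s: float, speed_m_s: float = SPEED_OF_SOUND_M_S) -> float:
    """Distance to a wall under the simplifying assumption that the speaker
    and the microphone are at the same point, so the sound covers the
    distance twice (out and back)."""
    return echo_path_length(delay_s, speed_m_s) / 2.0


if __name__ == "__main__":
    delay = 0.010  # hypothetical echo delay of 10 milliseconds
    print(f"path length: {echo_path_length(delay):.3f} m")
    print(f"wall distance (co-located case): {wall_distance_colocated(delay):.3f} m")
```

With microphones at the corners of the drone rather than at the speaker, each mic measures a slightly different path length for the same wall, which is what makes the geometry worth solving.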

The trick is figuring out which walls or objects correspond to each calculated distance, a process known as echosorting. It is a vital part of the signal processing because, without it, the algorithms could produce “ghost walls.” For echosorting to work, the four microphones must not all lie in the same plane.
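The non-planar requirement is easy to check for a given mic layout. The sketch below uses a standard geometric test, not anything taken from the paper: four points lie in one plane exactly when the three edge vectors from the first point span zero volume (a zero 3x3 determinant). The function names, the tolerance, and the example coordinates are all hypothetical.

```python
def signed_volume(p0, p1, p2, p3):
    """Determinant of the edge vectors p1-p0, p2-p0, p3-p0.

    Its absolute value is six times the volume of the tetrahedron
    spanned by the four points; it is zero when they are coplanar.
    """
    ax, ay, az = (p1[i] - p0[i] for i in range(3))
    bx, by, bz = (p2[i] - p0[i] for i in range(3))
    cx, cy, cz = (p3[i] - p0[i] for i in range(3))
    return (
        ax * (by * cz - bz * cy)
        - ay * (bx * cz - bz * cx)
        + az * (bx * cy - by * cx)
    )


def is_non_planar(mics, tol=1e-9):
    """True if the four microphone positions do not share a plane.

    `tol` is an assumed numerical tolerance; a real setup would pick it
    based on how precisely the mic positions are known.
    """
    if len(mics) != 4:
        raise ValueError("expected exactly four microphone positions")
    return abs(signed_volume(*mics)) > tol


if __name__ == "__main__":
    # Hypothetical layouts, in metres.
    flat = [(0, 0, 0), (1, 0, 0), (0, 1, 0), (1, 1, 0)]
    raised = [(0, 0, 0), (1, 0, 0), (0, 1, 0), (1, 1, 0.2)]
    print(is_non_planar(flat))    # all four mics in the z = 0 plane
    print(is_non_planar(raised))  # one mic lifted out of the plane
```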

Mireille Boutin, an associate professor of mathematics and of electrical and computer engineering at Purdue University, says that the signal processing algorithms have applications for people, underwater vehicles, and cars.

So far, Boutin and TUM Professor of Algorithmic Algebra Gregor Kemper have successfully reconstructed the wall configurations in different rooms and published their research in the SIAM Journal on Applied Algebra and Geometry. They will continue studying different scenarios, such as “when the movement of the drone is restricted, or when the drone listens to the echoes of consecutive sounds as it is moving.”

While they have not discussed commercializing their algorithms, they see practical applications that could be both beneficial and profitable. For instance, a compact device carried in a blind person's pocket could warn them of walls or other obstacles in the way.

https://www.techspot.com/news/84346-using-four-mics-speaker-drones-can-echolocate-like.html