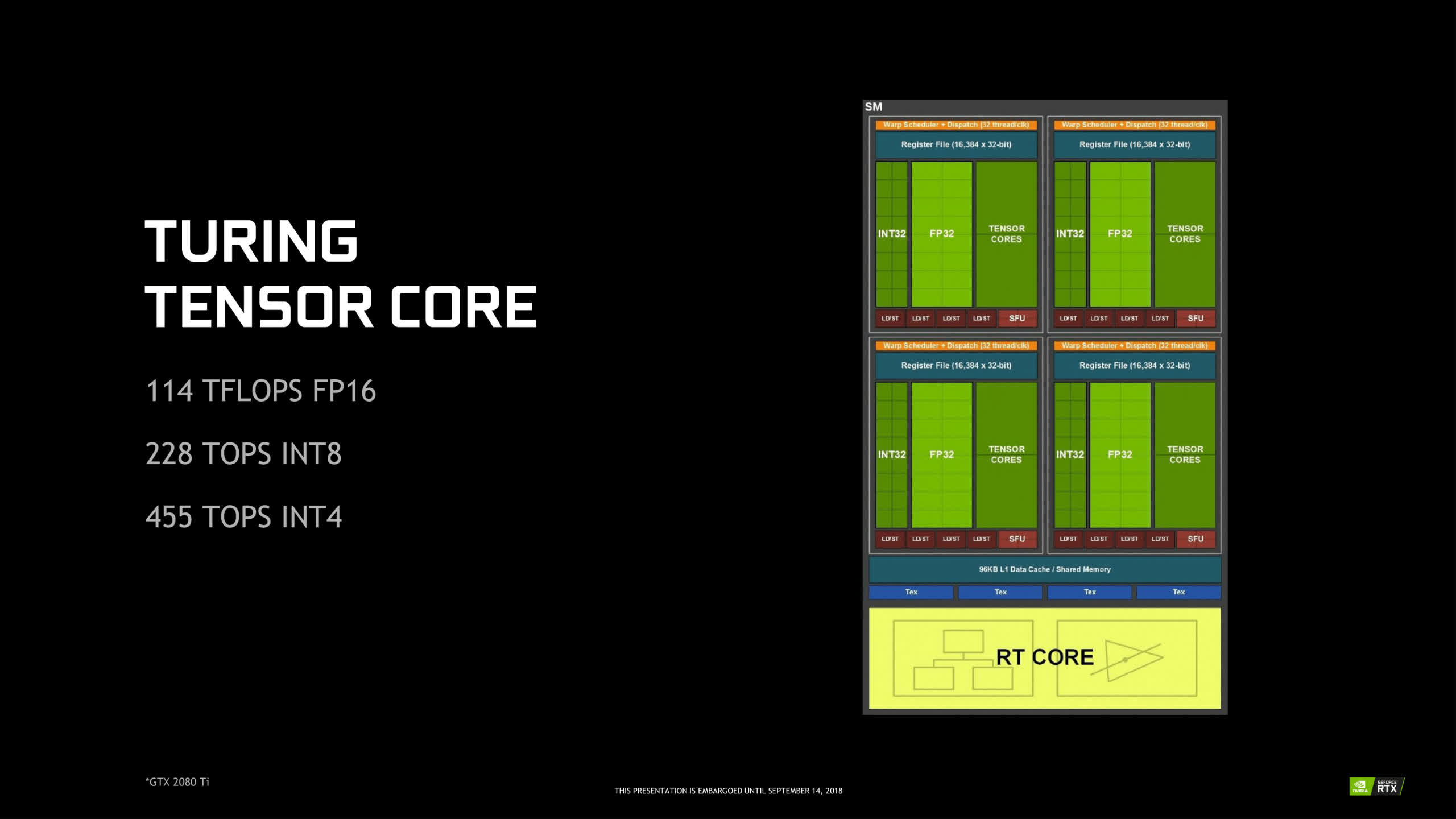

Nvidia first brought Tensor Cores to consumer graphics cards with the Turing architecture and its family of GeForce RTX graphics cards. One feature that takes advantage of those cores is DLSS, which mainly serves to alleviate the performance drop from enabling ray tracing without losing much in terms of image quality.

Not to be confused with DLSS, Nvidia's latest addition to their arsenal of AI-assisted features is called DLDSR, which stands for Deep Learning Dynamic Super Resolution.

What's DLDSR?

DLDSR is a method of supersampling aiming to improve over the older DSR (Dynamic Super Resolution). It renders supported games at a higher resolution than your monitor's native resolution, then shrinks the image to fit within the monitor's native output. This results in superior image quality with less flickering and better anti-aliasing.

DLSS vs DLDSR: Upscale vs Downscale

Both DLSS and DLDSR use the Tensor Cores in RTX GPUs, but each one tries to achieve something different. DLSS renders at a lower resolution than native in order to gain performance, then upscales the image to the monitor's native resolution, with the Tensor Cores helping to preserve much, if not all, of the native image quality.

DLDSR does the opposite and downscales a higher resolution image, so it is best used when you have excess GPU power and want to improve on the eye candy.

This may be the case in older games, like StarCraft, which are not very demanding and more often than not have mediocre anti-aliasing solutions. Another usage scenario is games that are not the latest AAA releases but that you would not call old either, and that combine great performance on a wide variety of GPUs with nice visuals, like Arkane's PREY or Capcom's Resident Evil Remake series. Games like these are ideal because their slow-paced nature means that you pay attention to every detail, from texture quality to lighting and shadows, so DLDSR can help these graphical features stand out even more. You will most likely want to use it on a 1080p monitor, as with anything higher than that the render resolution ends up being extremely high and requires massive amounts of GPU power as a result.

Also, it's best used with single-player games, where frame rate is not as important as in multiplayer ones. You can, for example, aim for 60 fps and use DLDSR to improve image quality as much as your system resources allow. In general, depending on your GPU and monitor combo, whenever there is substantial headroom above 60 fps, which is considered the baseline for smooth gaming, you can take advantage of that excess GPU power and put it into DLDSR. Also note that DLDSR works in every game, whereas DLSS requires a game-specific implementation from the developers.

Where Does Deep Learning Come In?

DSR and DLDSR are essentially the same up to the point where they render at a higher resolution, but the similarities end there. While DSR applies "a high-quality (Gaussian) filter", as Nvidia puts it, to make the higher resolution image fit within the monitor, DLDSR uses some sort of machine learning filter for this task. This way, DLDSR manages to address a fundamental issue with DSR, which is uneven scaling. To better explain this, we first need to take a look at how to enable DSR and DLDSR.

How Do I Enable It?

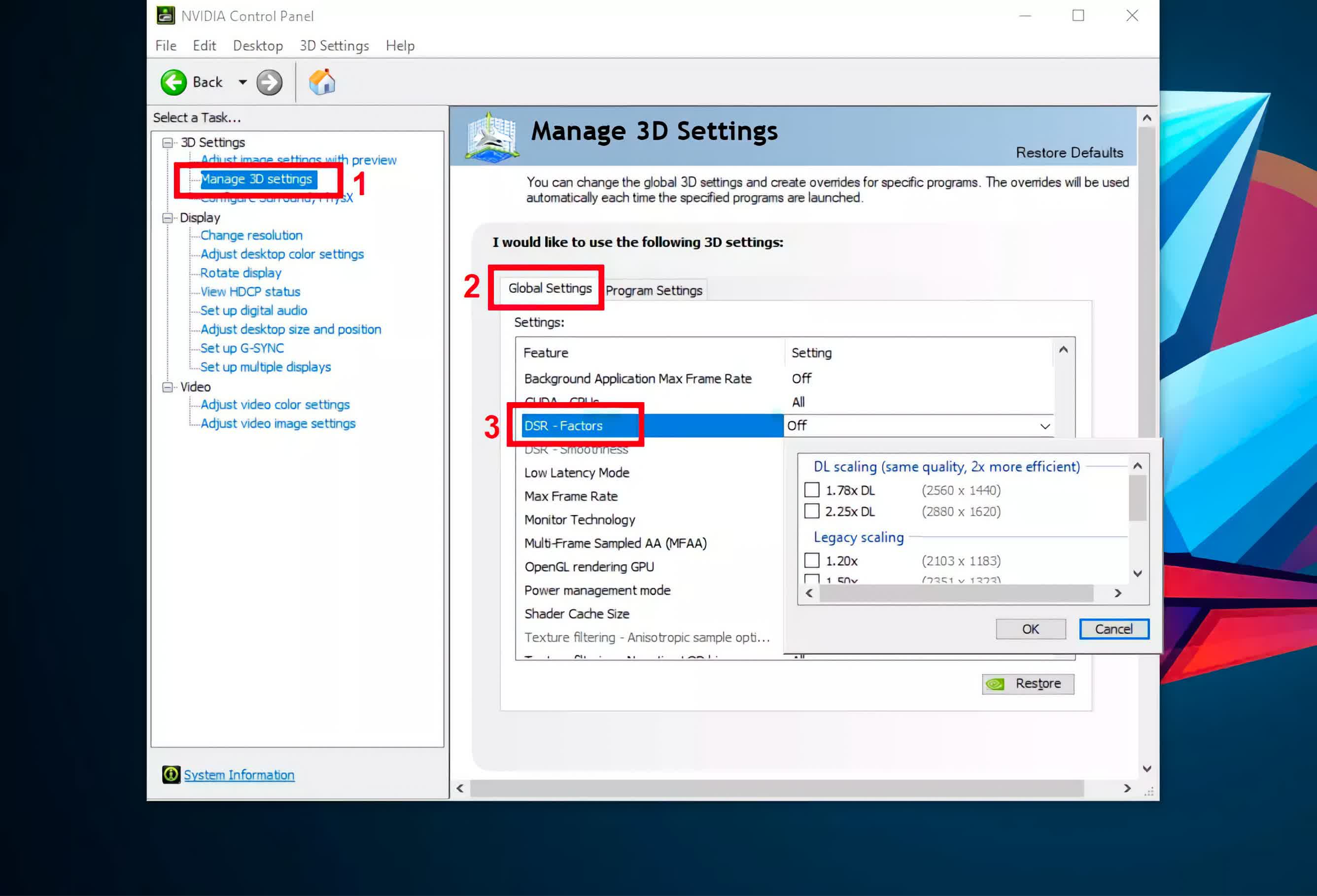

DSR can be used with Nvidia GTX 700 series or newer, while DLDSR requires a GeForce RTX GPU. Open the Nvidia Control Panel and follow the steps shown in the screenshot.

Here you will find various DSR Factors that are essentially multipliers of your monitor's native resolution. Each one represents a new resolution which you then need to apply in the game settings.

The first two factors use Deep Learning and are only available for RTX graphics cards, with the rest using the older DSR going up to 4x. Keep in mind that you can select either a DL scaling factor or the corresponding one from legacy scaling, but not both.
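To make the factor arithmetic concrete, here is a minimal Python sketch. It assumes the factor multiplies the total pixel count, so each axis scales by the square root of the factor. This matches the figures used in this article (4x on a 1080p monitor is 4K, 2.25x is 1620p). The function name is hypothetical, and the sketch treats the label 1.78x as shorthand for 16/9.

```python
import math

def dsr_render_resolution(native_width, native_height, factor):
    """Return the (width, height) rendered for a DSR/DLDSR factor.

    Assumption: the factor multiplies the total pixel count, so each
    axis is scaled by the square root of the factor.
    """
    axis_scale = math.sqrt(factor)
    return round(native_width * axis_scale), round(native_height * axis_scale)

# Assumption: the "1.78x" label in the control panel is a rounded 16/9.
factors = {"1.78x": 16 / 9, "2.25x": 9 / 4, "4.00x": 4.0}

for label, factor in factors.items():
    width, height = dsr_render_resolution(1920, 1080, factor)
    print(f"{label}: {width}x{height}")
```

The resulting resolution is the one you would then pick in the game's settings menu.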

Uneven Scaling

Now, here's the catch. DSR works best when the rendering is done at a resolution that is an integer multiple of the monitor's native resolution. When using a 1080p monitor, the 4x DSR factor is ideal, as each pixel of the 1920x1080 grid is produced from a set of 4 pixels of the 4K DSR rendered image. But this, as you can imagine, requires a GPU powerful enough to render 4K at a playable framerate in the first place. Failing to use an integer factor for DSR causes image artifacts and poor edge anti-aliasing.
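The difference between even and uneven scaling can be illustrated with a small Python sketch. This is a simplified box-coverage model along one axis, not Nvidia's actual Gaussian filter, and the function name is hypothetical. It lists which source pixels fall under each output pixel and how much of each one is covered.

```python
import math

def source_coverage(output_index, axis_scale):
    """Return {source_pixel: covered_fraction} for one output pixel.

    Simplified model (an assumption, not Nvidia's filter): an output
    pixel covers the span [i * s, (i + 1) * s) of the source row.
    """
    start = output_index * axis_scale
    end = start + axis_scale
    coverage = {}
    for src in range(math.floor(start), math.ceil(end)):
        # Length of the overlap between the span and source pixel `src`.
        overlap = min(end, src + 1) - max(start, src)
        if overlap > 0:
            coverage[src] = overlap
    return coverage

# 4x DSR is a 2.0 scale per axis, 2.25x is a 1.5 scale per axis.
for scale in (2.0, 1.5):
    print(f"axis scale {scale}:")
    for out in range(3):
        print(f"  output pixel {out} <- {source_coverage(out, scale)}")
```

With a scale of 2.0, every output pixel draws on two whole source pixels. With 1.5, source pixels get split between neighbouring output pixels, which is where the uneven results come from.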

In order to counter this Nvidia have implemented a DSR-Smoothness slider (you can find it underneath the DSR-Factors in the image above) to control the intensity of the Gaussian filter that is used. Increasing the amount of smoothness makes those image artifacts less noticeable at the cost of a blurrier image overall.

On the other hand, the machine learning filter used by DLDSR does not suffer from the uneven scaling issue when using non-integer factors, like 1.78x and 2.25x, and it also seems to fare better with regard to anti-aliasing.

Unfortunately, it is not possible to showcase the final output of this with screenshots as every method we tried (Nvidia Ansel, in-game photographer mode, or just plain old Windows print screen) captures the image before the filtering process is applied. So every screenshot would look the same regardless of DSR or DLDSR. Feel free to try it for yourself if you wish to.

We can tell you however that DLDSR seems to be the better option already, but there is a little caveat.

The ML filter produces an over-sharpened image, which may not be a big deal to everyone, but if you want, you can play around with the smoothness slider to tone down the intensity of the sharpening.

Performance Comparison and Visuals

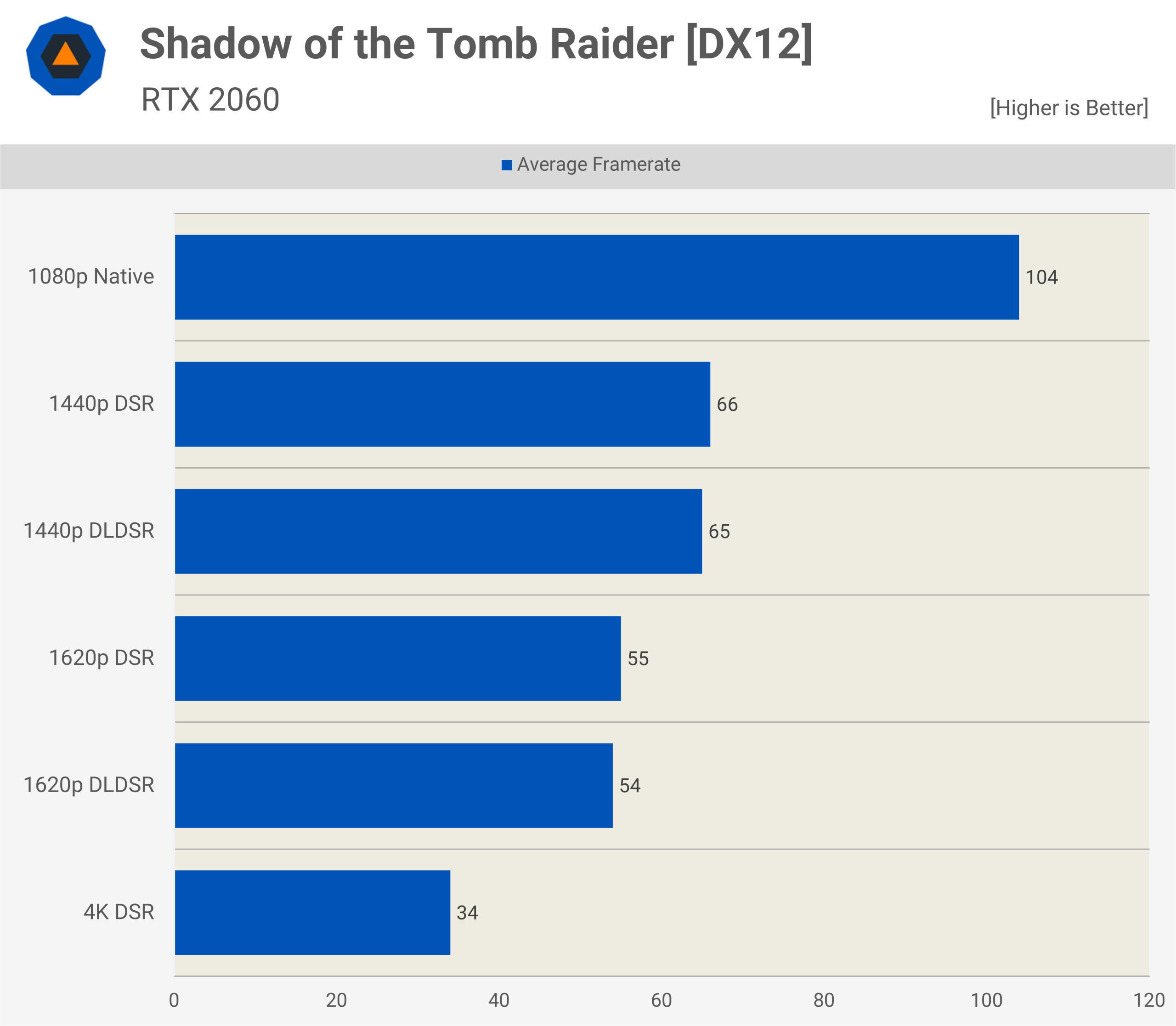

To compare performance between the various DSR and DLDSR factors, we used a Windows 10 machine with an RTX 2060 paired with a Ryzen 5600X and 16GB of DDR4 RAM running at 3200 MT/s with a CAS latency of 14 at XMP timings. Performance was measured using the integrated benchmark of Shadow of the Tomb Raider at the highest preset, and the results are as follows.

As you can see, there's a significant performance drop over native 1080p when using DSR and DLDSR, which is expected as the game is rendered at a much higher resolution.

Notice, however, the small difference between DSR and DLDSR at the same render resolution, which can be explained by the processing overhead the Tensor Cores introduce.

Now what's interesting is Nvidia's claim that "the image quality of DLDSR 2.25X is comparable to that of DSR 4X, but with higher performance." The performance part is definitely accurate, so let's see if the quality claim holds water as well.

Above is a screenshot of Bethesda's Prey taken from Nvidia's marketing material. Pay closer attention to vertical and horizontal lines, for example at the supporting wires for the ceiling elements in the background.

1620p DLDSR manages to recreate them much better than the native 1080p resolution and it also comes very close to the 4K DSR representation with a hefty boost in performance as well.

Notice that, based on this, 1080p and 1620p DLDSR seem to perform the same, but this most likely happens because we are looking at a CPU bottleneck scenario. In a purely GPU-bound situation, we have seen that's not the case.

Using DLDSR and DLSS Together

When a game supports DLSS, it is a good idea to use it alongside DLDSR to reclaim some of the performance loss while still keeping better image quality than native.

DLDSR essentially forces DLSS to use a higher base resolution, as DLSS renders at a fraction of the game's output resolution, producing a cleaner image as a result. You also benefit from the better anti-aliasing DLSS sometimes offers, especially in cases where the game's TAA implementation is not so great.
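A rough Python sketch of the arithmetic follows. The per-axis DLSS scales used here (two thirds for Quality, one half for Performance) are commonly cited values and an assumption, not something measured for this article. The names are hypothetical.

```python
# Assumption: commonly cited per-axis DLSS render scales, not taken
# from this article's testing.
DLSS_AXIS_SCALE = {"Quality": 2 / 3, "Performance": 0.5}

def dlss_internal_resolution(output_width, output_height, mode):
    """Return the (width, height) DLSS renders at before upscaling."""
    scale = DLSS_AXIS_SCALE[mode]
    return round(output_width * scale), round(output_height * scale)

# Native 1080p output versus a 2.25x DLDSR target of 2880x1620.
for label, (width, height) in {"native 1080p": (1920, 1080),
                               "DLDSR 2.25x": (2880, 1620)}.items():
    for mode in DLSS_AXIS_SCALE:
        internal = dlss_internal_resolution(width, height, mode)
        print(f"{label}, DLSS {mode}: renders at {internal[0]}x{internal[1]}")
```

Under these assumptions, DLSS Quality on top of 2.25x DLDSR renders internally at 1920x1080, the same as a 1080p monitor's native resolution, instead of the 1280x720 it would use at native output.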

DLDSR vs Resolution Scaling

Some games offer a resolution scaling or image scaling slider in their graphics settings menu as an additional method of anti-aliasing. Essentially, it lets you set a percentage of your native resolution (e.g. 125% or 150%) at which the rendering will be performed, and then the image is downscaled the same way we discussed above.
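To compare a slider percentage with a DSR factor, a small Python sketch can help. It assumes the slider applies to each axis, which varies from game to game, so treat the result as an estimate. The function name is hypothetical.

```python
def equivalent_dsr_factor(scale_percent):
    """Convert a per-axis resolution scale (in %) to a pixel-count factor.

    Assumption: the game applies the percentage to both width and
    height. Some games may apply it to the pixel count instead.
    """
    axis_scale = scale_percent / 100
    return axis_scale ** 2

for percent in (125, 150, 200):
    print(f"{percent}% per axis is about {equivalent_dsr_factor(percent):.2f}x the pixels")
```

Under this assumption, a 150% slider renders the same number of pixels as the 2.25x factor, and 200% matches 4x.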

Resolution scaling differs from DLDSR in that it is an in-game solution rather than something that works at the driver/hardware level, and it would potentially suffer from the same issues DSR does, as it does not use any neural network to assist with the scaling filter.

In general, DLDSR is preferable, but depending on the game engine and how it is implemented, image scaling may fare better for a particular game, so you can play around with both and decide for yourself.

Potential Issues

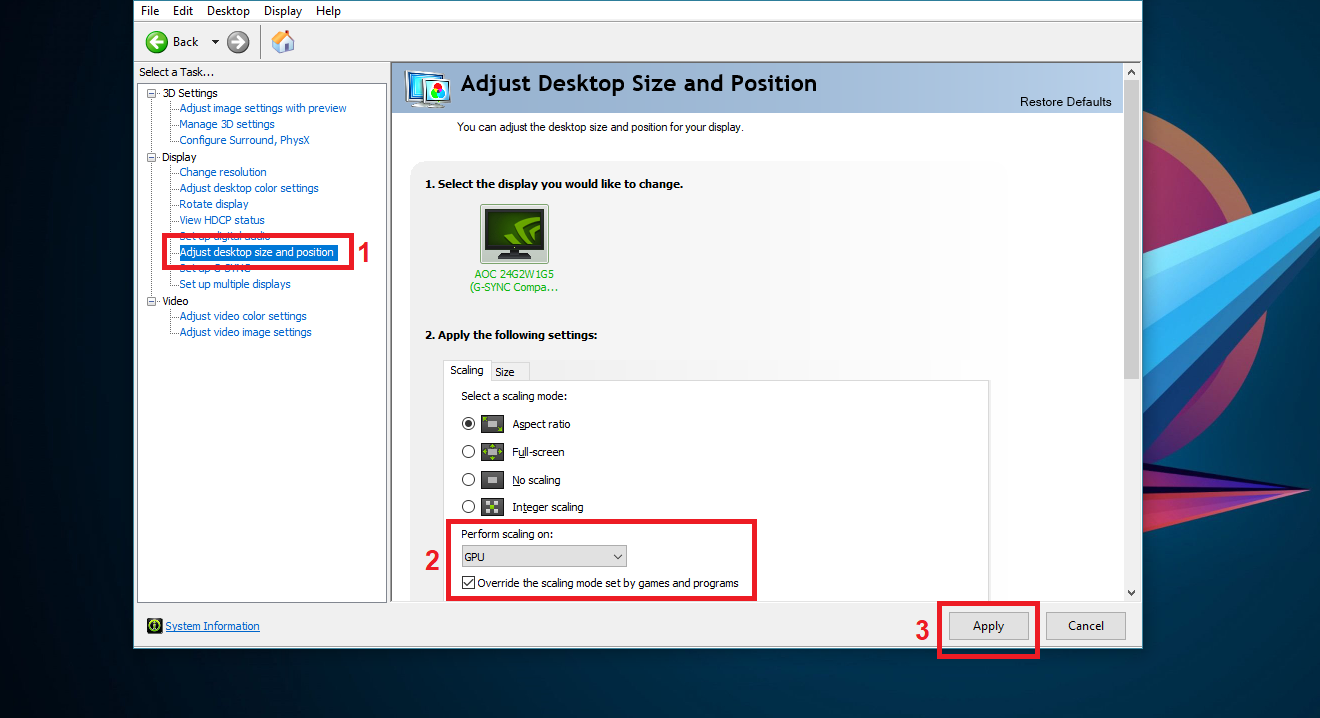

Depending on the game, you may encounter a few issues when using DLDSR. In some cases the image is not scaled down correctly and you end up with the game screen being too large to fit within your monitor.

A workaround is to change the device on which the scaling is performed from the monitor, which is the default option, to the GPU, and to use the checkbox underneath to make sure all programs use this exact scaling mode.

The problem with this workaround is that it sometimes breaks other games which behave correctly with scaling performed on the monitor. There is not much to do about it other than change the scaling mode back and forth, depending on the game you are playing.

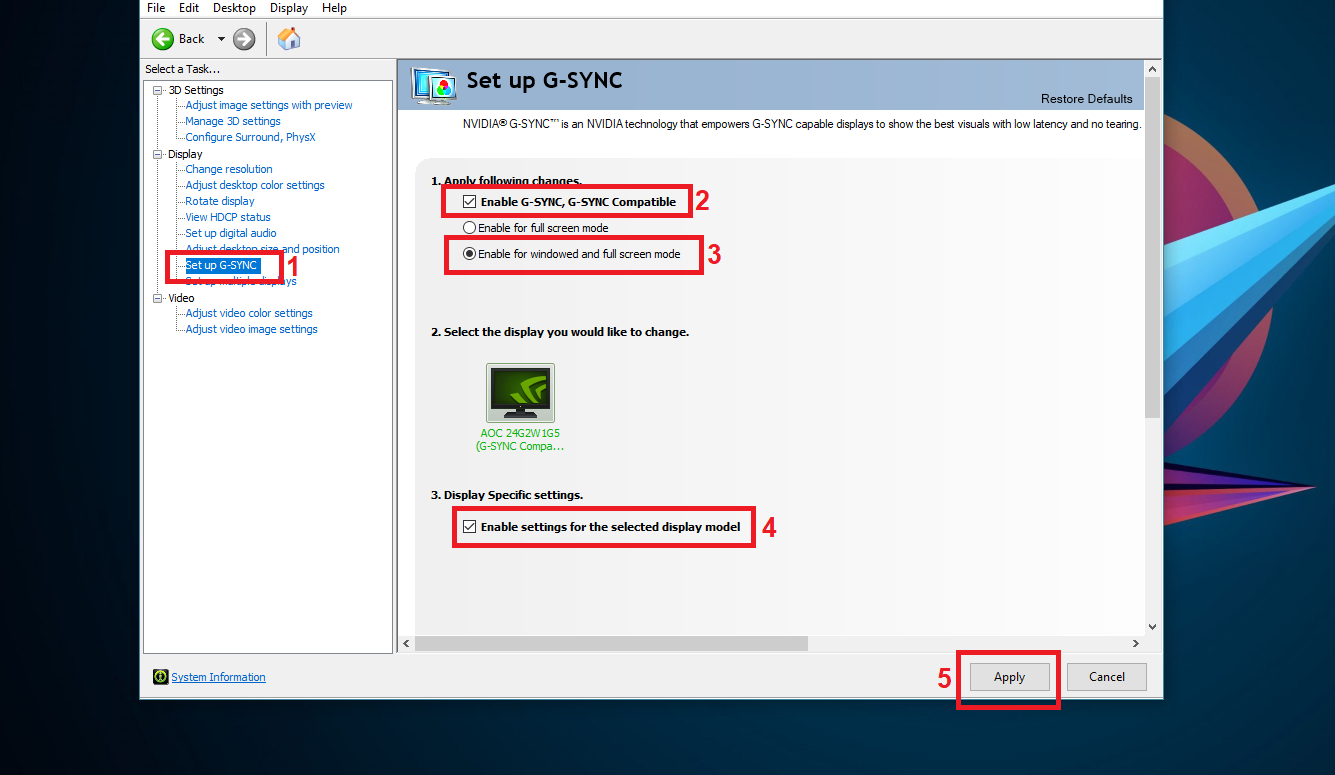

Another issue you may come across when using DLDSR on a variable refresh rate monitor is that G-Sync stops working. You can solve it by going to the Nvidia Control Panel and enabling G-Sync for windowed and full screen mode.

A little tip to make sure that G-Sync works as it should is to enable the framerate counter from the monitor's on-screen-display and check that it changes according to the game's framerate.

This shows the true refresh rate of the monitor, while tools like RTSS only report the GPU's frame rate output. Lastly, DLDSR will make any kind of overlay (e.g. RTSS, Nvidia Frameview, Steam's overlay, etc.) appear smaller than at native resolution, as overlays get scaled down, too. Keep in mind that your mileage may vary depending on the game you are playing and the monitor you are using, and you may or may not encounter all of the issues mentioned above.

Nvidia DLDSR is yet another feature modern GPUs offer to make your gaming experience a little bit more enjoyable. Taking into account the findings when comparing ultra graphics preset versus high, it may be preferable to settle for lower than ultra settings and use the excess GPU performance headroom to give a boost to the render resolution as that will likely result in more noticeable image quality improvements, clarity and anti-aliasing. If you own a supported graphics card give DLDSR a chance and you may be impressed by the results.