For the past three years Nvidia has been making graphics chips that feature extra cores, beyond the normal ones used for shaders. Known as tensor cores, these mysterious units can be found in thousands of desktop PCs, laptops, workstations, and data centers around the world. But what exactly are they and what are they used for? Do you even really need them in a graphics card?

Today we'll explain what a tensor is and how tensor cores are used in the world of graphics and deep learning.

Time for a Quick Math Lesson

To understand exactly what tensor cores do and what they can be used for, we first need to cover exactly what tensors are. Microprocessors, regardless of what form they come in, all perform math operations (add, multiply, etc.) on numbers.

Sometimes these numbers need to be grouped together, because they have some meaning to each other. For example, when a chip is processing data for rendering graphics, it may be dealing with single integer values (such as +2 or +115) for a scaling factor, or a group of floating point numbers (+0.1, -0.5, +0.6) for the coordinates of a point in 3D space. In the case of the latter, describing the position requires all three pieces of data.

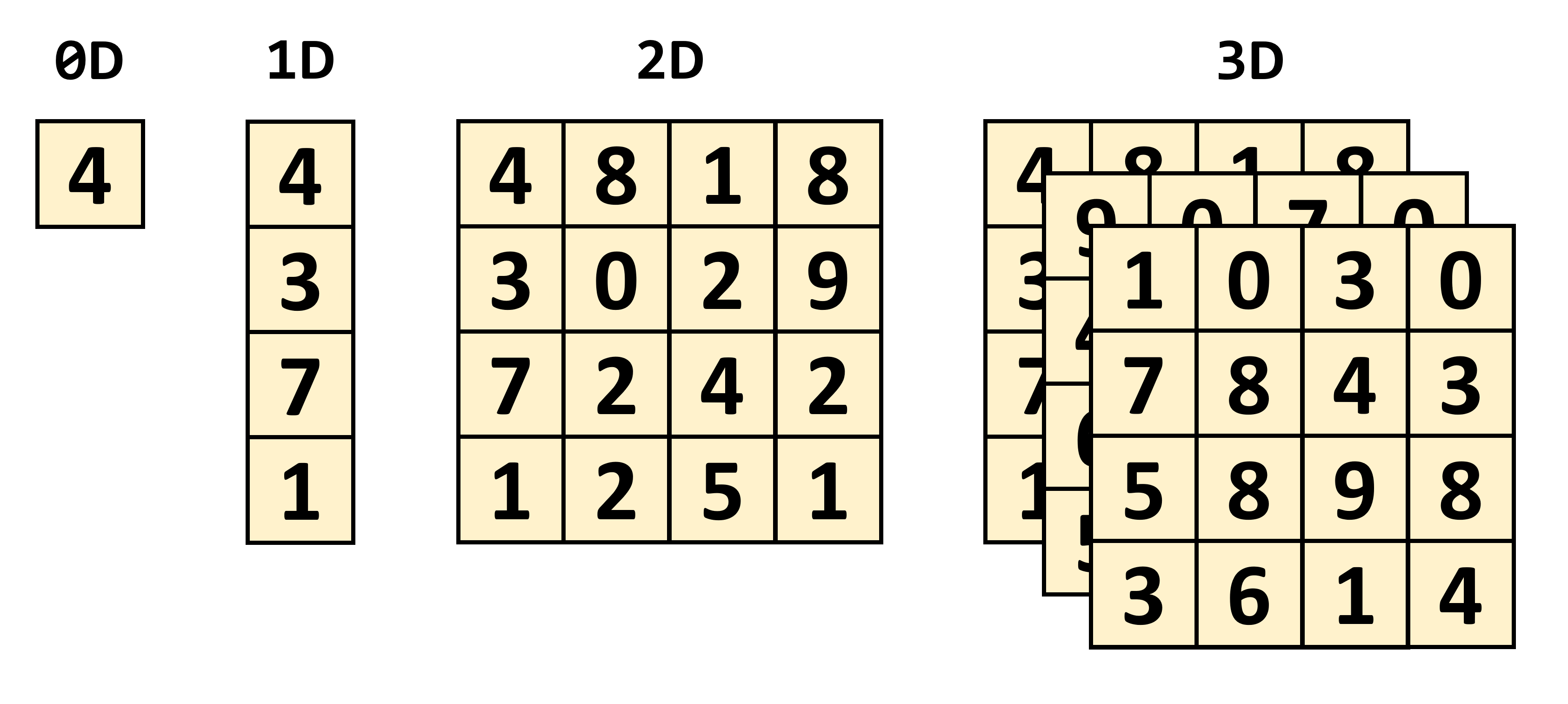

A tensor is a mathematical object that describes the relationship between other mathematical objects that are all linked together. They are commonly shown as an array of numbers, where the dimension of the array can be viewed as shown below.

The simplest type of tensor you can get would have zero dimensions, and consist of a single value – another name for this is a scalar quantity. As we start to increase the number of dimensions, we can come across other common math structures:

- 1 dimension = vector

- 2 dimensions = matrix

Strictly speaking, a scalar is a type (0, 0) tensor, a vector is type (1, 0), and a matrix is type (1, 1), but for the sake of simplicity and how it relates to tensor cores in a graphics processor, we'll just deal with tensors in the form of matrices.
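To make the scalar, vector, matrix progression concrete, here is a minimal Python sketch that treats nested lists as tensors and counts how deeply they are nested. The helper name `num_dimensions` is our own invention for illustration, and it assumes every list is non-empty and regularly shaped.

```python
def num_dimensions(tensor):
    """Count the levels of list nesting (hypothetical helper, non-empty lists assumed)."""
    depth = 0
    while isinstance(tensor, list):
        depth += 1
        tensor = tensor[0]  # step into the first element of the next level
    return depth


scalar = 115                    # a single value: 0 dimensions
vector = [0.1, -0.5, 0.6]       # a point in 3D space: 1 dimension
matrix = [[1, 2], [3, 4]]       # rows and columns: 2 dimensions

print(num_dimensions(scalar), num_dimensions(vector), num_dimensions(matrix))
```

Each extra level of nesting adds one dimension, which is all the array view of a tensor really amounts to.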

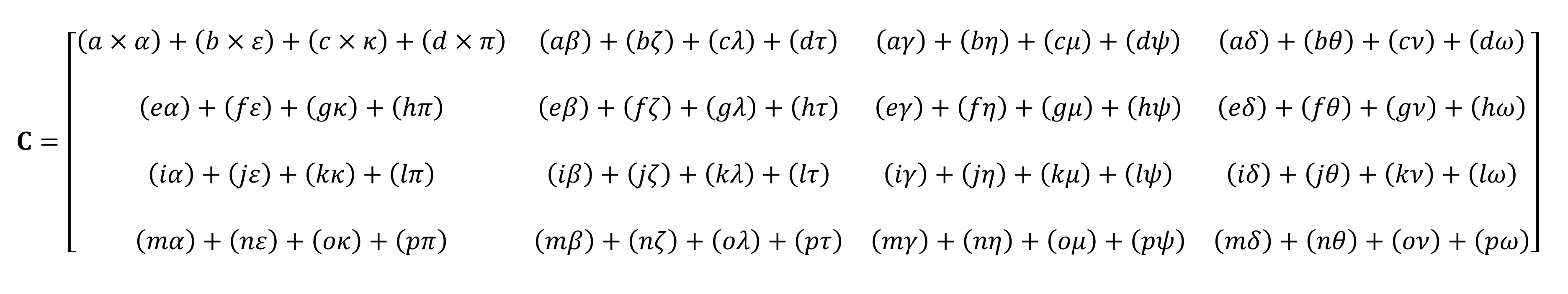

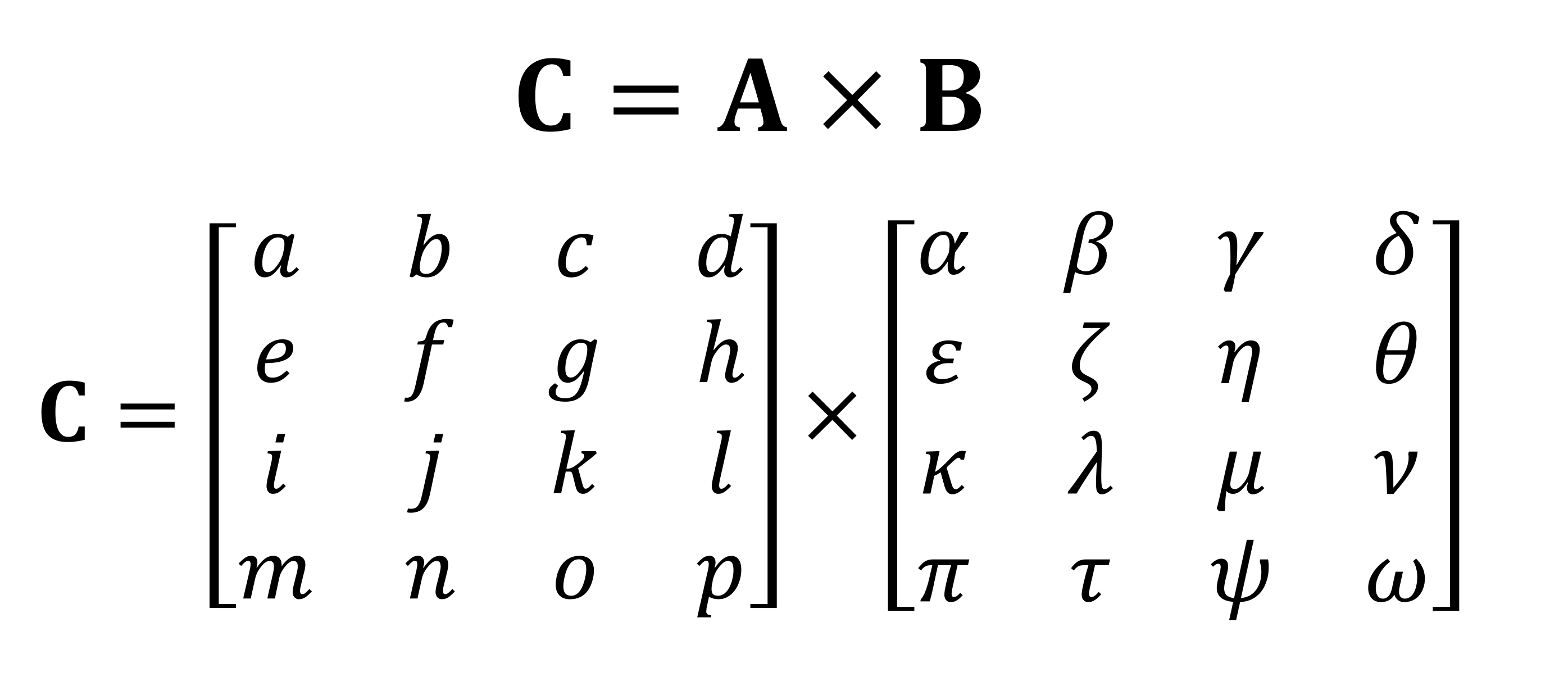

One of the most important math operations done with matrices is a multiplication (or product). Let's take a look at how two matrices, both with 4 rows and columns of values, get multiplied together:

The final answer to the multiplication always has the same number of rows as the first matrix, and the same number of columns as the second one. So how do you multiply these two arrays? Like this:

As you can see, a 'simple' matrix product calculation consists of a whole stack of little multiplications and additions. Since every CPU on the market today can do both of these operations, it means that any desktop, laptop, or tablet can handle basic tensors.

However, the above example contains 64 multiplications and 48 additions; each small product results in a value that has to be stored somewhere, before it can be accumulated with the other 3 little products, before that final value for the tensor can be stored somewhere. So although matrix multiplications are mathematically straightforward, they're computationally intensive – lots of registers need to be used, and the cache needs to cope with lots of reads and writes.
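Those operation counts are easy to check for yourself. The sketch below is a plain, unoptimized matrix product in Python that tallies every multiplication and addition as it goes. The function name `matmul_counted` is ours, and the code is only meant to illustrate the arithmetic, not how any real chip schedules the work.

```python
def matmul_counted(a, b):
    """Multiply two list-of-lists matrices, counting the scalar operations used."""
    rows, inner, cols = len(a), len(b), len(b[0])
    result = [[0.0] * cols for _ in range(rows)]
    mults = adds = 0
    for i in range(rows):
        for j in range(cols):
            # First little product starts the running total
            total = a[i][0] * b[0][j]
            mults += 1
            # The remaining little products are accumulated onto it
            for k in range(1, inner):
                total += a[i][k] * b[k][j]
                mults += 1
                adds += 1
            result[i][j] = total
    return result, mults, adds


a = [[float(r * 4 + c) for c in range(4)] for r in range(4)]
identity = [[1.0 if r == c else 0.0 for c in range(4)] for r in range(4)]

product, mults, adds = matmul_counted(a, identity)
print(mults, adds)  # 64 48
```

Each of the 16 output values needs 4 multiplications and 3 additions, which is where the 64 and 48 come from.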

CPUs from AMD and Intel have offered various extensions over the years (MMX, SSE, now AVX – all of them are SIMD, single instruction multiple data) that allow the processor to handle lots of floating point numbers at the same time; exactly what matrix multiplications need.

But there is a specific type of processor that is especially designed to handle SIMD operations: graphics processing units (GPUs).

Smarter Than Your Average Calculator?

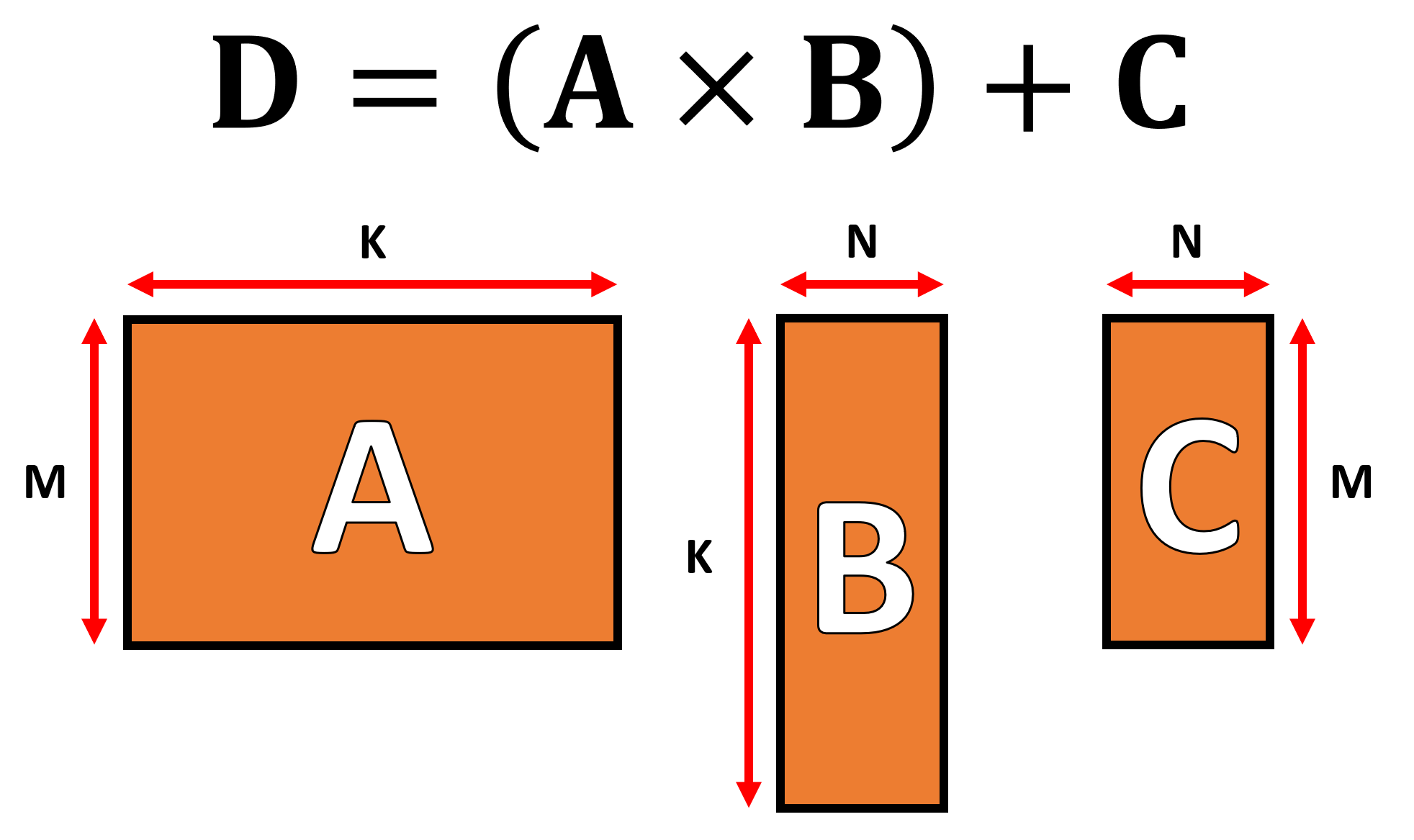

In the world of graphics, a huge amount of data needs to be moved about and processed in the form of vectors, all at the same time. The parallel processing capability of GPUs makes them ideal for handling tensors and all of them today support something called a GEMM (General Matrix Multiplication).

This is a 'fused' operation, where two matrices are multiplied together, and the result is then accumulated with another matrix. There are some important restrictions on what format the matrices must take and they revolve around the number of rows and columns each matrix has.
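As a rough illustration of the multiply-then-accumulate idea, here is a small Python sketch that computes A x B + C and enforces the row and column restrictions. The function name `gemm` and the error messages are our own. GEMM routines in BLAS-style libraries also take scaling factors for each term, which we leave out to keep things simple.

```python
def gemm(a, b, c):
    """Return a x b + c for list-of-lists matrices (illustrative sketch only)."""
    rows, inner, cols = len(a), len(b), len(b[0])
    # Columns of the first matrix must match rows of the second
    if len(a[0]) != inner:
        raise ValueError("columns of a must equal rows of b")
    # The accumulator must have the same shape as the product
    if len(c) != rows or len(c[0]) != cols:
        raise ValueError("c must have the same shape as a x b")
    return [
        [c[i][j] + sum(a[i][k] * b[k][j] for k in range(inner)) for j in range(cols)]
        for i in range(rows)
    ]


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
c = [[1, 1], [1, 1]]

print(gemm(a, b, c))  # [[20, 23], [44, 51]]
```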

The algorithms used to carry out matrix operations tend to work best when matrices are square (for example, using 10 x 10 arrays would work better than 50 x 2) and fairly small in size. But they still work better when processed on hardware that is solely dedicated to these operations.

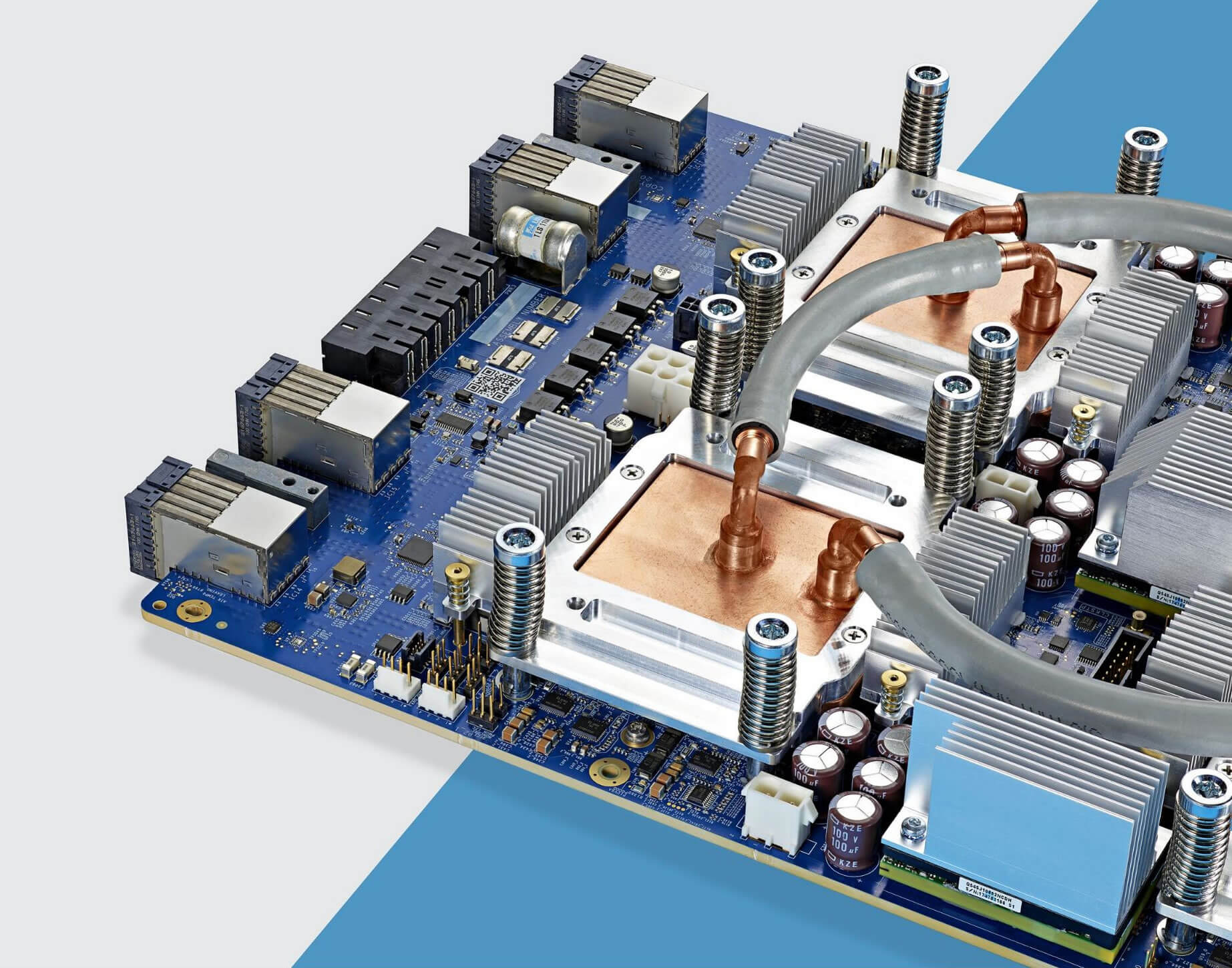

In December 2017, Nvidia released a graphics card sporting a GPU with a new architecture called Volta. It was aimed at professional markets, so no GeForce models ever used this chip. What made it special was that it was the first graphics processor to have cores just for tensor calculations.

With zero imagination behind the naming, Nvidia's tensor cores were designed to carry out 64 GEMMs per clock cycle on 4 x 4 matrices, containing FP16 values (floating point numbers 16 bits in size) or FP16 multiplication with FP32 addition. Such tensors are very small in size, so when handling actual data sets, the cores would crunch through little blocks of larger matrices, building up the final answer.
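The idea of building up a big answer from little 4 x 4 blocks can be sketched in a few lines of Python. This is only a software analogy under our own assumptions: the names `TILE`, `tile_multiply_accumulate`, and `tiled_matmul` are made up for the example, the matrices are assumed to be square with a size that is a multiple of 4, and ordinary Python floats stand in for the FP16 and FP32 formats.

```python
TILE = 4  # block size, matching the 4 x 4 matrices described above


def tile_multiply_accumulate(a, b, acc, i0, j0, k0):
    """acc block at (i0, j0) += a block at (i0, k0) x b block at (k0, j0)."""
    for i in range(i0, i0 + TILE):
        for j in range(j0, j0 + TILE):
            for k in range(k0, k0 + TILE):
                acc[i][j] += a[i][k] * b[k][j]


def tiled_matmul(a, b):
    """Multiply two n x n matrices one 4 x 4 block at a time (n a multiple of 4)."""
    n = len(a)
    if n % TILE != 0:
        raise ValueError("matrix size must be a multiple of the tile size")
    acc = [[0.0] * n for _ in range(n)]
    for i0 in range(0, n, TILE):          # block row of the result
        for j0 in range(0, n, TILE):      # block column of the result
            for k0 in range(0, n, TILE):  # walk along the shared dimension
                tile_multiply_accumulate(a, b, acc, i0, j0, k0)
    return acc


a = [[float(i + j) for j in range(8)] for i in range(8)]
b = [[float(i - j) for j in range(8)] for i in range(8)]
result = tiled_matmul(a, b)
print(result[0][0])
```

For an 8 x 8 product, the inner helper runs eight times, with each call doing the job of one small block operation.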

Less than a year later, Nvidia launched the Turing architecture. This time the consumer-grade GeForce models sported tensor cores, too. The system had been updated to support other data formats, such as INT8 (8-bit integer values), but other than that, they still worked just as they did in Volta.

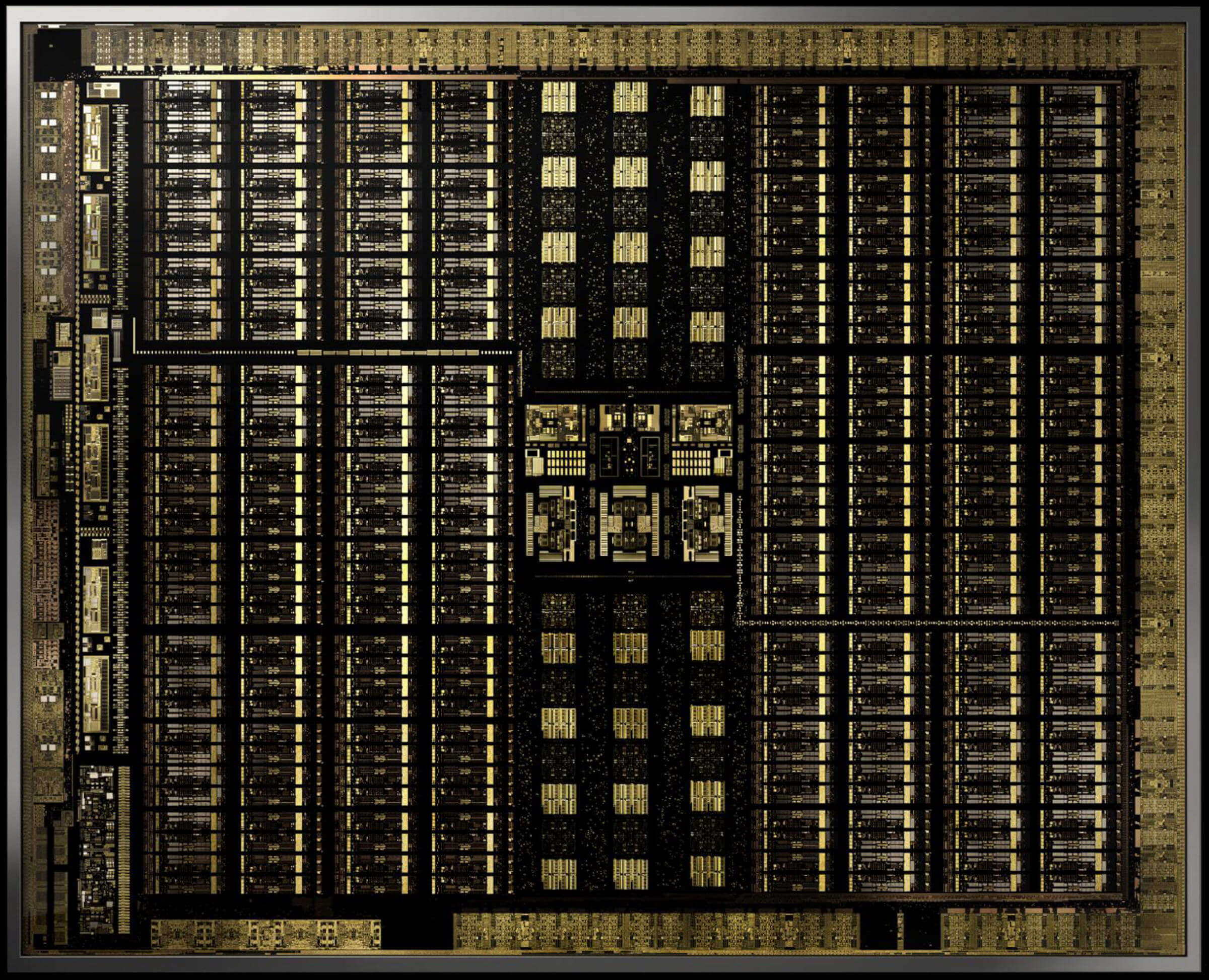

Earlier this year, the Ampere architecture made its debut in the A100 data center graphics processor, and this time Nvidia improved the performance (256 GEMMs per cycle, up from 64), added further data formats, and the ability to handle sparse tensors (matrices with lots of zeros in them) very quickly.

For programmers, accessing tensor cores in any of the Volta, Turing, or Ampere chips is easy: the code simply needs to use a flag to tell the API and drivers that you want to use tensor cores, the data type needs to be one supported by the cores, and the dimensions of the matrices need to be a multiple of 8. After that, the hardware will handle everything else.
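If your matrices don't already meet the multiple-of-8 rule, one common workaround is to pad them with zeros, since extra zero rows and columns don't change the original entries of a product. The Python sketch below shows the idea. The helpers `round_up` and `pad_matrix` are hypothetical names of our own and don't belong to any Nvidia API.

```python
def round_up(n, multiple=8):
    """Round n up to the next multiple (ceiling division, then scale back up)."""
    return -(-n // multiple) * multiple


def pad_matrix(matrix, multiple=8):
    """Return a zero-padded copy whose row and column counts are multiples of `multiple`."""
    rows, cols = len(matrix), len(matrix[0])
    new_rows, new_cols = round_up(rows, multiple), round_up(cols, multiple)
    # Extend each existing row with zeros on the right
    padded = [row + [0.0] * (new_cols - cols) for row in matrix]
    # Append all-zero rows at the bottom
    padded += [[0.0] * new_cols for _ in range(new_rows - rows)]
    return padded


small = [[1.0] * 13 for _ in range(5)]   # 5 x 13: neither dimension is a multiple of 8
padded = pad_matrix(small)
print(len(padded), len(padded[0]))  # 8 16
```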

This is all nice, but just how much better are tensor cores at handling GEMMs than the normal cores in a GPU?

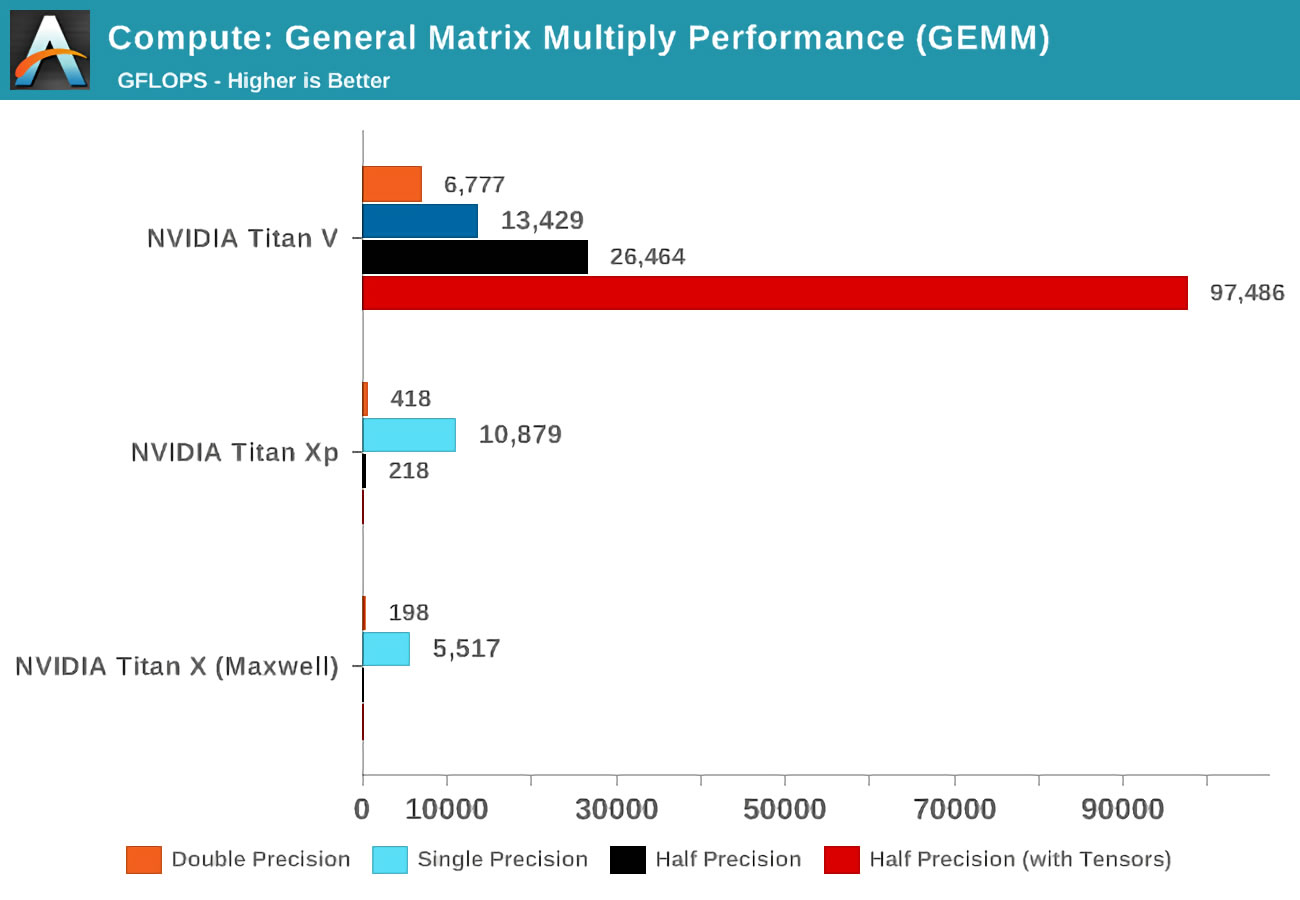

When Volta first appeared, Anandtech carried out some math tests using three Nvidia cards: the new Volta, a top-end Pascal-based one, and an older Maxwell card.

The term precision refers to the number of bits used for the floating point numbers in the matrices, with double being 64, single being 32, and so on. The horizontal axis refers to the peak number of FP operations carried out per second or FLOPs for short (remember that one GEMM is 3 FLOP).

Just look what the result was when the tensor cores were used, instead of the standard so-called CUDA cores! They're clearly fantastic at doing this kind of work, so just what can you do with tensor cores?

Math to Make Everything Better

Tensor math is extremely useful in physics and engineering, and is used to solve all kinds of complex problems in fluid mechanics, electromagnetism, and astrophysics, but the computers used to crunch these numbers tend to do the matrix operations on large clusters of CPUs.

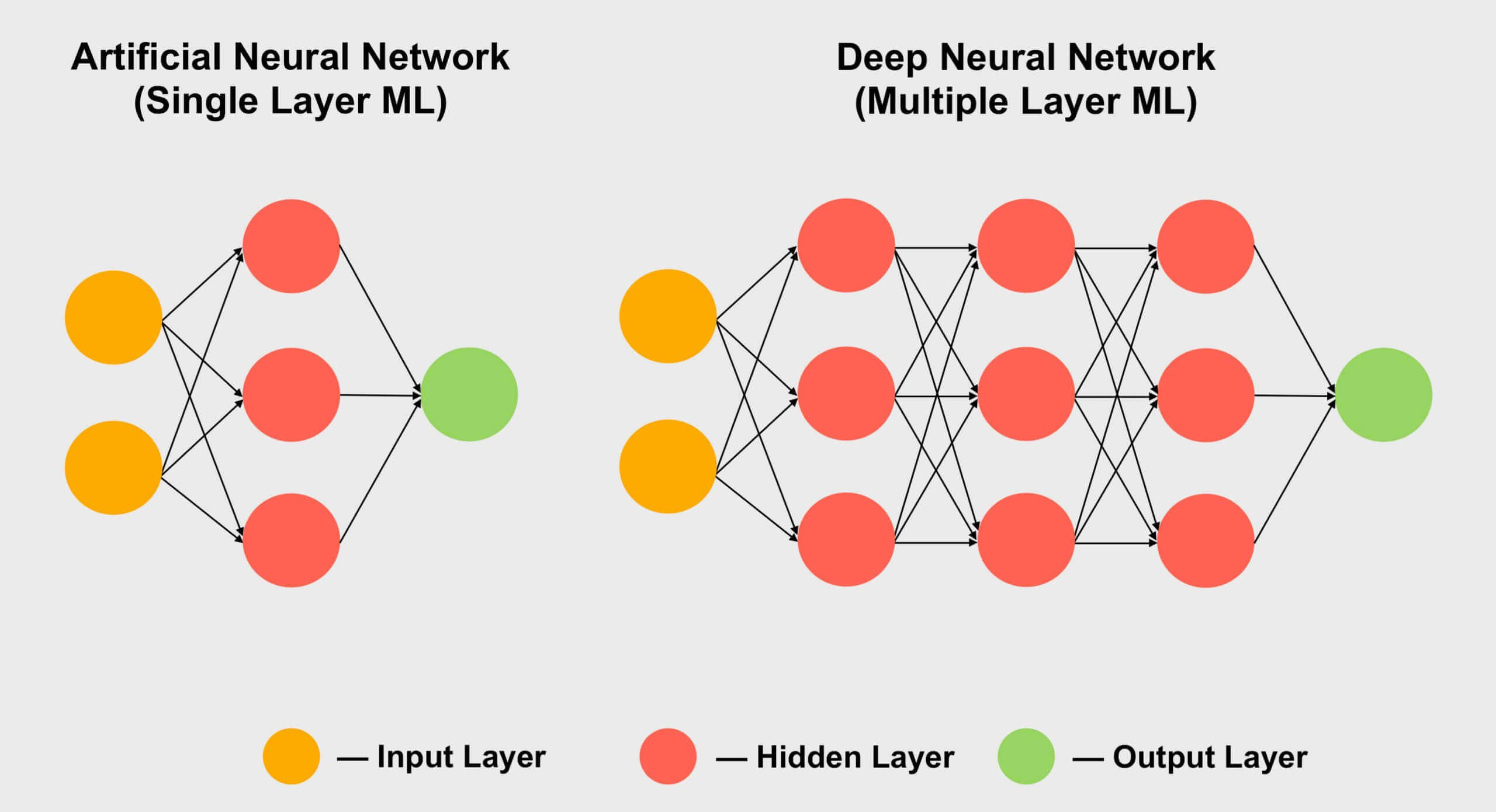

Another field that loves using tensors is machine learning, especially the subset deep learning. This is all about handling huge collections of data, in enormous arrays called neural networks. The connections between the various data values are given a specific weight – a number that expresses how important that connection is.

So when you need to work out how all of the hundreds, if not thousands, of connections interact, you need to multiply each piece of data in the network by all the different connection weights. In other words, multiply two matrices together: classic tensor math!
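Here's a tiny Python sketch of that idea: a batch of inputs multiplied by a grid of connection weights. The name `layer_forward` and the numbers are made up for illustration, and a real network layer would normally also add a bias and apply an activation function, both of which we've left out.

```python
def layer_forward(inputs, weights):
    """Multiply a (samples x n_in) input matrix by an (n_in x n_out) weight matrix."""
    n_in, n_out = len(weights), len(weights[0])
    return [
        [sum(sample[k] * weights[k][j] for k in range(n_in)) for j in range(n_out)]
        for sample in inputs
    ]


# Two samples with three values each, feeding two output neurons
inputs = [[1.0, 2.0, 3.0],
          [0.0, 1.0, 0.0]]
weights = [[0.5, -1.0],
           [0.25, 0.0],
           [1.0, 2.0]]

outputs = layer_forward(inputs, weights)
print(outputs)  # [[4.0, 5.0], [0.25, 0.0]]
```

Scale that up to thousands of inputs and weights per layer, and it's clear why hardware built for matrix products pays off.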

This is why all the big deep learning supercomputers are packed with GPUs and nearly always Nvidia's. However, some companies have gone as far as making their own tensor core processors. Google, for example, announced their first TPU (tensor processing unit) in 2016 but these chips are so specialized, they can't do anything other than matrix operations.

Tensor Cores in Consumer GPUs (GeForce RTX)

But what if you've got an Nvidia GeForce RTX graphics card and you're not an astrophysicist solving problems with Riemannian manifolds, or experimenting with the depths of convolutional neural networks...? What use are tensor cores for you?

For the most part, they're not used for normal rendering, encoding or decoding videos, which might seem like you've wasted your money on a useless feature. However, Nvidia put tensor cores into their consumer products in 2018 (Turing GeForce RTX) while introducing DLSS – Deep Learning Super Sampling.

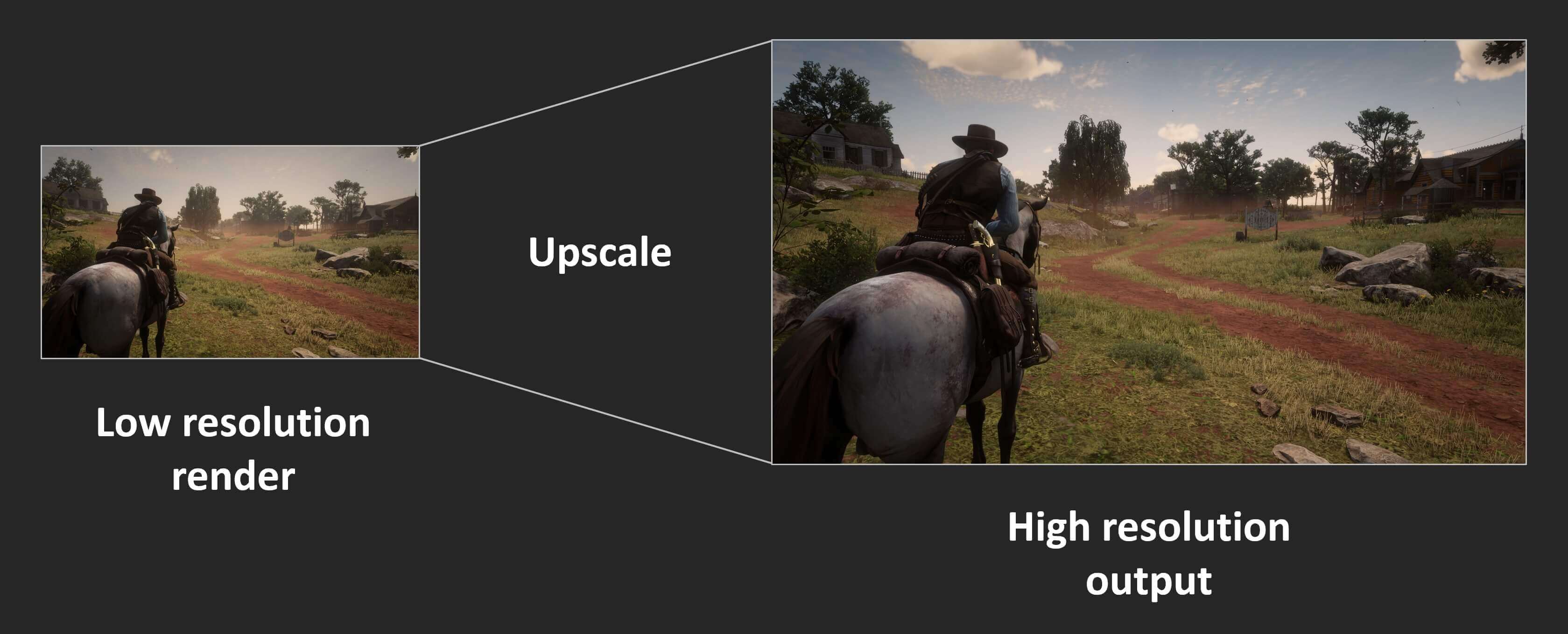

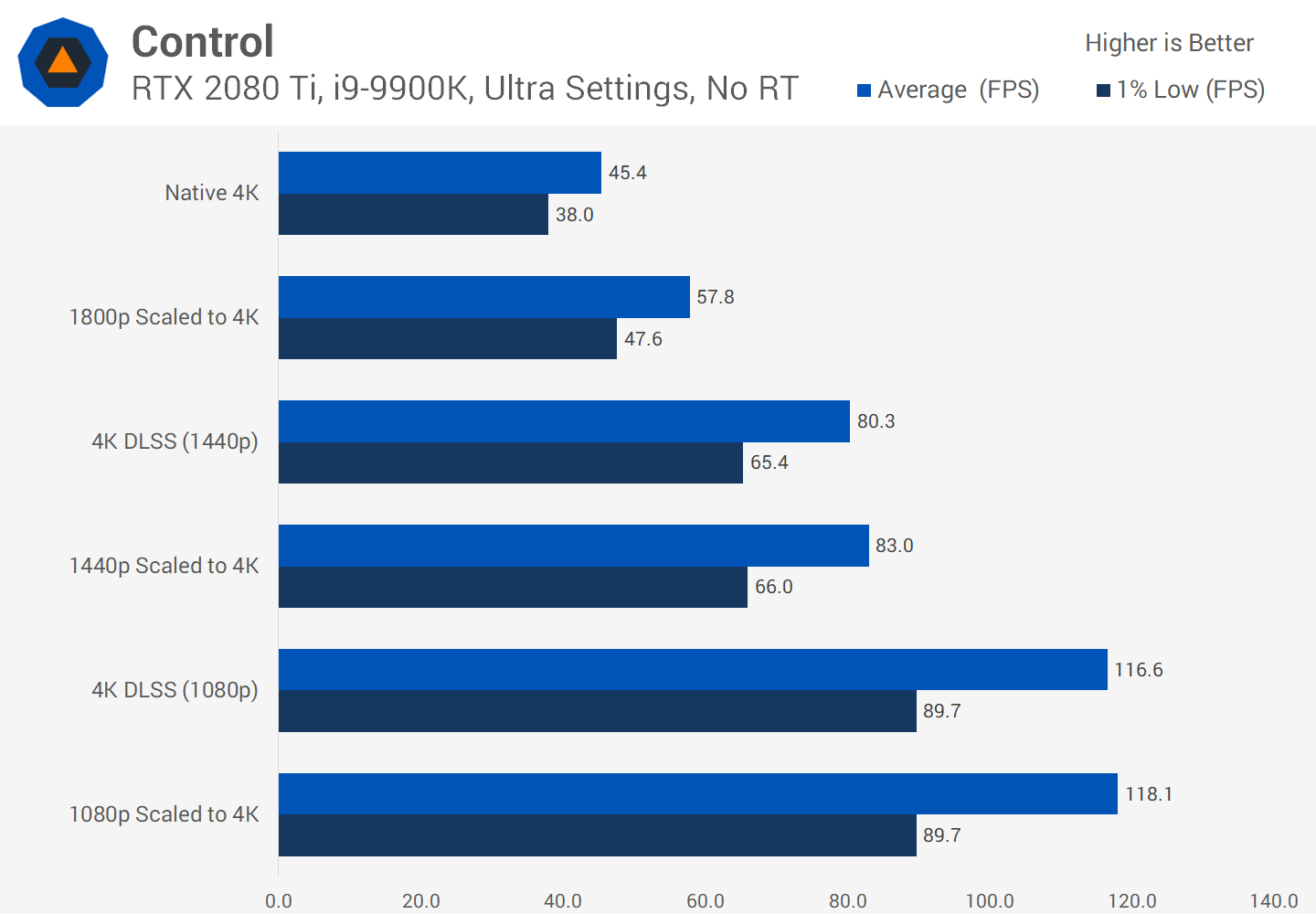

The basic premise is simple: render a frame at low-ish resolution and when finished, increase the resolution of the end result so that it matches the native screen dimensions of the monitor (e.g. render at 1080p, then resize it to 1440p). That way you get the performance benefit of processing fewer pixels, but still get a nice looking image on the screen.
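To put some numbers on that, the Python sketch below works out how many fewer pixels a 1080p frame has than a 1440p one, then resizes a tiny image using nearest-neighbor sampling. This is the crudest possible resize and has nothing to do with how DLSS works internally. The function names are our own, and we're assuming the usual 1920 x 1080 and 2560 x 1440 dimensions for those two resolutions.

```python
def pixel_ratio(low, high):
    """Fraction of the high-resolution pixel count that the low resolution renders."""
    return (low[0] * low[1]) / (high[0] * high[1])


def upscale_nearest(image, new_width, new_height):
    """Resize a list-of-rows image by copying the nearest source pixel."""
    old_height, old_width = len(image), len(image[0])
    return [
        [image[y * old_height // new_height][x * old_width // new_width]
         for x in range(new_width)]
        for y in range(new_height)
    ]


# Assumed dimensions: 1080p = 1920 x 1080, 1440p = 2560 x 1440
print(pixel_ratio((1920, 1080), (2560, 1440)))  # 0.5625

tiny_frame = [[1, 2],
              [3, 4]]
for row in upscale_nearest(tiny_frame, 4, 4):
    print(row)
```

Rendering just over half the pixels is where the performance comes from. The repeated values in the resized image show why a naive resize can't add any detail that wasn't in the original frame.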

Consoles have been doing something like this for years, and plenty of today's PC games offer the ability, too. In Ubisoft's Assassin's Creed: Odyssey, you can change the rendering resolution right down to just 50% of the monitor's. Unfortunately, the result doesn't look so hot. This is what the game looks like at 4K, with maximum graphics settings applied:

Running at high resolutions means textures look a lot better, as they retain fine detail. Unfortunately, all those pixels take a lot of processing to churn out. Now look what happens when the game is set to render at 1080p (25% of the pixel count of 4K), and then uses shaders at the end to expand it back out to 4K.

The difference might not be immediately obvious, thanks to JPEG compression and the rescaling of the images on our website, but the character's armor and the distant rock formation are somewhat blurred. Let's zoom into a section for a closer inspection:

The left section has been rendered natively at 4K; on the right, it's 1080p upscaled to 4K. The difference is far more pronounced once motion is involved, as the softening of all the details rapidly becomes a blurry mush. Some of this could be clawed back by using a sharpening effect in the graphics card's drivers, but it would be better to not have to do this at all.

This is where DLSS plays its hand – in Nvidia's first iteration of the technology, selected games were analyzed, running them at low resolutions, high resolutions, with and without anti-aliasing. All of these modes generated a wealth of images that were fed into their own supercomputers, which used a neural network to determine how best to turn a 1080p image into a perfect higher resolution one.

It has to be said that DLSS 1.0 wasn't great, with detail often lost or weird shimmering in some places. Nor did it actually use the tensor cores on your graphics card (that was done on Nvidia's network) and every game supporting DLSS required its own examination by Nvidia to generate the upscaling algorithm.

When version 2.0 came out in early 2020, some big improvements had been made. The most notable was that Nvidia's supercomputers were only used to create a general upscaling algorithm – in the new iteration of DLSS, data from the rendered frame would be used to process the pixels (via your GPU's tensor cores) using the neural model.

We remain impressed by what DLSS 2.0 can achieve, but for now very few games support it – just 12 in total, at the time of writing. More developers are looking to implement it in their future releases, though, and for good reasons.

There are big performance gains to be found, doing any kind of upscaling, so you can bet your last dollar that DLSS will continue to evolve.

Although the visual output of DLSS isn't always perfect, by freeing up rendering performance, developers have the scope to include more visual effects or offer the same graphics across a wider range of platforms.

Case in point, DLSS is often seen promoted alongside ray tracing in "RTX enabled" games. GeForce RTX GPUs pack additional compute units called RT cores: dedicated logic units for accelerating ray-triangle intersection and bounding volume hierarchy (BVH) traversal calculations. These two processes are time-consuming routines for working out where a light ray interacts with the rest of the objects within a scene.

As we've found out, ray tracing is super intensive, so in order to deliver playable performance game developers must limit the number of rays and bounces performed in a scene. This process can result in grainy images, too, so a denoising algorithm has to be applied, adding to the processing complexity. Tensor cores are expected to aid performance here using AI-based denoising, although that has yet to materialize with most current applications still using CUDA cores for the task. On the upside, with DLSS 2.0 becoming a viable upscaling technique, tensor cores can effectively be used to boost frame rates after ray tracing has been applied to a scene.

There are other plans for the tensor cores in GeForce RTX cards, too, such as better character animation or cloth simulation. But like DLSS 1.0 before them, it will be a while before hundreds of games are routinely using the specialized matrix calculators in GPUs.

Early Days But the Promise Is There

So there we go – tensor cores, nifty little bits of hardware, but only found in a small number of consumer-level graphics cards. Will this change in the future? Since Nvidia has already substantially improved the performance of a single tensor core in their latest Ampere architecture, there's a good chance that we'll see more mid-range and budget models sporting them, too.

While AMD and Intel don't have them in their GPUs, we may see something similar being implemented by them in the future. AMD does offer a system to sharpen or enhance the detail in completed frames, for a tiny performance cost, so they may well just stick to that – especially since it doesn't need to be integrated by developers; it's just a toggle in the drivers.

There's also the argument that die space in graphics chips could be better spent on just adding more shader cores, something Nvidia did when they built the budget versions of their Turing chips. The likes of the GeForce GTX 1650 dropped the tensor cores altogether, and replaced them with extra FP16 shaders.

But for now, if you want to experience super fast GEMM throughput and all the benefits this can bring, you've got two choices: get yourself a bunch of huge multicore CPUs or just one GPU with tensor cores.
