What just happened? Meta has announced it will start testing new Instagram tools that identify nude images sent in direct messages and blur them. The feature will be enabled by default for users under 18, while adults, for whom it will be opt-in, will be encouraged to turn it on. The social media giant hopes the update will help address, among other things, the increasing threat of sextortion against young users, and will encourage people to "think twice" before sending nudes.

For as long as there have been social media platforms, there have been concerns over their impact on young people, including addiction, mental health damage, exploitation, and scams such as sextortion. Instagram and Facebook owner Meta has been under increasing pressure recently to do more to fight these issues.

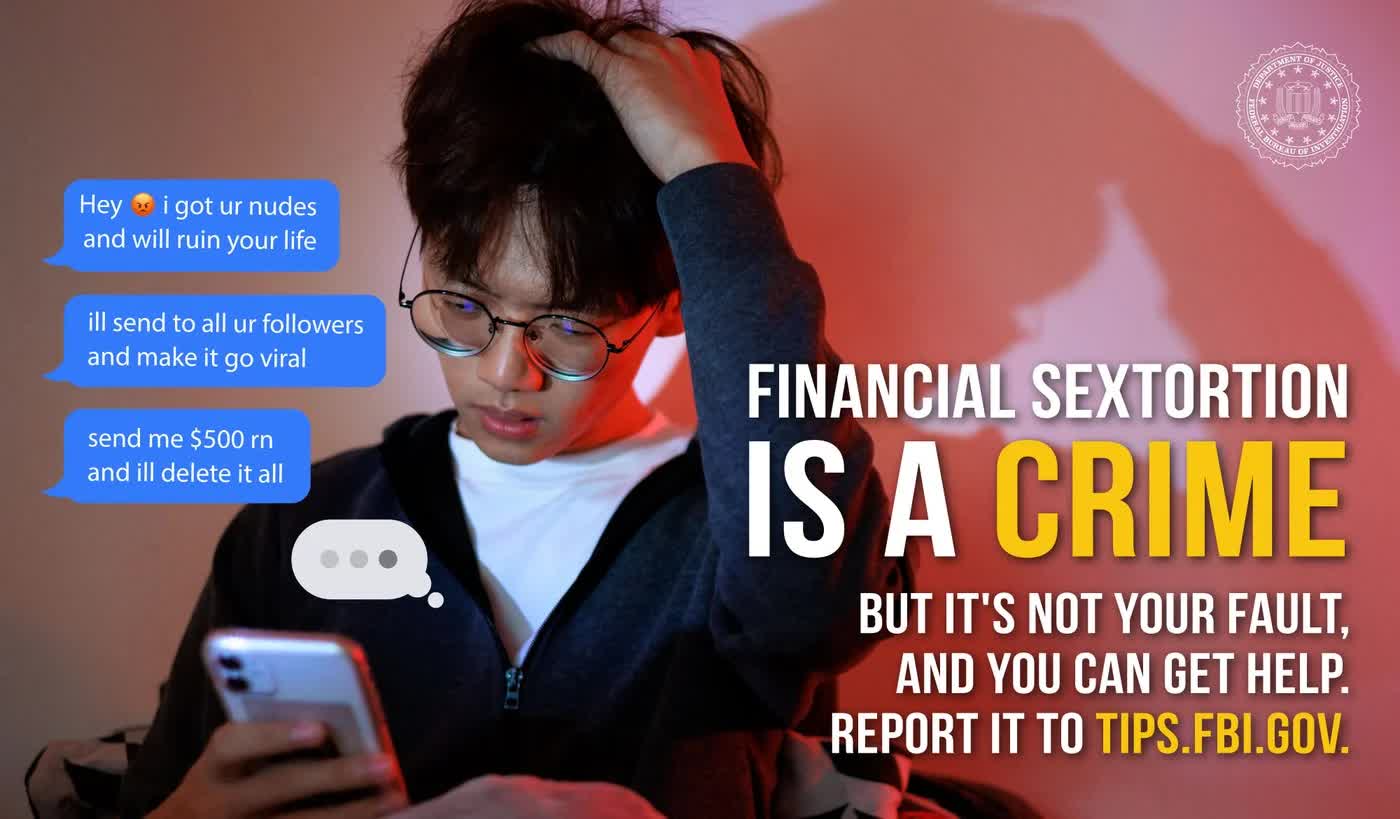

Sextortion on social media often involves someone striking up a conversation with a person and sending them a nude picture. The target is then invited to send one of their own. If they do, the criminals threaten to share it online and with the victim's friends and family unless their demands are met. Two Nigerian men pleaded guilty this week to sexually extorting teenage boys and young men in the US, including one who took his own life.

Meta added that it is developing technology that could identify accounts engaging in sextortion scams and is testing pop-up messages for users who may have interacted with these accounts.

Beyond sextortion, the feature should also protect against those who send unsolicited nudes. It will not report explicit images to Meta automatically – that will be left up to users – and people can still choose whether or not to view an image, though Meta will send a message encouraging them not to feel pressured to respond.

"Anyone who tries to forward a nude image they've received will see a message encouraging them to reconsider," Meta added. The company will also direct users to safety tips about sharing nudes, including warnings that images can be screenshotted and that their relationship with the recipient could change in the future.

Meta said the nudity-blurring tech will use on-device machine learning to identify explicit images, meaning it will also work in end-to-end encrypted chats once Meta rolls those out to Instagram – they are already part of Messenger and WhatsApp.