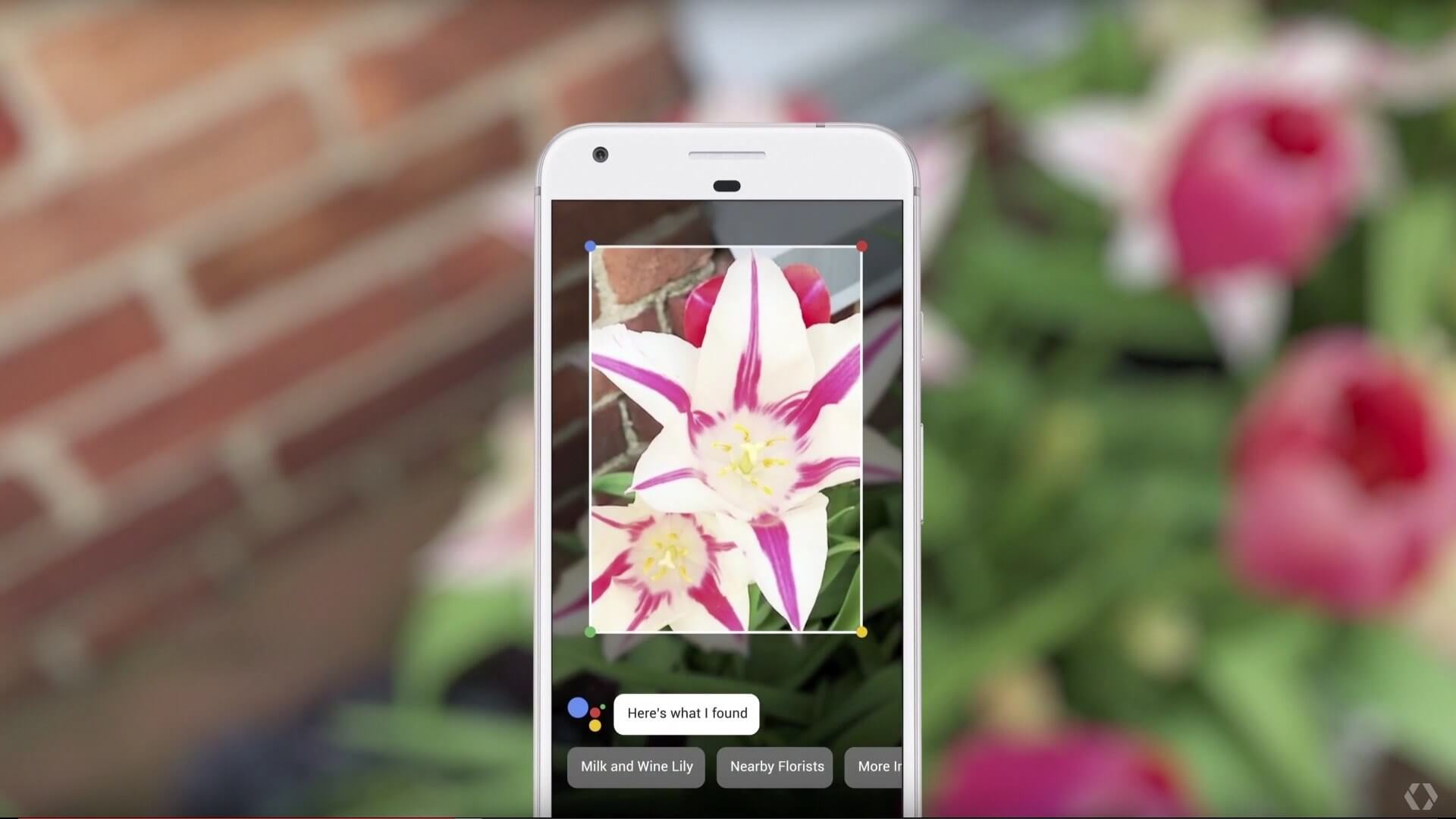

In context: Google Lens uses machine learning to do everything from telling you where to buy the flower growing in your planter to automatically connecting your phone to a Wi-Fi network when you scan an SSID sticker. At this year's Google I/O, the company announced a few new tricks coming to Lens.

Google made several announcements at its I/O 2019 event on Tuesday, including a new Hub device, improvements to Google Assistant, and the launch of the Pixel 3a and Pixel 3a XL.

The company highlighted its work on machine learning across its products, including Google Lens. Lens first launched shortly after Google I/O 2017 and gave Pixel phones the ability to detect and identify objects through the camera and offer suggestions, such as where to buy the identified item.

Today's special: Google Lens. Automatically highlighting what's popular on a menu, when you tap on a dish you can see what it looks like and what people are saying about it, thanks to photos and reviews from @googlemaps. #io19 pic.twitter.com/5PcDsj1VuQ

--- Google (@Google) May 7, 2019

Google expanded Lens to other Android devices and iPhones at last year's I/O, and this year Lens is getting some significant improvements.

Google Lens can now recognize restaurant menus, and not just in a generic sense: it can identify the specific restaurant. It then uses that information to highlight the most popular dishes right on the screen in real time. If you tap a menu item, Lens pulls up photos and reviews of it from Google Maps.

After you finish eating, Lens can help with the bill too. Just point the camera at the check, and Lens will calculate the tip and even split the bill for you if you wish.

Launching first in Google Go, our Search app for first-time smartphone users, we're working to help people who struggle with reading by giving Google Lens the ability to read text out loud. #io19 pic.twitter.com/YMVbZa5XMQ

--- Google (@Google) May 7, 2019

Speaking of food, if you prefer cooking at home and subscribe to Bon Appétit magazine, for example, you can point the camera at a recipe and Lens will bring up instructional videos showing how to cook that dish.

Not all of the Google Lens improvements were about food, even if it seemed that way. Lens can now read text out loud or translate it from another language, with the read-aloud feature launching first in Google Go. This could come in handy for reading signs in a foreign country or for helping people who struggle with reading.

Look for these features to start rolling out in Google Lens before the end of May.