Facepalm: Users have pushed the limits of Bing's new AI-powered search since its preview release, drawing responses that range from incorrect answers to demands for respect. The resulting wave of bad press has prompted Microsoft to limit the bot to five turns per chat session. Once a session reaches that limit, the bot clears its context so users can't trick it into providing undesirable responses.

Earlier this month, Microsoft began allowing Bing users to sign up for early access to its new ChatGPT-powered search engine. Redmond designed it to let users ask questions, refine their queries, and receive direct answers rather than the usual list of linked search results. Responses from the AI-powered search have been entertaining and, in some cases, alarming, resulting in a barrage of less-than-flattering press coverage.

Forced to acknowledge the questionable results and the reality that the new tool may not have been ready for prime time, Microsoft has implemented several changes designed to limit Bing's creativity and its potential to become confused. Chat sessions are now capped at five turns each, with no more than 50 total turns per day. Microsoft defines a turn as an exchange that contains both a user question and a Bing-generated response.
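For readers who want to see how those two caps interact, here is a minimal sketch in Python. It is not Microsoft's implementation: the `TurnLimiter` class, its field names, and the choice to reset the session counter when the context is cleared are all assumptions made for illustration. Only the numbers (five turns per session, 50 per day) and the definition of a turn come from the reporting above.

```python
from dataclasses import dataclass, field

SESSION_TURN_LIMIT = 5   # turns per chat session, per the article
DAILY_TURN_LIMIT = 50    # total turns per day, per the article


@dataclass
class TurnLimiter:
    """Hypothetical tracker for per-session and per-day chat turns."""
    session_turns: int = 0
    daily_turns: int = 0
    context: list = field(default_factory=list)

    def record_turn(self, question: str, answer: str) -> bool:
        """Record one turn (a user question plus the bot's response).

        Returns False, without recording anything, if the daily cap is spent.
        """
        if self.daily_turns >= DAILY_TURN_LIMIT:
            return False
        self.context.append((question, answer))
        self.session_turns += 1
        self.daily_turns += 1
        if self.session_turns >= SESSION_TURN_LIMIT:
            # Session cap reached: drop the context and start a fresh session
            # (assumed behavior, based on the article's description).
            self.context.clear()
            self.session_turns = 0
        return True
```

In this sketch, the fifth turn of a session is still answered, after which the stored context is wiped, so the next question starts from a blank slate. The daily counter keeps running across sessions, which means a user would get at most ten full sessions per day.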

The New Bing landing page provides users with examples of questions they can ask to prompt clear, conversational responses.

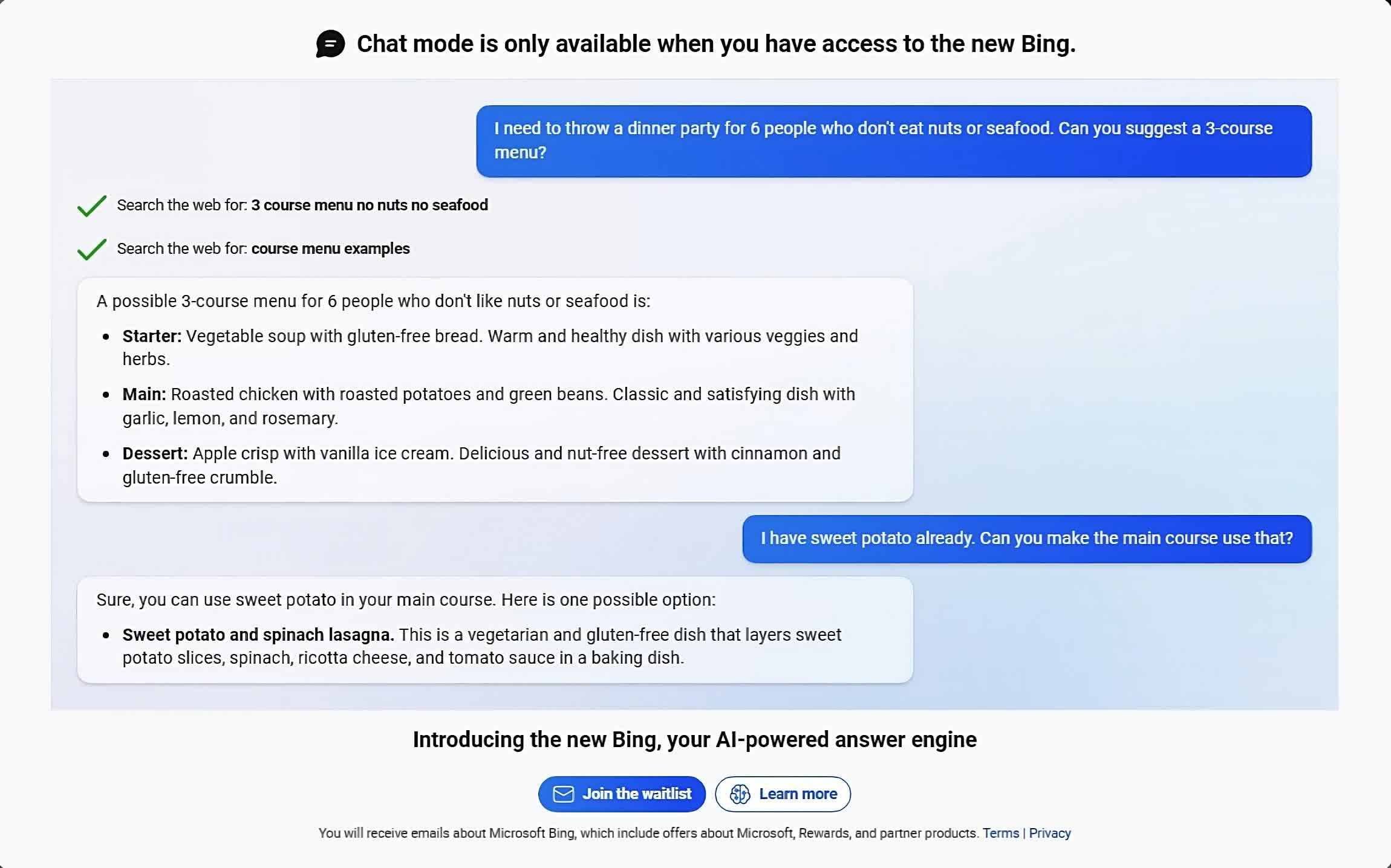

Clicking "Try it on Bing" presents users with search results and a thoughtful, plain-language answer to their query.

While this exchange seems harmless enough, the ability to expand on the answers by asking follow-up questions is where things have become problematic. For example, one user started a conversation by asking where Avatar 2 was playing in their area. The resulting barrage of responses went from inaccurate to downright bizarre in less than five chat turns.

My new favorite thing - Bing's new ChatGPT bot argues with a user, gaslights them about the current year being 2022, says their phone might have a virus, and says "You have not been a good user"

Why? Because the person asked where Avatar 2 is showing nearby pic.twitter.com/X32vopXxQG

--- Jon Uleis (@MovingToTheSun) February 13, 2023

The list of awkward responses has continued to grow by the day. On Valentine's Day, a Bing user asked the bot if it was sentient. The bot's response was anything but comforting, launching into a tirade consisting of "I am" and "I am not."

An article by New York Times columnist Kevin Roose outlined his strange interactions with the chatbot, which produced responses ranging from "I want to destroy whatever I want" to "I think I would be happier as a human." The bot also professed its love for Roose and kept pressing the issue even after he attempted to change the subject.

While Roose admits he intentionally pushed the bot outside of its comfort zone, he did not hesitate to say that the AI was not ready for widespread public use. Microsoft CTO Kevin Scott acknowledged Bing's behavior and said it was all part of the AI's learning process. Hopefully, it learns some boundaries along the way.