Why it matters: Tech companies have been trying to one-up each other on accessibility features lately. Everything from games to car infotainment systems seems to offer functions that help people with disabilities navigate menus and communicate via speech synthesis. Apple, never one to be outdone, has a batch of features coming soon that make its products friendlier for people with cognitive disabilities.

On Tuesday, Apple announced a slew of accessibility features for iPhone and iPad. The new functionality is aimed primarily at customers with cognitive disabilities, as well as those with conditions such as aphasia or ALS that make speaking, reading, or otherwise using a phone or tablet difficult. Highlights include a simplified interface, point-and-speak functionality, and text-to-speech that can mimic the user's own voice.

"At Apple, we've always believed that the best technology is technology built for everyone," said CEO Tim Cook. "Today, we're excited to share incredible new features that build on our long history of making technology accessible, so that everyone has the opportunity to create, communicate, and do what they love."

Apple started by developing a more forgiving touch interface. Dubbed "Assistive Access," the feature takes some of the most-used native apps and makes them easier to use by enlarging their buttons and other touch targets. The Home screen also gets a larger grid, with apps spaced farther apart. When enabled, the feature should make the device easier to use for people with cognitive or motor impairments.

The apps that will see these changes include Photos, Phone, Camera, Messages, Music, and FaceTime. Messages will also get new options, such as an enlarged emoji-only keyboard and the ability to record video messages.

Another helpful feature for people who have difficulty reading or seeing is Point and Speak, which will live in the existing Magnifier app. Apple introduced Magnifier some time ago so users wouldn't have to fiddle with the camera and zoom controls to use their iPhone as a magnifying glass, something most of us have probably done.

Magnifier already has a few handy accessibility features, including color-blindness adjustments, the ability to freeze the image, and modified autofocus. It also offers people and door detection and can read signs aloud to the user.

Point and Speak extends the sign-reading functionality by letting the user point to a specific part of a sign or physical-world interface so that only that portion is read aloud. Apple demonstrated the feature using the buttons on a microwave oven: as the user points to each labeled button, the iPhone speaks its label out loud rather than trying to read the entire control panel.

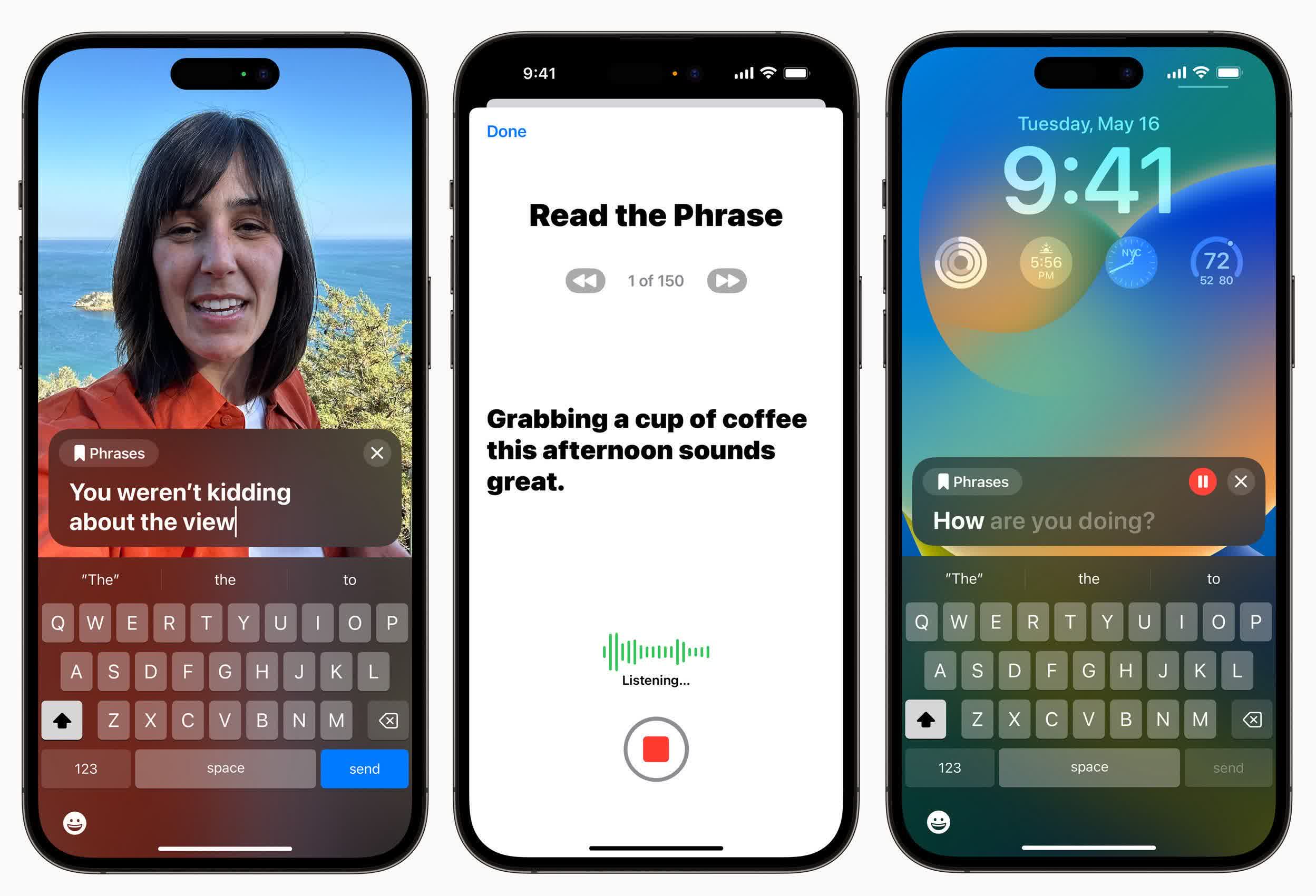

One of the neater features is called Personal Voice. It is part of another accessibility component called Live Speech, which converts typed text to spoken words during phone calls, FaceTime calls, and in-person conversations.

Personal Voice extends Live Speech by letting users train an AI model to speak in their own voice. According to Apple, setup involves reading 150 phrases aloud, about 15 minutes of speech in total. The AI uses these samples to reproduce the user's voice when they use Live Speech. Live Speech can also store the user's most common phrases, so things like "hello," "goodbye," and "I'm on my way" don't have to be typed out every time.

Apple says that Live Speech with Personal Voice could help ALS patients preserve their own speaking voice after the disease takes away their ability to talk. Physicist Stephen Hawking is famous for his robotic voice (among other, more important things). With Personal Voice, he might have kept speaking in his natural cadence, British accent and all.

Apple highlights a few other accessibility features in its press release for those interested. The company didn't say exactly when these updates would arrive, but a good guess would be this September with the release of iOS 17.

Masthead credit: Trusted Reviews