Intel 5th-gen vs. 10th-gen, but why?

You would be forgiven if you had no idea Intel even had a 5th-generation mainstream desktop processor range. It consisted of only two socketed parts, and neither was on sale for particularly long, despite being Intel's most interesting CPUs in years.

Broadwell was kind of a big deal, as it ushered in Intel's now infamous 14nm process. It was mid-2015 and the chipmaker's then-new 14nm process was very much cutting edge; we just didn't expect that Intel would still be relying on it six years later with its 11th-gen Core series processors.

The 14nm process had its own issues and was heavily delayed, just not "10nm" heavily delayed. As a result, Broadwell arrived much later than expected, forcing Intel to refresh Haswell a year after its release, and it would be another full year before 14nm Broadwell arrived on the desktop.

But it would be short-lived. Incredibly, a mere two months after the release of the Core i7-5775C and Core i5-5675C, both parts were essentially discontinued as Intel moved on to Skylake-S, pumping out a full range of 14nm Core i7, i5, i3, Pentium and even Celeron processors. Skylake also transitioned to DDR4, while Broadwell parts were limited to DDR3 on the Z97 platform.

|  | Core i7-5775C | Core i7-6700K | Core i7-8700K | Core i7-10700K | Core i7-11700K |
|---|---|---|---|---|---|
| MSRP (US$) | $366 | $339 | $359 | $374 | $399 |
| Release Date | June 2015 | August 2015 | October 2017 | May 2020 | March 2021 |
| Cores / Threads | 4/8 | 4/8 | 6/12 | 8/16 | 8/16 |
| Base Frequency | 3.30 GHz | 4.00 GHz | 3.70 GHz | 3.80 GHz | 3.60 GHz |
| Max Turbo | 3.70 GHz | 4.20 GHz | 4.70 GHz | 5.10 GHz | 5.00 GHz |
| L3 Cache | 6 MB | 8 MB | 12 MB | 16 MB | 16 MB |
| Socket | LGA 1150 | LGA 1151 | LGA 1151 | LGA 1200 | LGA 1200 |
| TDP | 65 W | 91 W | 95 W | 125 W | 125 W |

So in a way, Broadwell-DT was a failure, leaving the ultra-expensive Broadwell-E parts to push Intel's HEDT agenda forward. But in my opinion, Broadwell-DT was much more interesting than Broadwell-E, despite being limited to just 4 cores while parts like the Core i7-6950X packed 10.

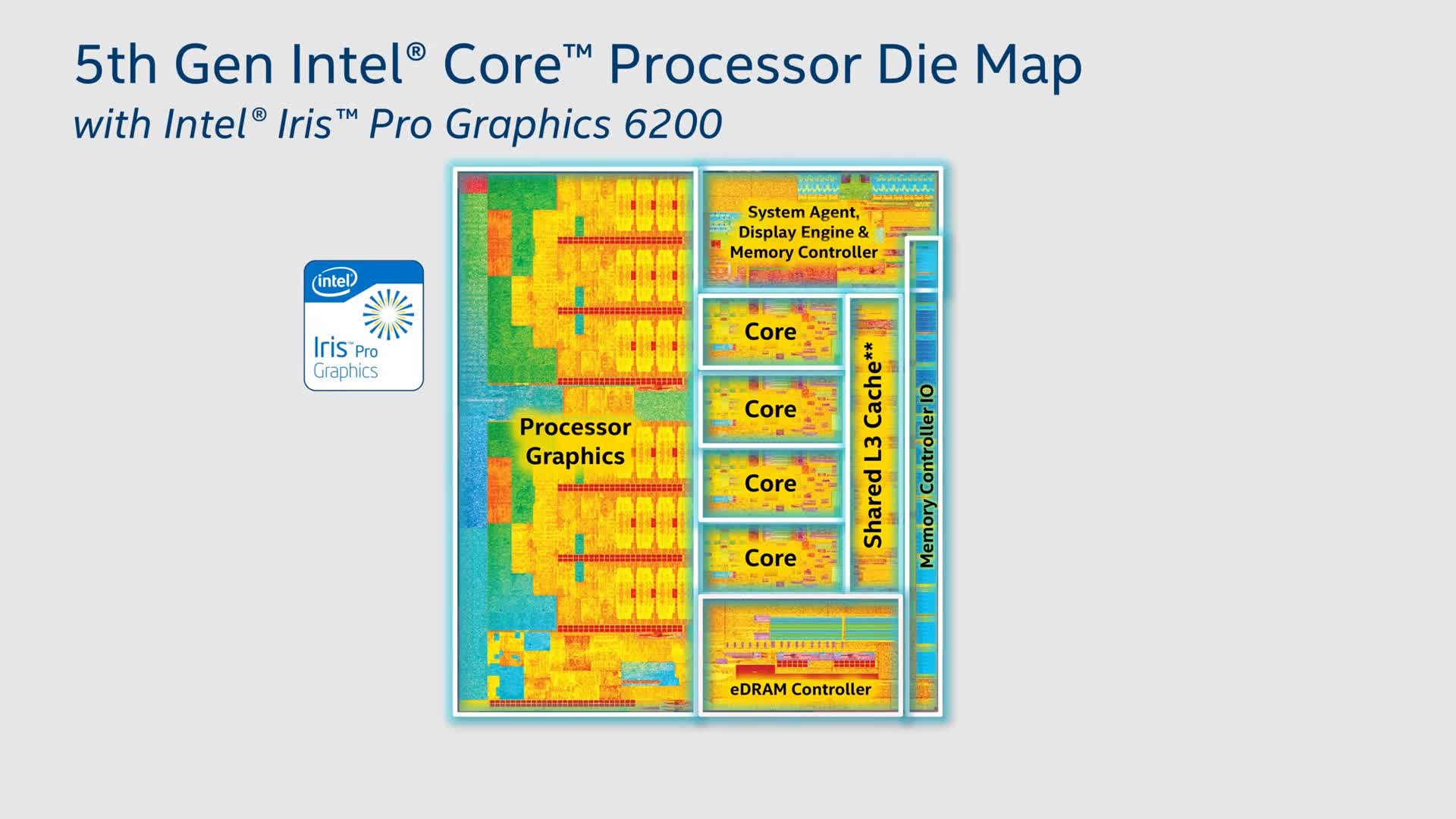

This is because Broadwell-DT featured what Intel called "Crystal Well", the codename for CPUs with on-package eDRAM, which was introduced with Haswell, though only in BGA form. The 5775C was the first socketed Crystal Well part, and it received 128MB of eDRAM that acted as an L4 cache. The intention was to feed the iGPU far more memory bandwidth to improve 3D graphics performance, but when the iGPU wasn't using it, the CPU could use it as additional cache.

We reviewed the Core i7-5775C back in 2015 and found that it was able to beat AMD's best iGPU solutions in games, sometimes by as much as 60%, though it cost at least three times more. Pricing was always going to be a big issue for any 2015 desktop CPU with a 128MB on-package memory buffer, and at $366, the 5775C likely earned Intel a lot less margin than the $339 4770K released two years earlier.

Now, given all my recent testing of Intel's 10th-gen Core series, where we compared different L3 cache capacities while holding everything else equal, such as core count, core frequency, memory frequency and timings, many asked if it would be possible to add the Core i7-5775C to the results.

I was hesitant at first because it's not exactly an apples-to-apples test: the 5775C uses slower DDR3 memory, and clocking it up to 4.5 GHz was going to be difficult. But given we had already tested the Core i3-10105F at 4.2 GHz, I thought that if I could get the 5775C to the same frequency, it would make for a really interesting comparison.

Like the Core i3 model, the 5775C packs a 6MB L3 cache, albeit paired with much older cores, but it also has the 128MB L4 cache, which is made available to the CPU cores since the iGPU sits idle when using a discrete Radeon RX 6900 XT. So just how good was the 5775C, and can it stand up to modern quad-cores like the awkwardly named Core i3-10105F?

| Year of release | Microarchitecture | Tick, Tock or Optimization | Process Node |
|---|---|---|---|
| 2019 | Comet Lake | Optimization | 14nm |
| 2017 | Coffee Lake | Optimization | 14nm |
| 2017 | Kaby Lake | Optimization | 14nm |
| 2015 | Skylake | Tock | 14nm |
| 2015 | Broadwell | Tick | 14nm |
| 2013 | Haswell | Tock | 22nm |
| 2012 | Ivy Bridge | Tick | 22nm |
| 2011 | Sandy Bridge | Tock | 32nm |
| 2010 | Westmere | Tick | 32nm |
| 2008 | Nehalem | Tock | 45nm |
| 2007 | Penryn | Tick | 45nm |
| 2006 | Conroe | Tock | 65nm |

To find out, we of course ran a series of benchmarks, but before we get to those, a few additional test notes. First, please be aware that the Core i3-10105F and Core i7-5775C ran at a slight clock speed disadvantage compared to the i5, i7 and i9 parts. This is because the highest stable frequency I could achieve with the 5775C was 4.2 GHz, while the 10105F is locked to a 4.2 GHz all-core frequency.
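To put that clock gap in perspective, here's a minimal sketch of the arithmetic. It assumes, generously, that frame rates scale at most linearly with core frequency (rarely true in games, where memory and cache also matter), so treat the result as an upper bound on the head start the 4.5 GHz parts get from frequency alone.

```python
def percent_change(new: float, old: float) -> float:
    """Relative change from old to new, in percent."""
    return (new / old - 1.0) * 100.0

TUNED_K_SKU_GHZ = 4.5  # 10600K, 10700K and 10900K as tested
BASELINE_GHZ = 4.2     # 5775C (highest stable) and 10105F (locked all-core)

# Frequency advantage of the K-SKUs over the two 4.2 GHz parts
advantage = percent_change(TUNED_K_SKU_GHZ, BASELINE_GHZ)
print(f"Clock advantage of the K-SKUs: {advantage:.1f}%")  # 7.1%
```

Note the asymmetry: measured from the other direction, the 4.2 GHz parts are clocked about 6.7% lower, because the baseline changes.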

For the 10th-gen parts, we used the Gigabyte Z590 Aorus Xtreme motherboard. I clocked the three K-SKU CPUs at 4.5 GHz, with the ring bus also set to a 45x multiplier, and used DDR4-3200 CL14 dual-rank, dual-channel memory with all primary, secondary and tertiary timings manually configured. The Core i3-10105F used the same memory configuration, but I was unable to adjust its clock frequency.

The Core i7-5775C was tested on the Asus Z97-Pro motherboard using the latest BIOS and some DDR3-2400 CL11-13-13-31 memory. We used an RX 6900 XT for testing as it's the fastest 1080p gaming GPU around. Let's check out the results.

Benchmarks

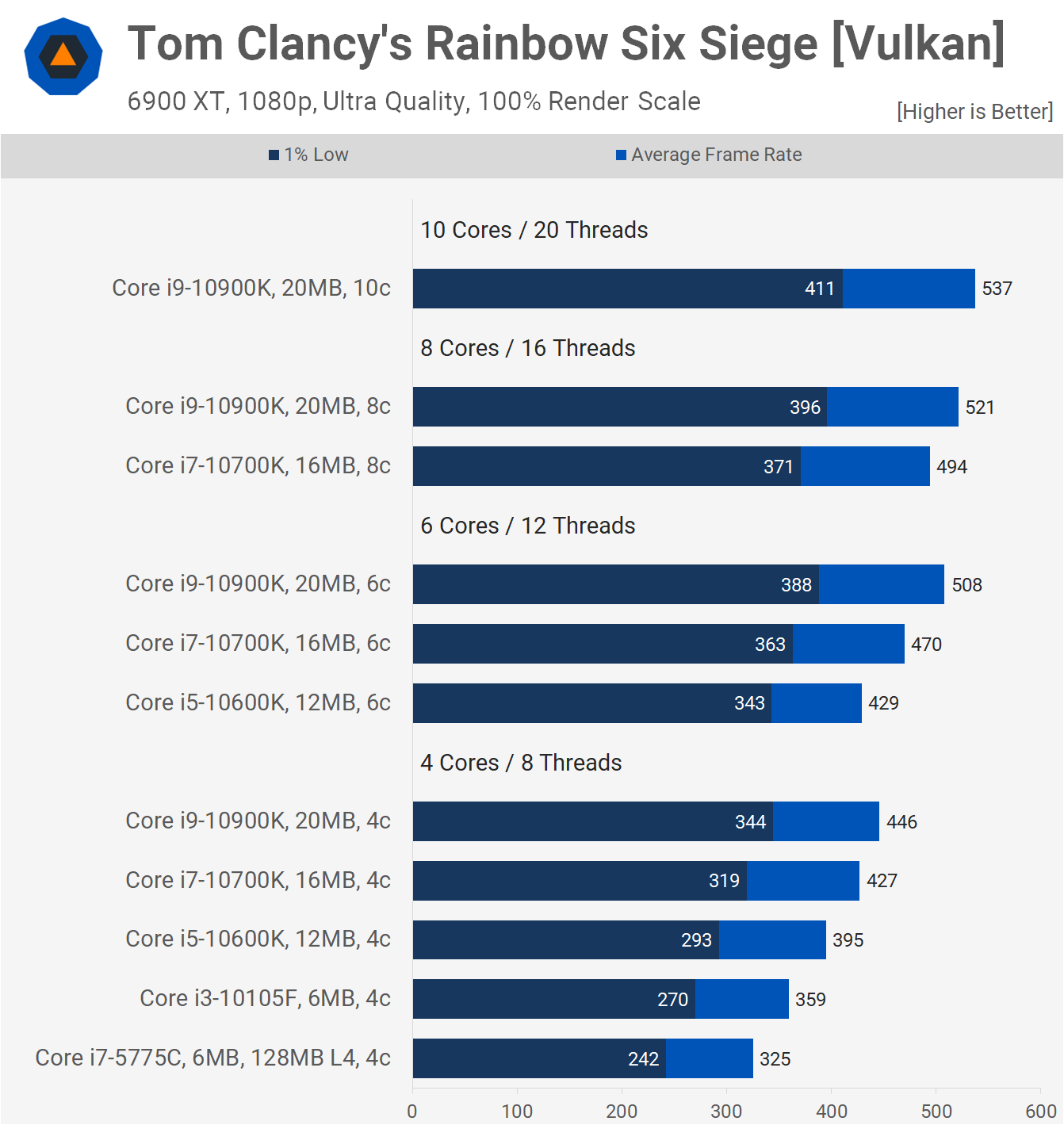

Starting with Rainbow Six Siege, the two results we want to focus on here are those of the Core i3-10105F and Core i7-5775C. The old 5th-gen Core i7 using DDR3-2400 memory pushed the 6900 XT to 325 fps on average, an impressive result when you consider that the 10105F was just 10% faster despite enjoying the benefit of much faster DDR4-3200 memory, along with numerous generations of core and architecture refinement.

What this means is that, with the same core count and operating frequency, we're looking at effectively just a 10% IPC improvement for Intel over a six-year period, using Rainbow Six Siege as the measuring stick. That's a shockingly small improvement, given that it also includes an upgrade in memory technology.
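To show just how small that is, here's the 10% figure spread over six years as a compound annual rate. This is a back-of-the-envelope sketch that treats the Rainbow Six Siege result as a stand-in for IPC, and it ignores the fact that the memory upgrade is baked into the number.

```python
def annualized_rate(total_gain: float, years: float) -> float:
    """Compound annual rate that yields total_gain (0.10 means +10%) over `years`."""
    return (1.0 + total_gain) ** (1.0 / years) - 1.0

# Rainbow Six Siege: 10105F roughly 10% faster than the 5775C at 4C/8T, 4.2 GHz
rate = annualized_rate(0.10, 6)
print(f"{rate * 100:.1f}% per year")  # 1.6% per year
```

Compounding is used rather than simply dividing 10% by six, since each year's gain builds on the previous one; at these small numbers the two approaches land very close together anyway.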

Of course, Intel has added more cores and cache since then, but if we stick with the 4-core configurations, we see that the 10600K is 22% faster, the 10700K 31% faster and the 10900K 37% faster, and of course those margins widen further once you enable all of the cores those parts support.

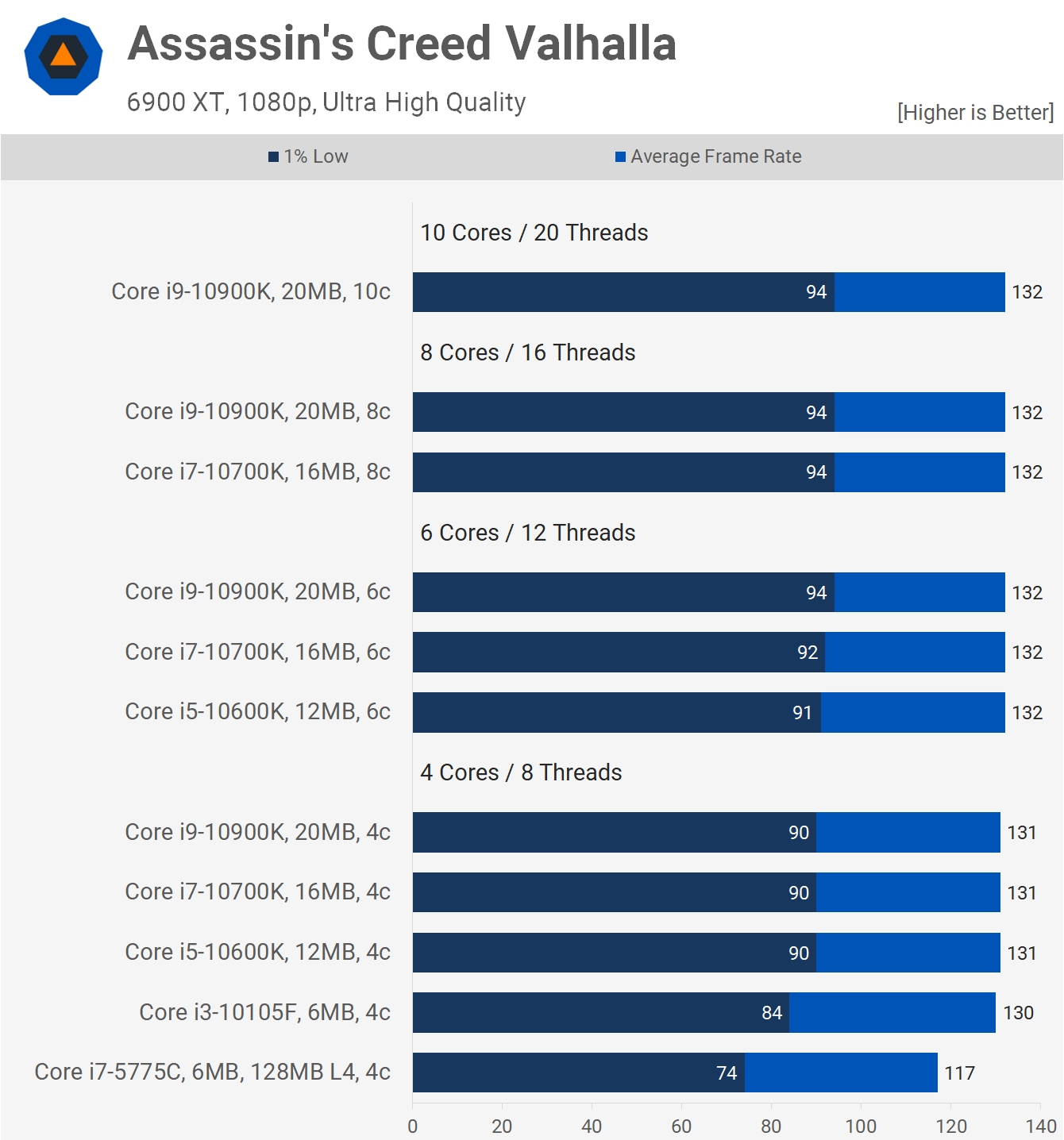

The Assassin's Creed Valhalla data isn't terribly useful, as the 10th-gen parts are all heavily GPU limited. That wasn't the case for the 5775C, which trailed the 10th-gen Core i3 by 10% in average frame rate and by 12% in 1% low performance. Still, it's difficult to make any real performance claims based on this data, so let's move on.

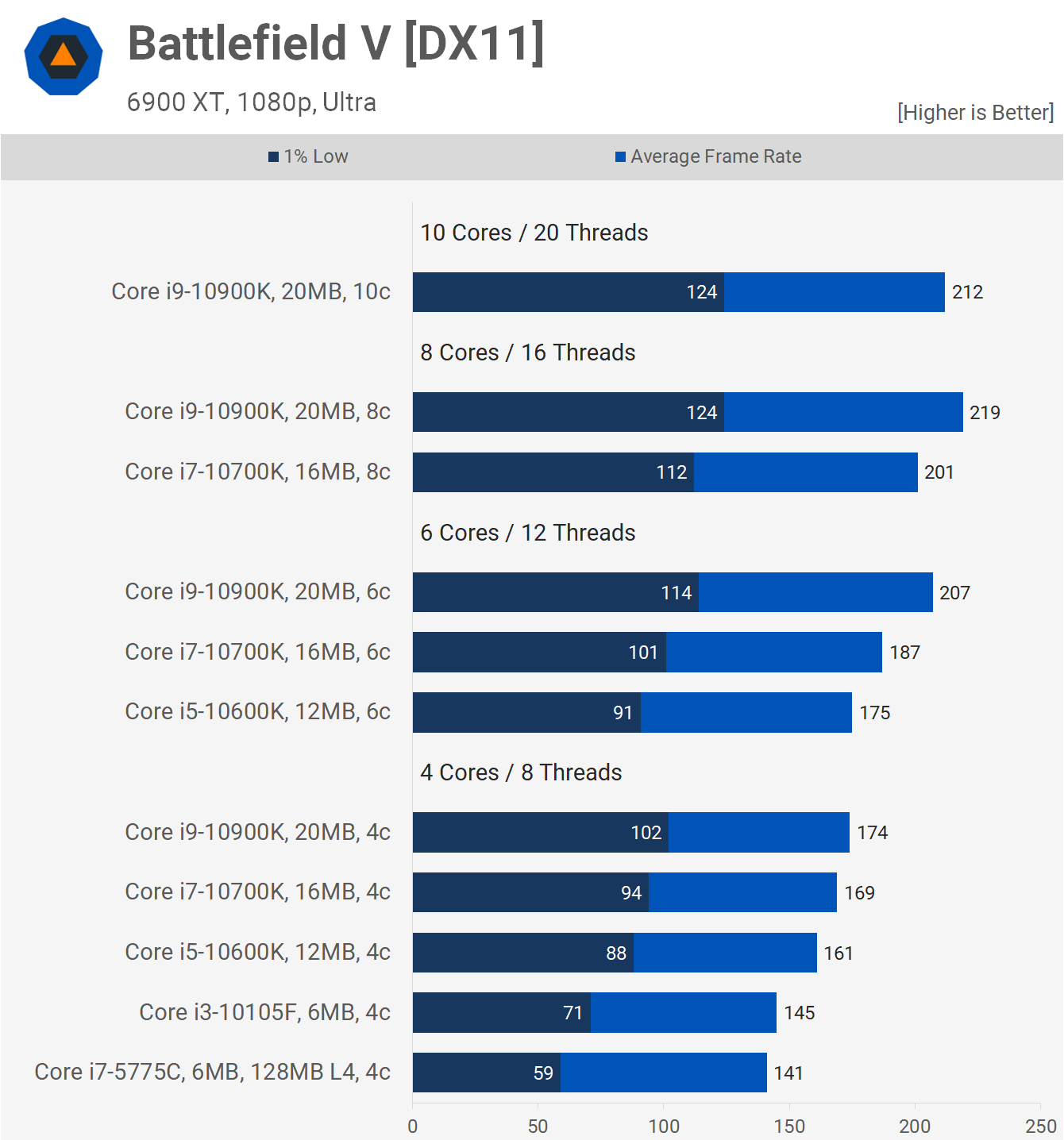

The Battlefield V results are interesting, as the 5775C wasn't a great deal slower than the 10105F, at least when looking at the average frame rate, where the 5th-gen part was just 3% slower. However, we saw a much larger 17% drop in 1% low performance, which I'd say is largely down to the difference in memory and cache bandwidth, something we'll look at a little later on.

Moving on to F1 2020, the 10th-gen Core i3 really isn't offering much in the way of extra performance over the aging 5775C: we're looking at an 8% improvement in average frame rate and an 11% boost to 1% low performance. Here, Intel has achieved its biggest performance gains over the years simply by adding more L3 cache, as seen with the 10600K. It's only a 13% performance uplift, but that's significant given the 10105F and 10600K are based on the exact same CPU architecture.

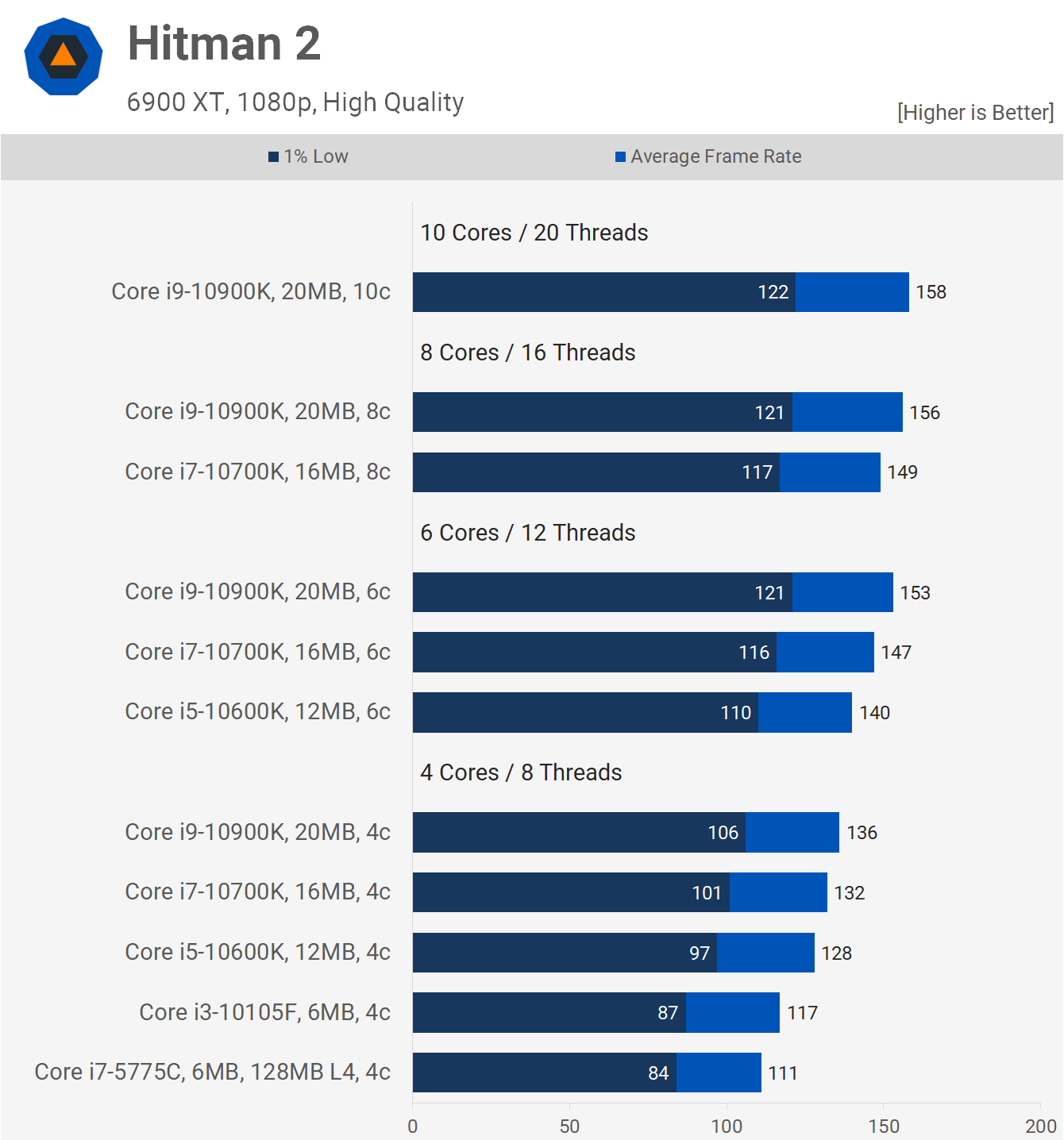

Hitman shows very little progress for Intel over the past six years. The 10th-gen Core i3 was just 5% faster than the 5775C, and again it's the doubling of L3 cache that has led to the biggest performance improvement, as the 10600K offered a 9% boost over the 10105F and an 11% increase in 1% low performance.

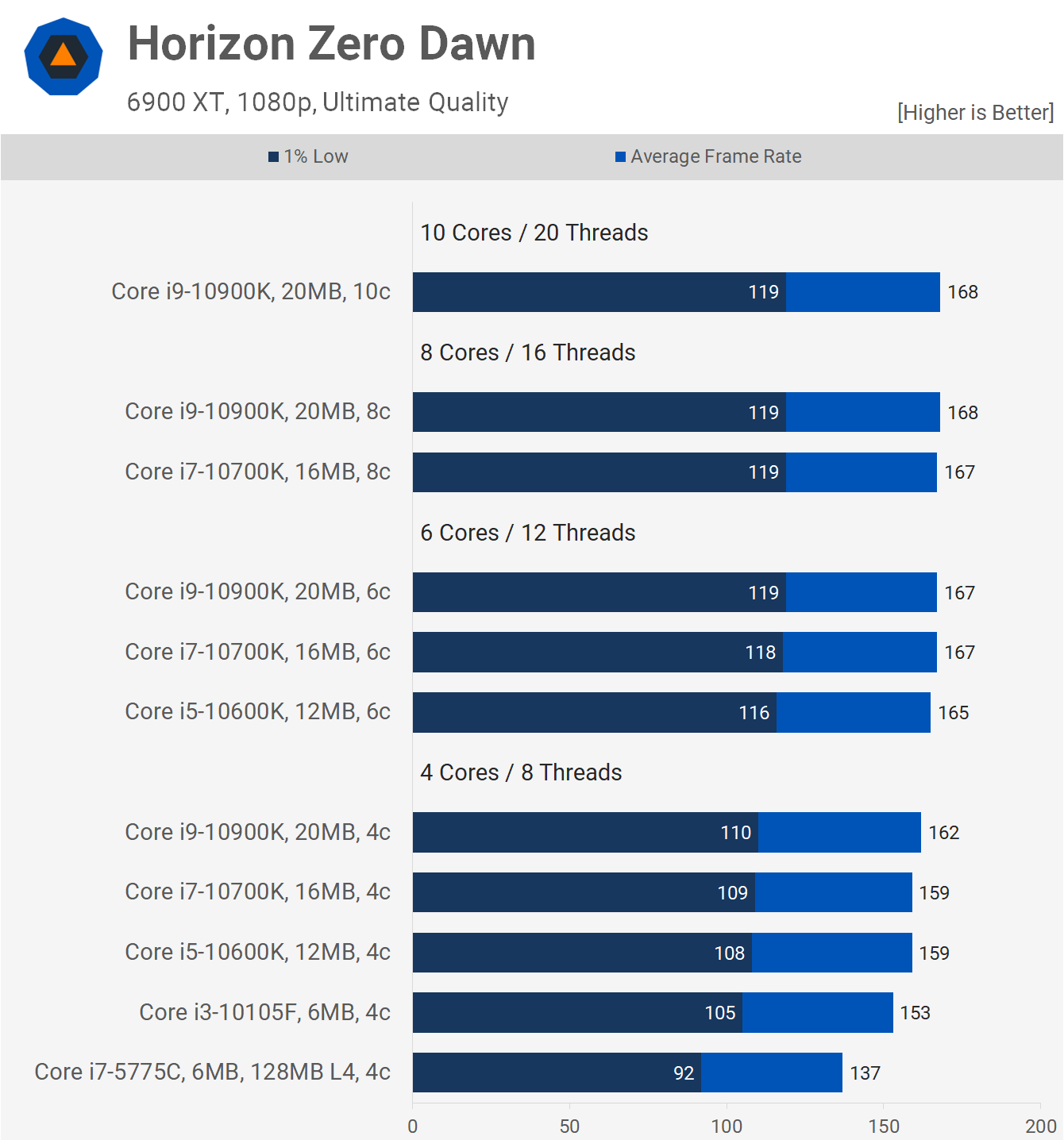

Next we have Horizon Zero Dawn, and I suspect memory bandwidth is at a premium here, as the 10th-gen Core i3 was up to 12% faster, and 14% faster when comparing the 1% low data. This is one of the bigger performance uplifts we've seen for 10th-gen over 5th-gen.

We're also looking at an 11% increase in performance for the 10105F over the 5775C. Then if we take what would be Intel's fastest quad-core, the 10900K with its 20MB L3 cache and just 4 cores active, we see that it's 24% faster than the 5775C. That's a decent uplift, but probably a lot less than you'd expect to see after more than half a decade.

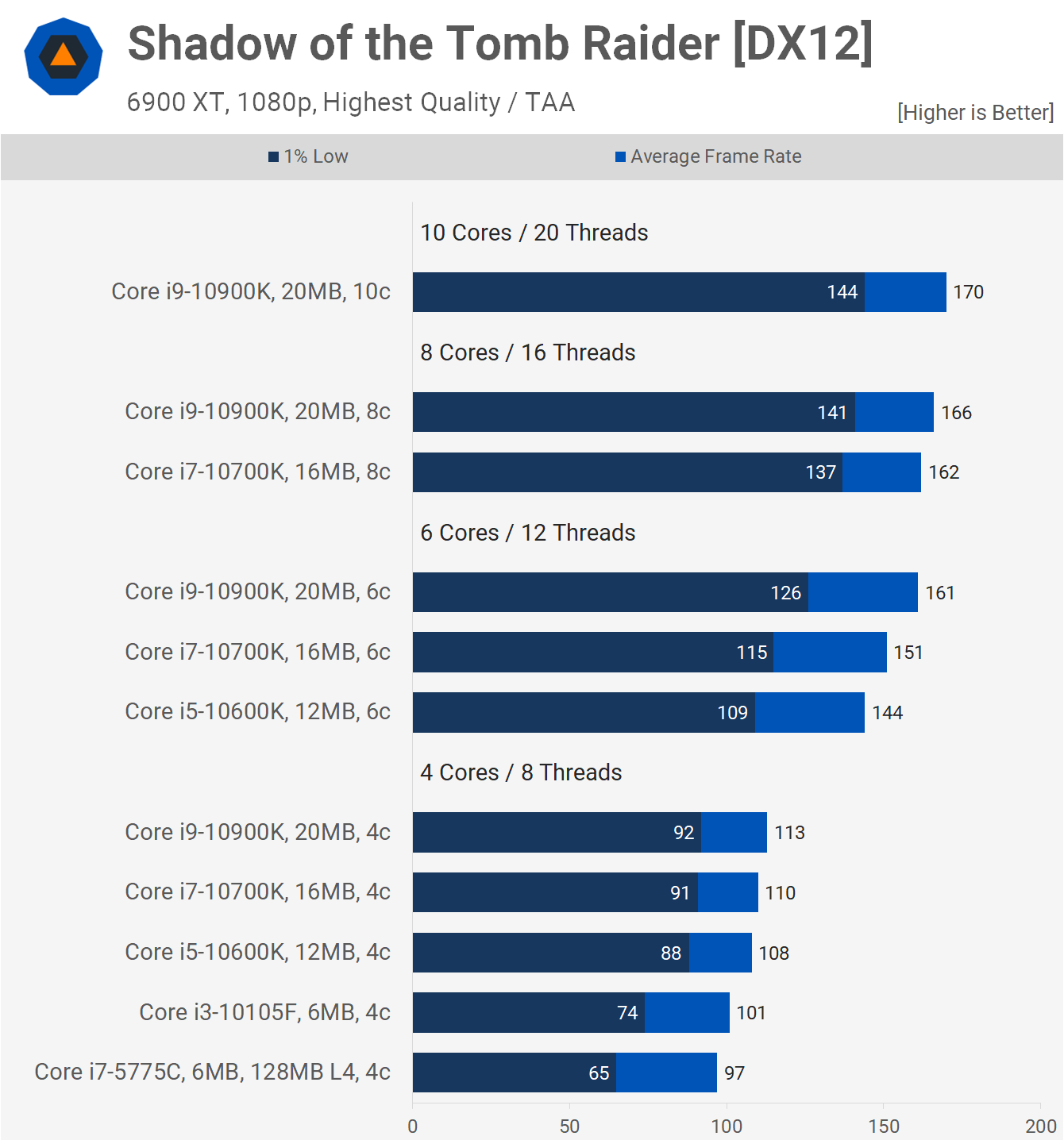

Shadow of the Tomb Raider has always been rough on quad-cores, even those with 8 threads, especially in the village section that we use for testing. That said, with powerful enough cores the experience can be quite good, as seen with the 4-core 10600K, 10700K and 10900K configurations. Looking at the 10105F, we see a 16% drop in 1% low performance from the 10600K, while the 5775C is a further 12% slower.

So if we home in on the 5775C vs. 10105F comparison, we see that over the last six years Intel has improved performance by up to 14%, based on the 1% low data. Not amazing, but certainly better than most of the other results we've seen so far.
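Because "X% slower" and "Y% faster" use different baselines, the 5775C's 12% deficit (measured against the 10105F) becomes a slightly larger gain when measured from the 5775C's side. A quick sketch of that conversion, using the Shadow of the Tomb Raider 1% low figure, shows where the roughly 14% comes from:

```python
def slower_to_faster(percent_slower: float) -> float:
    """Convert 'A is X% slower than B' into 'B is Y% faster than A'."""
    remaining = 1.0 - percent_slower / 100.0  # A's performance as a fraction of B's
    return (1.0 / remaining - 1.0) * 100.0

gain = slower_to_faster(12.0)
print(f"10105F vs 5775C (1% low): +{gain:.1f}%")  # +13.6%
```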

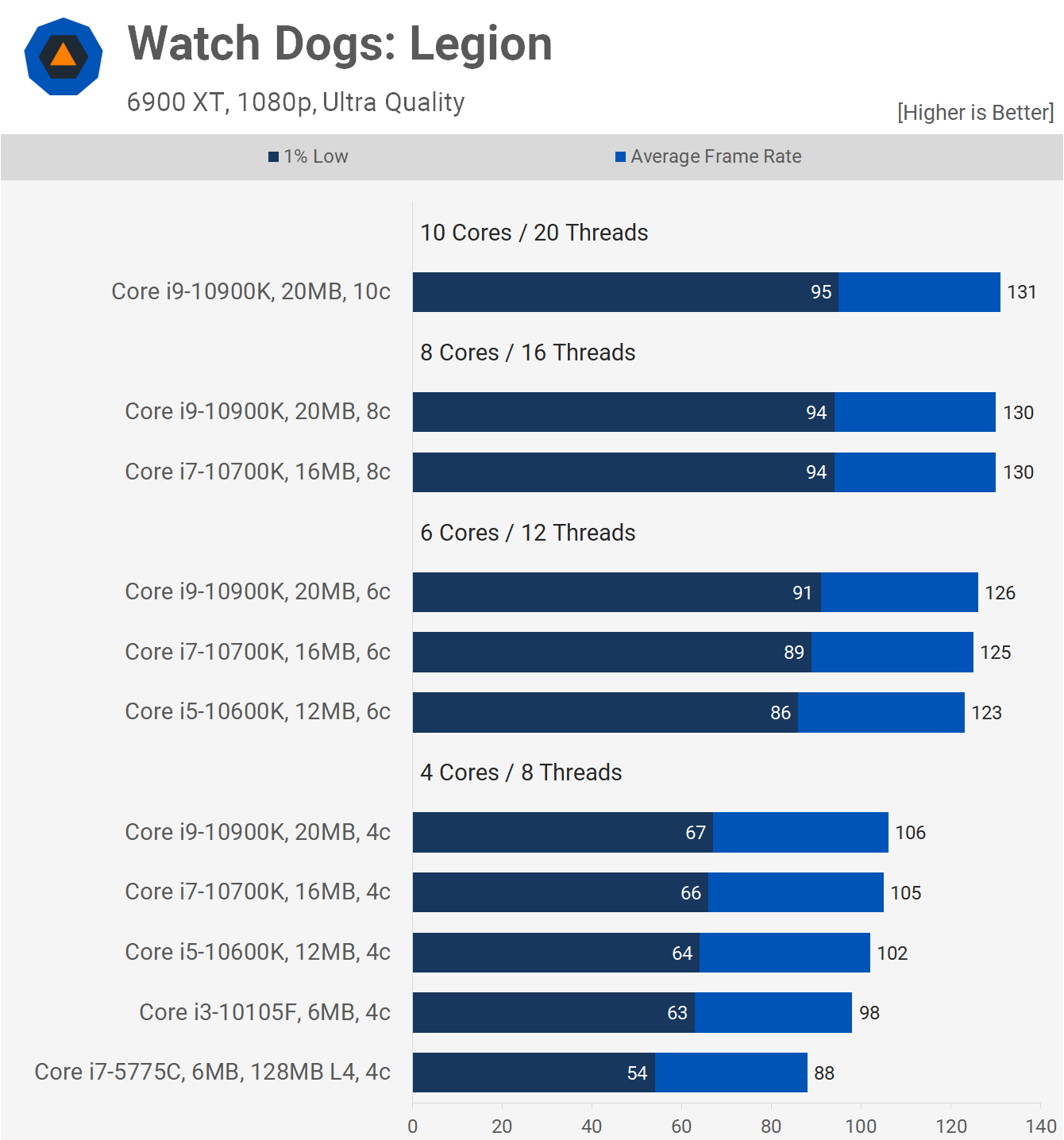

The last game we're going to look at is Watch Dogs Legion, and here the 10105F was 11% faster than the 5775C in average frame rate and 17% faster when looking at the 1% low data. So once again, not a huge or even significant performance increase for what amounts to six years of progress.

Cache Bandwidth

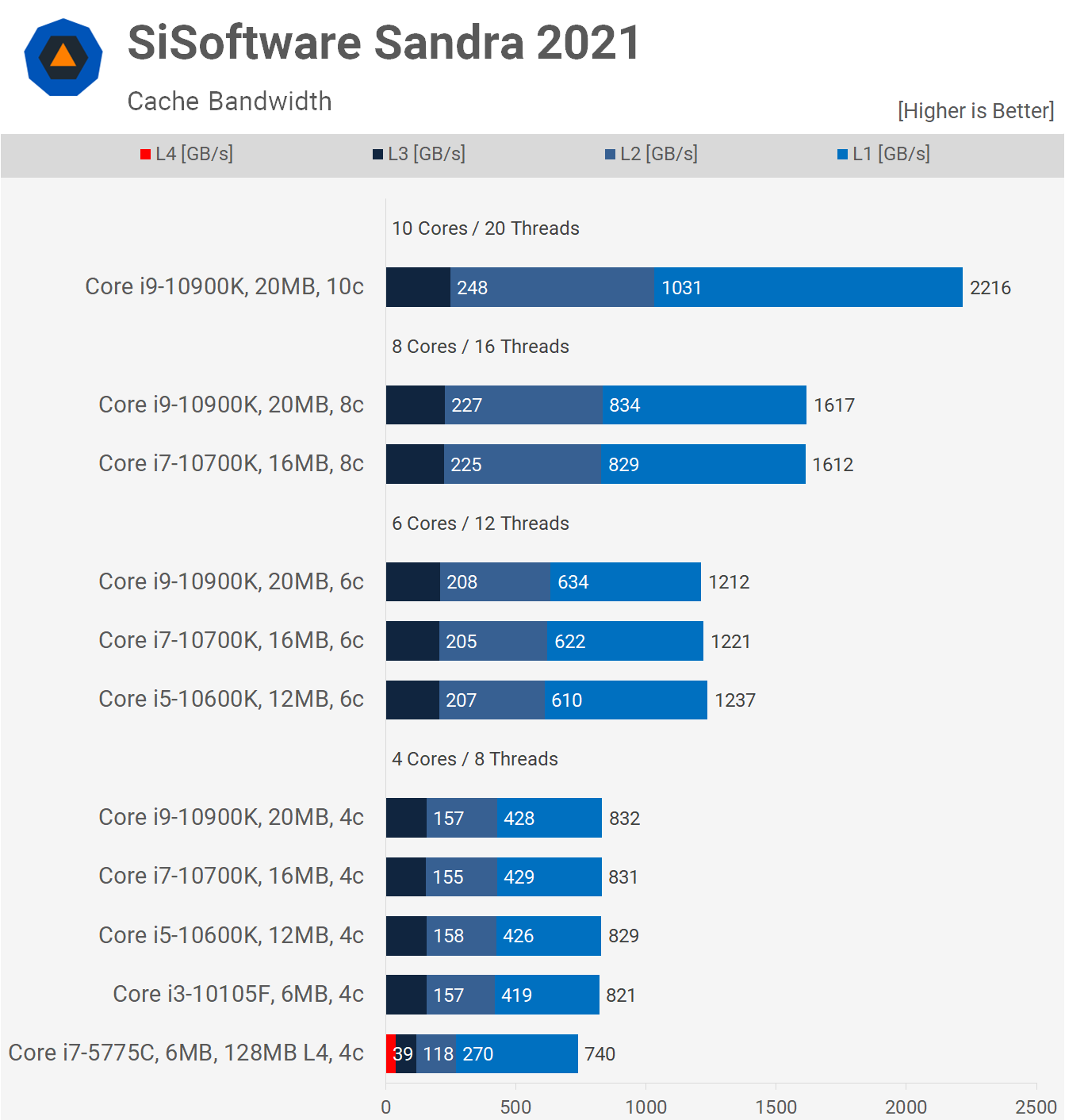

Before wrapping up the testing, here's a look at cache bandwidth. Please also note that all 10th-gen configurations enjoyed a memory bandwidth of 38 GB/s with DDR4-3200, as measured using the SiSoftware Sandra 2021 memory bandwidth test rather than AIDA64, which gives higher readouts.

I haven't bothered with a memory bandwidth graph because the 11 tested configurations produced just two different results. Again, the 10th-gen parts all measured 38 GB/s, regardless of model or core count, while the Core i7-5775C was limited to 30 GB/s using DDR3-2400 memory. That means the newer 10th-gen CPUs enjoyed a 27% increase in memory bandwidth, quite a substantial advantage.
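For context, the theoretical peak of a DRAM setup is the transfer rate multiplied by 8 bytes per 64-bit channel and by the number of channels. The sketch below assumes both platforms ran dual-channel (stated for the DDR4 setup; for the 5775C, the measured 30 GB/s is well above the 19.2 GB/s single-channel DDR3-2400 peak), compares those peaks with the measured Sandra figures, and reproduces the 27% gap:

```python
def peak_bandwidth_gbs(mega_transfers: int, channels: int = 2, bus_bytes: int = 8) -> float:
    """Theoretical peak DRAM bandwidth in GB/s (decimal) for 64-bit channels."""
    return mega_transfers * 1e6 * bus_bytes * channels / 1e9

configs = {
    # name: (transfer rate in MT/s, measured Sandra 2021 result in GB/s)
    "DDR4-3200 (10th-gen)": (3200, 38.0),
    "DDR3-2400 (5775C)": (2400, 30.0),
}

for name, (mts, measured) in configs.items():
    peak = peak_bandwidth_gbs(mts)
    print(f"{name}: peak {peak:.1f} GB/s, measured {measured:.0f} GB/s "
          f"({measured / peak:.0%} of peak)")

advantage = (38.0 / 30.0 - 1.0) * 100.0
print(f"10th-gen measured advantage: {advantage:.0f}%")  # 27%
```

This prints peaks of 51.2 GB/s and 38.4 GB/s, so both platforms reach roughly three quarters of their theoretical bandwidth in this test (74% and 78%); the 10th-gen advantage comes from the faster memory rather than a more efficient controller.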

Now, when looking at cache bandwidth there are a few interesting things to note. First, L1 and L2 are per-core caches, so their bandwidth figure is effectively multiplied by core count. We therefore get our best comparison with 4 cores active, and here all 10th-gen models are much the same. What we can see is that Intel improved L1 cache bandwidth by 11% from the 5th-gen architecture to the 10th, and L2 cache bandwidth by a much more substantial 55%.

Then we see a 33% improvement in L3 cache bandwidth when comparing the 5775C and 10105F. So although the capacity is the same, the bandwidth has been radically improved.

Finally, we see that while useful, the eDRAM L4 cache's bandwidth isn't huge. At just 39 GB/s, it's not much greater than the 38 GB/s the 10th-gen models achieve when accessing system memory, and it's around 4x lower than the bandwidth of the 10105F's L3 cache. That probably explains why back in 2015 I found the 5775C was only around 10% faster than the 4770K when compared clock-for-clock in a range of application benchmarks.

Wrap Up

Being stuck on its 14nm process through what seems like an endless number of "pluses", Intel has obviously made slower progress over the years than expected. In a way, it's impressive to see just how much Intel has been able to squeeze out of the 14nm process, but on the other hand, you'd normally expect to see significantly more progress six years later.

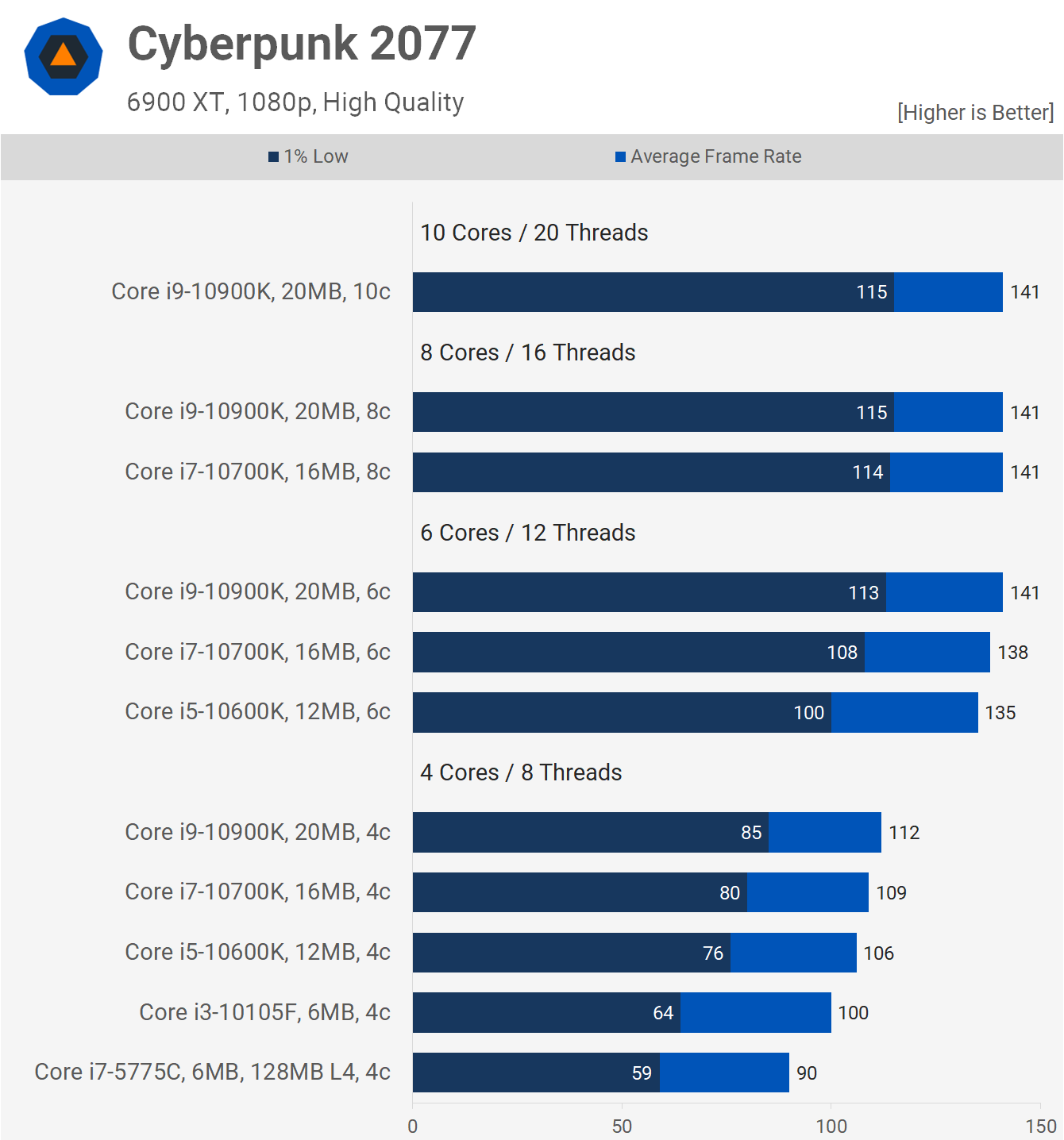

If you had told me back in 2015 that by 2021 Intel's latest and greatest CPUs would be only around 10% faster in games at the same core count and clock speed, I doubt I would have believed you, more so considering that at the time I was using a GeForce GTX 980 Ti for benchmarking, whereas today's 6900 XT is nearly 3x faster.

Furthermore, if you had also told me that by 2021 AMD would be dominating Intel in the mainstream and high-end desktop markets, as well as the server market, I would have passed out from laughter, so definitely don't listen to my long-term predictions. Not that I make them, but if I do, hit ALT+F4.

Now to be fair, Intel has taken some good steps forward since the 5th-gen Core i7 by adding more cores, and while that has seen power usage skyrocket, so too has performance (and price). Certainly not as much as Intel would have liked, but the 10900K and 11900K are much faster than the 5775C. They're also much bigger dies, especially the 11900K, but as we've noted numerous times, that's because they all use the same 14nm process, or at least a variation of it.

And so, that's how the Core i7-5775C compares against more modern 10th-gen models, and we've gotta say it's held up really well, especially within a 4-core bubble. That L4 cache is not nearly as handy as you'd think, at least not for CPU performance, though it's certainly very handy for integrated GPU performance, which would otherwise be heavily limited by the bandwidth of the dual-channel DDR3 system memory.

And that's going to do it for this strange look back at the Core i7-5775C. We hope you enjoyed this "for science" hardware feature, and we'll be back with more.