Today, we're taking our first look at the 16GB version of the much-loved GeForce RTX 4060 Ti. And it must be much-loved: you all can't stop talking about your feelings towards the 4060 Ti, and we can't stop benchmarking it, so we must all love it.

Despite our fondness for the RTX 4060 Ti, even we admit that just 8GB of VRAM isn't quite enough for a $400 graphics card in mid-2023 [sarcasm over]. We'd want a minimum of 12GB, with 16GB being the ideal amount of memory for a mid-range GPU. Fortunately, Nvidia has considered this and agrees. They have now released a 16GB model of this GPU, charging a steep $100 premium for an extra 8GB of VRAM that the card should have had all along.

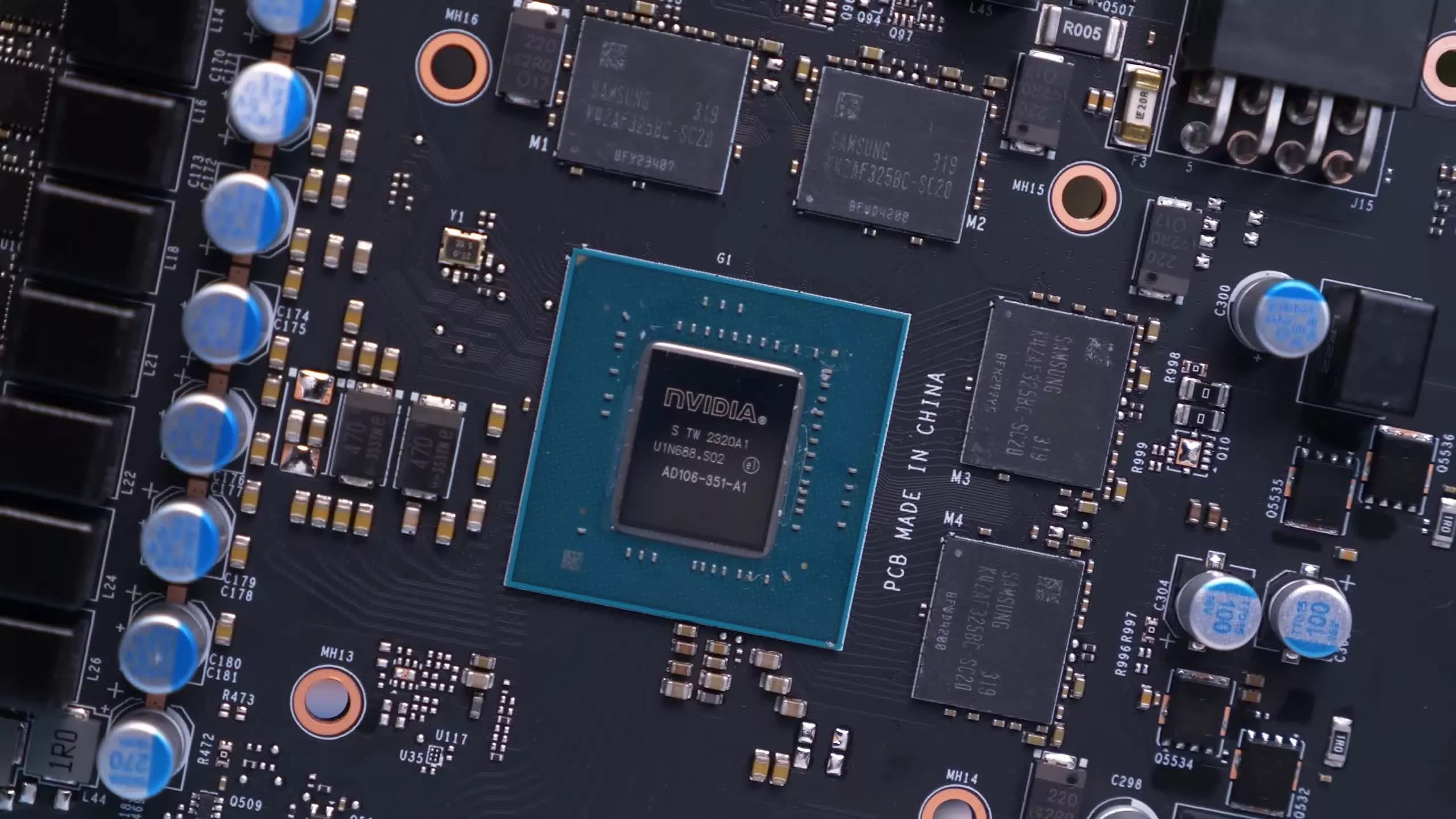

But why did the RTX 4060 Ti initially come with only 8GB of VRAM, and how has Nvidia managed to double that to 16GB? The problem, as it often is, lies in the relatively narrow 128-bit memory bus. Each GDDR6 memory chip normally connects to its own 32-bit memory controller, so a 128-bit bus can accommodate only four of these chips.

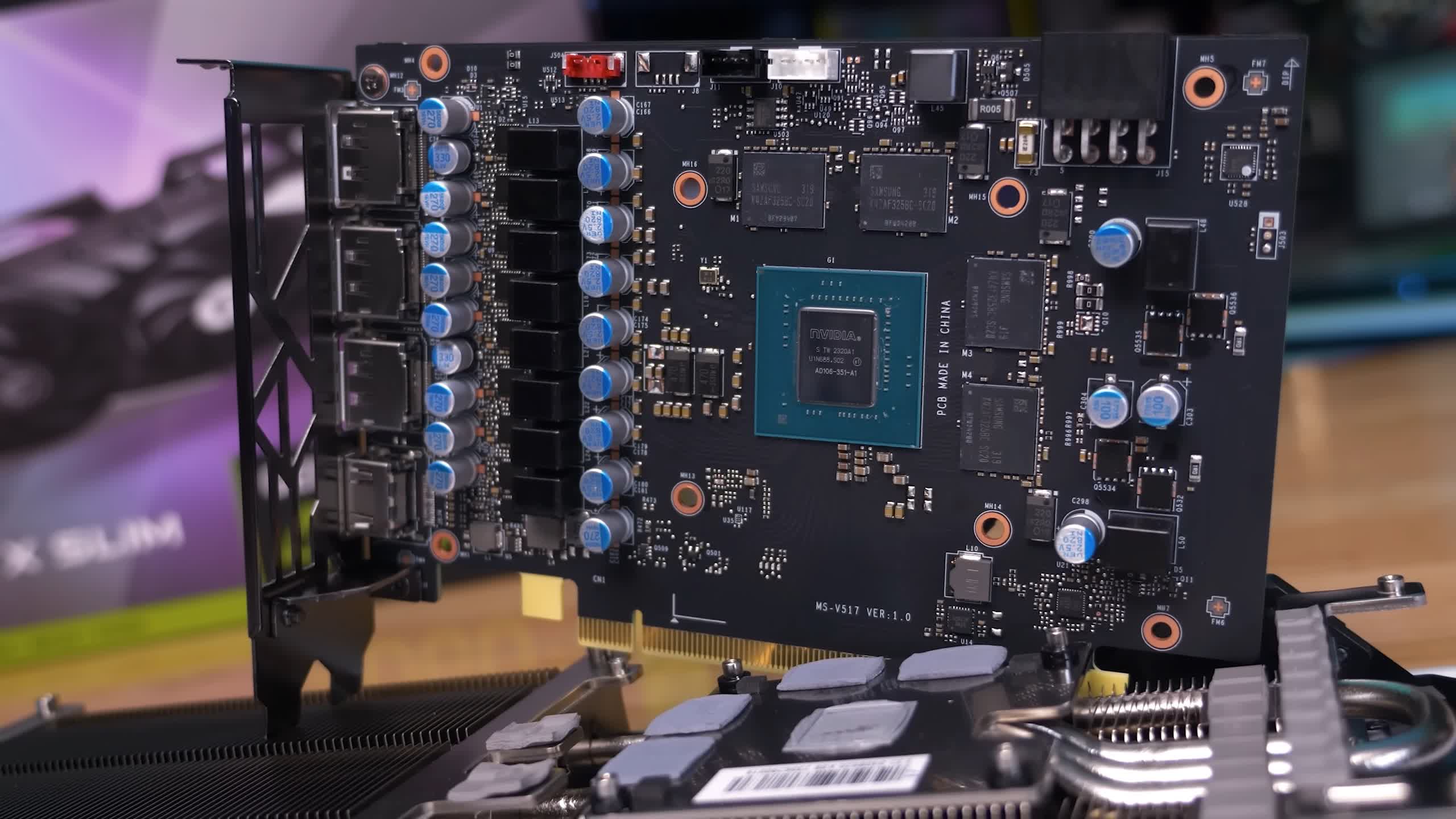

For 8GB, Nvidia had to use four 2GB chips, the highest-density GDDR6 chips available. To reach 16GB, they can't simply switch to 4GB GDDR6 chips, because those don't exist. Instead, they have to use a clamshell configuration, which requires a complete PCB redesign.

The clamshell configuration allows two memory chips to share a single 32-bit memory controller, with each chip using half of that interface. However, this means not all of the memory chips can be placed on the front of the PCB: half of them must be positioned on the back, directly opposite the chips on the front, hence the term "clamshell."
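The capacity arithmetic behind the 8GB and 16GB models can be sketched in a few lines. This is a simplified model, not Nvidia's design logic: it assumes one chip per 32-bit controller in a standard layout and two per controller in clamshell, and the function name `vram_config` is hypothetical.

```python
# Simplified, hypothetical model of how bus width, chip density, and
# clamshell mode determine GDDR6 VRAM capacity.

CONTROLLER_WIDTH_BITS = 32  # a GDDR6 chip normally occupies one 32-bit controller


def vram_config(bus_width_bits: int, chip_capacity_gb: int, clamshell: bool) -> tuple[int, int]:
    """Return (number_of_chips, total_capacity_gb) for a GDDR6 layout.

    Standard layout: one chip per 32-bit controller.
    Clamshell layout: two chips share each controller (each using half of
    the 32-bit interface), doubling chip count and capacity while the
    total bus width stays the same.
    """
    if bus_width_bits % CONTROLLER_WIDTH_BITS != 0:
        raise ValueError("bus width must be a multiple of 32 bits")
    controllers = bus_width_bits // CONTROLLER_WIDTH_BITS
    chips_per_controller = 2 if clamshell else 1
    chips = controllers * chips_per_controller
    return chips, chips * chip_capacity_gb


# RTX 4060 Ti: 128-bit bus populated with 2GB GDDR6 chips
print(vram_config(128, 2, clamshell=False))  # (4, 8): the 8GB model
print(vram_config(128, 2, clamshell=True))   # (8, 16): the 16GB model
```

Note that the bus width stays at 128 bits in both cases; clamshell adds chips and capacity, not extra memory channels.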

When asked why the 16GB version of the 4060 Ti costs $100 more than the standard 8GB model, Nvidia told us that the clamshell method of expanding memory is quite complicated and therefore costly. That, coupled with the cost of four additional 2GB chips, is how they arrived at the $100 increase. It seems a little far-fetched to us, but either way, Nvidia is charging $500 for the RTX 4060 Ti 16GB, and that's likely going to be a major problem for this product.

Besides the extra VRAM, there's no other difference between the 8GB and 16GB versions of the RTX 4060 Ti. This is both good and bad. It's good in the sense that they share the same product name, so they should be the same. However, it's bad in the sense that for most of today's games, performance will be the same.

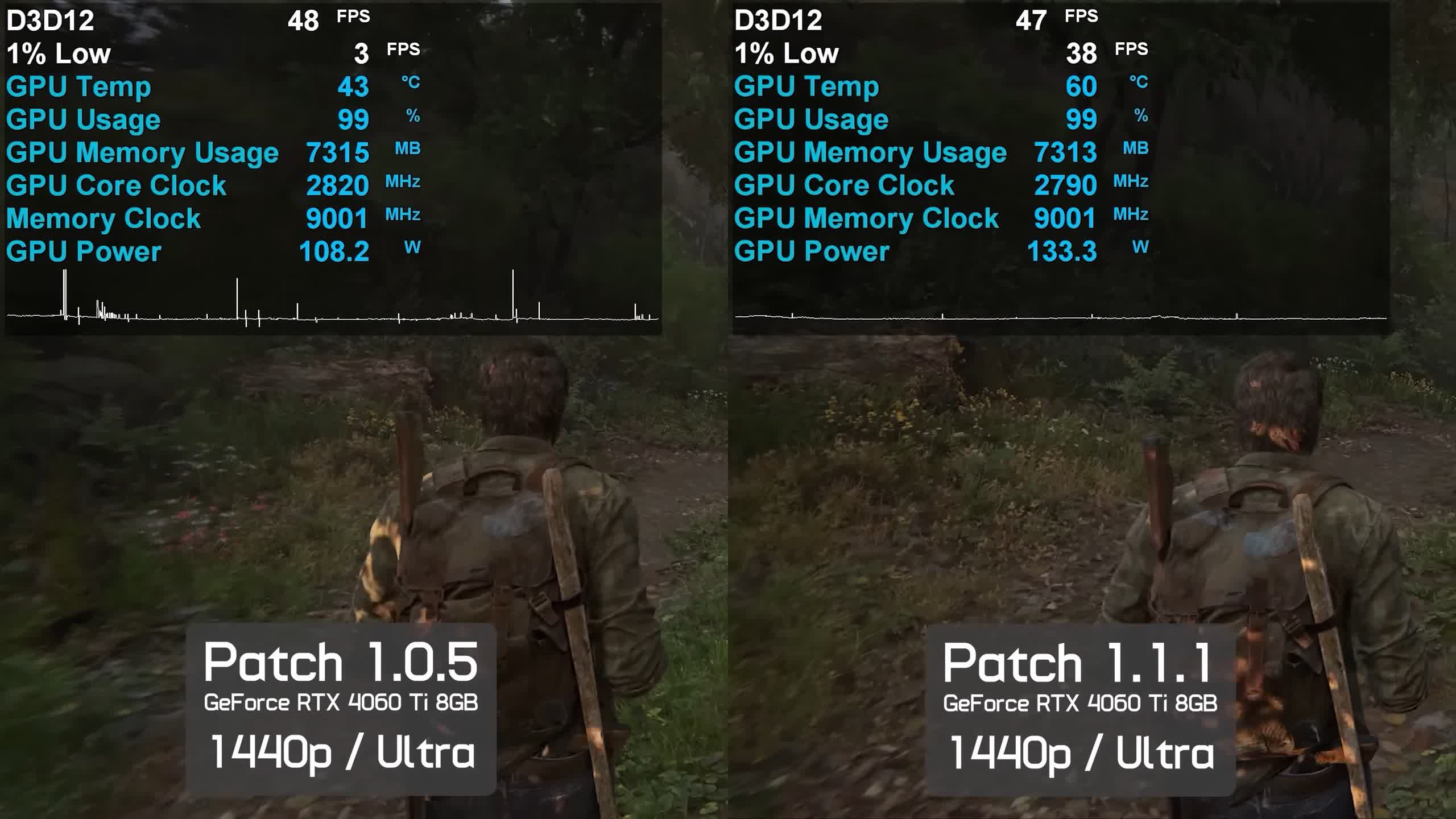

Ideally, a $400 product like the 8GB RTX 4060 Ti should come with a larger VRAM buffer than what we got seven years ago on the $380 GTX 1070, and for good reason: ample VRAM ensures the latest and greatest games run as they should. Many of this year's new releases, such as The Last of Us Part 1, ran well on 16GB graphics cards like the Radeon RX 6800 while struggling on 8GB cards.

The game has received about a dozen patches since and is in much better condition. However, the release of unoptimized games is nothing new. It's been happening for decades and will continue to happen.

It's also clear that games are moving beyond 8GB of VRAM, as game developers have publicly stated they're tired of optimizing high-end settings for 8GB cards. Future games are likely to treat 8GB graphics cards as low-quality 1080p solutions.

Consequently, buying an expensive graphics card today with just 8GB is almost certainly going to lead to disappointment. We also don't want future games to be held back or limited by 8GB graphics cards. Yes, we want them to work and scale well on 8GB cards, but we also want to see high and ultra settings offering a next-level visual experience with textures that take advantage of 16GB and larger memory buffers.

Therefore, a 16GB version of the RTX 4060 Ti is a good thing, but pricing remains a big issue. As it stands, Nvidia's most affordable current-generation GPU offering more than 8GB of VRAM costs $500. That's an absurd situation to find ourselves in, in mid-2023.

Interestingly, Nvidia seems to recognize the shortcomings of the RTX 4060 Ti. They know the 8GB version is a weak offering at $400, and they know you know, because you haven't bought it. They also know the 16GB version is a complete joke and likely regret even making it, as evidenced by the fact that there was no review program for the 16GB model, official or otherwise.

There's no Founders Edition version either, and apart from the official announcement a few months back, Nvidia has made no mention of the 16GB version. It's not just Nvidia who recognizes the product's limitations; their partners are all painfully aware, with some yet to release a 16GB version, and none of them were willing to send us a sample.

We were among the few who ordered one when they went on sale a few days ago, picked it up on release day, and got straight to testing. As a result, we've only had a few days with this card, so think of this as more of a preview. To be fair, though, we think we've learned all we need to from the testing done so far.

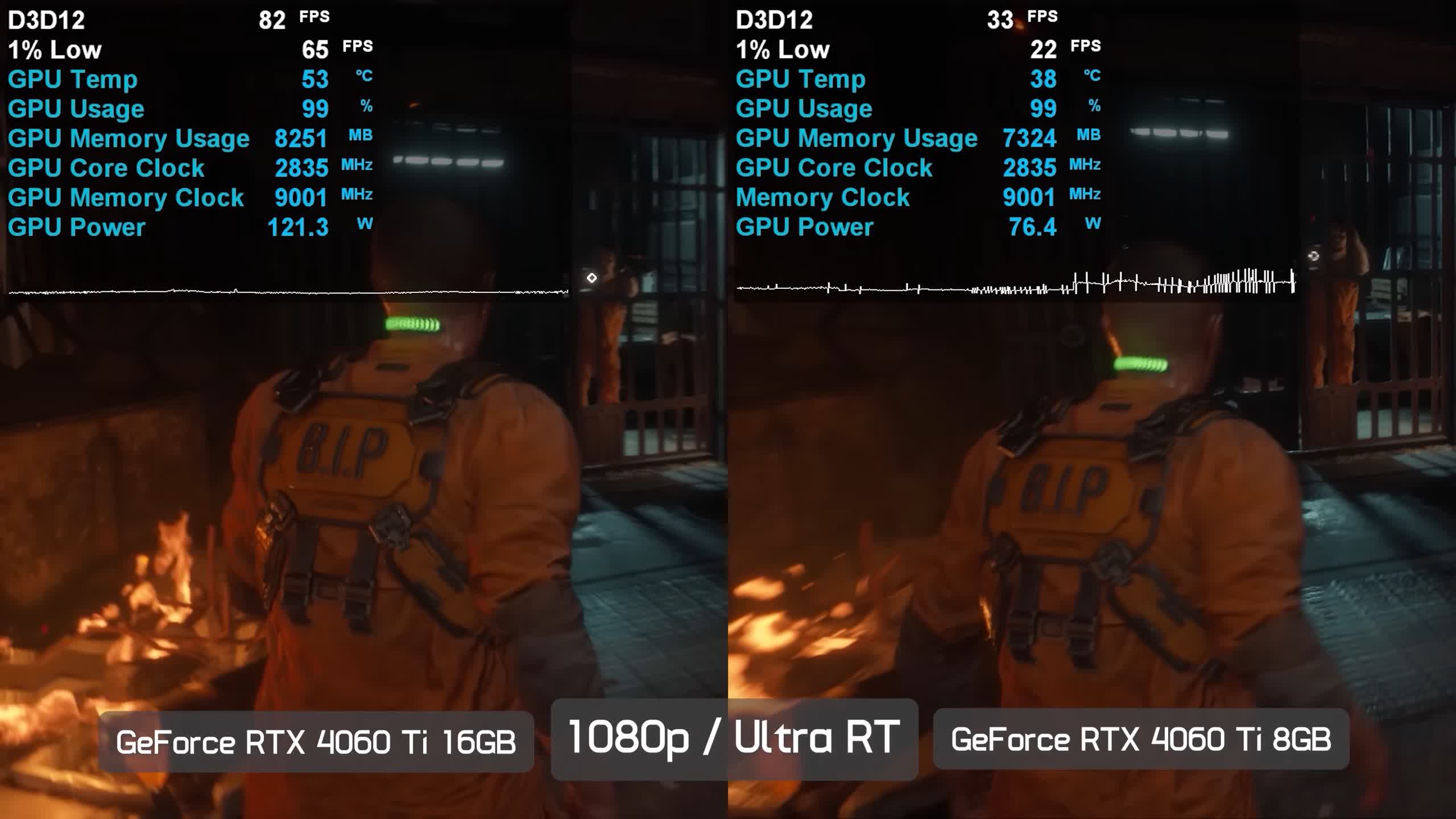

Something we want to address in this review is the concern that a 128-bit wide memory bus isn't enough to utilize 16GB of VRAM. We've seen this comment a lot over the past few months. We're not sure where it originates or what the exact theory is, but the belief seems to be that there simply isn't enough memory bandwidth to effectively use a 16GB memory buffer.
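For context on that theory: peak memory bandwidth depends on bus width and per-pin data rate, not on capacity, so the clamshell 16GB card has the same bandwidth as the 8GB card. The sketch below assumes an 18 Gbps GDDR6 data rate for both models (the RTX 4060 Ti's rated memory speed); the function name is hypothetical.

```python
# Minimal sketch, assuming 18 Gbps GDDR6 on both RTX 4060 Ti models.

def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s = bus width (bits) x per-pin data rate (Gbps) / 8 bits per byte."""
    return bus_width_bits * data_rate_gbps / 8


for label in ("8GB", "16GB"):
    # Capacity is not an input: clamshell adds chips, not bus width.
    print(label, peak_bandwidth_gbs(128, 18.0), "GB/s")
```

Both iterations print 288.0 GB/s, so whatever the 16GB model gains, it can't come from extra bandwidth. It can only come from having enough capacity to keep a game's data in VRAM.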

So let's put that theory to the test. All testing in this article was conducted on our Ryzen 7 7800X3D test system with 32GB of DDR5-6000 memory, using the latest display drivers.

Benchmarks

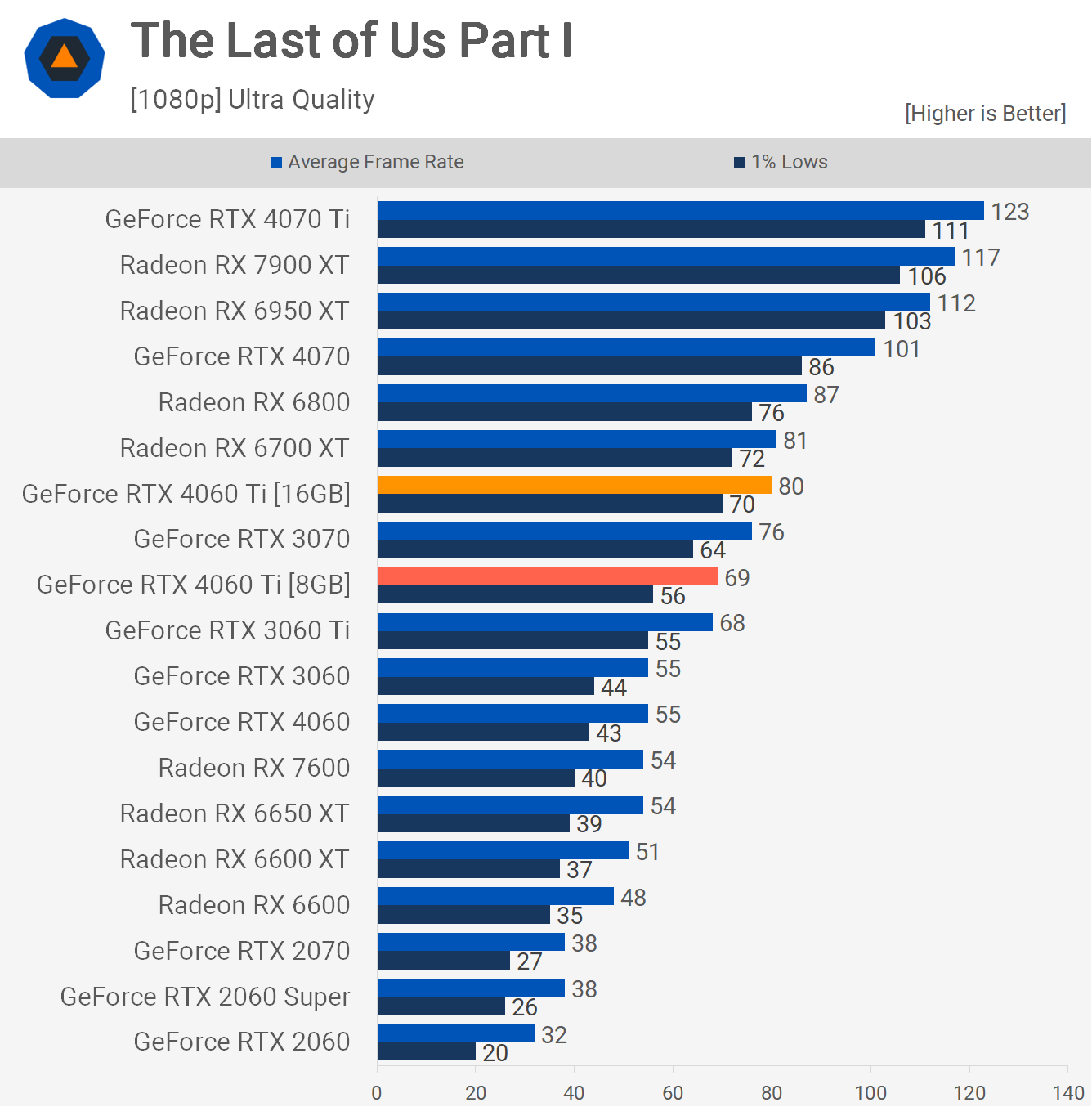

Here's a look at The Last of Us Part 1. Using the ultra-quality settings at 1080p, we found the 16GB model to be 16% faster on average, while the 1% lows were boosted by 25%. That's a substantial performance jump, putting it on par with the Radeon RX 6700 XT.

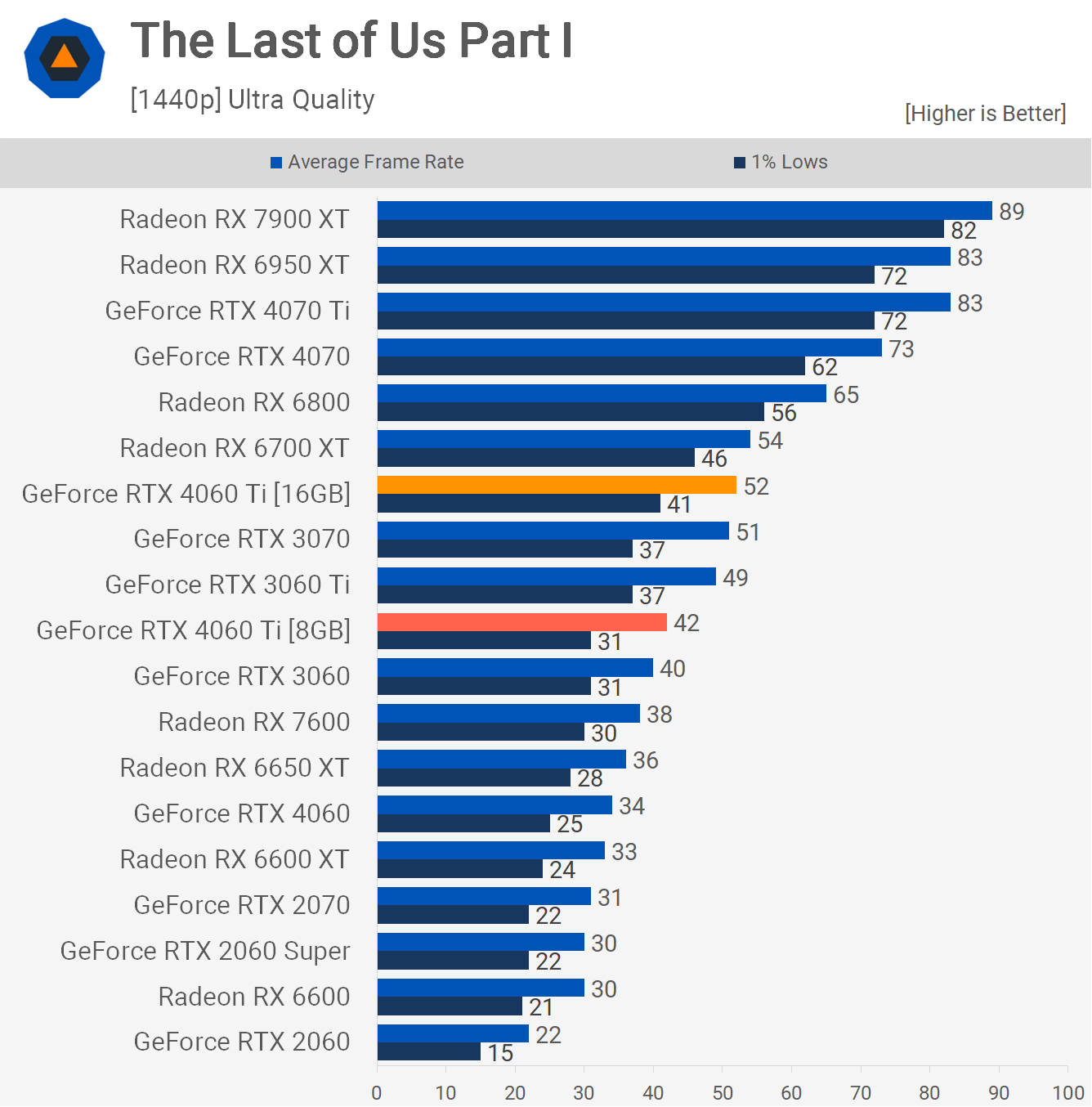

The story is similar at 1440p. Here, the 16GB model was 24% faster on average with a 32% boost to 1% lows, allowing it to closely match the 6700 XT.

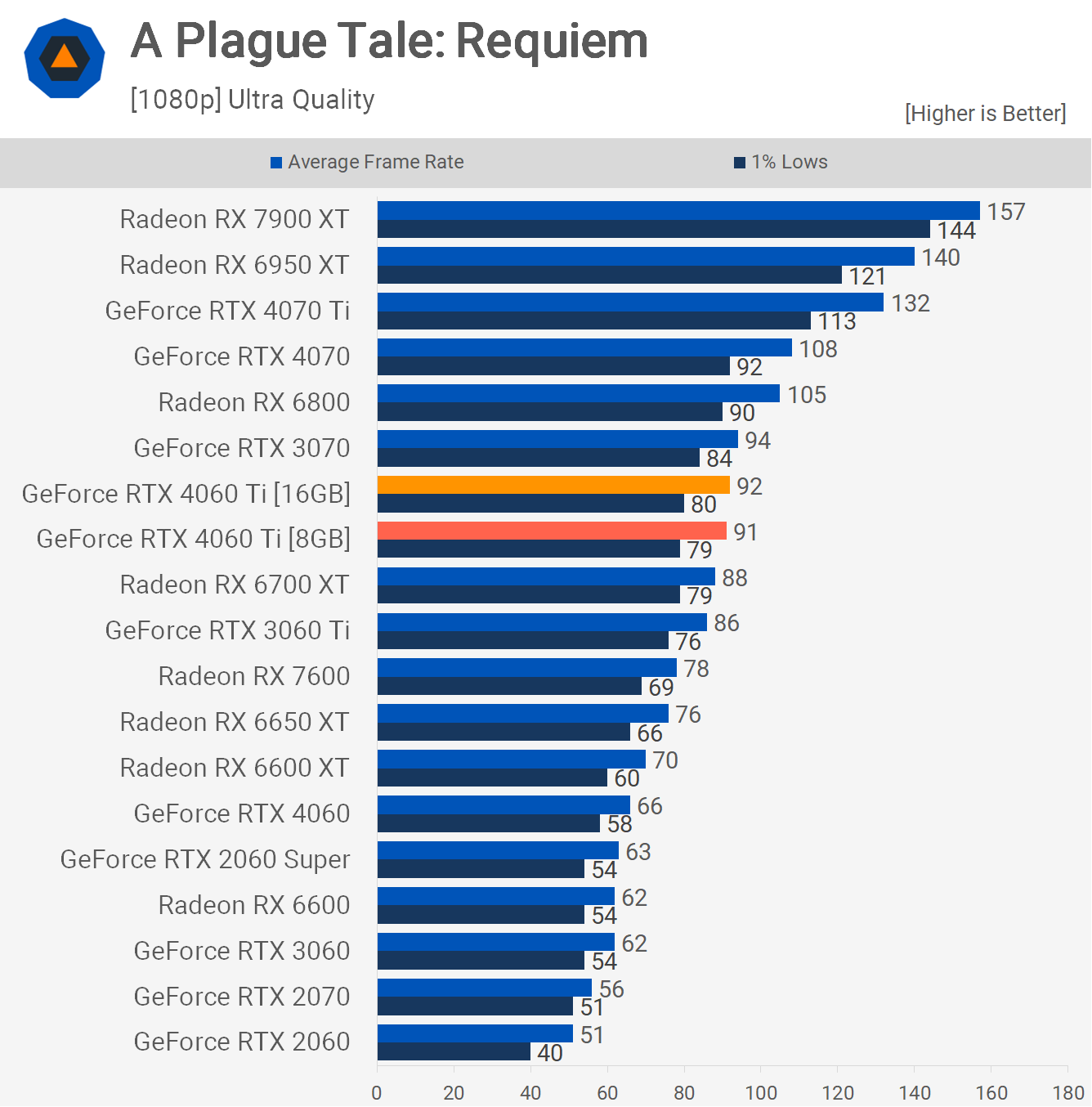

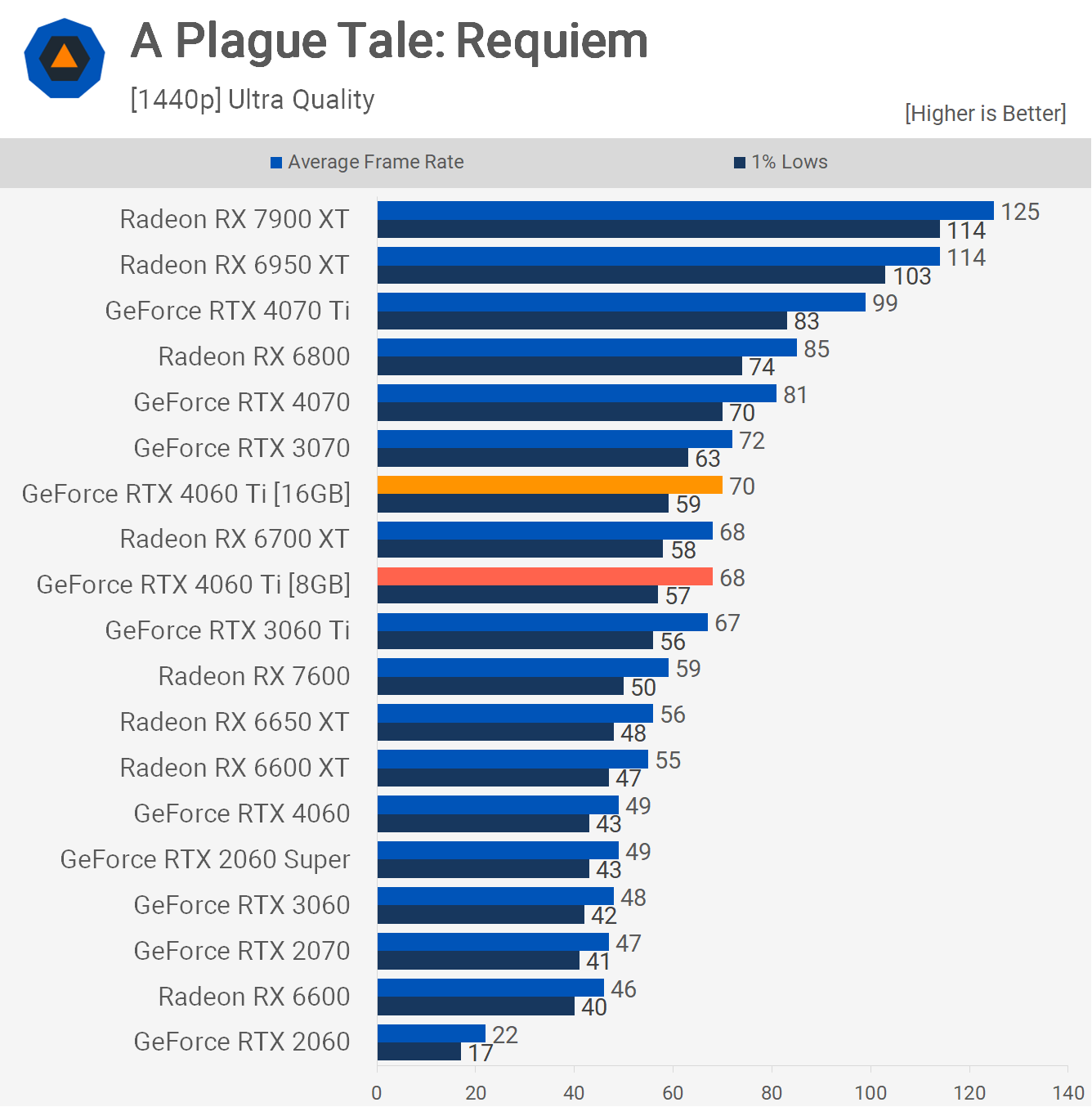

We tested A Plague Tale: Requiem using the ultra-quality settings without ray tracing, a configuration that doesn't exceed an 8GB VRAM buffer. The result was identical performance between the 8GB and 16GB models at 1080p. At 1440p, performance was nearly the same, with only a marginal difference.

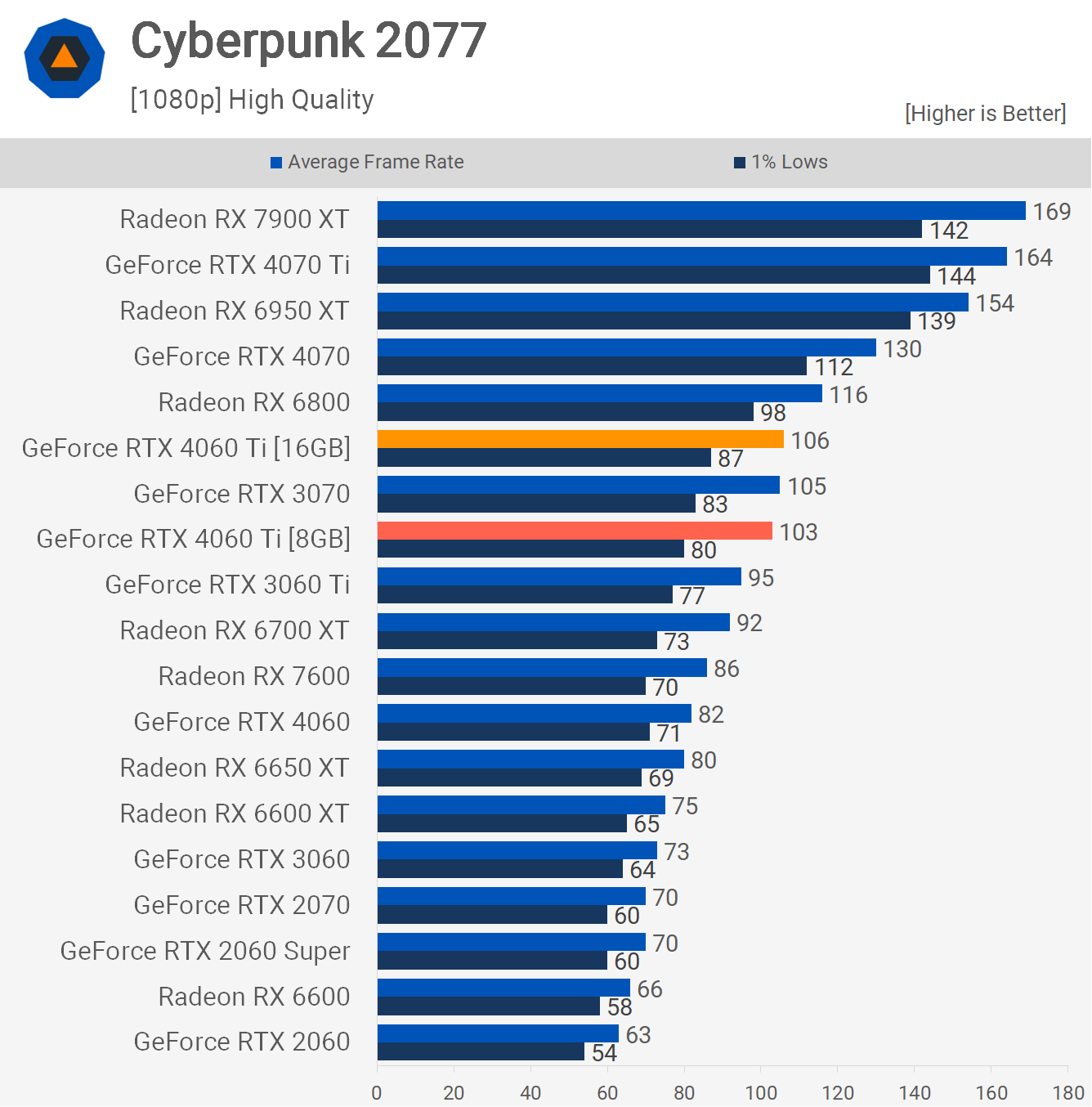

Cyberpunk 2077 was tested using the high-quality preset. Here, the 16GB model provided a modest improvement: 1% lows rose by 9%, while the average frame rate remained largely unchanged.

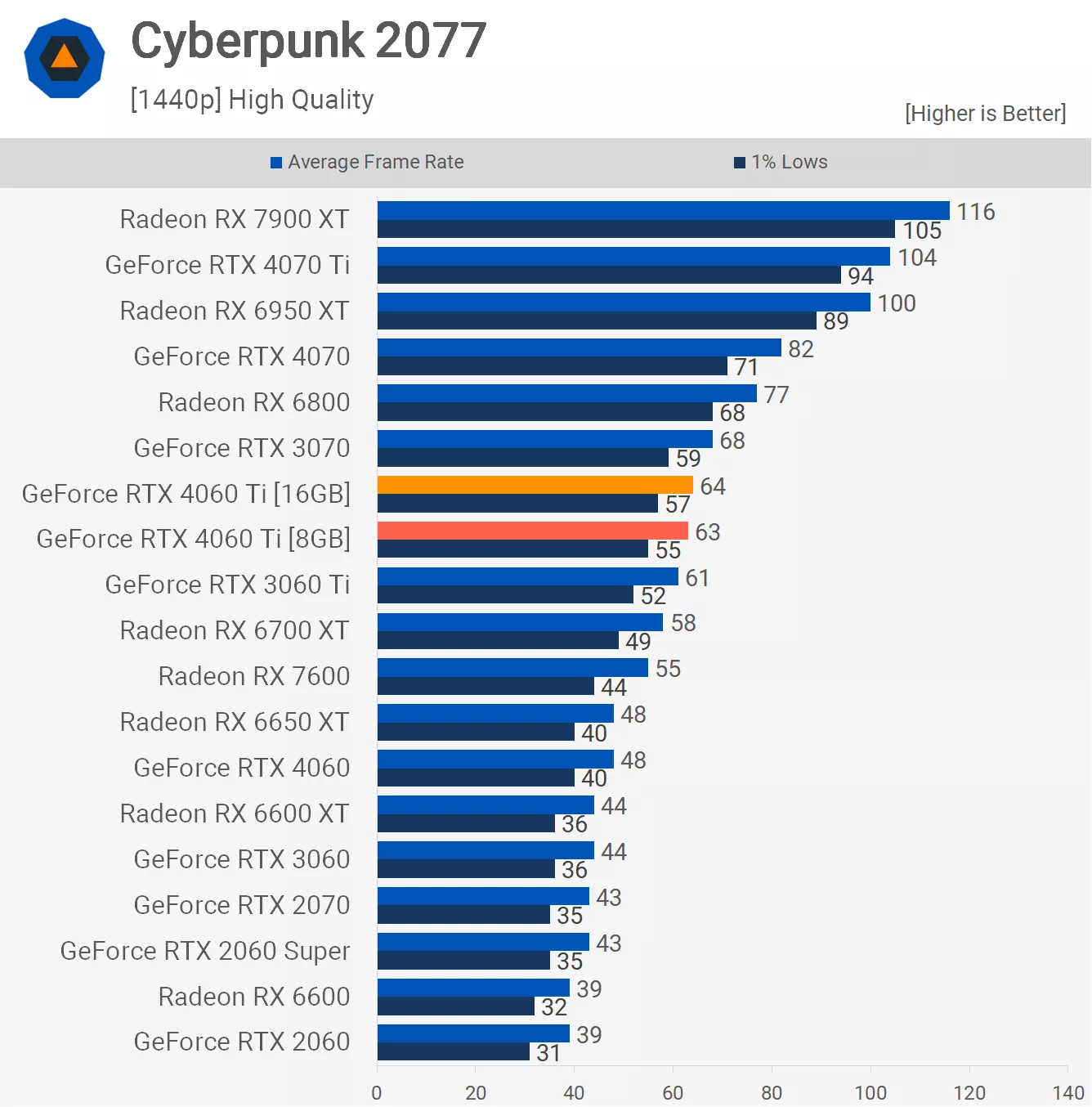

The difference was even smaller at 1440p, where the 16GB model was only 1-2 fps faster.

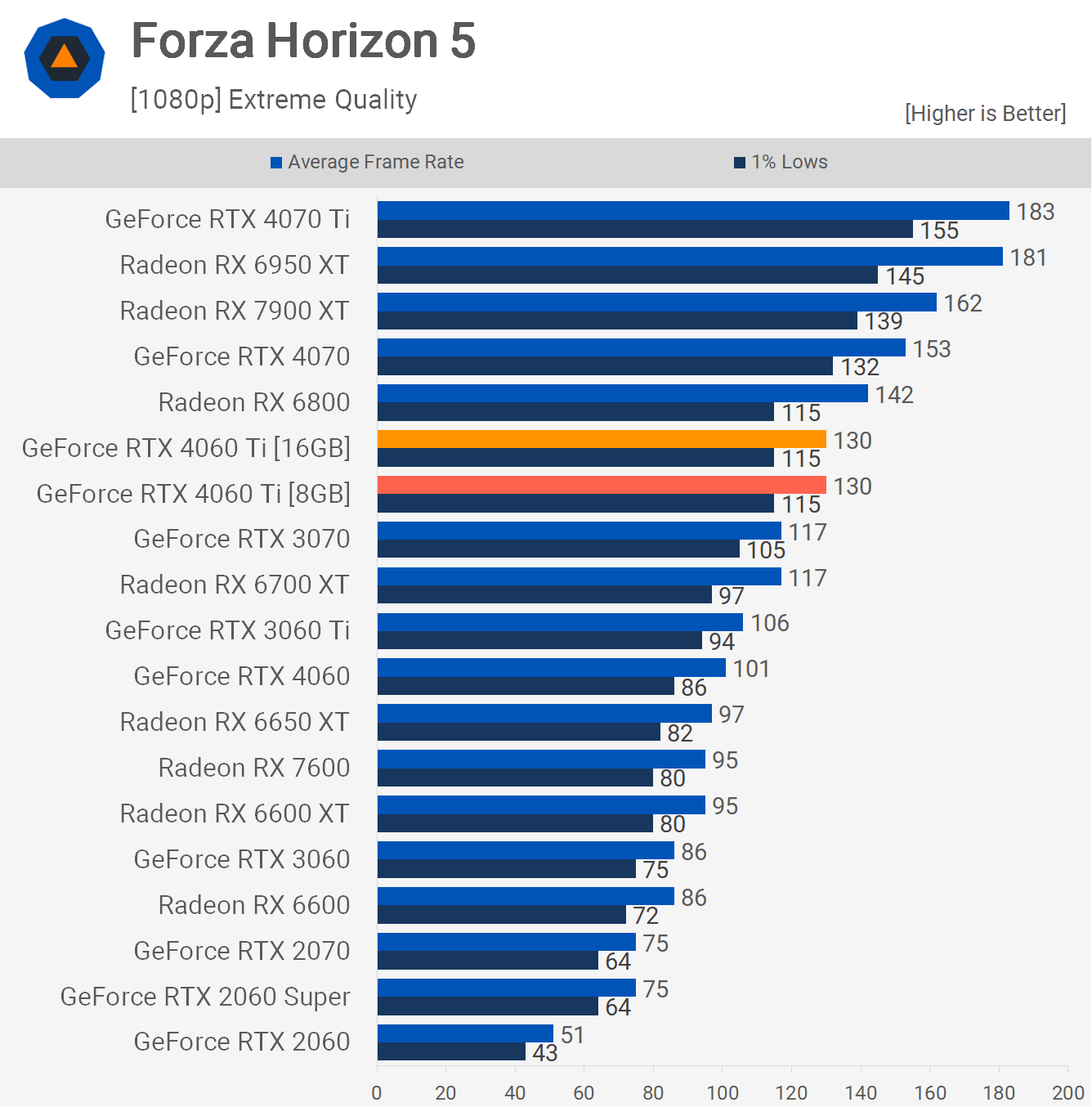

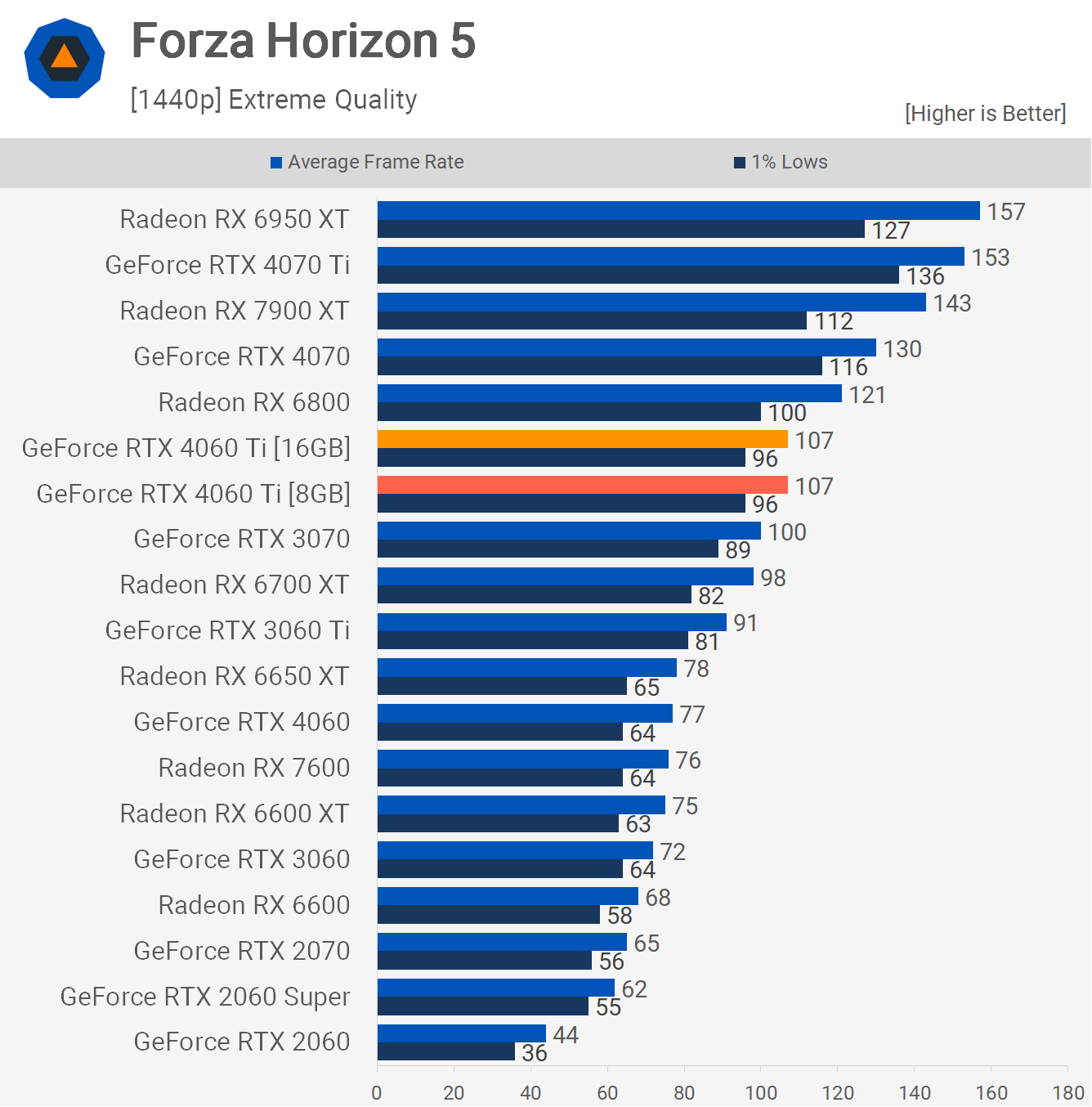

Forza Horizon 5 demonstrated identical performance for both models at 1080p and 1440p. Clearly, this title didn't benefit from the extra VRAM. Currently, most games don't demand more than 8GB of VRAM.

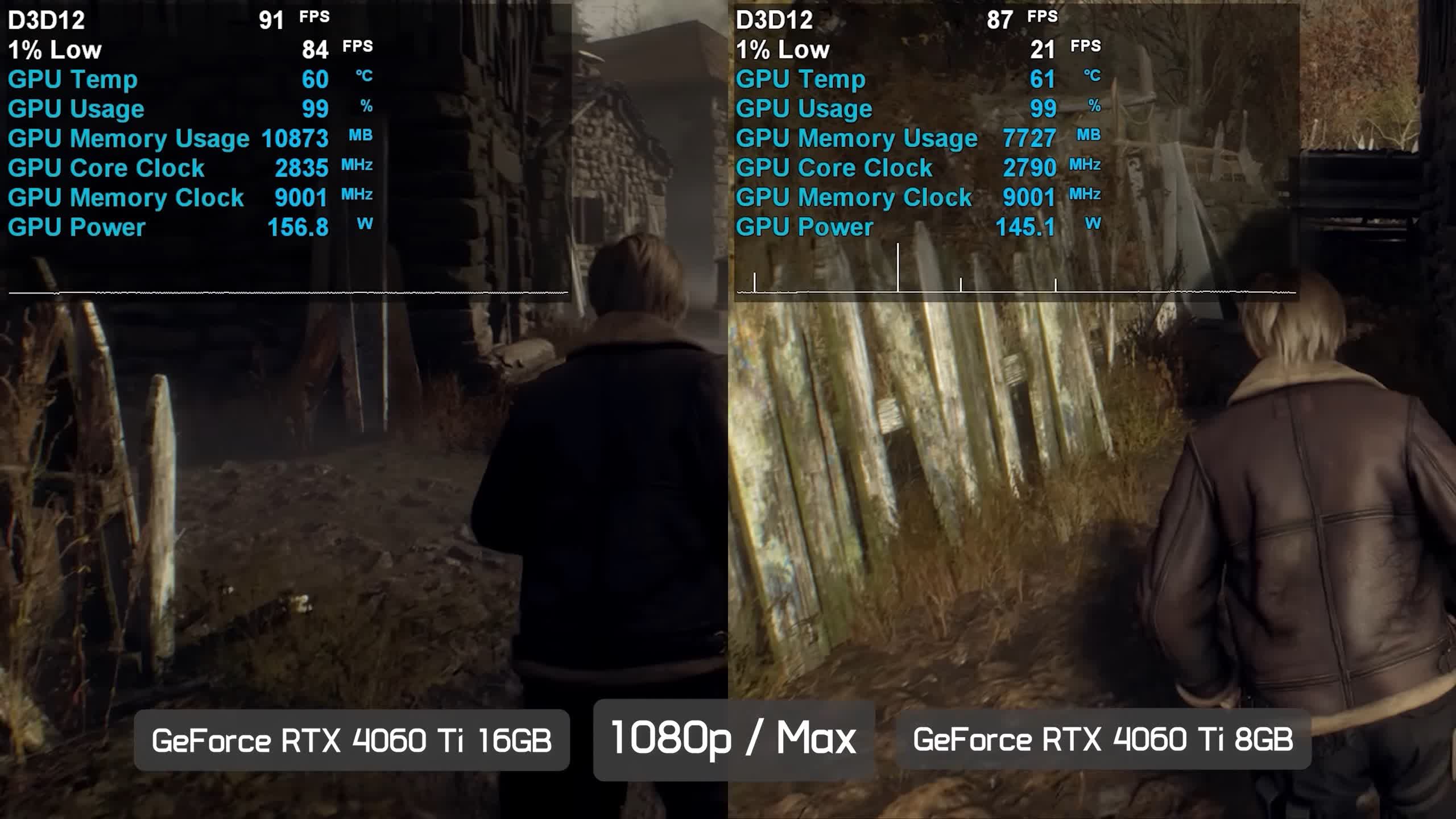

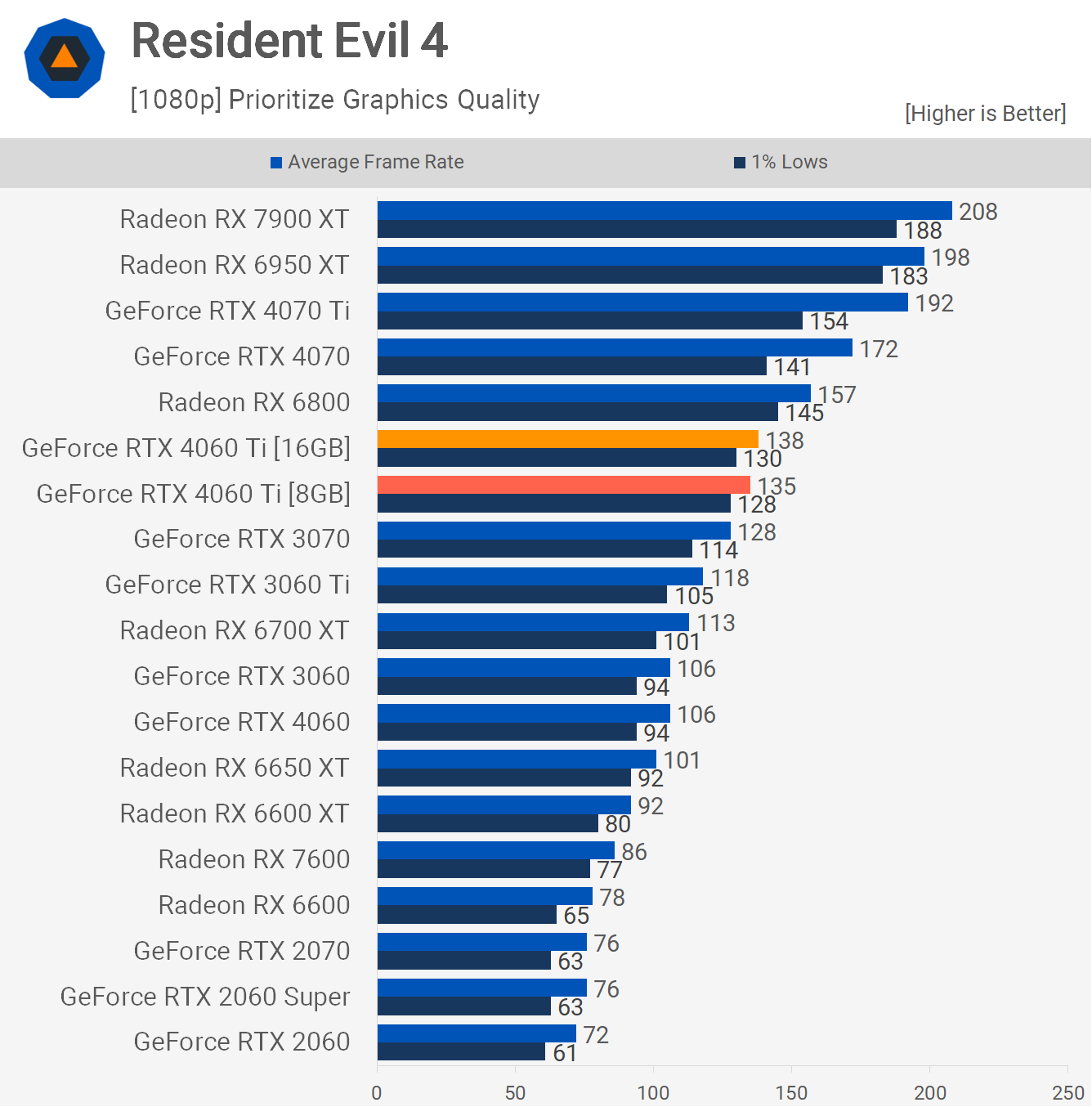

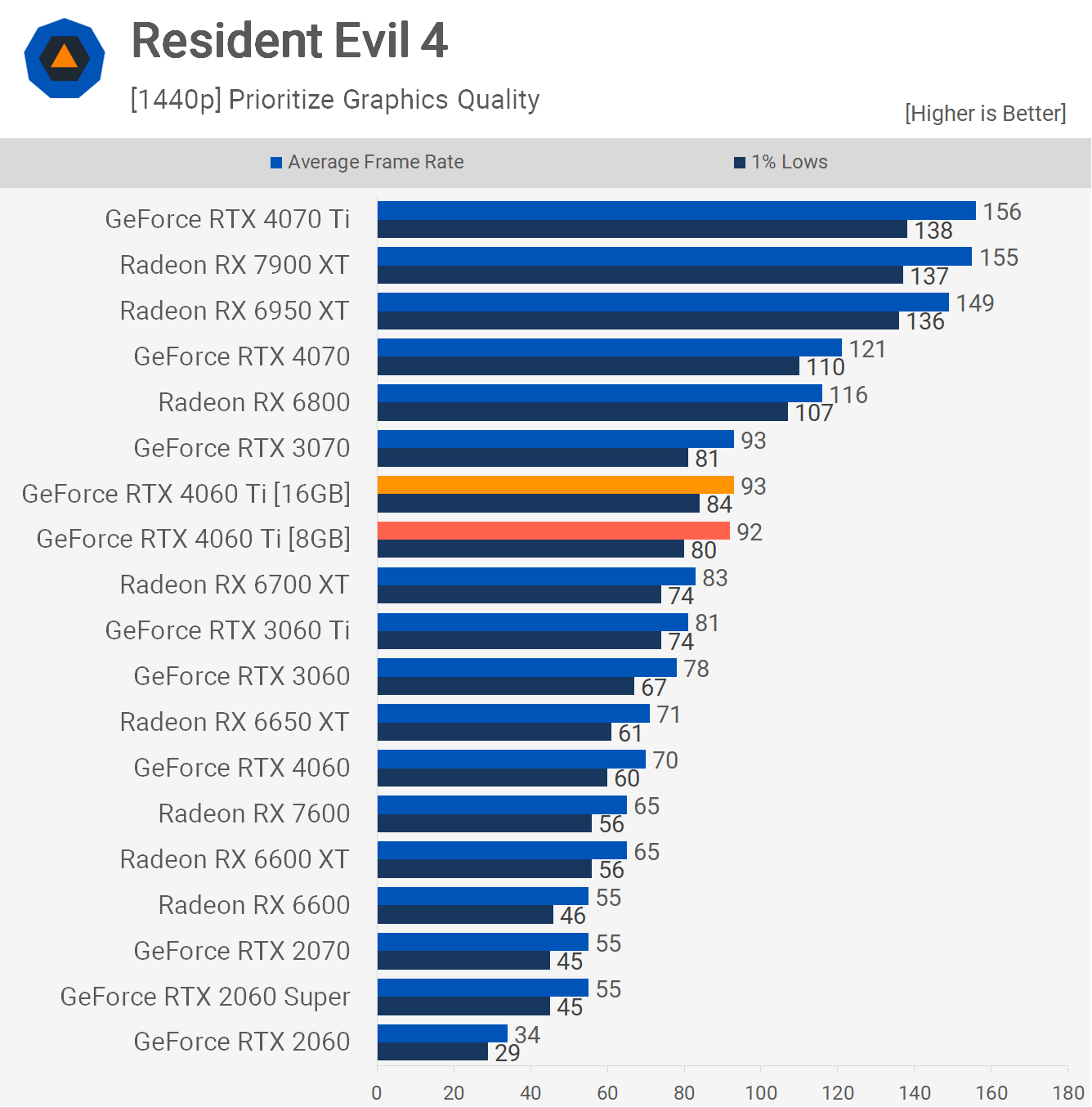

In our Resident Evil 4 tests using the second-highest preset, which significantly reduces VRAM usage, both the 8 and 16GB versions of the RTX 4060 Ti delivered comparable performance at both tested resolutions.

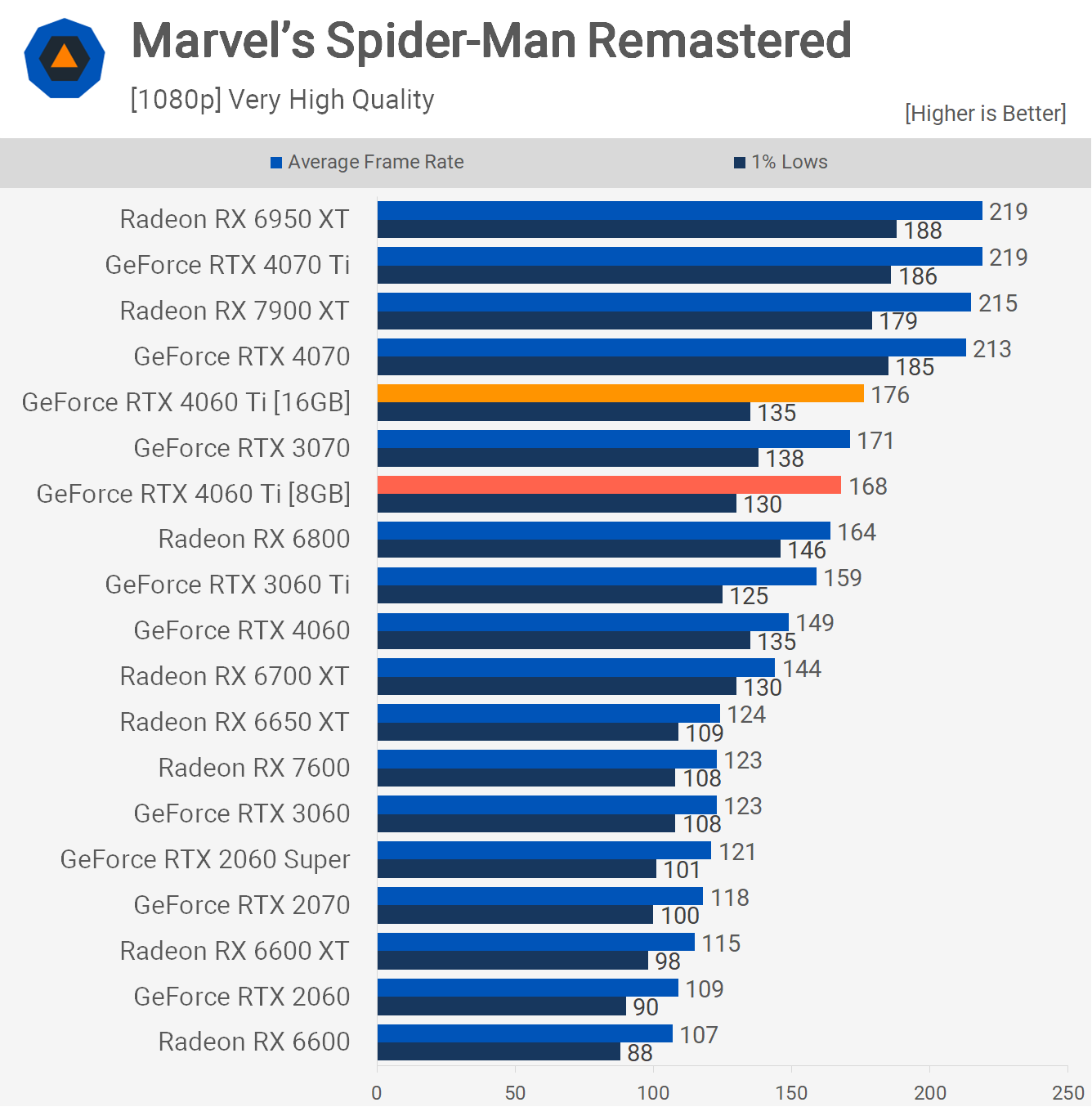

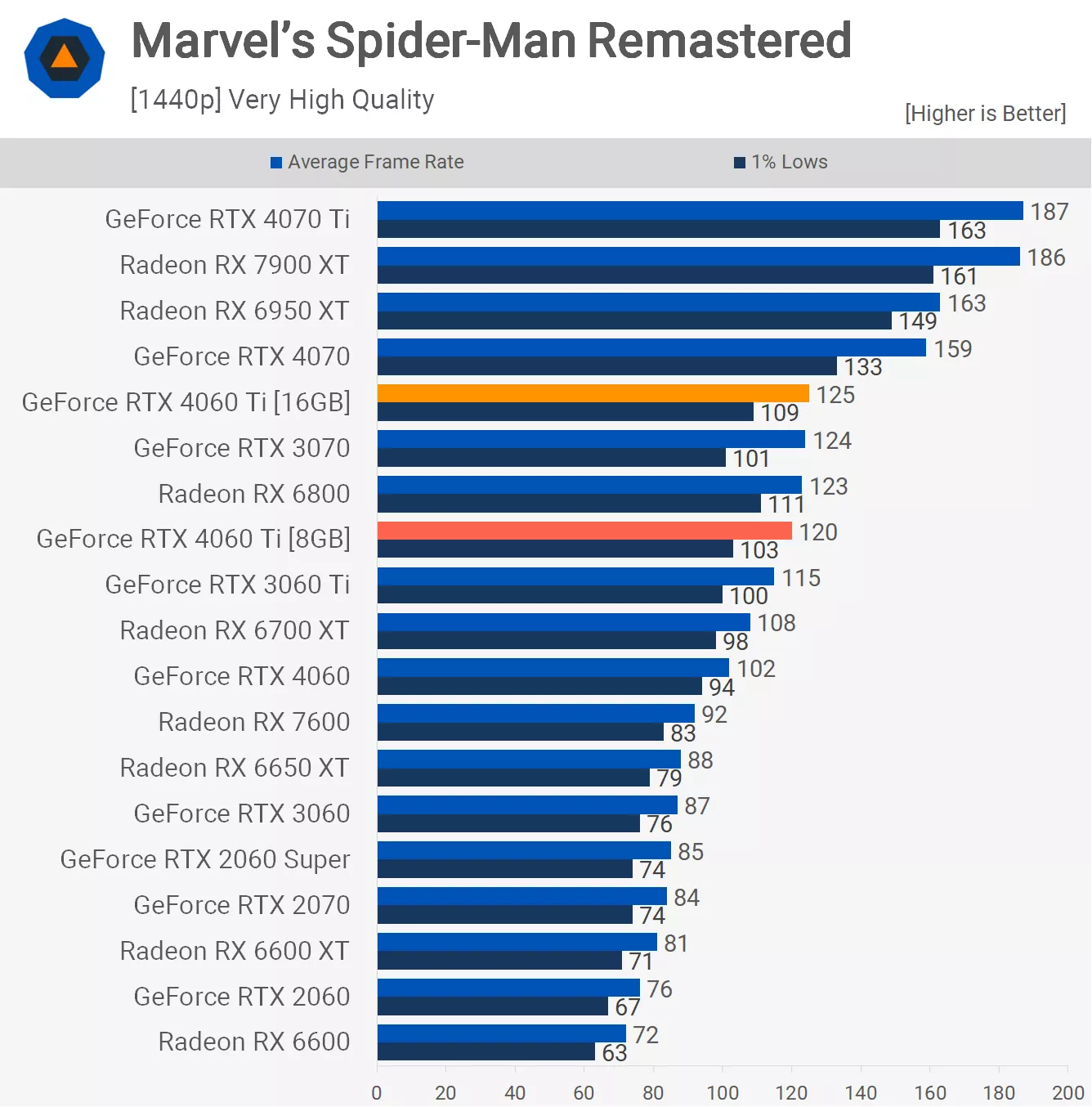

Spider-Man Remastered showed a slight gain with the extra VRAM, with the average frame rate improving by 5%, and we observed similar margins at 1440p. The 16GB model is faster, but not by a meaningful margin.

Power Consumption

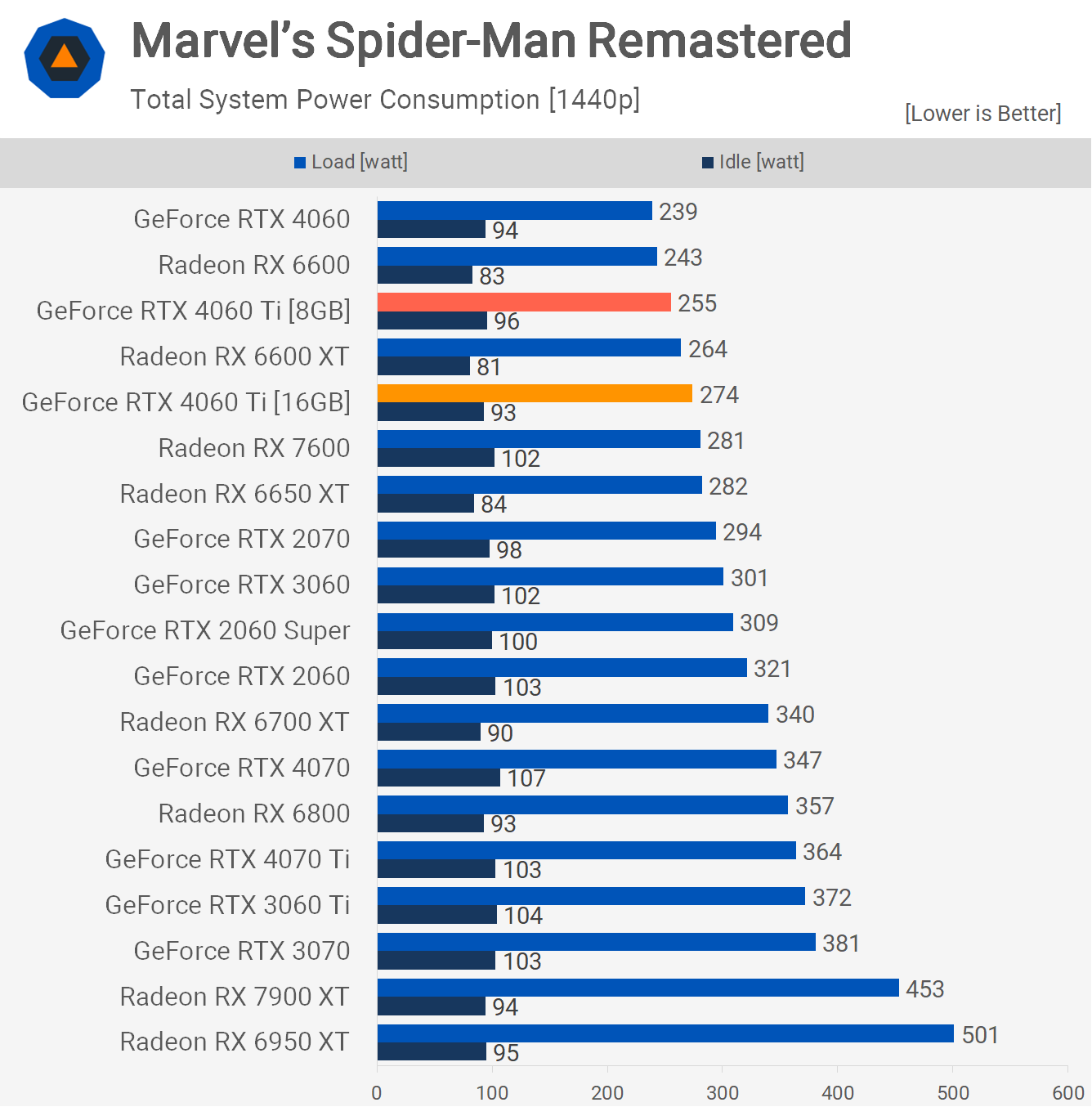

The 16GB RTX 4060 Ti remains power-efficient, as doubling the capacity increases the TDP by only 5 watts. In some situations, the extra VRAM lets the GPU work a bit harder, as we saw in Spider-Man, where total system power consumption rose by 7%.

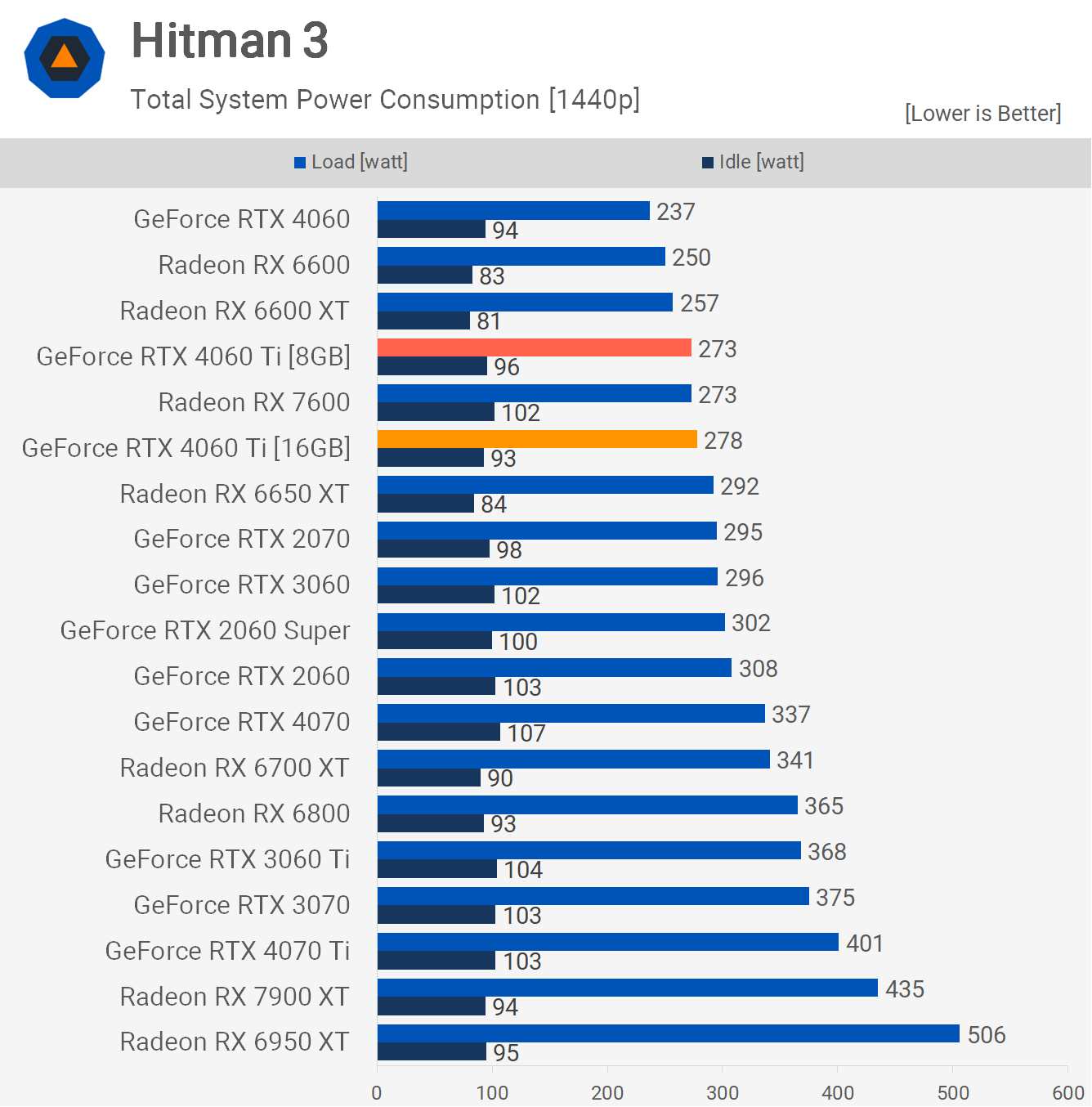

In Hitman 3, the extra VRAM didn't improve performance, and power usage rose by exactly 5 watts, in line with the TDP difference.

Performance Summary

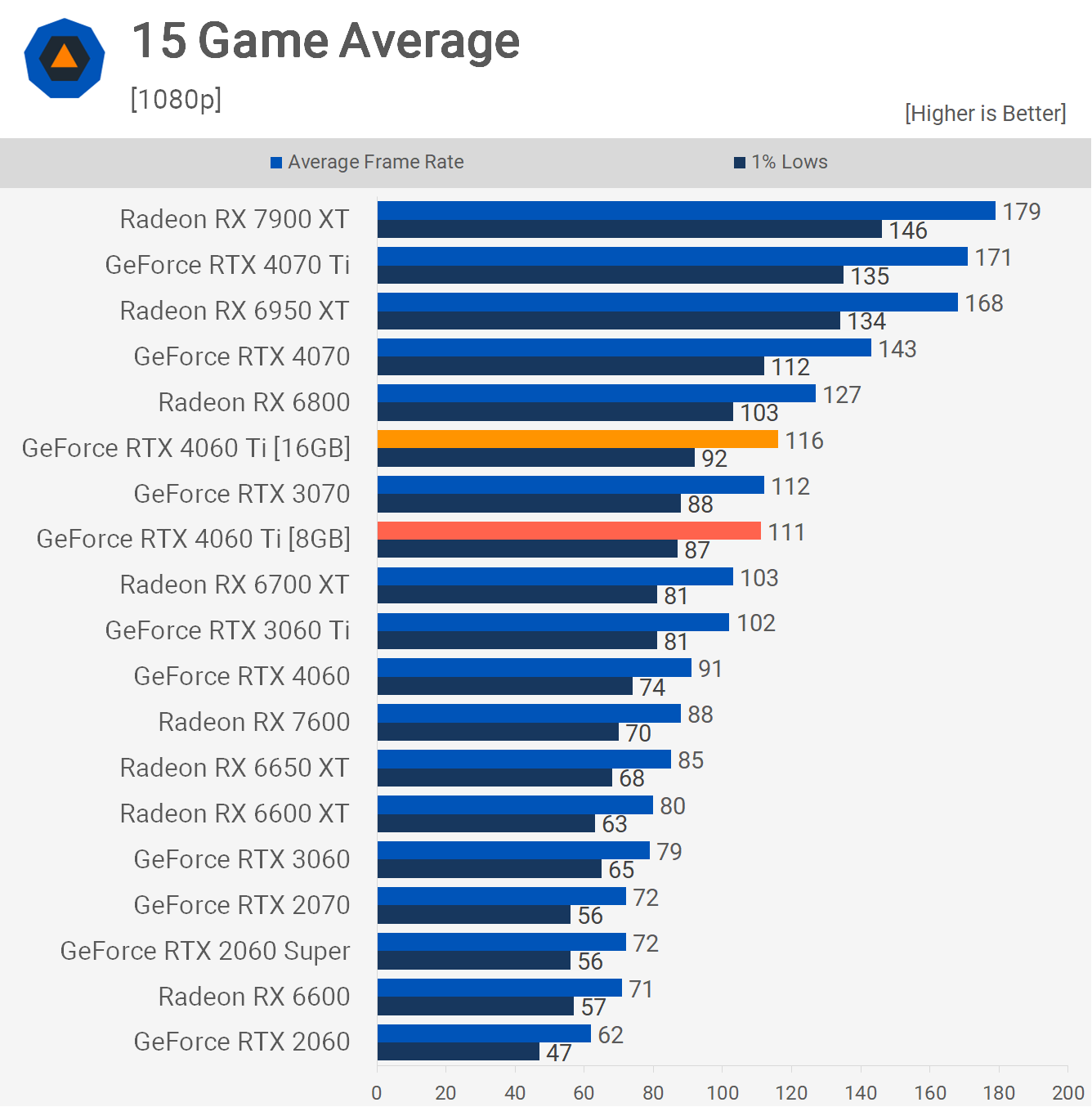

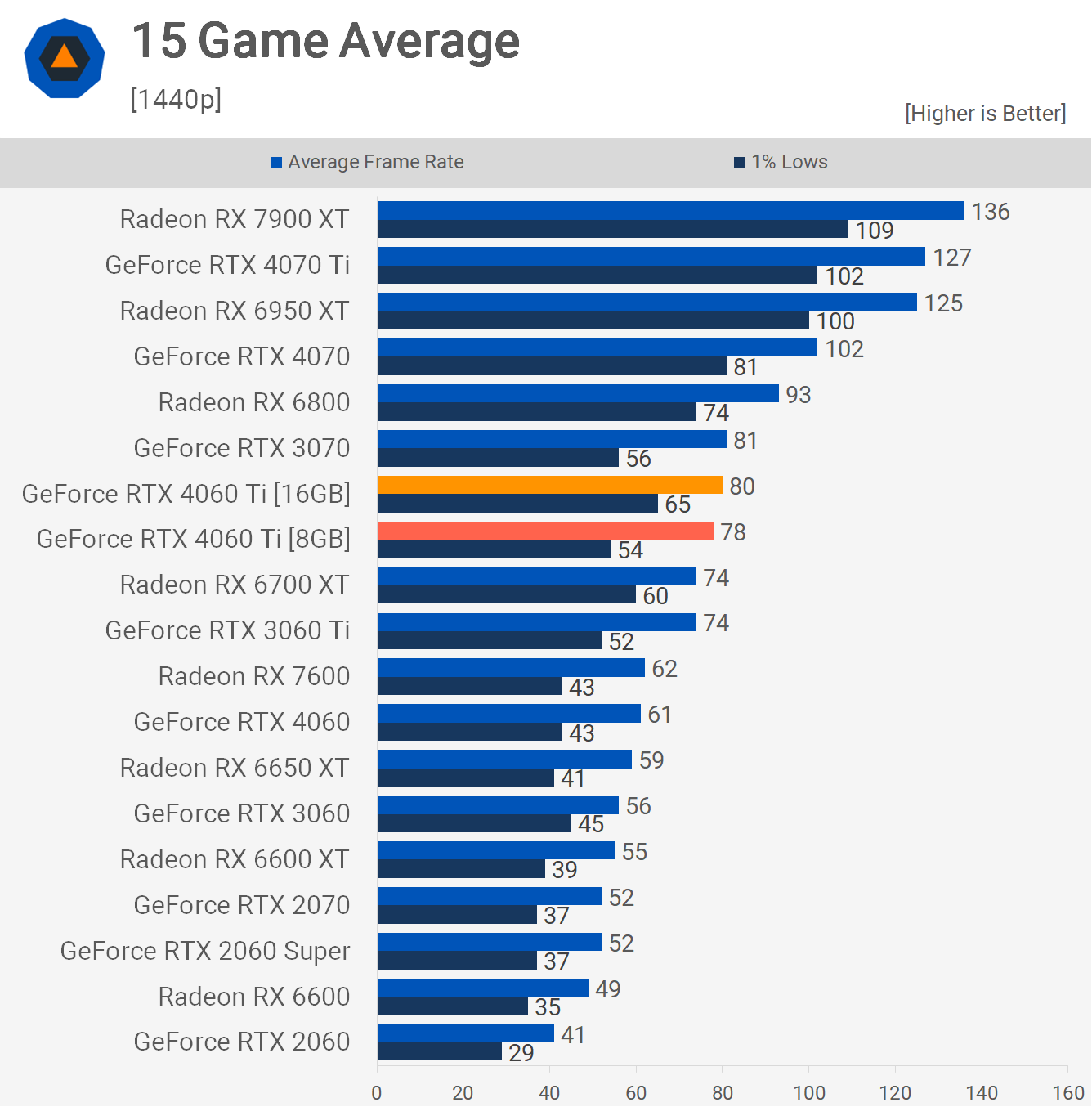

Finally, a quick look at our 15-game average: at 1080p, the 16GB model is only 4% faster, which is expected since most of the games we tested showed no change in performance.

At 1440p, the improvement was just 3%. Again, most of the games tested didn't show a significant change, partly because many of the more demanding titles were tested with reduced quality settings to ensure satisfactory performance on more mainstream models.

Wrap Up

There you have it: the 16GB buffer certainly works, and it does make the RTX 4060 Ti a much better product. We're confident that in the years to come, this version will hold up reasonably well, offering RTX 3070-like performance without the VRAM limitations. Yes, it still has a 128-bit memory bus, but as we saw in the memory-hungry examples, that doesn't prevent the GPU from using the 16GB buffer to avoid performance issues.

However, the real issue with the RTX 4060 Ti is pricing, which applies to both variants. Ideally, the 8GB model would cost $300 and the 16GB version $350, or maybe $400 at most, but that's currently the price of the 8GB version.

In other words, paying $500 for what should be the base configuration RTX 4060 Ti is insane.

It's also unreasonable that gamers who want more than 8GB of VRAM from a current-generation GeForce GPU must spend $500. Unfortunately, AMD doesn't seem to be coming to the rescue either. Current rumors suggest that the Radeon RX 7700 XT will offer 12GB and the RX 7800 XT 16GB, with both arriving in September. But pricing likely won't be great there either, and we don't expect the 7700 XT to be a sub-$500 product, so the current VRAM issue will continue.

As for the 16GB RTX 4060 Ti, obviously you shouldn't buy it at the current price. At $400, maybe, but we don't expect it to hit that price any time soon. In the meantime, this 16GB version of the RTX 4060 Ti will provide us with numerous great content opportunities over the next few years. It will be very interesting to monitor how the 8GB and 16GB versions track with future games.

For now, though, it's a terribly priced graphics card that you can ignore.


Further Testing

Since we posted this review, we have written new comparisons and run relevant benchmarks you may be interested in: