In this article, we'll take a look at how L3 cache capacity affects gaming performance. More specifically, we'll be examining AMD's Zen 3-based Ryzen processors in a "for science" type of feature. This means it's not necessarily meant as buying advice, though it does align well with several recommendations we've made over the past few years. It's also handy information to be armed with moving forward.

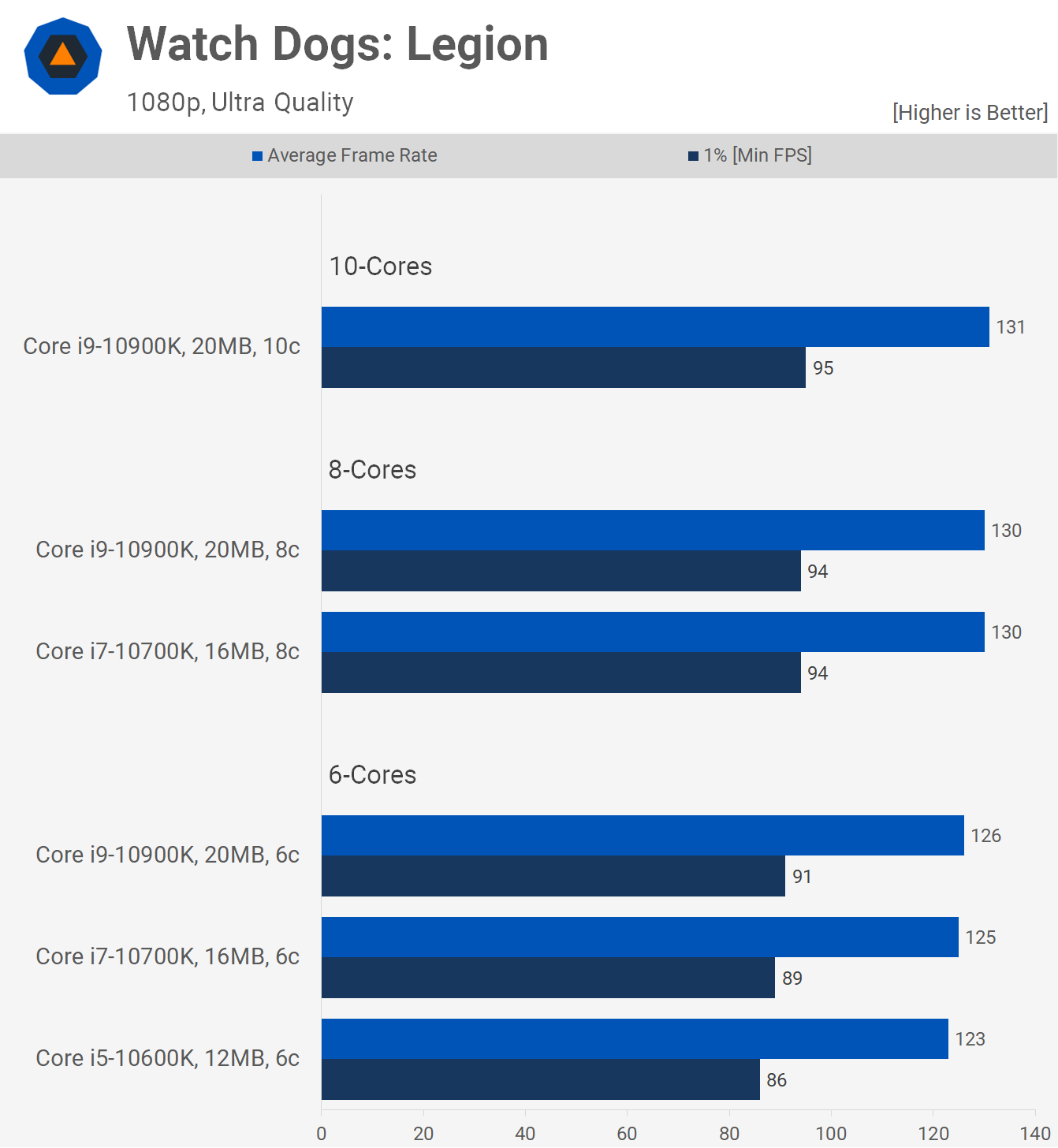

This is not the first time we have investigated the common claim that "more cores improve gaming performance." We first tested it back in 2021, when it was quite common to hear from users who had upgraded from something like a Core i5-10600K or Core i7-8700K to the then-flagship Core i9-10900K, saying that the extra cores in the premium CPU resulted in big FPS boosts for gaming.

You see, the 10900K offered a 67% increase in cores, going from 6 to 10 cores, but it also saw a 67% increase in L3 cache capacity. Given that most games were still unable to fully utilize all 6 cores of the 10600K or 8700K, this led us to wonder whether the extra cache or the cores were having a greater impact on performance.
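The two 67% figures follow from simple ratios. Here is a minimal sketch of that arithmetic, using the core counts and the 12MB and 20MB L3 capacities quoted in this article; the helper name `percent_increase` is ours, purely for illustration.

```python
def percent_increase(old: float, new: float) -> float:
    """Relative increase from `old` to `new`, expressed in percent."""
    return (new - old) / old * 100.0


# Core count: 6-core Core i5-10600K / i7-8700K vs. 10-core Core i9-10900K.
cores_gain = percent_increase(6, 10)
# L3 cache: 12MB on the 6-core parts vs. 20MB on the 10900K.
cache_gain = percent_increase(12, 20)

print(f"Cores: +{cores_gain:.0f}%  L3 cache: +{cache_gain:.0f}%")
```

Because both resources grow by the same proportion, a stock 10600K vs. 10900K comparison cannot separate the effect of cores from the effect of cache.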

The cool thing was, we could easily find out by disabling cores on the 10700K and 10900K while locking the operating frequency, ring bus, and memory timings in place. This allowed us to compare 6-core, 8-core and 10-core configurations with 12MB, 16MB and 20MB L3 cache capacities. Here is how the results turned out.

For the most part, upgrading from 6 cores with a 20MB L3 cache to 10 cores with the same 20MB L3 yielded just a 3% performance increase on average. Thus, a 67% increase in core count netted us a mere 3% FPS boost.

However, with the 6-core configurations, increasing from a 12MB L3 cache to 20MB resulted in a more significant 14% increase on average. This provided us with conclusive evidence that cache was more important than cores for gaming, when comparing processors of the same architecture.

At the time, the significance of L3 cache for gaming was not widely recognized, so this data was surprising for many, especially those who had assumed the performance improvement seen when moving from a 6-core Core i5 or i7 to a 10-core Core i9 was due to the extra cores rather than the extra cache.

About 8 months after we published that feature article, AMD released their first-ever 3D V-Cache processor, the 5800X3D, and the importance of L3 cache for gaming performance became widely acknowledged. Given that, you might wonder why we're revisiting this subject now, in early 2024. Honestly, we don't have a specific reason; we simply found this data intriguing and believe you will, too.

Actually, back when we published the Intel 10th-gen cores vs. cache article, one of the most popular requests was for an AMD version. Unfortunately, this wasn't feasible at the time, as all Ryzen 5000 series processors featured a 32MB L3 cache per CCD, so the Ryzen 7 5800X and Ryzen 5 5600X, for example, have the same L3 capacity.

We later received the first Ryzen 5000 APUs, which were cut down to a 16MB L3 cache. However, this wouldn't have made for an exceptionally interesting article, since you already had that data in the 5700G and 5600G reviews, anyway. But with the arrival of the 3D V-Cache parts (since late 2022, to be clear), we now have Ryzen 5000 CPUs with a 96MB L3 cache; we just got around to running this test now.

Test notes and full disclaimer: we didn't retest all these CPUs at a locked frequency; instead, they've been benchmarked at their default operating frequencies, which range from 4.4GHz to 4.7GHz, leading to as much as a 7% clock speed discrepancy. However, after reviewing the data, we concluded that a full retest with locked clock speeds would have been unnecessary, as the results are unambiguous and show exactly what we'd expect to find.
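To put the clock speed caveat in perspective, here is a rough back-of-the-envelope sketch. It assumes, generously, that frame rate scales 1:1 with clock speed, and it uses the 45% average uplift from 16MB to 96MB reported in the 12-game average further down. The variable names are ours, and the sketch does not model which CPU runs at which frequency.

```python
# Default operating frequencies quoted in the test notes, in GHz.
slowest_clock = 4.4
fastest_clock = 4.7

# Generous assumption: frame rate scales 1:1 with clock speed.
clock_ratio = fastest_clock / slowest_clock
max_clock_gain_pct = (clock_ratio - 1.0) * 100.0

# Average 16MB -> 96MB uplift reported in the 12-game average section.
cache_uplift_pct = 45.0

# Uplift left over if the faster part also held the entire clock advantage.
residual_pct = ((1.0 + cache_uplift_pct / 100.0) / clock_ratio - 1.0) * 100.0

print(f"Clock ceiling: +{max_clock_gain_pct:.1f}%")
print(f"Uplift not explainable by clocks: +{residual_pct:.1f}%")
```

Even under that worst-case assumption, most of the measured uplift remains, which is why the unlocked clocks don't change the conclusions.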

All testing has been conducted using the GeForce RTX 4090 at 1080p, as this is a CPU benchmark. If you don't understand why reviewers test this way and would like to learn more, we have an article dedicated to explaining all the benchmarking basics.

For this test, we're limited to a 6-core vs. 8-core comparison as those are the Ryzen CPUs available that feature 16MB, 32MB, and 96MB L3 cache capacities. All CPUs have been tested using 32GB of DDR4-3600 CL14 memory. Now, let's dive into the data…

Benchmarks

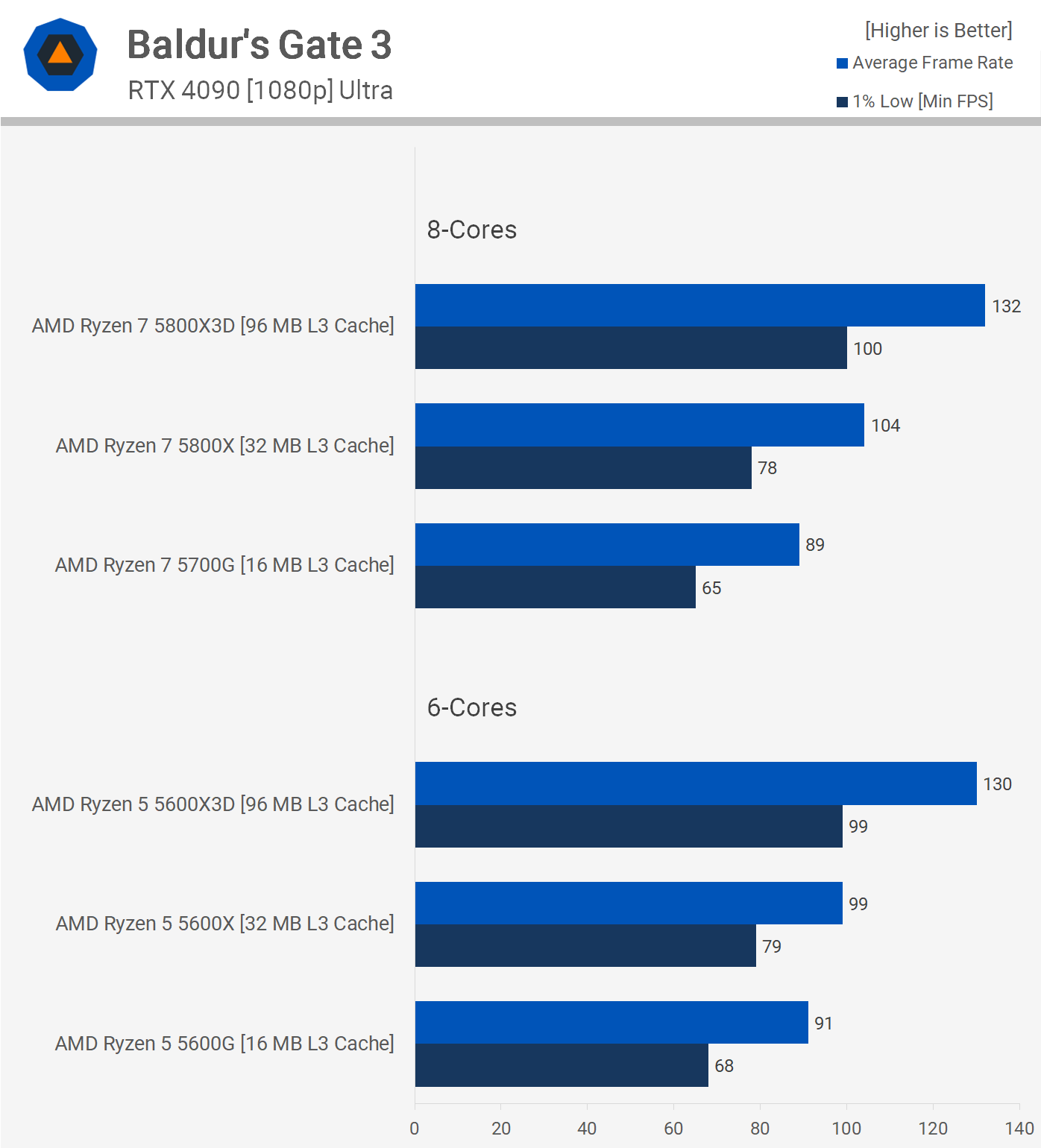

First up is Baldur's Gate 3, and there's a bit to unpack here. It doesn't matter whether a Zen 3 processor has 6 or 8 cores; performance is the same as long as cache capacity is equal. Cache is king here: the 5600X, for example, with its 32MB L3 cache, is 11% faster than the 5700G, which has just 16MB of L3.

Looking at the 8-core data, we see that the 5800X is significantly faster than the 5700G, delivering 17% more performance, while the 5800X3D is 27% faster than the 5800X. It's also quite remarkable that, although all of these processors use Zen 3 cores and therefore the same CPU architecture, going from 16MB to 96MB of L3 cache results in a substantial 48% performance increase, underscoring how much cache affects performance.
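Note that successive percentage gains multiply rather than add, which is why 17% followed by 27% comes out near 48% and not 44%. A minimal sketch of that compounding, using the Baldur's Gate 3 figures above (the function name `compound_gain` is our own, for illustration only):

```python
def compound_gain(*step_gains_pct: float) -> float:
    """Combine successive percentage gains multiplicatively; result in percent."""
    factor = 1.0
    for gain in step_gains_pct:
        factor *= 1.0 + gain / 100.0
    return (factor - 1.0) * 100.0


# Baldur's Gate 3, 8-core parts:
# 5700G -> 5800X (+17%), then 5800X -> 5800X3D (+27%).
total = compound_gain(17, 27)
print(f"16MB -> 96MB: roughly +{total:.1f}%")
```

The result lands within a point of the 48% quoted. The small gap is presumably because the per-step percentages are themselves rounded, while the quoted total would come from the raw frame rates. The same reasoning applies to the step-by-step gains quoted for the other games.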

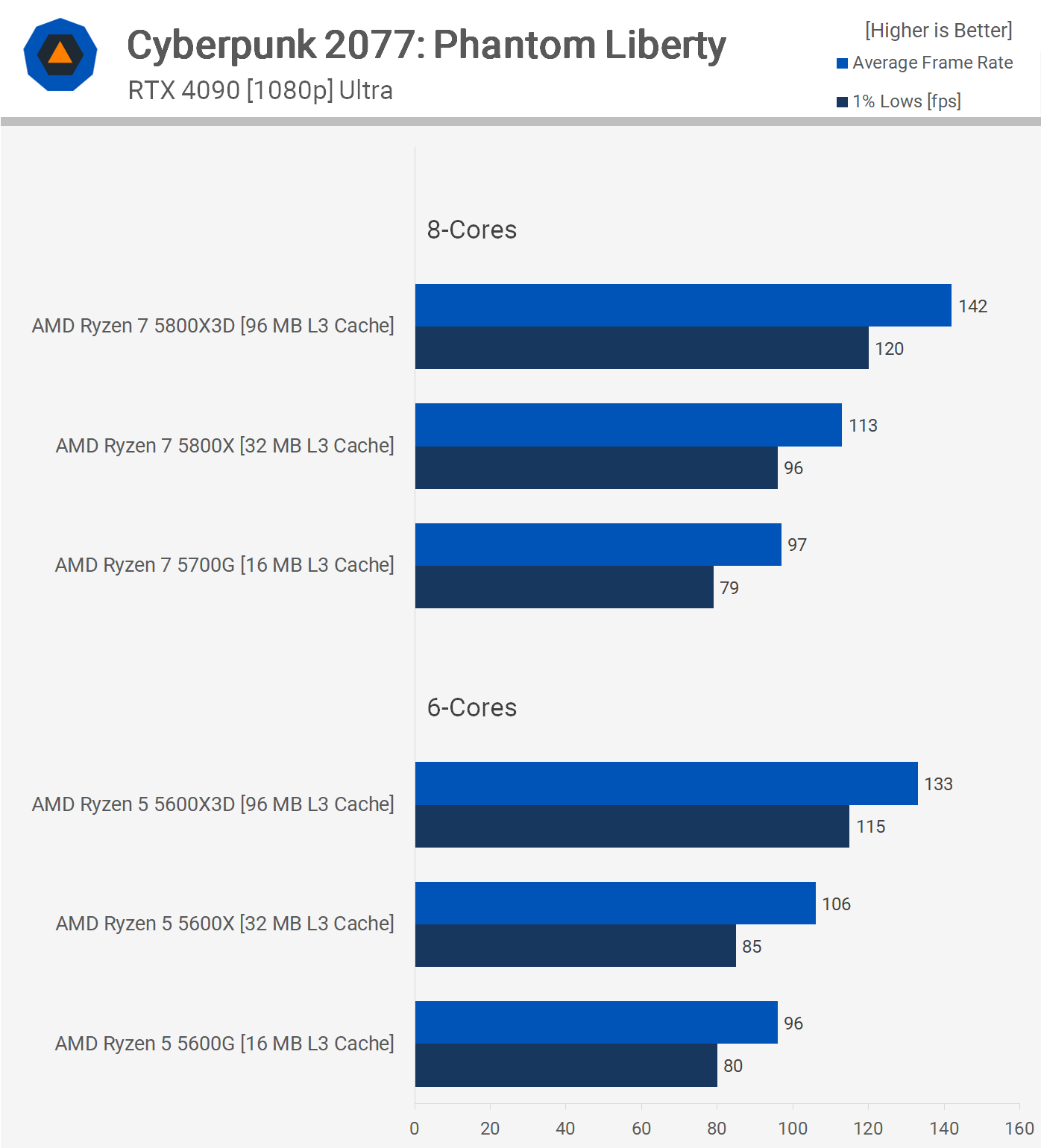

Next, we examine Cyberpunk 2077: Phantom Liberty. Here, we do see a slight performance advantage for the 8-core processors, though this advantage diminishes as the L3 cache capacity decreases. For instance, the 5800X3D was 7% faster than the 5600X3D, and we observe a similar margin when comparing the 5800X and 5600X. However, this margin disappears with the 16MB 5700G and 5600G, which is somewhat unexpected.

Nevertheless, cache capacity makes the most significant difference, lifting 6-core performance by 10% from the 5600G to the 5600X and then by a further 25% from the 5600X to the 5600X3D. In the case of the 8-core models, we see a 46% performance uplift when going from 16MB to 96MB.

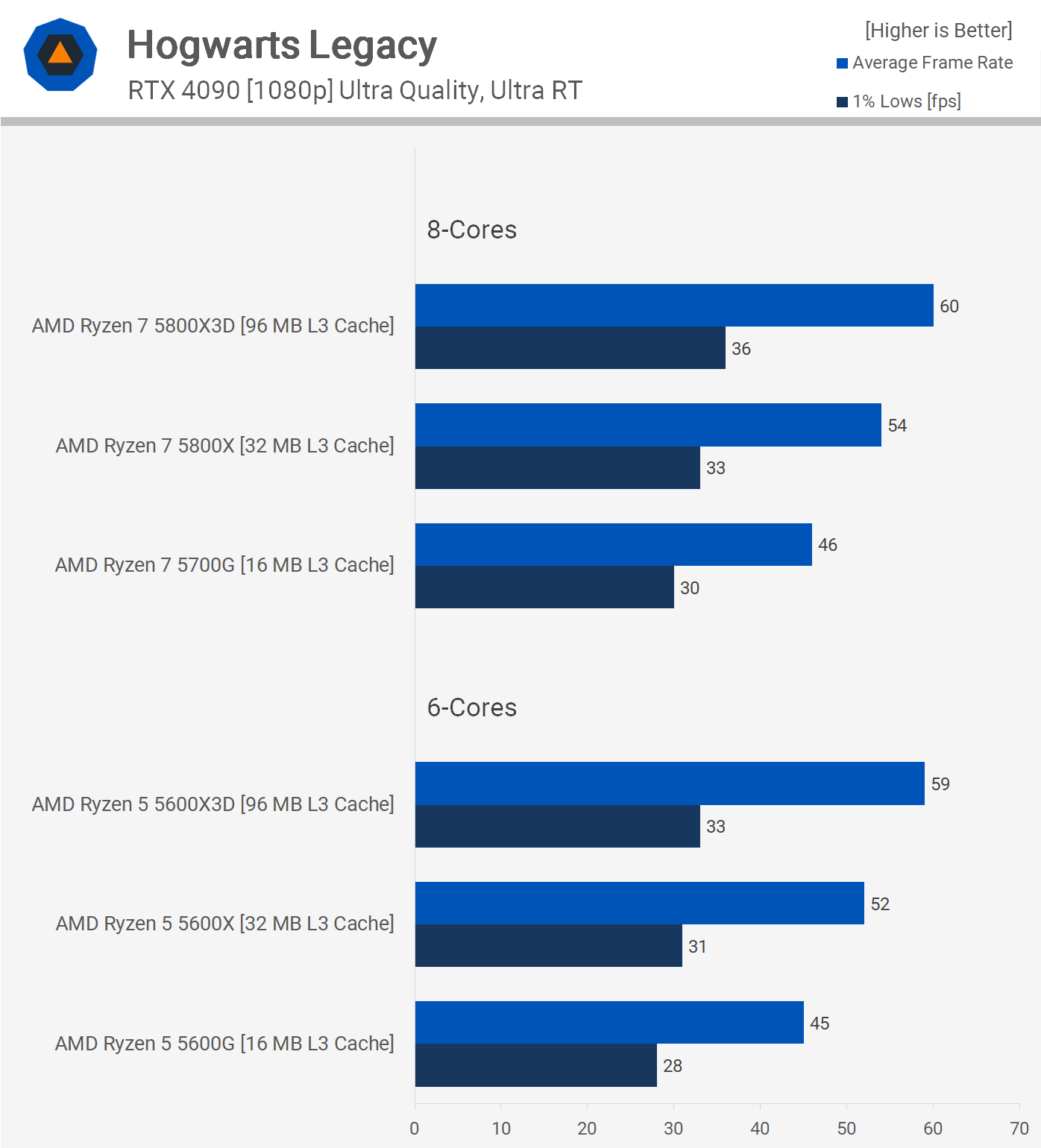

Next up is Hogwarts Legacy, tested with ray tracing enabled, which appears to increase the CPU load significantly in this title. That said, although the game becomes very CPU-limited, adding more cores doesn't resolve the issue or even help: all three cache configurations deliver nearly identical results with either 6 or 8 cores.

Cache capacity does make a difference, and the gains don't appear to plateau here. The 5800X was 17% faster than the 5700G, while the 5800X3D was 11% faster than the 5800X, marking a 30% performance increase from 16MB to 96MB of L3 cache.

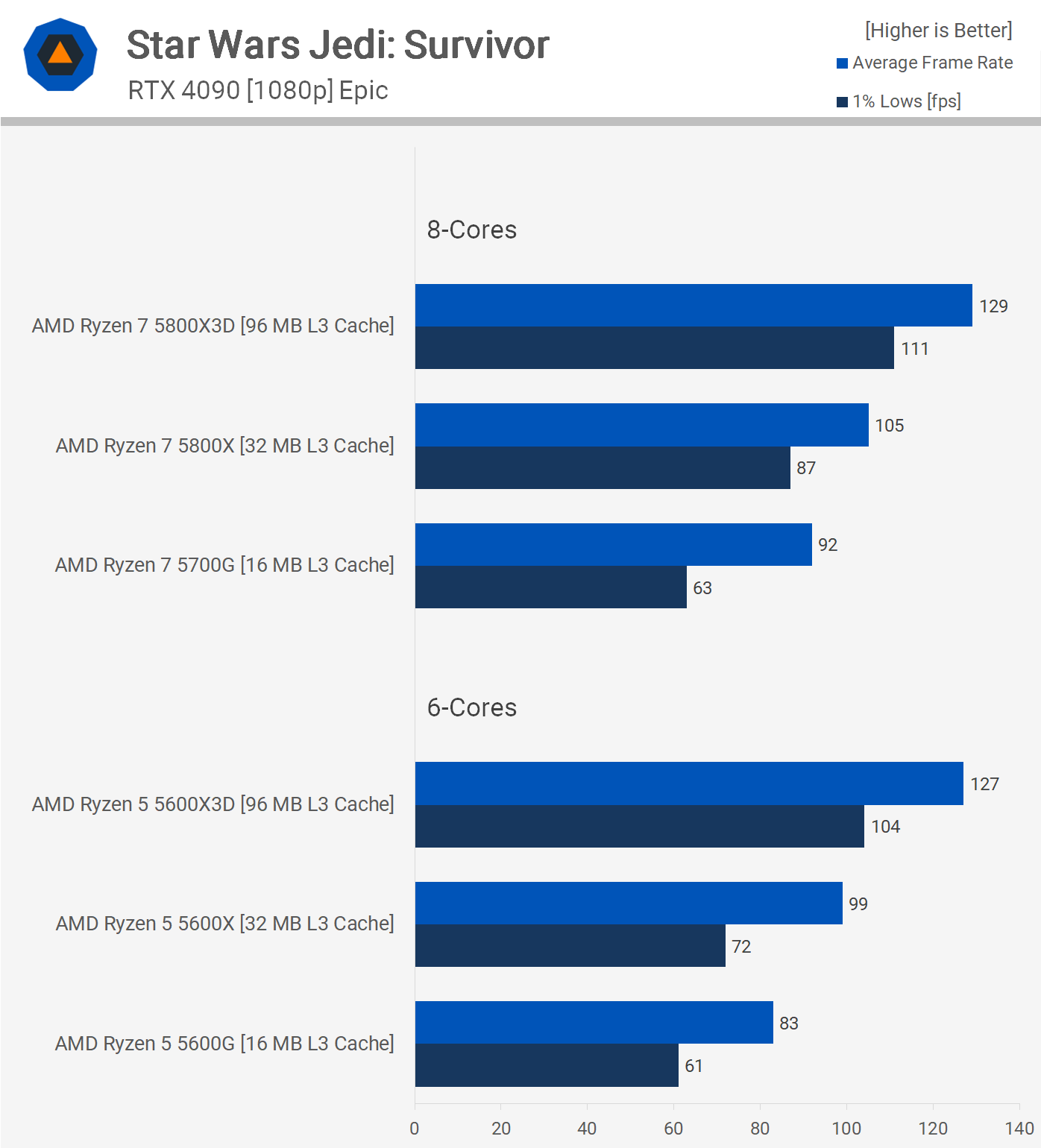

In Star Wars Jedi: Survivor, 6-core and 8-core performance is very similar, though the 5700G was 11% faster than the 5600G, despite delivering comparable low percentile performance. The margin shrinks to just 6% with the 32MB L3 cache models and then 2% for the X3D parts, indicating that as performance increases via a larger L3 cache, the dependence on core count becomes less significant in this title.

Examining the 6-core models, we see a 19% increase from the 5600G to the 5600X and then a further 28% increase from the 5600X to the 5600X3D. It's remarkable to observe a 53% increase from the 5600G to the 5600X3D, given both CPUs feature six Zen 3 cores.

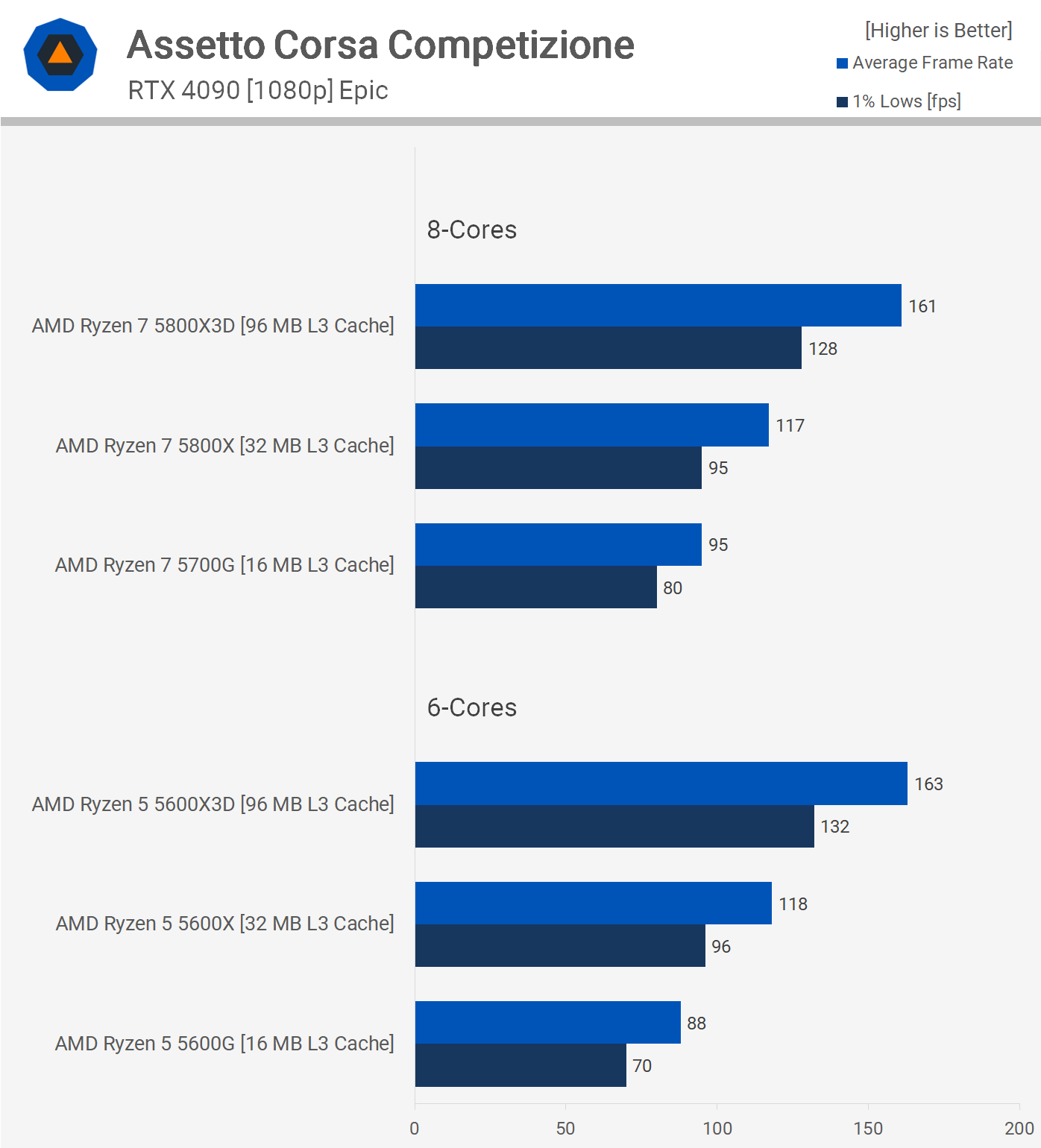

Assetto Corsa Competizione (ACC) is known to be a game that doesn't rely heavily on multiple threads but is very sensitive to cache size, and these CPUs highlight that. There's no significant difference between the 6 and 8-core parts here, particularly with the 32MB and 96MB models. There is an 8% margin favoring the 8-core 16MB chip, so the extra cores do seem to offer some benefit when cache is more limited.

Even when examining the 8-core parts, we see a 23% increase from the 5700G to the 5800X and then a significant 38% boost from the 5800X to the 5800X3D. This showcases just how much of a performance difference L3 cache can make, roughly a 70% boost from the 5700G to the 5800X3D, which is remarkable.

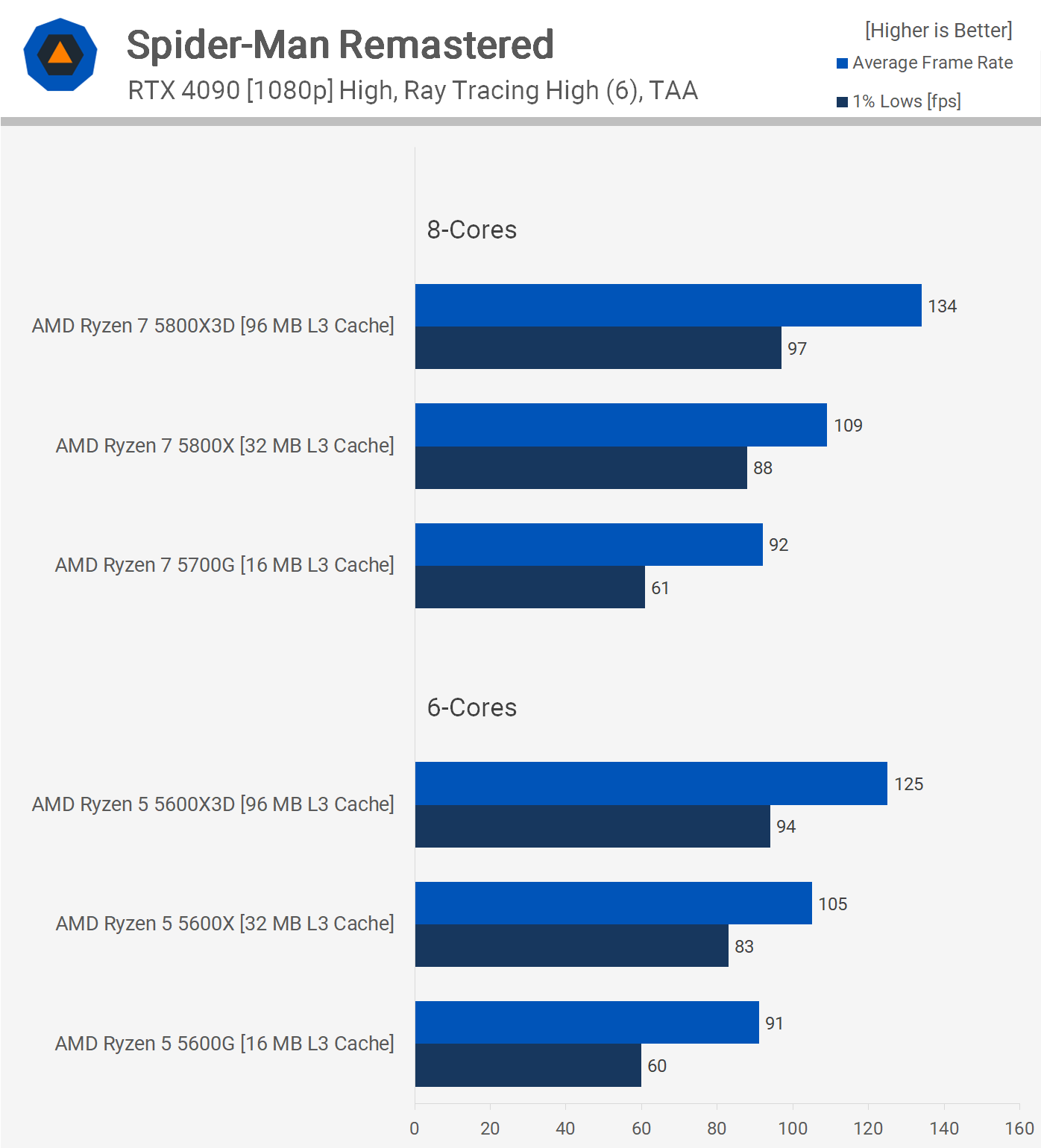

The Spider-Man Remastered results are intriguing because, again, although all CPUs tested feature the same Zen 3 cores clocked at similar frequencies, the resulting performance can vary widely. Core count doesn't seem to play a significant role: with 6 or 8 cores, the results are much the same. The largest core-count difference is seen when comparing the X3D models, as the 5800X3D was 7% faster than the 5600X3D on average.

What stands out is the slower performance of the 16MB models, just 60 fps for the low percentile. This means, when comparing low percentile performance, the 5800X was 44% faster than the 5700G.

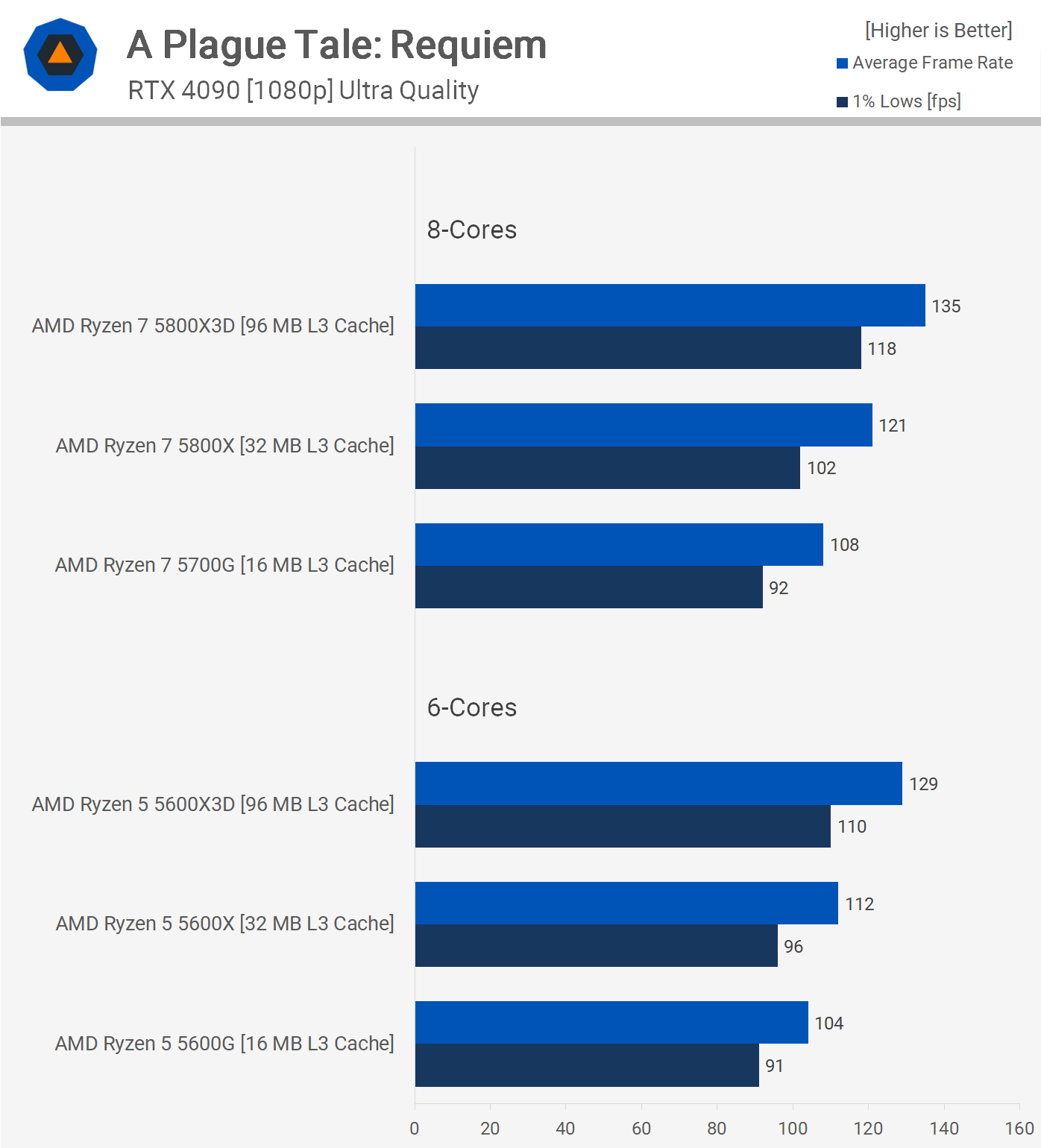

Performance in A Plague Tale: Requiem was closer than in other titles examined so far. There's also a slight performance advantage for the 8-core models, consistent across all three configurations.

What's different here is the smaller gains offered by the larger L3 cache, just a 12% increase from the 5700G to the 5800X, and then a further 12% from the 5800X to the 5800X3D. However, you're looking at a 25% uplift from the 5700G to the 5800X3D, which is a considerable improvement within the same generation.

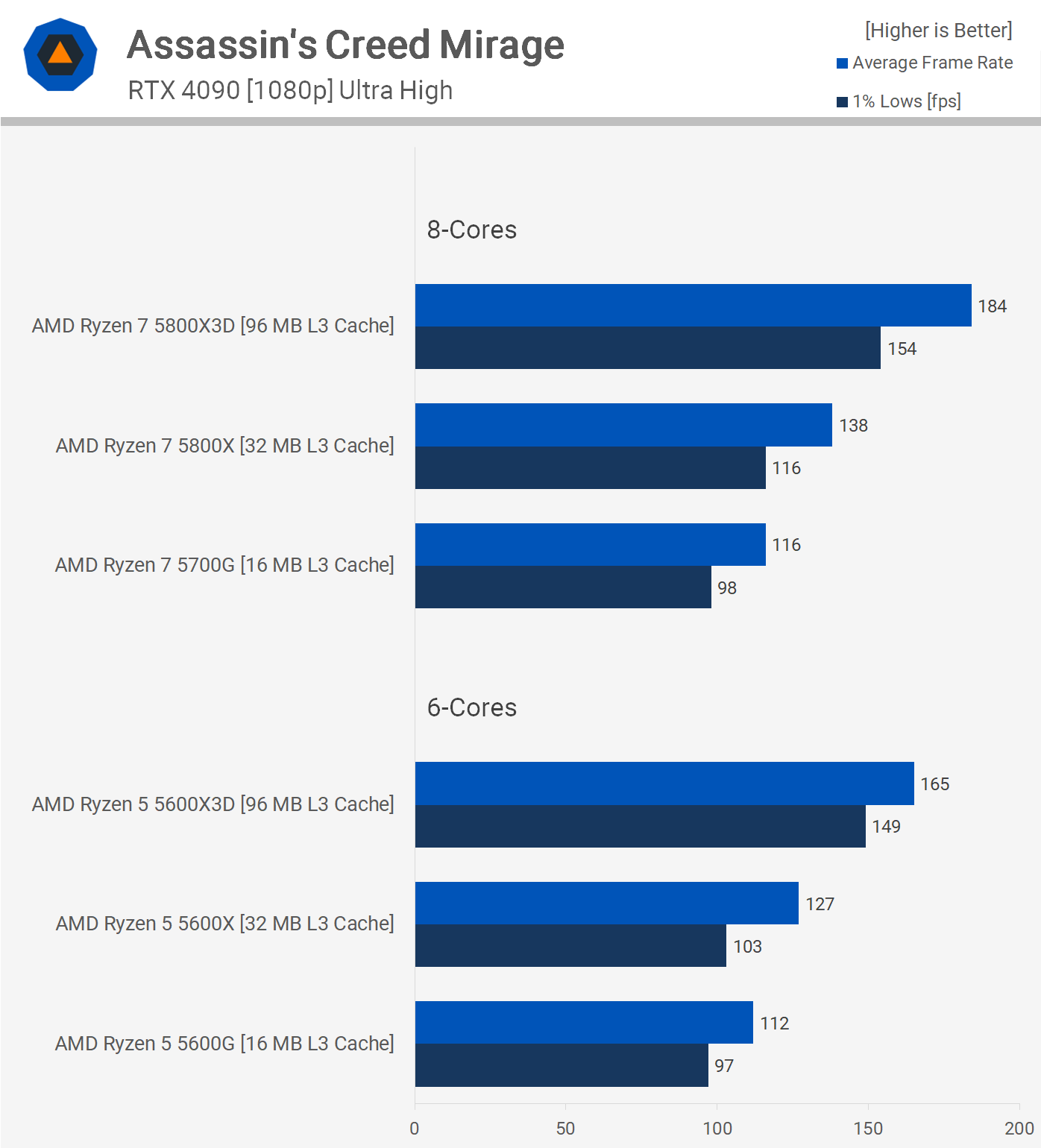

In Assassin's Creed Mirage, like most games, additional L3 cache is beneficial, but unlike most games, it also takes advantage of extra cores. Here, we're looking at a 12% boost for the 5800X3D over the 5600X3D, a 9% increase for the 5800X over the 5600X, and a 4% increase for the 5700G over the 5600G. Interestingly, the performance gains for the 8-core models increase with the L3 cache capacity.

Still, the increase in cache significantly outpaces the increase in cores; from the 5700G to the 5800X, we're looking at a 19% increase, and then a massive 33% boost from the 5800X to the 5800X3D.

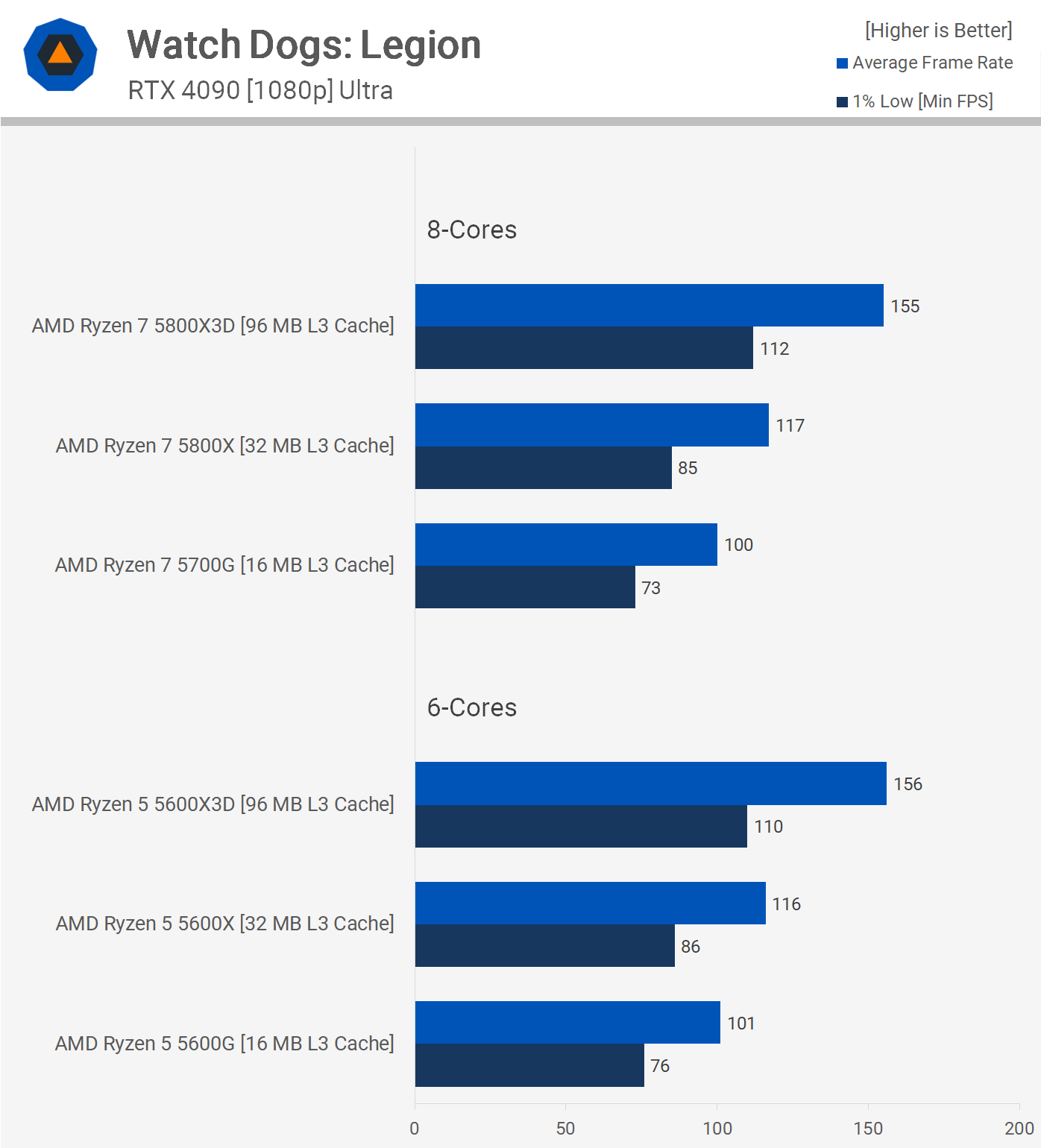

In Watch Dogs: Legion, extra cores don't bring benefits, with identical performance seen from the 6 and 8-core models. The real differences are observed when increasing cache capacity. Going from the 16MB models to 32MB improved performance by 17%, while going from 32MB to 96MB boosted performance by a further 32%. Altogether, you're looking at a 55% improvement from 16MB to 96MB.

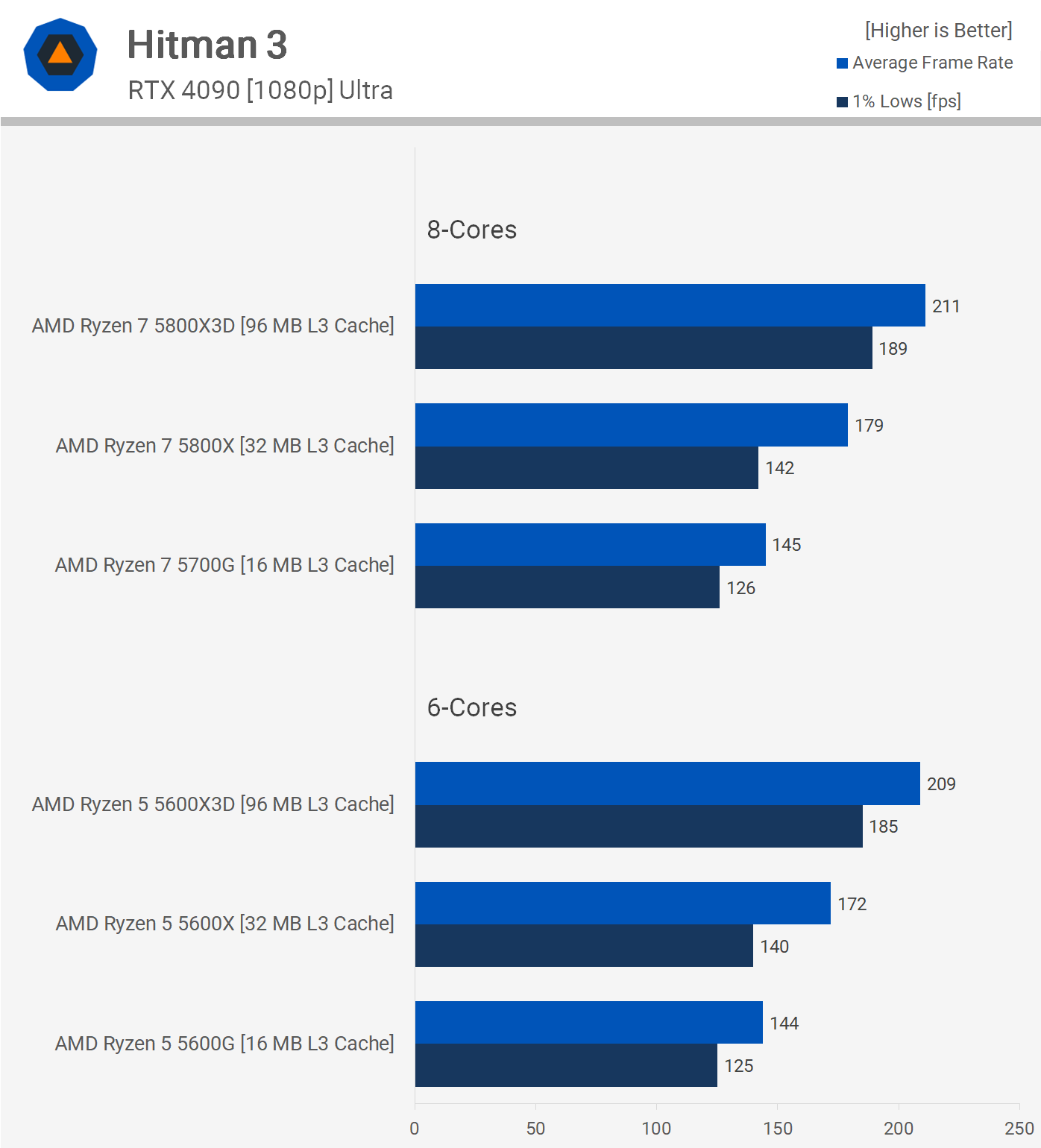

Finally, we have Hitman 3, and again, core count isn't that important here, but cache capacity is. From the 5700G to the 5800X, we're looking at a massive 23% increase, and then a further 18% increase from the 5800X to the 5800X3D. So in this example, we're seeing a 46% uplift from 16MB to 96MB.

12 Game Average

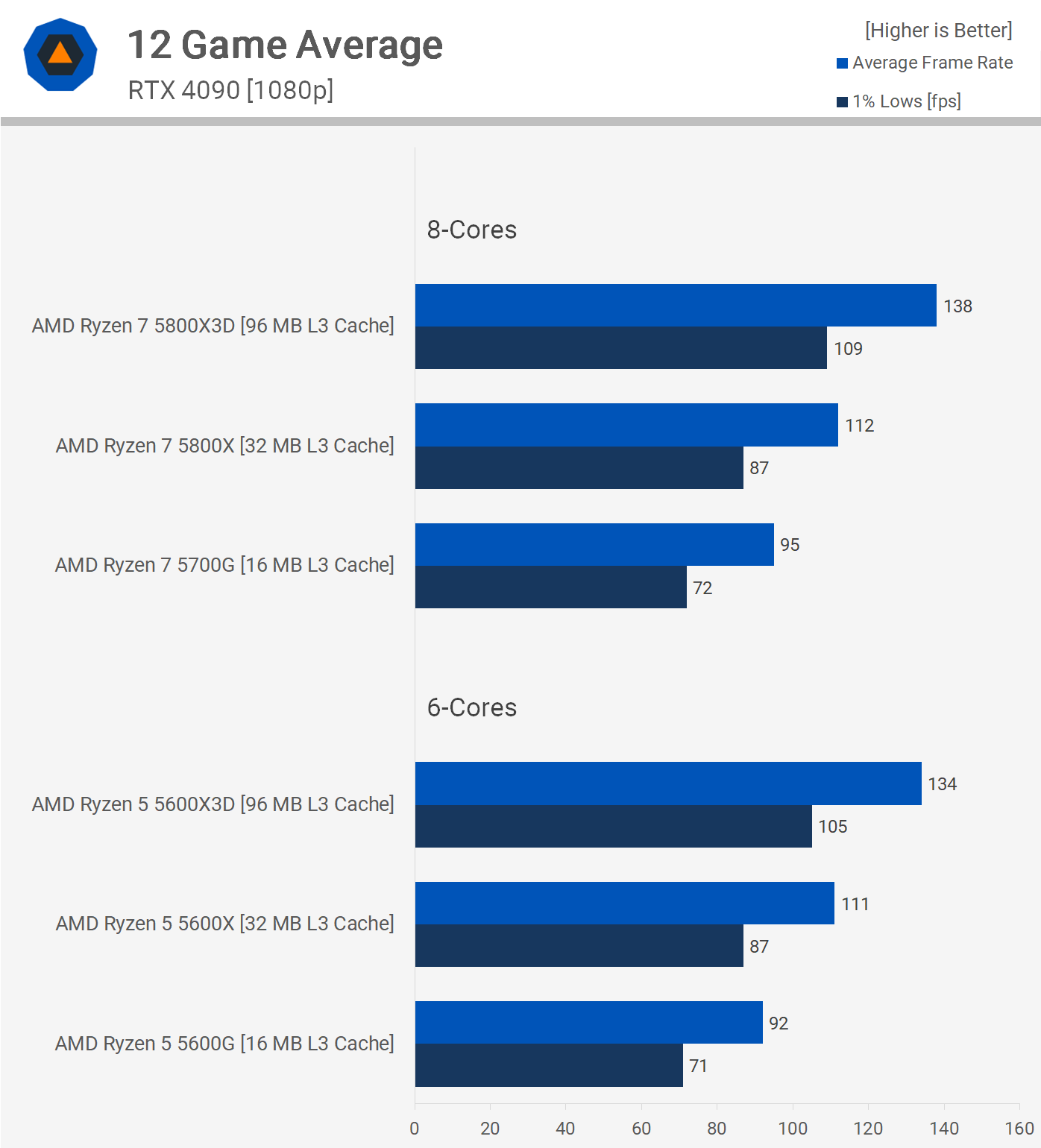

Here's the 12-game average data, where we observed at most a 3% performance advantage when going from 6 to 8 cores. Thus, it's clear that for most modern games, half a dozen Zen 3 cores are more than sufficient. What matters more is the speed of the cores, and a significant part of that equation for gaming is L3 cache.

By doubling the L3 cache capacity from 16MB to 32MB, we saw an average of 18% better performance. However, the gains didn't stop there; going from 32MB to 96MB netted us a further 23% improvement. This means, on average, we saw a 45% uplift when going from 16MB to 96MB, while using the same number of Zen 3 cores.

What We Learned

Cache matters, often more so than cores, when it comes to PC gaming performance. That's what we found with Intel's 10th-gen series two years ago, and if anything, it matters more than we realized back then. The arrival of AMD's 3D V-Cache processors has proven this beyond a shadow of a doubt, causing significant challenges for Intel in gaming performance, something the company cares deeply about.

This data also supports recommendations we made years ago. For example, we appreciated the value delivered by the Ryzen 5 5600 series and recommended it despite it having only 6 cores, which many at the time believed would be insufficient for future gaming.

Today, the 6 and 8-core Zen 3 parts are still delivering comparable performance, and of course, parts like the Ryzen 5 5600X are still very usable.

What we've never recommended for gaming, especially for those using discrete GPUs, are AMD's cut-down APUs such as the 5700G and 5600G. The smaller 16MB L3 cache significantly impacts gaming performance, and while the APUs are still usable, the 5600X and 5800X offer much better performance for the same money.

Of course, there are other disadvantages to the APUs, such as an older PCI Express spec and, in some instances, even fewer PCIe lanes. Still, the primary issue we have with those parts is the L3 cache capacity, and this data clearly shows why.

Now, getting back to core counts for a moment, we're sure the topic of "multi-tasking" will come up. We don't want to delve into that here, as we've already covered it in detail, but in a nutshell, the myth that 8-core CPUs will game better than 6-core models because of multi-tasking is false, and we've never seen anyone making this claim provide any scientific data to back it up.

We did run our own tests, and they showed the claim to be inaccurate. Moreover, for any serious multi-tasking, you'll find the same performance issues with the 5800X as you will with the 5600X. For example, updating or installing a Steam game in the background can lead to noticeable performance degradation, such as frame time stutters, but these occur regardless of the number of cores you have.

So, until games fully saturate the 5600X, you won't see an improvement with the 5800X, and by that time, we expect both CPUs will be struggling. Of course, there are instances where 8-core models of the same architecture are faster than their 6-core counterparts, but in those examples, the 6-core processors still deliver highly playable performance, making the core count argument moot, especially considering the cost difference.

As a more modern example, the Ryzen 5 7600 costs $210 and delivers comparable gaming performance to the 5800X3D. The Ryzen 7 7700 costs $310 – almost 50% more – and it would be challenging to find a game where the 7700 is even 20% faster than the 7600; in fact, such a scenario might not even exist. Looking over our most recent data from the 5700X3D review, the 7700X is just 4% faster than the 7600X on average, so in terms of value, the 6-core model is significantly better for gaming.
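The value argument can be made concrete with a quick calculation. This is only a sketch: it assumes the 4% average lead of the 7700X over the 7600X carries over to the non-X models, which the article does not state, and it uses the prices quoted above. All variable names are illustrative.

```python
# Prices quoted in this article (USD).
price_7600 = 210
price_7700 = 310

# Assumption: the 4% average lead of the 7700X over the 7600X also
# applies to the non-X models (not a measured 7700 vs. 7600 figure).
relative_perf_7600 = 1.00
relative_perf_7700 = 1.04

price_premium_pct = (price_7700 / price_7600 - 1.0) * 100.0
cost_per_perf_7600 = price_7600 / relative_perf_7600
cost_per_perf_7700 = price_7700 / relative_perf_7700

print(f"Price premium for the 7700: +{price_premium_pct:.1f}%")
print(f"Cost per unit of gaming performance: "
      f"7600 ${cost_per_perf_7600:.0f}, 7700 ${cost_per_perf_7700:.0f}")
```

Under those assumptions, the 8-core part costs close to 50% more for a low single-digit performance gain, so each unit of gaming performance is far more expensive on the 7700.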