We're back with another big Spider-Man Remastered benchmark, this time focusing on CPU performance. Last week we threw 43 GPUs at the game to see which models performed the best in their respective price ranges, but noted at the time that CPU performance also appeared to be a real concern for this game, with very high utilization seen with the Ryzen 7 5800X3D.

Following that performance review, many readers reported seeing much lower performance than what was shown with the 5800X3D, most likely because they were using a slower processor. Now we're going to get a good look at just how much difference your CPU can make when it comes to frame rates in Spider-Man Remastered.

For this CPU benchmark test we'll be using the GeForce RTX 3090 Ti and Game Ready display drivers 516.94. We'll be testing at 1080p, but also 1440p and 4K, using a range of quality settings including the medium preset, very high, and then a ray tracing configuration using the high quality preset, so there's loads of data to go over.

On the memory front, all CPUs have been tested using dual-rank, dual-channel DDR4-3200 CL14 memory, so it's an apples-to-apples comparison in terms of memory performance. That said, we have included a second configuration for the Core i9-12900K using DDR5-6400.

Compared to the GPU benchmark, we've tweaked our CPU pass. It's very similar, but we've included a few quick twitches with a web swing at the end. So with that, let's get into the results...

Benchmarks

Medium Quality - 1080p, 1440p, 4K

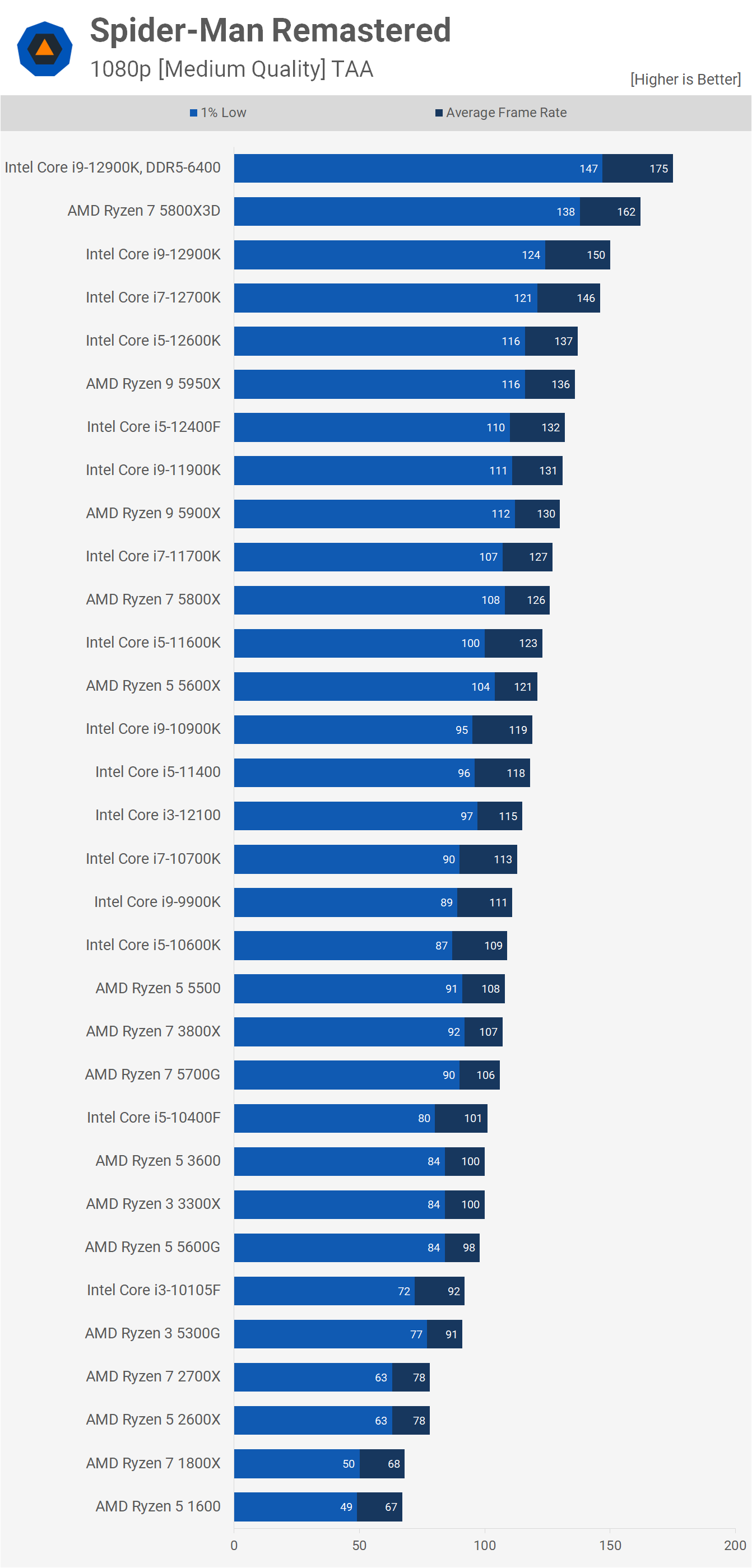

First up we have the medium quality preset data at 1080p, and we'll start from the top where we find the 12900K using DDR5-6400 memory allowing for 175 fps on average, which is a 17% improvement when compared to the DDR4-3200 configuration.

The 5800X3D which we used for the GPU benchmark is slower than the DDR5-enabled 12900K, trailing by a 7% margin with 162 fps on average. That said, it was 8% faster than the 12900K when using the same DDR4 memory.
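The margins quoted above are simple ratios of the average frame rates. As a rough sketch, the arithmetic looks like this. The helper names are hypothetical, and the DDR4 figure is back-calculated from the quoted 17% uplift rather than read off the chart, so treat it as an approximation.

```python
def percent_faster(a_fps: float, b_fps: float) -> float:
    """Return how much faster a is than b, as a percentage of b."""
    return (a_fps / b_fps - 1.0) * 100.0


def percent_slower(a_fps: float, b_fps: float) -> float:
    """Return how much slower a is than b, as a percentage of b."""
    return (1.0 - a_fps / b_fps) * 100.0


# Averages quoted in the text (medium preset, 1080p).
I9_12900K_DDR5 = 175.0
R7_5800X3D = 162.0

# Assumption: estimated from the quoted 17% improvement, not a measured value.
I9_12900K_DDR4_EST = I9_12900K_DDR5 / 1.17

if __name__ == "__main__":
    slower = percent_slower(R7_5800X3D, I9_12900K_DDR5)
    faster = percent_faster(R7_5800X3D, I9_12900K_DDR4_EST)
    print(f"5800X3D vs 12900K DDR5: {slower:.1f}% slower")
    print(f"5800X3D vs 12900K DDR4 (estimated): {faster:.1f}% faster")
```

Keep in mind that each percentage is relative to whichever part is being used as the baseline, so the same fps gap yields slightly different figures depending on the direction of the comparison.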

As you might have expected, the Intel Alder Lake CPUs outperform the standard Zen 3 parts, but in such a CPU intensive game you might not have expected the 12600K to match the 5950X and the E-core disabled 12400 to come in ahead of the 12400F.

Quite surprisingly, the 11th-gen Core processors also performed really well as the 11900K can be seen matching the 5900X which isn't unheard of, but half the time the 11900K ends up slower than the 10900K. The Core i7-11700K and Ryzen 7 5800X can also be seen neck and neck meaning the 12700K was 16% faster than AMD's 8-core Zen 3 part when using the same memory.

The 5600X and 11600K are also found delivering a similar level of performance, though the 1% lows of the 6-core Zen 3 part were slightly stronger, and much stronger than the 10-core 10900K. It's interesting to see the 5600X beating the 10900K and delivering almost 10% greater 1% low performance after all the talk of games needing more cores, especially in what appears to be one of the most CPU intensive games we've ever seen.

That's not to say the 8, 12 and 16-core Zen 3 CPUs aren't faster, they clearly are, but the 5600X had no issue delivering high refresh rate performance without a hitch.

Interestingly, the 4-core / 8-thread Core i3-12100 also managed to deliver perfectly playable smooth performance with 115 fps on average. We played the game for about half an hour with this CPU just to see what it was like and the numbers don't lie, the Core i3 was flawless despite a constant utilization of over 90% and often being maxed out.

It was also intriguing to see the i3-12100 beating the Core i7-10700K. Intel's 10th-gen isn't particularly impressive in this game, despite working well enough.

The bottom half of the graph is dominated by AMD. The primary explanation is that we didn't test many low-end or older Intel CPUs as it's a serious pain in the backside. All the AMD CPUs tested here were done with a single test system using the MSI X570S Carbon Max motherboard. For Intel, we were forced to use three different test systems for the results shown, and if we wanted to go back as far as the first-gen Ryzen era we'd need yet another test system.

We already know parts such as the Core i5-7600K are done for, so we didn't want to spend another day flogging that dead horse. So we're not bashing AMD here, rather we're saying Intel's older CPUs are too difficult and too slow to even bother with.

With that disclaimer out of the way, let's proceed... the Ryzen 5 5500 and Ryzen 7 3800X and 5700G were all good for around 106 - 108 fps in our test, with similar 1% low performance. Then we have the Core i5-10400F roughly matching the Ryzen 5 3600, Ryzen 3 3300X and Ryzen 5 5600G, all with around 100 fps on average.

The old Core i3-10105F which is basically the same as the 10100 managed to crack the 90 fps barrier, which is still very playable in this title and that put it alongside the Ryzen 3 5300G. Old Zen+ parts such as the Ryzen 7 2700X and Ryzen 5 2600X performed poorly relative to more modern processors, but we're still looking at 78 fps on average with 1% lows of 63 fps when paired with high quality memory.

The first-gen Ryzen CPUs which would be going up against Intel 7th-gen dropped below 60 fps for the 1% lows, so you'll certainly notice some slowdowns if you're used to 60 fps-plus.
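For readers less familiar with the metric, a 1% low figure can be derived from a frame-time log roughly as follows. This is a minimal sketch of one common definition (the average frame rate across the slowest 1% of frames), not necessarily the exact method behind the charts here, as capture tools differ. The function names and the sample frame times are made up for illustration.

```python
def average_fps(frame_times_ms):
    """Average frame rate over the whole capture."""
    return 1000.0 * len(frame_times_ms) / sum(frame_times_ms)


def one_percent_low_fps(frame_times_ms):
    """Average frame rate across the slowest 1% of frames (at least one frame)."""
    slowest_first = sorted(frame_times_ms, reverse=True)
    count = max(1, len(slowest_first) // 100)
    worst = slowest_first[:count]
    return 1000.0 * len(worst) / sum(worst)


if __name__ == "__main__":
    # Hypothetical capture: 99 smooth frames at 8 ms and a single 20 ms hitch.
    capture = [8.0] * 99 + [20.0]
    print(f"average: {average_fps(capture):.1f} fps")
    print(f"1% low:  {one_percent_low_fps(capture):.1f} fps")
```

In this made-up capture a single long frame barely moves the average but drags the 1% low down sharply, which is why the two figures are reported side by side.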

We're not going to pore over the 1440p medium data as we're still heavily CPU limited and therefore the data doesn't change much. We're looking at very similar margins and around a 3% decline in performance at the high-end as the RTX 3090 Ti drops a few frames at the higher resolution, so let's move on to 4K now...

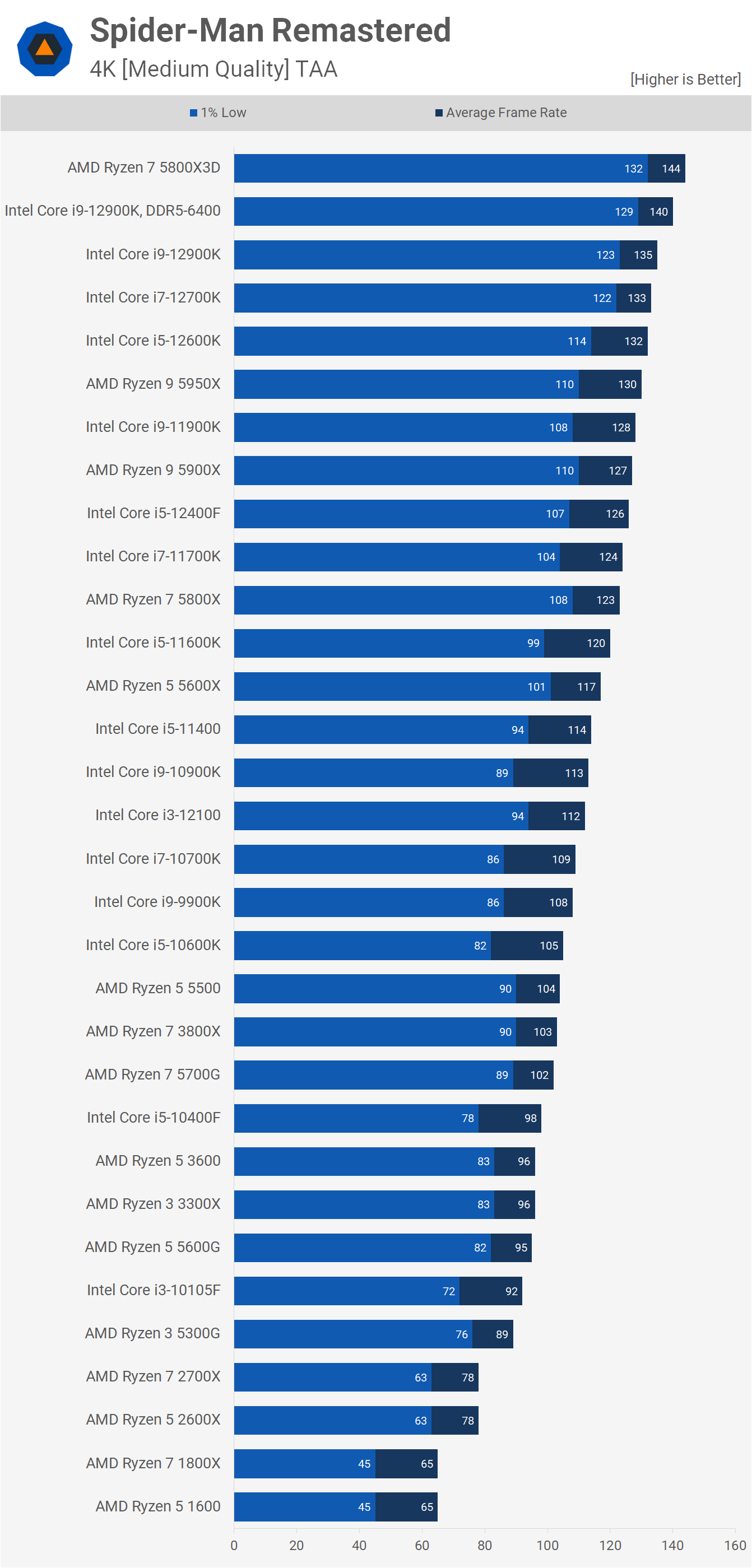

The game becomes more GPU bound at 4K as expected, though this is only true when using a high-end CPU. Interestingly, the 5800X3D overtakes the Core i9-12900K here, even when pairing the Intel CPU with DDR5 memory. The margin is very small though, just a 3% win for the 5800X3D.

The Core i9-12900K was also 4% faster using the DDR5-6400 memory as opposed to DDR4-3200, so for those of you using a high-end GPU targeting high resolutions such as 4K, which we assume you'd do for a single player action adventure game, memory performance is far less of an issue.

The 5950X was similar to the 12600K and 11900K, pushing 130 fps while the 5900X was just 3 fps slower, matching the much cheaper 12400F, a personal favorite of ours.

Further down, we find the 5600X with 117 fps and although that only made it slightly faster than the 11400, it did outpace the 10900K. The Core i3-12100 was again impressive with strong 1% low performance and 112 fps on average.

Below that we find the 10700K, 9900K and 10600K all delivering similar performance with just over 100 fps on average. The Ryzen 5 5500, Ryzen 7 3800X and 5700G also delivered a similar level of performance. Again, it's not until we get to the Zen and Zen+ parts that performance starts to drop away.

Very High Quality - 1080p, 1440p, 4K

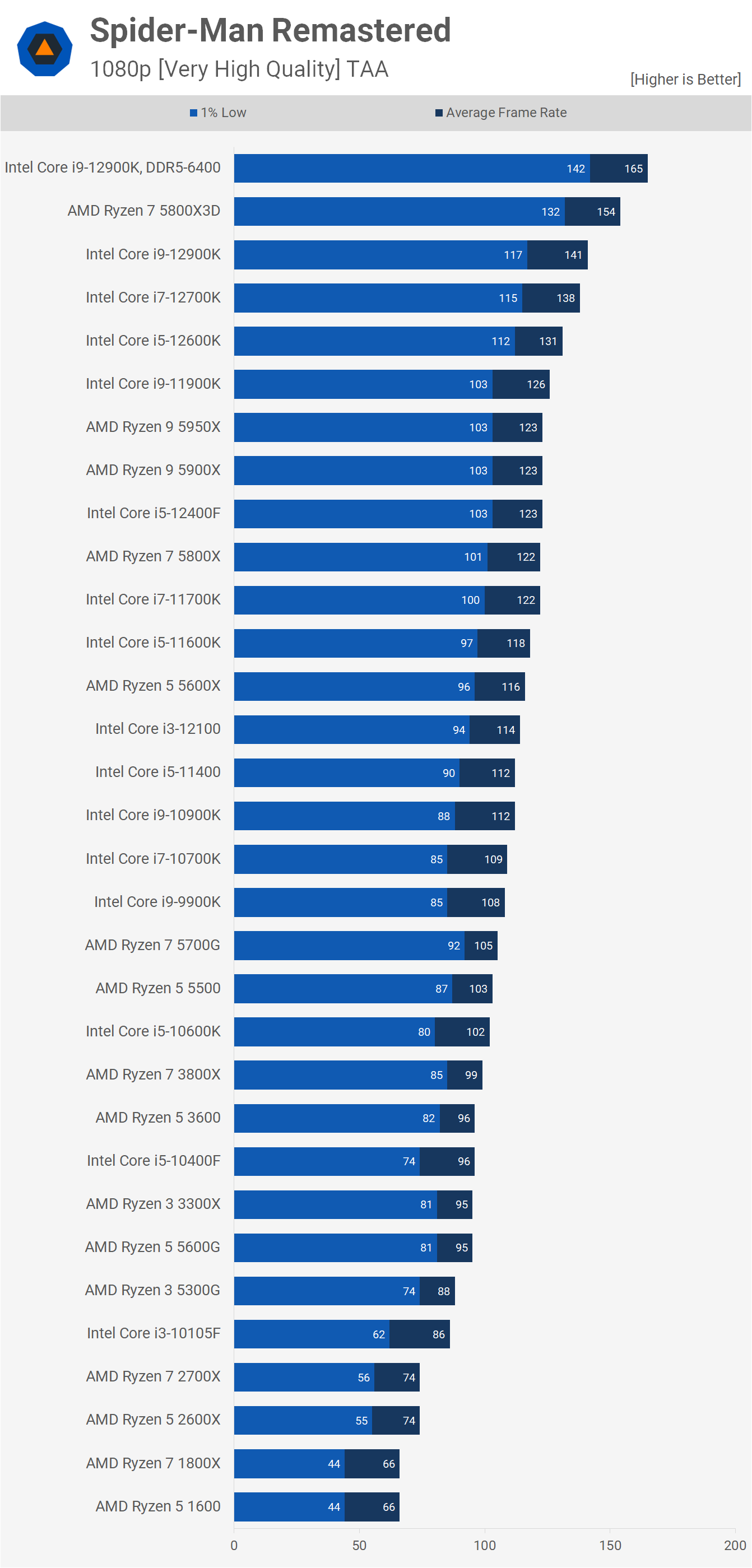

Moving to the very high quality preset, we'll once again start with 1080p results. Once again, the 12900K using DDR5-6400 memory topped our chart, this time with 165 fps making it 7% faster than the 5800X3D and 17% faster than its DDR4-3200 configuration. That means when using the same DDR4 memory, the 5800X3D was actually 9% faster than the 12900K.

But it also means that the 12900K, 12700K and 12600K are all a good bit faster than the non-3D V-Cache Zen 3 processors, such as the 5950X, 5900X, 5800X and 5600X. The 8, 12 and 16-core Zen 3 processors all delivered basically the same result with the 5600X dropping off the pace a little to come in 6% slower, but again frame pacing was good.

Intel's 11th-gen was again impressive and the 11400 came in just behind the 5600X, as did the 12th-gen Core i3-12100. Intel's much older 10th-gen wasn't particularly impressive and the 10-core 10900K couldn't even beat the 5600X.

Further down, we see that the Ryzen 5 5500 was able to beat the Core i5-10600K and the Ryzen 5 3600 matched the average frame rate of the Core i5-10400F, but delivered much stronger 1% lows. The Ryzen 3 5300G performed surprisingly well and easily outperformed the Core i3-10105F, particularly when looking at the 1% lows. At the very bottom of our graph we find the Zen and Zen+ processors with sub 60 fps 1% lows.

The 1440p data is very much CPU limited once again and therefore the results don't change all that much. We see a bit of movement at the top end, but other than that the standings and margins are much the same as what was just shown at 1080p using the very high quality preset. This means for many of you with a mid-range to high-end GPU, lowering the quality settings won't actually boost your frame rate when playing Spider-Man Remastered.

Most CPUs such as the Core i9-10900K dropped just 2 fps when going from 1080p to 1440p, and then we saw just an 8 fps reduction from 1440p medium to very high, so you might as well crank up those visuals and enjoy the single player title in all of its glory.
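The CPU-limited behavior described above can be pictured with a very simple model: the delivered frame rate is roughly capped by whichever of the CPU or GPU is slower. The sketch below is only an illustration of that idea. The caps are hypothetical numbers chosen for the example, not measurements from our testing, and real games don't scale this cleanly.

```python
def delivered_fps(cpu_cap_fps: float, gpu_cap_fps: float) -> float:
    """Simplified model: the slower component sets the frame rate."""
    return min(cpu_cap_fps, gpu_cap_fps)


def limiting_component(cpu_cap_fps: float, gpu_cap_fps: float) -> str:
    """Name the component that is holding back the frame rate."""
    if cpu_cap_fps < gpu_cap_fps:
        return "CPU"
    if gpu_cap_fps < cpu_cap_fps:
        return "GPU"
    return "balanced"


# Hypothetical caps: one CPU, and a GPU whose cap falls as resolution rises.
CPU_CAP_FPS = 120.0
GPU_CAP_FPS = {"1080p": 200.0, "1440p": 160.0, "4K": 95.0}

if __name__ == "__main__":
    for resolution, gpu_cap in GPU_CAP_FPS.items():
        fps = delivered_fps(CPU_CAP_FPS, gpu_cap)
        limit = limiting_component(CPU_CAP_FPS, gpu_cap)
        print(f"{resolution}: {fps:.0f} fps ({limit} limited)")
```

In this toy example the frame rate is identical at 1080p and 1440p because the CPU cap is reached first, and only at 4K does the GPU become the limit.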

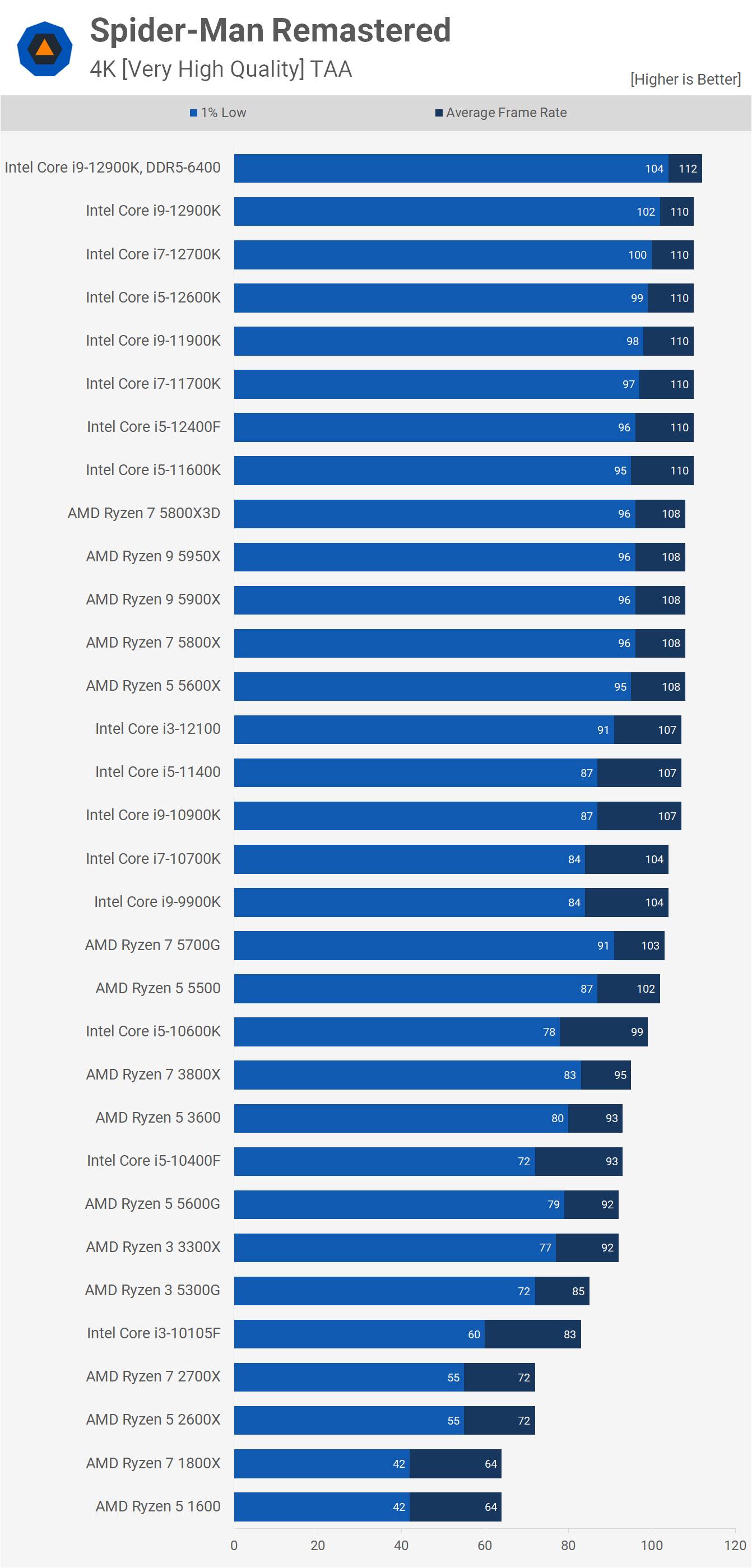

Once we reach 4K the game becomes GPU limited, even with an extreme high-end graphics card such as the GeForce RTX 3090 Ti. We still see an improvement in 1% lows, but they're not nearly as significant as what was shown when more CPU limited at lower resolutions such as 1080p and 1440p.

The 1% lows of the 12400F, for example, trailed the DDR5 enabled 12900K by just an 8% margin here whereas at 1440p that margin was 26%. The Zen 3 CPUs topped out at 108 fps which is just 2 fps less than that of the Intel CPUs, though we are seeing slightly slower 1% lows of up to 6 fps.

It's worth noting that from the Core i3-12100 and up you'd have a hard time telling any of these configurations apart under these test conditions, which frankly we'd consider to be the most real-world testing shown so far for someone using an RTX 3090 Ti.

Closely following the 12100 we find the 11400, 10900K, 10700K and 9900K, all of which delivered highly playable performance. The Ryzen 7 5700G and Ryzen 5 5500 were also there, both delivering strong 1% low performance.

Then at around 90 fps we find the Ryzen 3 5300G, 3300X, 5600G, 10400F, 3600 and 3800X, all right around that mark. The Core i3-10105F did have some consistency issues, as did the Zen and Zen+ processors.

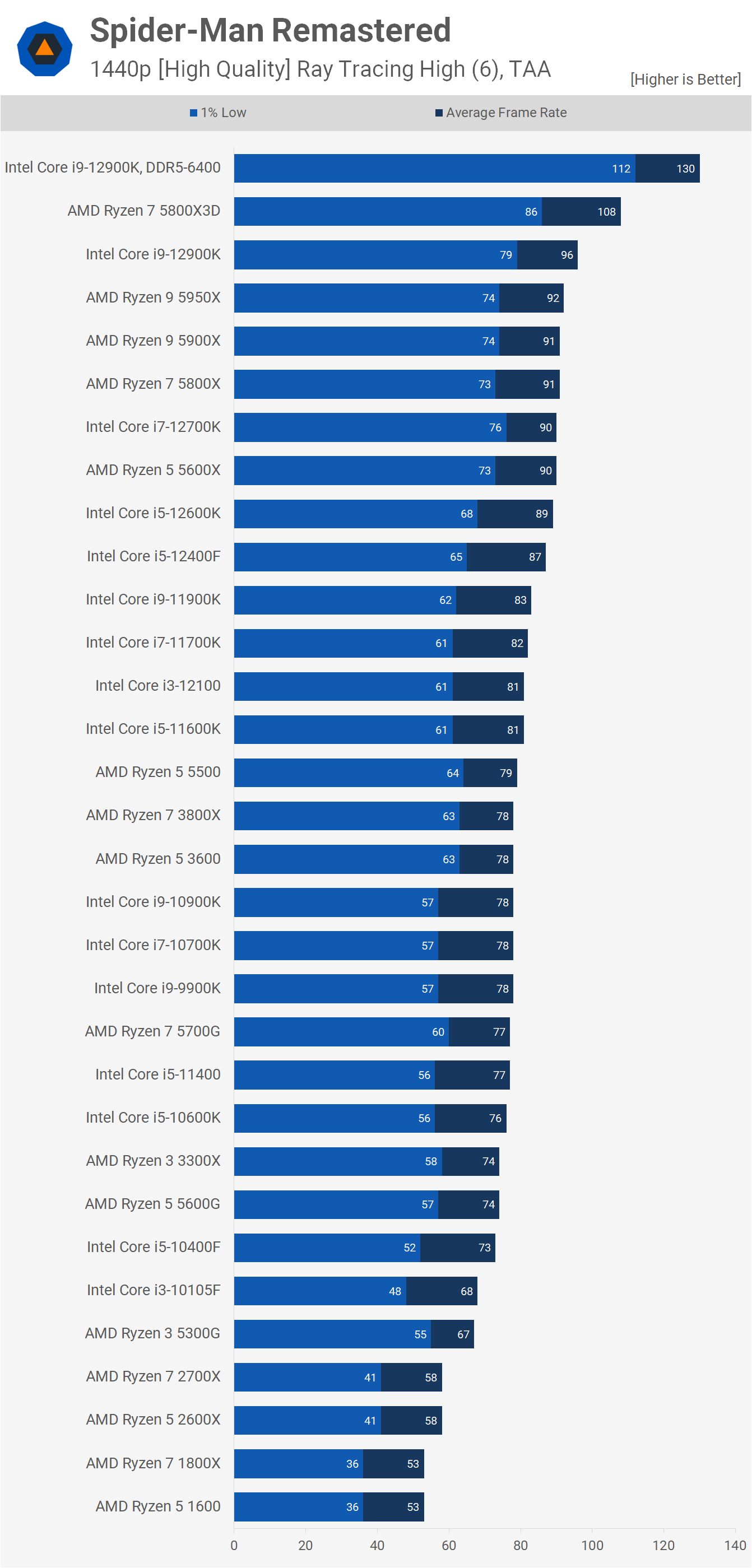

Enabling Ray Tracing

As you would expect, enabling ray tracing even with the slightly dialed down 'high' quality preset greatly reduces frame rates, even at 1080p. But as many have noted, this does increase CPU usage further. Given that, these results might surprise you.

Despite the higher CPU usage, lower core count CPUs don't suffer. The Core i3-12100, for example, was just 6% slower than the 12400F and you can attribute that to the difference in cache capacity rather than core count. It's the same deal when looking at Zen 3 processors, the 5600X is now much closer to the 5800X, 5900X and 5950X, basically matching them.

Despite that, the DDR5-enabled Core i9-12900K runs away with the prize, delivering almost 20% more performance than the 5800X3D and a massive 36% improvement over its DDR4 configuration.

As far as we can tell, enabling ray tracing in this game significantly increases the amount of traffic crossing the PCI Express bus. It also increases the amount of RAM used and therefore the amount of data being shifted in and out of memory. So although CPU usage has increased, it appears as though memory bandwidth is the primary bottleneck for most processors.

Throwing more cores at the problem isn't the solution, which is a bit surprising given the game will spread the load quite well across even 12 cores, but like most games it does appear to be primary thread dependent.

That means individual core performance is key and it's why the 12900K is so fast relative to the 5950X, for example. It's also why the 5950X, 5900X, 5800X and 5600X are all very similar, while the difference in performance between the 12th-gen Core processors can be largely attributed to L3 cache capacity.

Looking at CPU utilization alone, we had expected the Core i3-12100 to crumble, but that wasn't the case and in fact it comfortably beat the 10-core Core i9-10900K, suggesting that IPC is king here rather than core count, within reason of course.

More evidence of this can be seen with AMD's APUs. The 5700G has 8 cores / 16 threads but half the L3 cache of the Zen 3 CPU models such as the 5600X, which were almost 20% faster. The 5300G halves the cache again and this is likely why the 5700G was 15% faster.

The older Zen and Zen+ processors aren't particularly impressive when it comes to memory performance and like the APUs they also lack PCIe 4.0 support, which could be playing a role here. Despite packing 8 cores / 16 threads, the 1800X and 2700X really struggle in this title.

As was shown before, moving to 1440p from 1080p does little to change the margins and this was also true with ray tracing enabled. The Core i9-12900K DDR5 configuration was much faster than the DDR4 version, as well as AMD's 5800X3D. Then the rest of the results which were very heavily CPU limited remained almost the same.

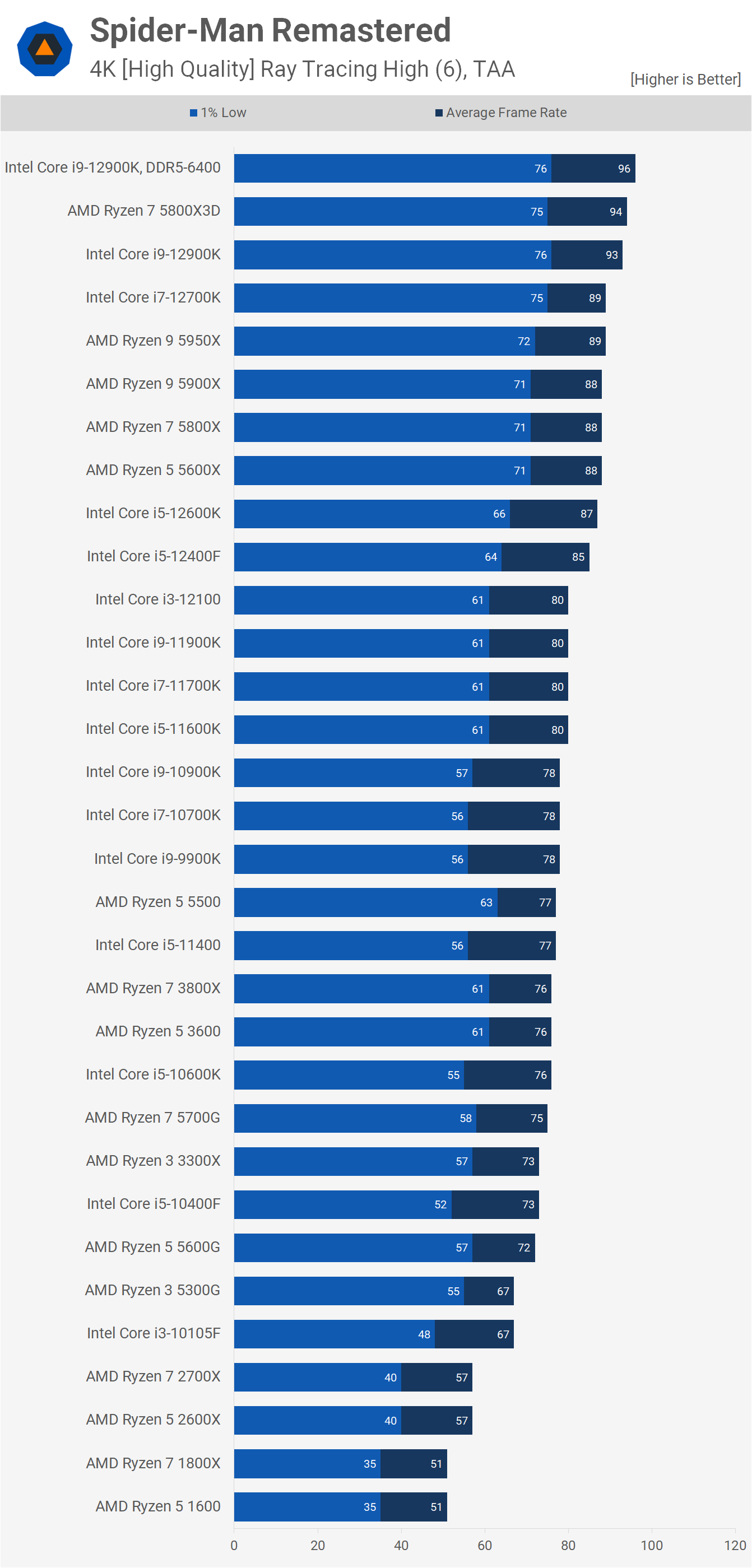

Now as we move to 4K, the game becomes increasingly GPU limited at the high-end, but still very much CPU limited for the bulk of the results. The GPU limit at the high-end means the DDR5 configuration for the 12900K offered very little benefit over DDR4 and that the 5800X3D was able to roughly match its performance.

Then the Core i7-12700K and Zen 3 processors all delivered about the same level of performance, along with the 12600K and even the 12400F. The next tier down managed to maintain 60 fps for the 1% low and this included the 12100, 11900K, 11700K and 11600K.

The Ryzen 5 5500, Ryzen 7 3800X and Ryzen 5 3600 also managed to break the 60 fps barrier for the 1% lows. In fact, most of the CPUs tested delivered satisfactory performance under these test conditions with the exceptions being the Core i3-10105F and Zen and Zen+ CPUs.

Resizable BAR on/off

Before wrapping up the testing, here's a look at how Resizable BAR influences performance in Spider-Man Remastered and as you can see it doesn't.

Results should be the same with or without this PCI Express feature enabled, which is true of most games. The only major exception would be Intel Arc GPUs, which will likely benefit from the technology, but this isn't true for Radeon or GeForce GPUs. We're showing the Radeon RX 6800 XT here.

Memory Performance

DDR4

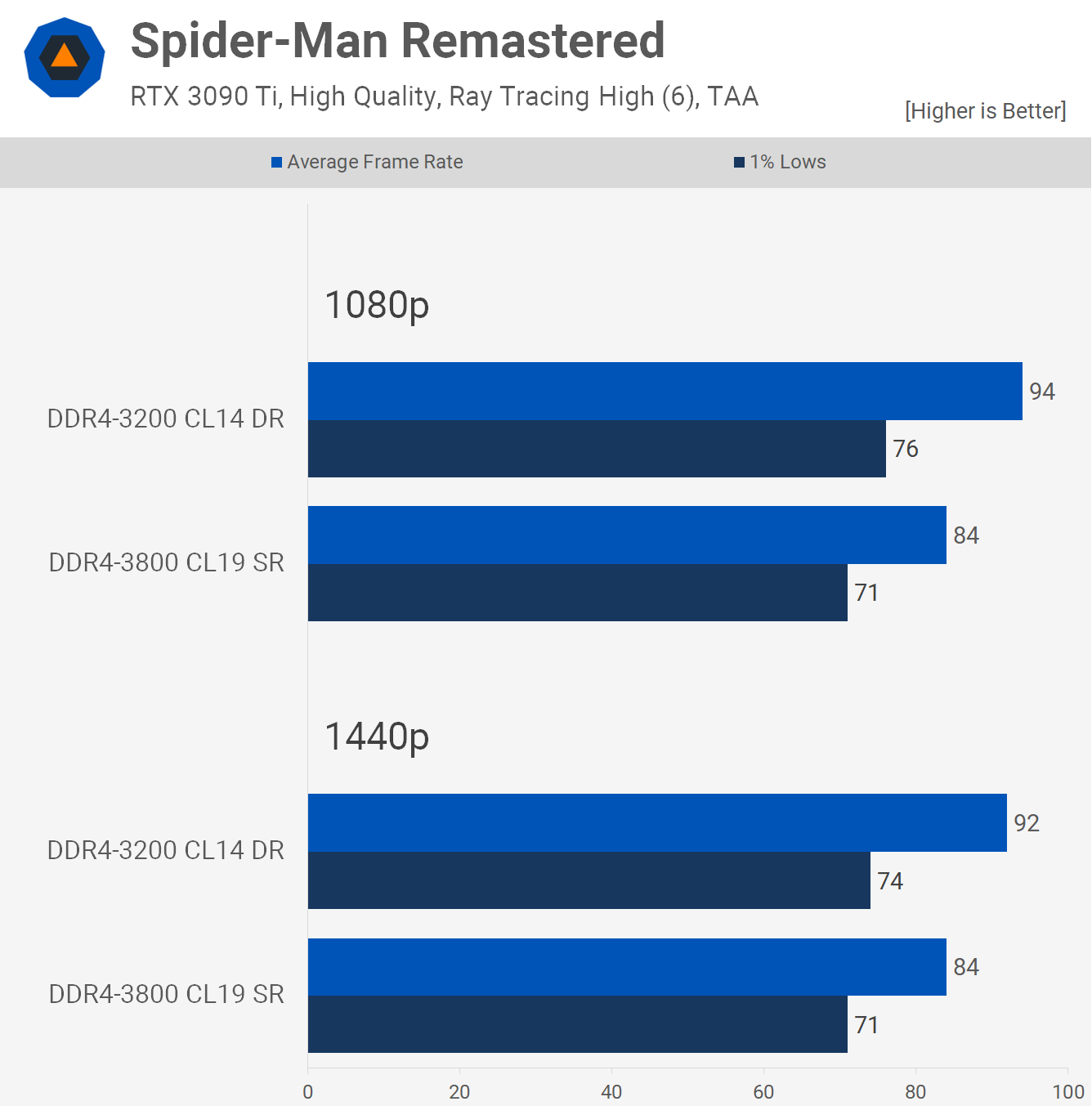

Now here's a look at DDR4-3200 CL14 performance in a single-rank and then the dual-rank configuration that we used for testing. As we've found in the past, dual-rank memory typically offers good performance gains in games and here we're looking at an 8% uplift at 1080p and 6% at 1440p, certainly not massive but still impressive given frequency and timings remain the same.

Now here's a look at some cheap DDR4-3800 CL19 modules versus our dual-rank DDR4-3200 CL14 memory. This time we're looking at a 10-12% performance advantage using our test configuration over high clocked, but also higher latency memory.
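One way to see why the higher clocked kit doesn't automatically win is to convert the CAS latency from clock cycles into nanoseconds. The sketch below does that using the standard DDR relationship (two transfers per clock) and also estimates theoretical peak bandwidth for a dual-channel setup with 64-bit channels. It is a simplification: it ignores secondary timings and rank configuration, both of which also affect real-world results, and the function names are hypothetical.

```python
def cas_latency_ns(transfer_rate_mts: float, cas_cycles: int) -> float:
    """First-word CAS latency in nanoseconds.

    DDR memory transfers twice per clock, so one clock period
    lasts 2000 / (MT/s) nanoseconds.
    """
    return cas_cycles * 2000.0 / transfer_rate_mts


def peak_bandwidth_gbs(transfer_rate_mts: float, channels: int = 2,
                       bytes_per_transfer: int = 8) -> float:
    """Theoretical peak bandwidth in GB/s (64-bit channels assumed)."""
    return transfer_rate_mts * bytes_per_transfer * channels / 1000.0


if __name__ == "__main__":
    for name, rate, cas in (("DDR4-3200 CL14", 3200, 14),
                            ("DDR4-3800 CL19", 3800, 19)):
        print(f"{name}: {cas_latency_ns(rate, cas):.2f} ns CAS, "
              f"{peak_bandwidth_gbs(rate):.1f} GB/s peak")
```

By this measure the DDR4-3800 CL19 kit has more theoretical bandwidth but a longer CAS latency in absolute terms, which is consistent with the lower-latency DDR4-3200 CL14 configuration coming out ahead in this test.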

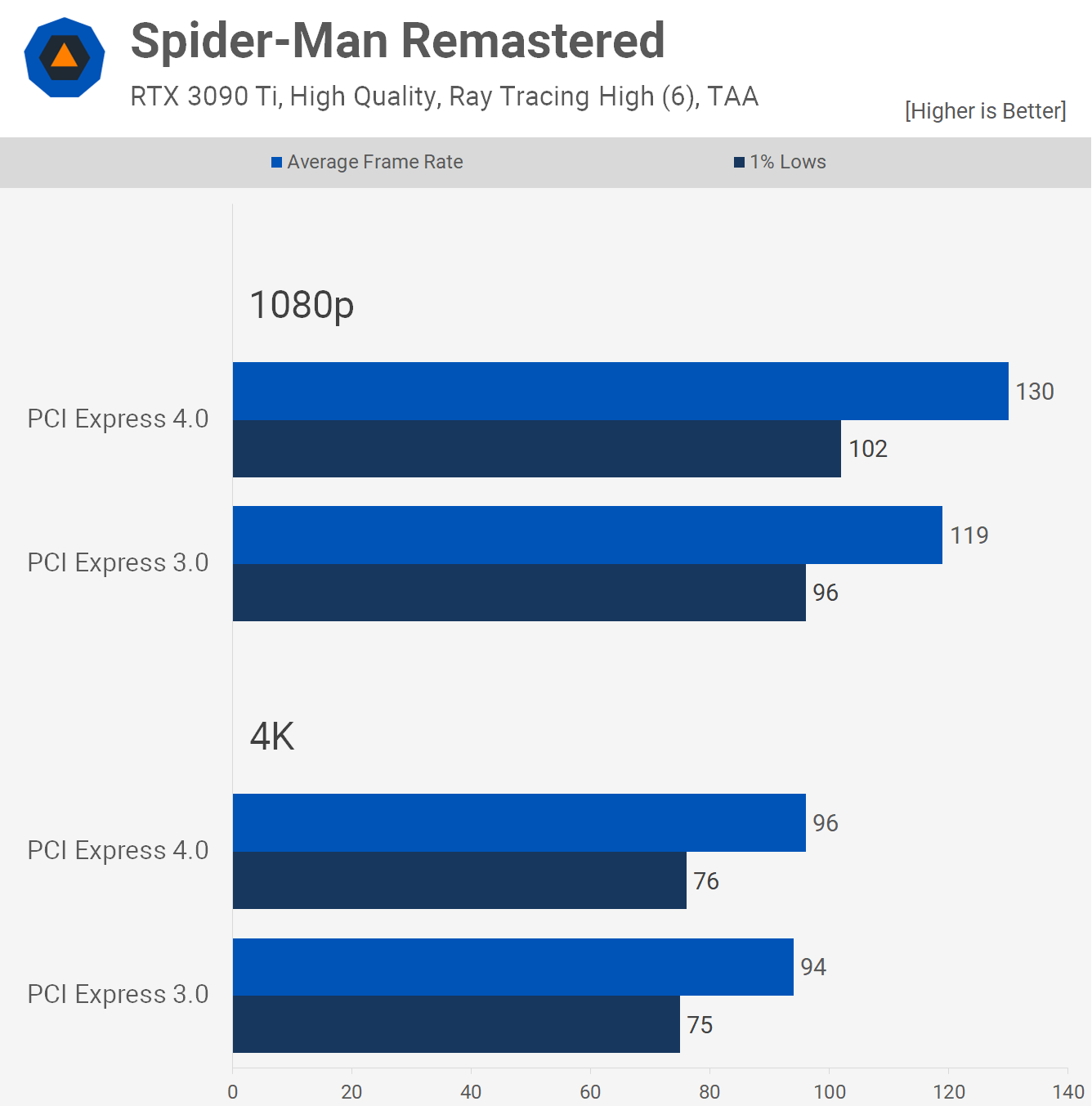

Finally, we wanted to take a quick look at PCI Express performance with the Core i9-12900K using DDR5-6400 memory. For a bit of context, the biggest difference we've seen previously using an RTX 3080 between PCIe 3.0 and 4.0 was about 5%, though admittedly we haven't looked at this for quite some time.

We were shocked to find a 9% performance improvement at 1080p using PCIe 4.0 over 3.0, which is quite a large margin. Of course, as we've found in the past, that margin almost entirely evaporates at 4K where we're driving fewer frames. Still, the 1080p results were eye-opening and it means those of you using not just slower DDR memory but also an older PCIe interface could be sacrificing quite a lot of performance.

This is possibly another reason why Intel's 10th-gen performed a bit weaker than expected as they are limited to PCIe 3.0.
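For a sense of scale, the theoretical bandwidth of the two PCIe generations can be worked out from their per-lane transfer rates (8 GT/s for PCIe 3.0 and 16 GT/s for PCIe 4.0, both using 128b/130b encoding). The sketch below assumes a x16 slot and ignores protocol overhead, so real throughput will be lower. The 150 fps figure is a hypothetical frame rate used only to illustrate how the per-frame budget shrinks as frame rates climb.

```python
PCIE3_GT_PER_S = 8.0   # per-lane transfer rate, PCIe 3.0
PCIE4_GT_PER_S = 16.0  # per-lane transfer rate, PCIe 4.0


def pcie_bandwidth_gbs(gt_per_s: float, lanes: int = 16) -> float:
    """Theoretical one-direction bandwidth in GB/s with 128b/130b encoding."""
    encoding_efficiency = 128.0 / 130.0
    return gt_per_s * encoding_efficiency / 8.0 * lanes


def per_frame_budget_mb(bandwidth_gbs: float, fps: float) -> float:
    """Upper bound on data that could cross the bus per frame, in MB."""
    return bandwidth_gbs * 1000.0 / fps


if __name__ == "__main__":
    hypothetical_fps = 150.0  # assumption for illustration only
    for name, rate in (("PCIe 3.0 x16", PCIE3_GT_PER_S),
                       ("PCIe 4.0 x16", PCIE4_GT_PER_S)):
        bandwidth = pcie_bandwidth_gbs(rate)
        budget = per_frame_budget_mb(bandwidth, hypothetical_fps)
        print(f"{name}: {bandwidth:.2f} GB/s, "
              f"about {budget:.0f} MB per frame at {hypothetical_fps:.0f} fps")
```

The higher the frame rate, the less data can be moved per frame, which fits with the PCIe margin showing up at 1080p and almost disappearing at 4K.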

A Word About Stuttering

Something a lot of people mentioned in the GPU benchmark comments was that Spider-Man Remastered has a lot of performance related issues, from frame stuttering to memory leaks. We certainly don't doubt those claims for a second as there's no shortage of reports online, but we can tell you that we ran into no such issues, either playing the game which we've done now for several hours using various configurations, or from our detailed benchmarking.

The game has been buttery smooth for us, with the exception of a few older configurations using Zen and Zen+ processors, and we'd expect the experience to be dramatically worse with the likes of the older Core i5-7600K as the Core i3-10105F – which is basically a 7700K, which is basically a 6700K – wasn't very good.

We cannot tell you why our experience was so flawless compared to what many are reporting. As always with computers, it could be any number of things, from the exact hardware configuration to what we do or don't have installed in Windows.

Hopefully, though, patches continue to address any performance related issues. Assuming you're in the clear like we are, most CPUs will enable a good gaming experience, particularly newer models. AMD's Zen 3 CPU range performed well, as did Intel's 11th and 12th-gen series.

It was interesting to see how the game behaved with ray tracing enabled, and if you were to look at CPU utilization alone, you'd be given the wrong impression. Provided you have 4 cores with 8 threads on a modern processor, the game should run just fine. Rather, the key is IPC performance with a heavy emphasis on memory and PCIe bandwidth, or at least that was the case with the 12900K.

We probably don't need to waffle on any longer, the results have said it all. This was a massive undertaking so we hope you found the results interesting, we know we did and Spider-Man Remastered is now going to join the many games we test with regularly.