The AMD-Nvidia war is about to go dual GPU. Following the leak of AMD Radeon HD 6970 benchmark numbers, a slide from what appears to be a presentation on the AMD Radeon HD 6990 (codenamed Antilles) has leaked, courtesy of user Gast on the 3DCenter forums.

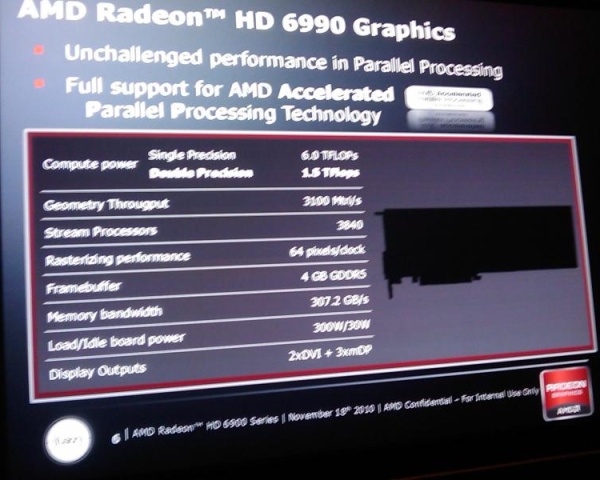

If the slide is legitimate, and it appears to be, AMD's next-generation dual-chip flagship will carry two Cayman GPUs with 1920 stream processors each, for a total of 3840, along with 4GB of GDDR5 memory clocked at 4.80GHz. It will be equipped with two DVI-I and three Mini DisplayPort (mDP) connectors. Power consumption is listed at 300W under load and around 30W when idle. The card is rated at 6.0 trillion floating point operations per second (TFLOPS) of single-precision performance and up to 1.5 TFLOPS of double-precision performance.
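As a rough sanity check on those figures, the short Python sketch below recomputes the stream processor total, the ratio of double-precision to single-precision throughput, and the core clock the 6.0 TFLOPS figure would imply. The clock estimate rests on an assumption that is not on the slide: that each stream processor performs one fused multiply-add (counted as 2 floating point operations) per clock. The function and constant names are purely illustrative.

```python
# Figures quoted from the leaked slide (unconfirmed).
GPUS = 2
STREAM_PROCESSORS_PER_GPU = 1920
SP_TFLOPS = 6.0  # single-precision rating
DP_TFLOPS = 1.5  # double-precision rating

# Assumption (not stated on the slide): each stream processor retires one
# fused multiply-add, counted as 2 floating point operations, per clock.
FLOPS_PER_SP_PER_CLOCK = 2


def total_stream_processors(gpus: int, per_gpu: int) -> int:
    """Total stream processors across all GPUs on the card."""
    return gpus * per_gpu


def implied_clock_mhz(tflops: float, stream_processors: int) -> float:
    """Core clock (MHz) implied by a peak TFLOPS rating under the FMA assumption."""
    flops = tflops * 1e12
    hertz = flops / (stream_processors * FLOPS_PER_SP_PER_CLOCK)
    return hertz / 1e6


total = total_stream_processors(GPUS, STREAM_PROCESSORS_PER_GPU)
clock = implied_clock_mhz(SP_TFLOPS, total)
dp_to_sp_ratio = DP_TFLOPS / SP_TFLOPS

print(f"Total stream processors: {total}")
print(f"Implied core clock: {clock:.2f} MHz")
print(f"Double- to single-precision ratio: {dp_to_sp_ratio:.2f}")
```

Under that assumption, the quoted numbers are internally consistent: two 1920-processor chips give the 3840 total, the double-precision rating is one quarter of the single-precision one, and the implied core clock works out to roughly 781MHz. The clock figure is an inference from the leaked ratings, not a specification from the slide.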

If it were released today, Antilles would become the top-performing graphics card in the world. Nvidia, which currently holds the single-GPU performance crown with its GeForce GTX 580, is rumored to be delaying its dual-GPU GTX 590 to make further improvements so that it can once again beat AMD's offering. Antilles is slated to ship in the first quarter of next year, though pricing has yet to be announced.

https://www.techspot.com/news/41295-leaked-ati-radeon-hd-6990-specifications.html