Although our news team covered AMD's latest APUs last week, we're a bit behind on the review. The chipmaker couldn't deliver our Kaveri kit until a few days after the official launch date, so we have a case of better late than never. On the bright side, without a hard deadline, we had more time to hit the chip with our full array of benchmarks.

As the successor to last year's Richland APUs, Kaveri has been updated with new CPU cores based on AMD's Steamroller architecture (Richland uses Piledriver cores). It also integrates a Radeon R7 series GPU, though the 384 SPU version found on some Kaveri APUs isn't much different from the Radeon HD 8670D in the A10-6700 and A10-6800K.

Kaveri is AMD's fourth-generation APU, while Steamroller is the third generation of AMD's Bulldozer-family CPU cores and is supposedly 10% faster per clock, per core, than Piledriver. Alongside the new cores, Kaveri moves from GlobalFoundries' 32nm High-K Metal Gate SOI process to the foundry's bulk 28nm SHP (Super High Performance) process.

However, despite the move from 32nm to 28nm, Kaveri's 245mm² die is actually ~4% larger than Richland's 236mm² part, though the transistor count has increased 85%, from 1.3 billion to a whopping 2.41 billion.
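The growth figures above can be checked with simple arithmetic. This Python sketch uses only the die sizes and transistor counts quoted in this review; as an extra step, it also derives transistor density, which rises by less than the raw transistor count because the die grew slightly. The dictionary and function names are illustrative.

```python
# Die sizes (mm^2) and transistor counts (billions) as quoted in this review.
RICHLAND = {"die_mm2": 236, "transistors_bn": 1.3}
KAVERI = {"die_mm2": 245, "transistors_bn": 2.41}


def pct_change(old: float, new: float) -> float:
    """Percentage change going from old to new."""
    return (new - old) / old * 100


def density_m_per_mm2(chip: dict) -> float:
    """Transistor density in millions of transistors per mm^2."""
    return chip["transistors_bn"] * 1000 / chip["die_mm2"]


die_growth = pct_change(RICHLAND["die_mm2"], KAVERI["die_mm2"])
count_growth = pct_change(RICHLAND["transistors_bn"], KAVERI["transistors_bn"])
density_growth = pct_change(density_m_per_mm2(RICHLAND), density_m_per_mm2(KAVERI))

print(f"Die area:         +{die_growth:.1f}%")
print(f"Transistor count: +{count_growth:.1f}%")
print(f"Density:          +{density_growth:.1f}%")
```

Running it prints +3.8% for die area, +85.4% for transistor count and +78.6% for density.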

On the GPU side of things, AMD is moving away from the Cayman architecture featured in Richland, which first appeared back in 2010 with the Radeon HD 6900 series. In its place is a GCN-based GPU of the same generation as Hawaii, enabling HSA (Heterogeneous System Architecture) computing.

A new memory controller is also included with Kaveri, supporting DDR3-2400 and hUMA (heterogeneous Uniform Memory Access), which gives the CPU and GPU simultaneous access to the same memory. There's PCI Express 3.0 support as well, providing up to 24 lanes for better CrossFire performance.

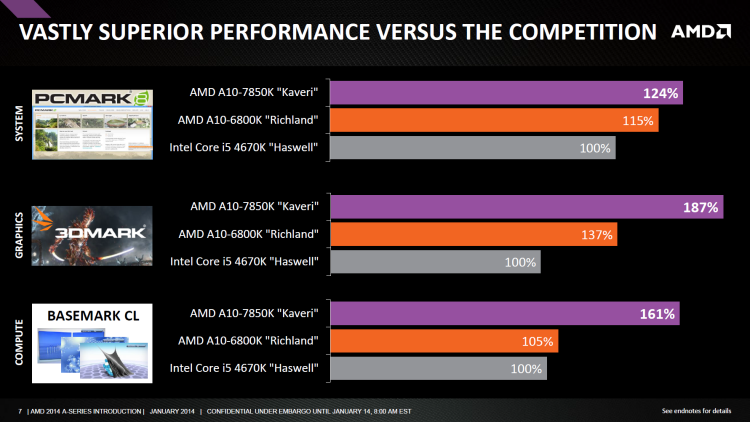

AMD really is focused on gaming performance with Kaveri and believes this is where its latest APUs have a serious advantage over the competition. The company's latest processors are being pushed as budget solutions for modern 1080p gaming, though on paper the Radeon R7 doesn't look quite up to the task...

Kaveri in Detail

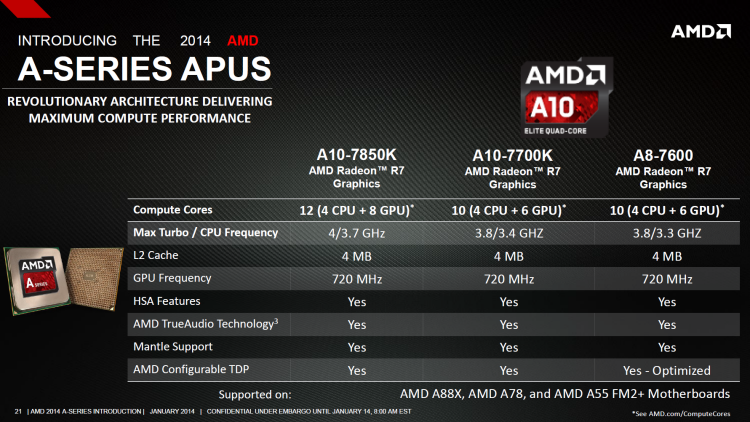

Four Kaveri-based APUs are available: the A8-7600 (the model we're testing today) along with the A10-7700K, A10-7800 and A10-7850K. Unfortunately, AMD not only slipped up on the timing of our delivery, it also forgot to include the flagship model. Nonetheless, the A8-7600 is probably the best value of the bunch, so it's a good starting point.

The flagship A10-7850K is a quad-core part that operates at 3.7GHz with a 4.0GHz turbo frequency and has a 95W TDP. Confusingly, there are two versions of the R7, and both are simply called the R7. The A10-7850K gets the full version, with eight GCN compute units (64 stream cores each) for a total of 512 SPUs.

The A10-7800 is the only other APU with the full R7; this 65W TDP part operates at 3.5GHz with a turbo frequency of 3.9GHz.

The A10-7700K is a 3.4/3.8GHz quad-core part, but its version of the R7 features only six GCN compute units, which cuts the number of SPUs to 384.

The same reduced version of the R7 is also found in the A8-7600, which can be configured for a TDP of either 45W or 65W. The 45W setting calls for a base clock speed of just 3.1GHz with a turbo frequency of 3.3GHz, while the 65W preset essentially overclocks the chip, allowing for a base clock of 3.3GHz with a turbo clock of 3.8GHz.
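Because each GCN compute unit carries 64 stream cores, the SPU count of every Kaveri model follows directly from its enabled compute units. A minimal Python sketch, with the per-model compute unit counts taken from the specs above; the names `COMPUTE_UNITS` and `spu_count` are illustrative, not from any AMD tool.

```python
# Stream cores (SPUs) per GCN compute unit, as stated in this review.
SPUS_PER_CU = 64

# Enabled GCN compute units per Kaveri model, per the specs above.
COMPUTE_UNITS = {
    "A10-7850K": 8,
    "A10-7800": 8,
    "A10-7700K": 6,
    "A8-7600": 6,
}


def spu_count(compute_units: int) -> int:
    """Total stream processors for a given number of GCN compute units."""
    return compute_units * SPUS_PER_CU


for model, cus in COMPUTE_UNITS.items():
    print(f"{model}: {cus} CUs x {SPUS_PER_CU} = {spu_count(cus)} SPUs")
```

The two 8-CU parts come out at 512 SPUs and the two 6-CU parts at 384, matching the full and reduced R7 described above.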

Both variants of the R7 run at 720MHz, considerably slower than the 844MHz that we saw from Richland's 8670D and 8570D GPUs.
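To put "considerably slower" in numbers, 720MHz sits about 14.7% below 844MHz. The sketch below also multiplies SPU count by clock speed as a crude proxy for peak shader throughput. That proxy is our own assumption for illustration: it ignores the architectural differences between Richland's Cayman-based GPU and Kaveri's GCN design, so it is not a performance prediction.

```python
# GPU clocks (MHz) and SPU counts as quoted in this review.
KAVERI_R7_MHZ = 720
RICHLAND_8670D_MHZ = 844

GPUS = {
    "R7 (512 SPUs, Kaveri)": (512, KAVERI_R7_MHZ),
    "R7 (384 SPUs, Kaveri)": (384, KAVERI_R7_MHZ),
    "HD 8670D (384 SPUs, Richland)": (384, RICHLAND_8670D_MHZ),
}


def clock_deficit_pct(slower_mhz: float, faster_mhz: float) -> float:
    """How far slower_mhz sits below faster_mhz, as a percentage."""
    return (faster_mhz - slower_mhz) / faster_mhz * 100


def spu_mhz(spus: int, mhz: int) -> int:
    """Crude throughput proxy (an assumption): SPU count times clock speed."""
    return spus * mhz


baseline = spu_mhz(*GPUS["HD 8670D (384 SPUs, Richland)"])
print(f"Clock deficit: {clock_deficit_pct(KAVERI_R7_MHZ, RICHLAND_8670D_MHZ):.1f}%")
for name, (spus, mhz) in GPUS.items():
    ratio = spu_mhz(spus, mhz) / baseline
    print(f"{name}: {ratio:.2f}x the HD 8670D proxy")
```

By this rough measure the 384 SPU R7 lands at about 0.85x the HD 8670D, and only the full 512 SPU R7 edges ahead at about 1.14x, which is in line with the observation that the R7 doesn't look quite up to 1080p gaming on paper.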

All models also support DDR3-2133 memory and use the new Socket FM2+ platform with PCIe 3.0 support. Since Kaveri brings some pretty significant changes, it's unsurprising that a socket update was in order.

The new socket is supported by the A88X chipset, though because FM2+ is backward compatible with FM2, the older A55, A75 and A85X chipsets will also work, provided they are paired with an FM2+ socket. The FM2+ socket adds two pins, which means Kaveri APUs won't fit in FM2 boards. While backward compatibility is nice, FM2 owners would obviously prefer things the other way around so they could drop a new Kaveri APU into an older system as an upgrade.
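The socket rules above boil down to a one-way check: FM2 APUs such as Richland fit FM2+ boards, but FM2+ APUs such as Kaveri don't fit FM2 boards. A minimal sketch, assuming a simple lookup-table encoding of this paragraph; the tables and function names are hypothetical, and this is not an official AMD compatibility list.

```python
# Hypothetical encoding of the socket rules described in this review.
# For each APU socket type, the board sockets it physically fits into.
APU_FITS = {
    "FM2": {"FM2", "FM2+"},  # FM2 APUs such as Richland also fit FM2+ boards
    "FM2+": {"FM2+"},        # Kaveri's two extra pins rule out FM2 boards
}

# Chipsets the review lists as usable alongside an FM2+ socket.
FM2_PLUS_CHIPSETS = {"A88X", "A85X", "A75", "A55"}


def apu_fits_board(apu_socket: str, board_socket: str) -> bool:
    """Return True if an APU made for apu_socket fits a board with board_socket."""
    return board_socket in APU_FITS.get(apu_socket, set())


def fm2_plus_chipset_ok(chipset: str) -> bool:
    """Return True if the review lists chipset as usable with an FM2+ socket."""
    return chipset in FM2_PLUS_CHIPSETS


print(apu_fits_board("FM2", "FM2+"))  # older APU in a newer board
print(apu_fits_board("FM2+", "FM2"))  # Kaveri in an older FM2 board
print(fm2_plus_chipset_ok("A75"))
```

Keeping the rules in a table rather than in nested conditionals makes the one-way nature of the compatibility explicit: upgrading the board works, upgrading only the APU on an FM2 board does not.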