Through the looking glass: When AI image generators first emerged, misinformation immediately became a major concern. Although repeated exposure to AI-generated imagery can build some resistance, a recent Microsoft study suggests that certain types of real and fake images can still deceive almost anyone.

The study found that humans can accurately distinguish real photos from AI-generated ones about 63% of the time. In contrast, Microsoft's in-development AI detection tool reportedly achieves a 95% success rate.

To explore this further, Microsoft created an online quiz (realornotquiz.com) featuring 15 randomly selected images from stock photo libraries and various AI models. The study analyzed 287,000 image evaluations from 12,500 participants around the world.

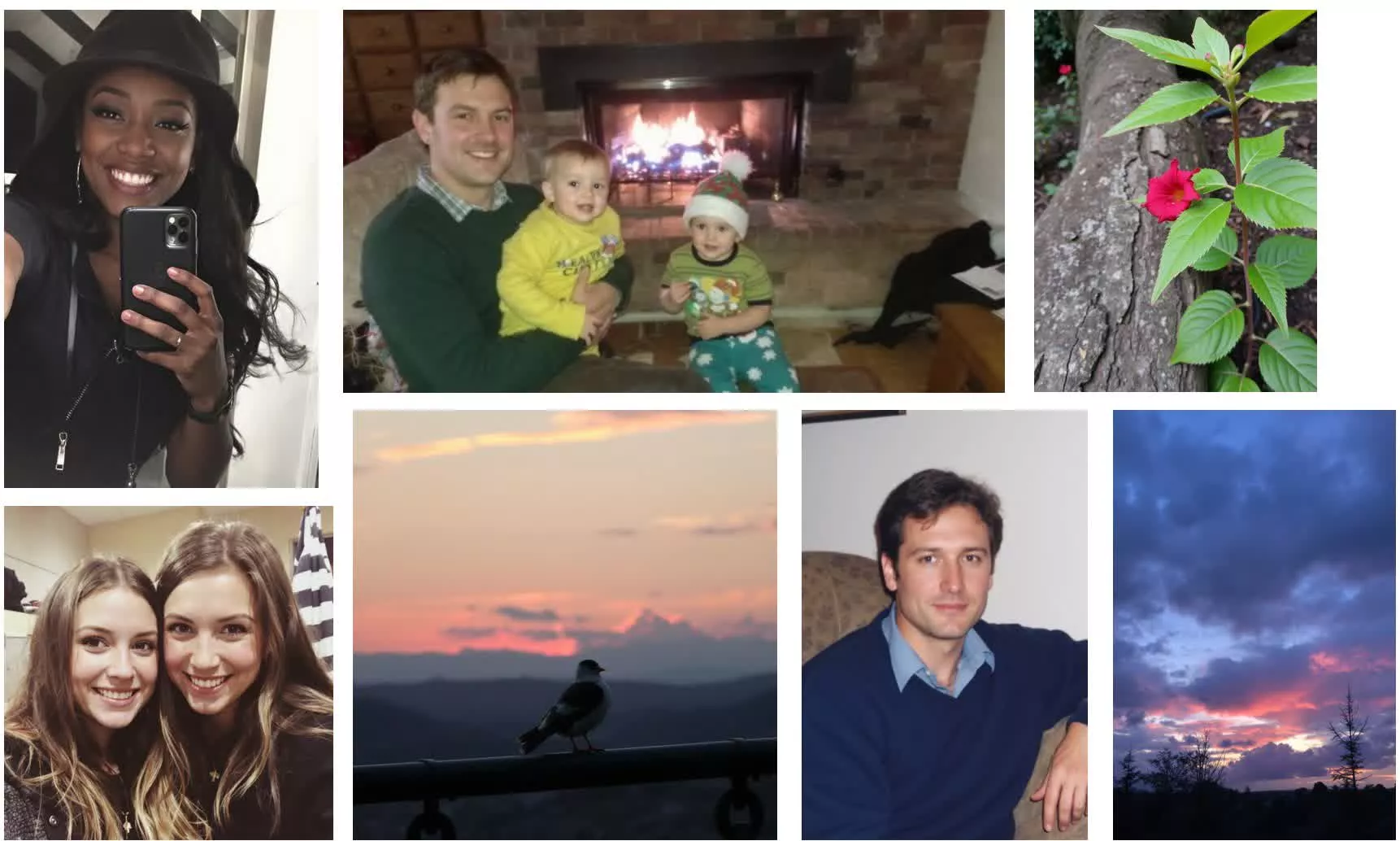

Participants were most successful at identifying AI-generated images of people, with a 65% accuracy rate. However, the most convincing fake images were GAN deepfakes that showed only facial profiles or used inpainting to insert AI-generated elements into real photos.

Despite being one of the oldest forms of AI-generated imagery, GAN (generative adversarial network) deepfakes still fooled about 55% of viewers. This is partly because they contain fewer of the details that image generators typically struggle to replicate. Ironically, their resemblance to low-quality photographs often makes them more believable.

Researchers believe that the increasing popularity of image generators has made viewers more familiar with the overly smooth aesthetic these tools often produce. Prompting the AI to mimic authentic photography can help reduce this effect.

Some users found that including generic image file names in prompts produced more realistic results. Even so, most of these images still resemble polished, studio-quality photos, which can seem out of place in casual or candid contexts. In contrast, a few examples from Microsoft's study show that Flux Pro can replicate amateur photography, producing images that look like they were taken with a typical smartphone camera.

Participants were slightly less successful at identifying AI-generated images of natural or urban landscapes that did not include people. For instance, the two fake images with the lowest identification rates (21% and 23%) were generated using prompts that incorporated real photographs to guide the composition. The most convincing AI images also maintained levels of noise, brightness, and entropy similar to those found in real photos.
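
For readers curious what statistics like brightness, entropy, and noise look like in practice, here is a minimal Python sketch. It is not the study's method: the article does not say how Microsoft measured these properties, so the function names, the histogram-based entropy, and the neighbor-difference noise proxy are all illustrative assumptions.

```python
import math
from collections import Counter


def mean_brightness(pixels):
    """Average intensity of 8-bit grayscale pixels, scaled to the range 0..1."""
    return sum(pixels) / (255 * len(pixels))


def shannon_entropy(pixels):
    """Shannon entropy, in bits per pixel, of the intensity histogram."""
    counts = Counter(pixels)
    total = len(pixels)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def noise_estimate(rows):
    """Crude noise proxy (an assumption, not a standard metric):
    mean absolute difference between horizontally adjacent pixels."""
    diffs = [abs(a - b) for row in rows for a, b in zip(row, row[1:])]
    return sum(diffs) / len(diffs)


# Toy 2x4 grayscale "image": left half black, right half white.
image = [
    [0, 0, 255, 255],
    [0, 0, 255, 255],
]
flat = [p for row in image for p in row]

brightness = mean_brightness(flat)
entropy = shannon_entropy(flat)
noise = noise_estimate(image)
```

Comparing such values between a generated image and a set of real photos is one plausible way to check whether the generated image falls within a typical range, though a real analysis would use far more robust measures.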

Surprisingly, the three images with the lowest identification rates overall (12%, 14%, and 18%) were actually real photographs that participants mistakenly identified as fake. All three showed the US military in unusual settings with uncommon lighting, colors, and shutter speeds.

Microsoft notes that understanding which prompts are most likely to fool viewers could make future misinformation even more persuasive. The company highlights the study as a reminder of the importance of clear labeling for AI-generated images.