Gameplay Footage & Conclusion

The performance seen in many of those games wasn't great. The quality settings weren't tuned to suit the GTX 580; these are simply the settings we use to test modern low-end GPUs. Those results were recorded on our Core i7-7700K test system clocked at 4.9GHz, though the CPU used isn't really a big deal, as none of those GPUs will be limited by any modern quad-core processor.

That said, I will be using more appropriate quality presets in the following gameplay footage, and I've also downgraded to the Core i3-8100 with DDR4-2400 memory, albeit 16GB of it.

Let's see what the GTX 580 can do...

Here's a look at how Battlefield 1 runs using the medium quality preset at 1080p. After about five minutes of gameplay we averaged around 60fps with dips as low as 45fps. As you'd expect, the 1.5GB VRAM buffer was maxed out the entire time and we were eating into system memory, but not too badly. Frame dips and stuttering will likely be much worse with a mechanical hard drive, so please note that for testing I was using a Crucial MX500 SATA SSD. Still, overall the game looked good and played surprisingly well.

Call of Duty WWII had to be turned down to the normal quality settings and here performance was quite good. The average frame rate at 1080p sat in the mid-70s and we never dipped below 60fps, so not bad at all and certainly playable. Please note that VRAM usage is not displayed in some of these tests; I assume this was a glitch with RivaTuner.

Assassin's Creed Origins had to be dialed down to its 'very low' preset and here we saw a number of graphical anomalies. Frame rates were also terrible at 1080p: we saw just 24fps on average with dips down to 18fps, which is technically playable, but not by my standards, and many of you would surely agree.

Dirt 4 still looks nice with the medium quality preset and it played superbly, never dropping below 60fps. Like Call of Duty WWII, frame rates often hovered above 70fps. VRAM usage is quite low in this game, though again RivaTuner unfortunately isn't displaying that information.

Towards the end of my test I swapped to the high quality preset which improved visuals noticeably, but as you'd expect, that did reduce frame rates quite a bit. However, an average of 53fps with dips to 48fps was still playable.

F1 2017 also ran quite nicely at 1080p using the medium quality preset. Here the GTX 580 averaged 56fps and only dropped down to 46fps, so it was playable and actually very enjoyable.

Fortnite was also playable using the medium quality settings. I saw an average of almost 70fps with a dip down to 53fps. Of course, this isn't a particularly demanding game and VRAM usage only peaked at just over 1.1GB, so RAM usage was quite low.

Hellblade played reasonably well. Here the GTX 580 averaged almost 60fps over a five-minute test and only dropped down to 51fps, so again, very playable under these conditions.

Mass Effect also ran decently with the medium quality preset enabled and my test run saw an average of 55fps with a minimum of 45fps.

Overwatch is the kind of game that runs really well on anything and I was able to enjoy over 60fps using the high quality settings. At 1080p, my 10-minute test saw an average of 85fps for what was a smooth and enjoyable experience.

Prey is a well-optimized title but it does love to use plenty of VRAM and this caused some issues for the GTX 580. In the graphs seen previously, we saw a 1% low result of 39fps, and here we see 42fps as the minimum, which is a reasonable drop from the 56fps seen on average. Still, the game was playable and frame rates really weren't the issue here. Instead, it was the lack of textures that spoiled the experience.
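For readers wondering how an average, a 1% low and a minimum can all differ for the same run, here is a rough Python sketch. It assumes you have a list of per-frame render times in milliseconds from a capture tool, and it uses one common definition of the 1% low (the frame rate implied by the slowest 1% of frames). The function name and the sample data are hypothetical, and this is not necessarily how the figures in this article were produced.

```python
def summarize_frame_times(frame_times_ms):
    """Return (average_fps, one_percent_low_fps, minimum_fps) for one capture.

    frame_times_ms: per-frame render times in milliseconds (assumed input format).
    """
    if not frame_times_ms:
        raise ValueError("need at least one frame time")

    # Average fps = frames rendered divided by total elapsed seconds.
    total_seconds = sum(frame_times_ms) / 1000.0
    average_fps = len(frame_times_ms) / total_seconds

    # Sort so the slowest (longest) frames come first.
    slowest_first = sorted(frame_times_ms, reverse=True)

    # 1% low (one common definition): fps implied by the mean frame time
    # of the slowest 1% of frames, using at least one frame.
    count = max(1, len(slowest_first) // 100)
    worst = slowest_first[:count]
    one_percent_low_fps = 1000.0 / (sum(worst) / len(worst))

    # Minimum fps: fps implied by the single slowest frame.
    minimum_fps = 1000.0 / slowest_first[0]

    return average_fps, one_percent_low_fps, minimum_fps


# Hypothetical capture: 99 frames at 10 ms and one 20 ms hitch.
sample = [10.0] * 99 + [20.0]
average, low, minimum = summarize_frame_times(sample)
print(round(average, 1), low, minimum)
```

A single hitch barely moves the average but shows up immediately in the low and minimum figures, which is why the numbers are reported separately.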

The PUBG test also ran for 10 minutes and in that time I saw almost 70fps on average with dips as low as 44fps. Overall, the experience was certainly playable but I was forced down to the lowest possible quality settings to achieve this performance.

Finally, for testing World of Tanks I used the built-in benchmark with the medium quality option, which renders at 1080p. Here, the GTX 580 averaged 142fps by the end of the test and never dipped below 82fps, so very playable performance in this title.

Closing Thoughts

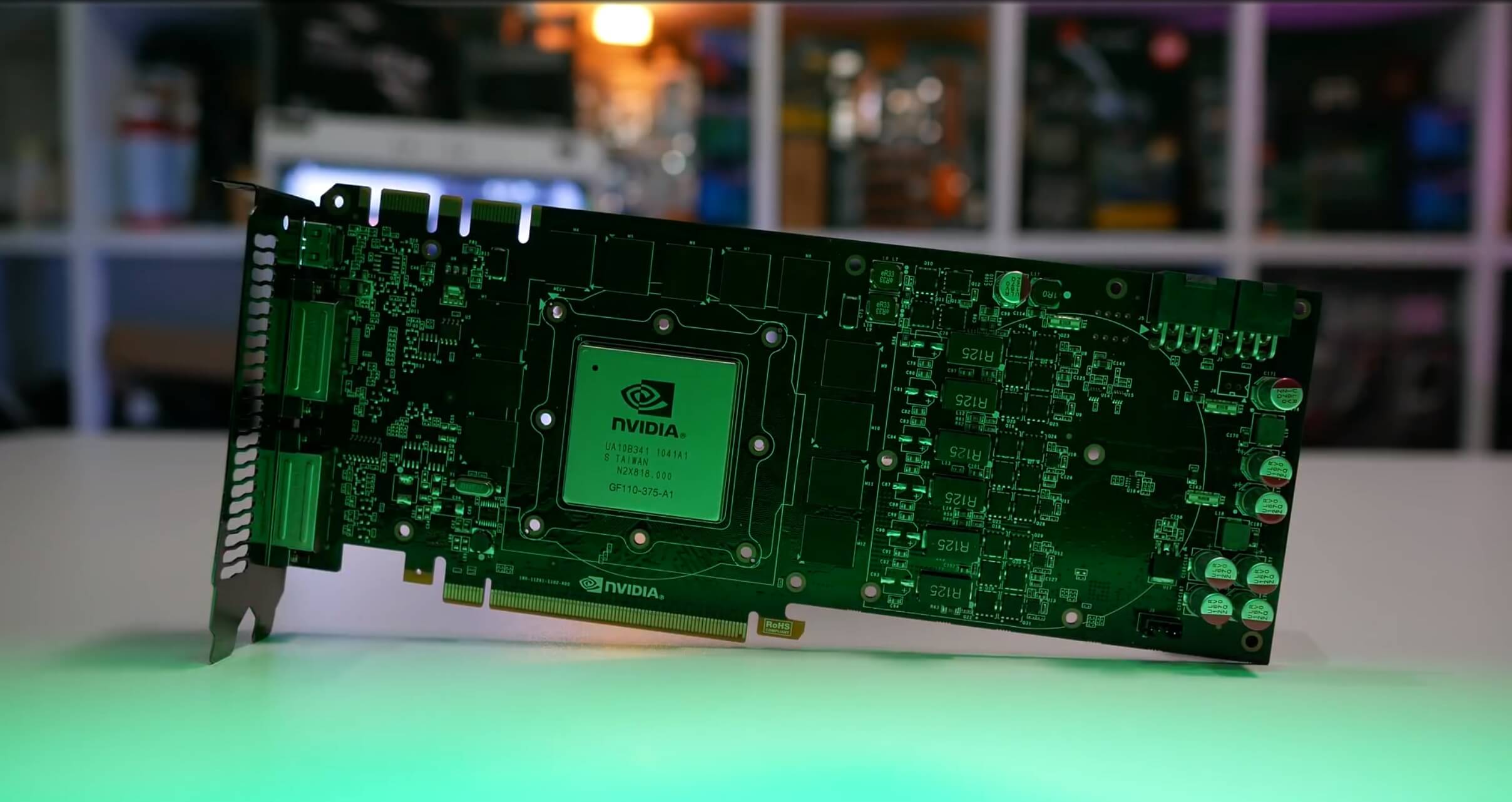

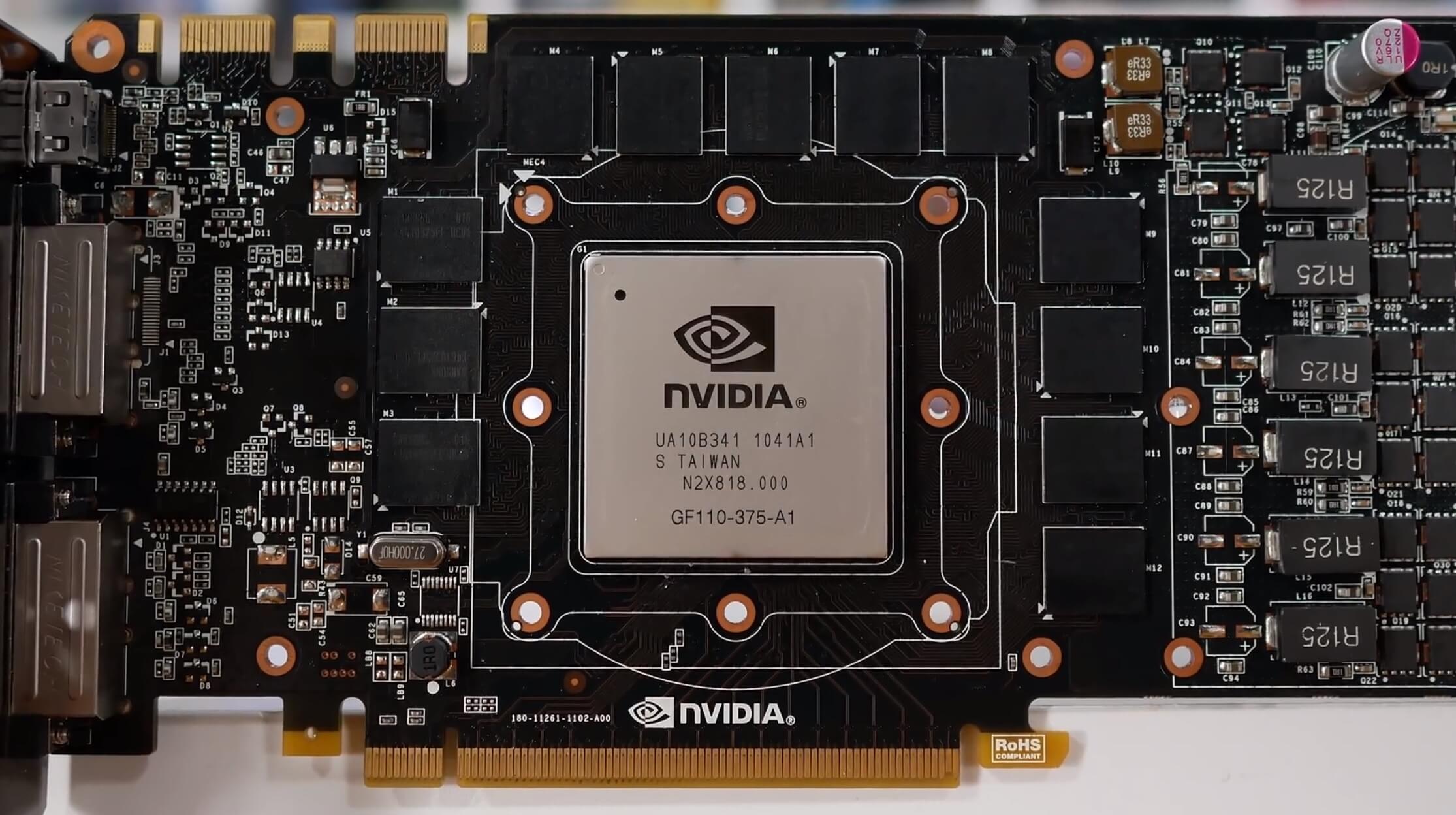

Well, there you have it: eight years later the GeForce GTX 580 can still kind of, almost, play all the latest titles. In fact, with the exception of Assassin's Creed Origins, I was able to achieve smooth, playable performance in every title using low to medium quality settings.

For bargain shoppers, is the GTX 580 worth buying? Well, right now you can expect to pay anywhere from $30 to $60. I got one for $40. Considering the performance you get for that price, the GTX 580 seems like a great option, but it's a better value at $30, seeing as ~$70 buys a GTX 680, which is a much better card.

The big issue with the GTX 580, other than its limited 1.5GB frame buffer, is of course power consumption. It doubled the draw of our Core i3-8100 system, so personally, for that reason alone, I would avoid the GTX 580 and look for something a little more fuel efficient.