Every year hundreds of new games are released to the market. Some do very well and sell in the millions, but that alone does not guarantee ascending to icon status. Every once in a while though, a game is made that becomes part of the industry's history, and we still talk about it or play it years after it first appeared.

For PC gamers, there's one title that's almost legendary thanks to its incredible, ahead-of-its-time graphics and ability to grind PCs into single digit frame rates and, later on, for providing a decade-plus worth of memes. Join us as we take a look back at Crysis and see what made it so special.

Crytek's Early Days

Before we dig into the guts and glory of Crysis, it's worth a quick trip back in time, to see how Crytek laid down its roots. Based in Coburg, Germany, the software development company formed in the fall of 1999, with 3 brothers – Avni, Cevat, and Faruk Yerli – teaming up under Cevat's leadership, to create PC game demos.

A key early project was X-Isle: Dinosaur Island. The team promoted this and other demos at 1999's E3 in Los Angeles. Nvidia was one of the many companies that the Yerli brothers pitched their software to, and it was especially keen on X-Isle. And for good reason: the graphics were astonishing for the time. Huge draw distances, coupled with beautiful lighting and realistic surfaces, made it an absolute stunner.

To Nvidia, Crytek's demo was the perfect tool with which to promote their future GeForce range of graphics cards and a deal was put together – when the GeForce 3 came out in February 2001, Nvidia used X-Isle to showcase the abilities of the card.

With the success of X-Isle and some much-needed income, Crytek could turn their attention to making a full game. Their first project, called Engalus, was promoted throughout 2000, but the team eventually abandoned it. The reasons aren't overly clear, but with the exposure generated by the Nvidia deal, it wasn't long before Crytek picked up another big name contract: French game company Ubisoft.

The goal was to create a full, AAA title out of X-Isle. The end result was the 2004 release Far Cry.

It's not hard to see where the technological aspects of X-Isle found a home in Far Cry. Once again, we were treated to huge landscapes, replete with lush vegetation, all rendered with cutting edge graphics. While not a hit with the critics (it was a TechSpot favorite nonetheless), Far Cry sold pretty well, and Ubisoft saw lots of potential in the brand and the game engine that Crytek had put together.

Another contract was drawn up, under which Ubisoft would retain full rights to the Far Cry intellectual property and permanently license Crytek's software, CryEngine. Over the years, this would ultimately morph into their own Dunia engine, powering games such as Assassin's Creed II and the next three Far Cry titles.

By 2006, Crytek had come under the wings of Electronic Arts, and moved offices to Frankfurt. Development of CryEngine continued and their next game project was announced: Crysis. From the very beginning, Crytek wanted this to be a technology tour-de-force, working with Nvidia and Microsoft to push the boundaries of what DirectX 9 hardware could achieve. It would also eventually be used to promote the feature set of DirectX 10, but this came about relatively late in the project's development.

2007: A Golden Year for Games

Crysis was scheduled for release towards the end of 2007 and what a year that turned out to be for gaming, especially in terms of graphics. You could say The Elder Scrolls IV: Oblivion started the ball rolling the year prior, setting a benchmark for how an open world 3D game should look (except for character models) and what levels of interaction could be achieved.

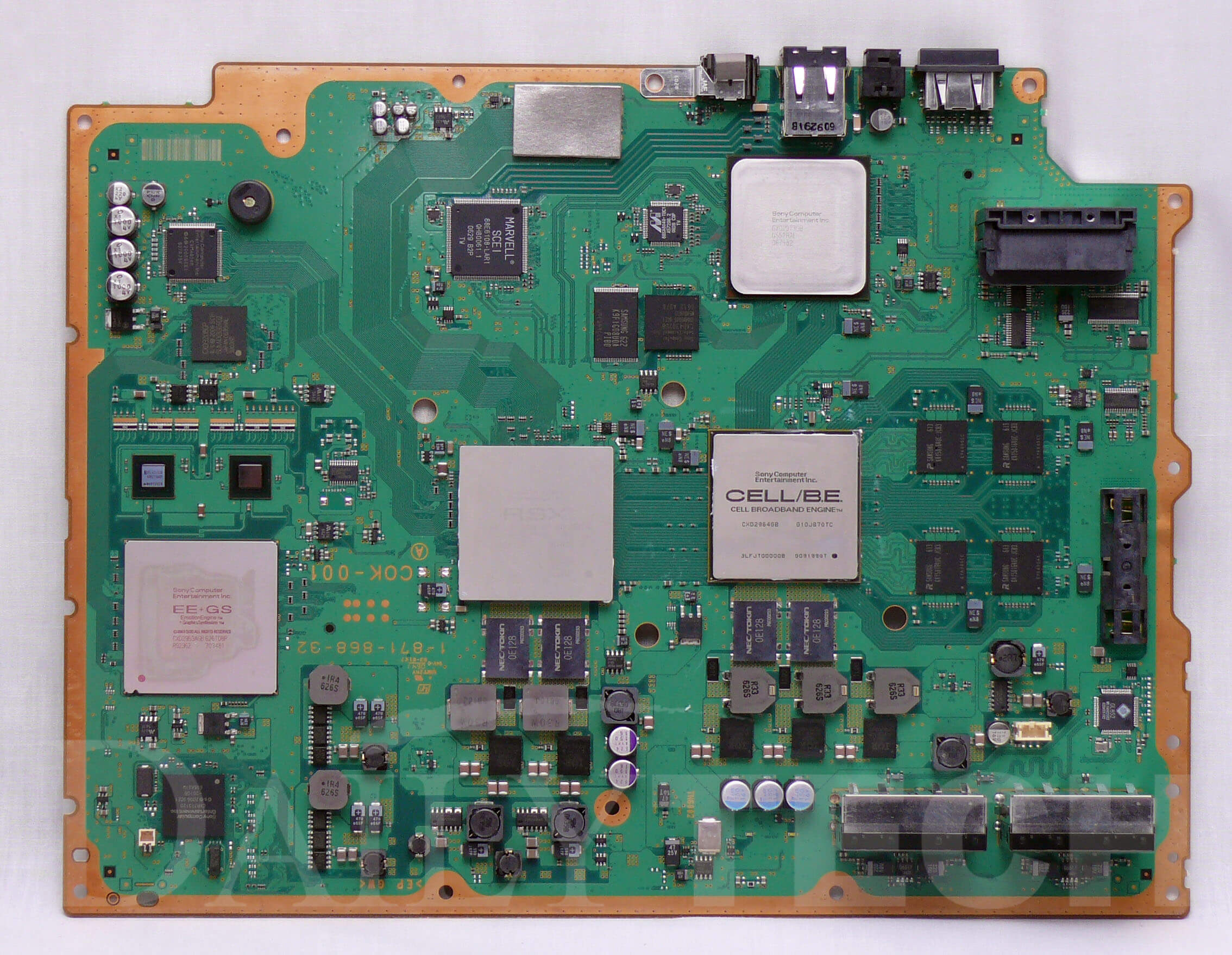

For all its visual splendor, Oblivion showed signs of limitations when developing for multiple platforms, namely PCs and consoles of the time. The Xbox 360 and PlayStation 3 were complex machines, and in terms of architectural structures, they were quite different to Windows systems. The former, from Microsoft, had a CPU and GPU unique to that console: a triple core, in-order-execution PowerPC chip from IBM and an ATI graphics chip that boasted a unified shader layout.

Sony's machine sported a fairly regular Nvidia GPU (a modified GeForce 7800 GTX), but the CPU was anything but standard. Jointly developed by Toshiba, IBM, and Sony, the Cell processor also had a PowerPC core, but it packed in 8 vector co-processors as well.

With each console having its own distinct benefits and quirks, creating a game that would work well on them and a PC was a significant challenge. The majority of developers generally aimed for a lowest common denominator, and this was typically set by the relatively low amount of system and video memory available, as well as the outright graphics processing capabilities of the systems.

The Xbox 360 had just 512 MB of GDDR3 shared memory and an additional 10 MB of embedded DRAM for the graphics chip, whereas the PS3 had 256 MB of XDR DRAM system memory and 256 MB of GDDR3 for the Nvidia GPU. However, by the middle of 2007, top end PCs could be equipped with 2 GB of DDR2 system RAM and 768 MB of GDDR3 on the graphics side.

Where the console versions of the game had fixed graphics settings, the PC edition allowed for a wealth of things to be changed. With every setting at its maximum value, even some of the best computer hardware at the time struggled with the workload. This is because the game was designed to run best at the supported resolutions offered by the Xbox 360 and PS3 (such as 720p or scaled 1080p) at 30 frames per second, so even though PCs had potentially better components, running with more detailed graphics and higher resolutions significantly increased the workload.

The first aspect of this particular 3D game to be limited, due to the memory footprint of consoles, was the size of textures, so lower resolution versions were used wherever possible. The number of polygons used to create the environment and models was kept relatively low as well.

Looking at another Oblivion scene using wireframe mode (no textures) shows trees made of several hundred triangles, at most. The leaves and grass are just simple textures, with transparent regions to give the impression that each blade of foliage is a discrete item.

Character models and static objects, such as buildings and rocks, were made out of more polygons, but overall, more attention was paid to how scenes were designed and lit, than to sheer geometry and texture complexity. The art direction of the game was not aimed at realism – instead, Oblivion was set in a cartoon-like fantasy world, so the lack of rendering complexity can be somewhat forgiven.

That said, a similar situation can also be observed in one of the biggest game releases of 2007: Call of Duty 4: Modern Warfare. This game, set in the modern, real world, was developed by Infinity Ward and published by Activision, using an engine that was originally based off id Tech 3 (Quake III Arena). The developers adapted and heavily customized it, until it was able to render graphics featuring all of the buzzwords of that time: bloom, HDR, self-shadowing, dynamic lighting and shadow mapping.

But as we can see in the image above, textures and polygon counts were kept relatively low, again due to the limitations of the consoles of that era. Objects that are persistent in the field of view (e.g. the weapon held by the player) are well detailed, but the static environment was quite basic.

All of this was artistically designed to be as realistic-looking as possible, so a judicious use of lights and shadows, along with clever particle effects and smooth animations, cast a magician's wand over the scenes. But as with Oblivion, Modern Warfare was still very demanding on PCs with all details set to their highest values – having a multi-core CPU made a big difference.

Another multi-platform 2007 hit was BioShock. Set in an underwater city, in an alternate reality 1960, the game's visual design was as key as the storytelling and character development. The software used for this title was a customized version of Epic's Unreal Engine, and like the other titles we've mentioned so far, BioShock ran the full gamut of graphics technology.

That's not to say corners weren't cut where possible – look at the character's hand in the above image and you can easily see the polygon edges used in the model. The gradient of the lighting across the skin is fairly abrupt, too, which is an indication that the polygon count is fairly low.

And like Oblivion and Modern Warfare, the multi-platform nature of the game meant texture resolution wasn't super high. A diligent use of lights and shadows, particle effects for the water, and exquisite art design, all pushed the graphics quality to a high standard. The same approach was repeated across other games made for PCs and consoles alike: Mass Effect, Half-Life 2: Episode Two, Soldier of Fortune: Payback, and Assassin's Creed all handled graphics in a similar way.

Crysis, on the other hand, was conceived to be a PC-only title and had the potential to be free of any hardware or software limitations of the consoles. Crytek had already shown they knew how to push the boundaries for graphics and performance in Far Cry... but if PCs were finding it difficult to run the latest games developed with consoles in mind, at their highest settings, would things be any better for Crysis?

Everything and the Kitchen Sink

During SIGGRAPH 2007, the annual graphics technology conference, Crytek published a document detailing the research and development they had put into CryEngine 2 – the successor to the software used to make Far Cry. In the piece, they set out several goals that the engine had to achieve:

- Cinematographic quality rendering

- Dynamic lighting and shadowing

- HDR rendering

- Support for multi-CPU and GPU systems

- A 21 km x 21 km game play area

- Dynamic and destructible environments

With Crysis, the first title to be developed on CryEngine 2, all but one were achieved, with the gameplay area scaled down to a mere 4 km x 4 km. That may still sound large, but Oblivion had a game world that was 2.5 times larger in area. Otherwise, though, just look at what Crytek managed to achieve in a smaller scale.

The game starts on the coastline of a jungle-filled island, located not far from the Philippines. North Korean forces have invaded and a team of American archeologists, conducting research on the island, have called for help. You and a few other gun-toting chums, equipped with special nanosuits (which give you super strength, speed, and invisibility for short periods of time) are sent to investigate.

The first few levels easily demonstrated that Crytek's claims were no hyperbole. The sea is a complex mesh of real 3D waves that fully interacts with objects in it – there are underwater light rays, reflection and refraction, caustics and scattering, and the waves lap up and down the shore. It still holds up to scrutiny today, although it is surprising that, for all the work Crytek put into making the water look so good, you barely get to play in it during the single player game.

You might be looking at the sky and thinking that it looks right. As it should do, because the game engine solves a light scattering equation on the CPU, accounting for time of day, position of the sun, and other atmospheric conditions. The results are stored in a floating point texture, to be used by the GPU to render the skybox.
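
The article doesn't spell out the equation Crytek solved, so the following Python sketch only illustrates the general pattern described here: compute scattering values on the CPU and store them as floating point numbers in a table that a GPU could later sample. It uses two well-known ingredients of Rayleigh scattering (the phase function and the inverse fourth-power dependence on wavelength). The wavelengths, table size, and function names are assumptions made for illustration.

```python
import math

# Approximate wavelengths for red, green, blue in nanometres (illustrative values)
WAVELENGTHS_NM = (680.0, 550.0, 440.0)

def rayleigh_phase(cos_theta):
    """Rayleigh phase function: angular distribution of scattered light."""
    return 3.0 / (16.0 * math.pi) * (1.0 + cos_theta * cos_theta)

def build_sky_table(num_angles=8):
    """Precompute relative scattering per view angle and colour channel as floats."""
    table = []
    for i in range(num_angles):
        theta = math.pi * i / (num_angles - 1)  # angle between view and sun direction
        phase = rayleigh_phase(math.cos(theta))
        # Rayleigh scattering strength falls off with the fourth power of wavelength,
        # so each channel is scaled relative to red
        row = [phase * (WAVELENGTHS_NM[0] / w) ** 4 for w in WAVELENGTHS_NM]
        table.append(row)
    return table

sky_table = build_sky_table()
```

In every row the blue entry is the largest, which is the basic reason a clear daytime sky reads as blue. A real engine would also account for time of day, sun position, and other atmospheric terms, as the text notes.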

The clouds seen in the distance are 'solid', in that they're not just part of the skybox or simple applied textures. Instead they are a collection of small particles, almost like real clouds, and a process called ray-marching is done to work out where the volume of the particles lies within the 3D world. And because the clouds are dynamic objects, they cast shadows onto the rest of the scene (although this isn't visible in this image).
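
To give a rough sense of what ray-marching involves, here is a minimal Python sketch. It is not Crytek's implementation: the density function `cloud_density`, the step count, and the absorption coefficient are all hypothetical choices. The idea is to step along a ray in small increments, sample the density of the volume at each point, and accumulate how much light is absorbed along the way.

```python
import math

def cloud_density(x, y, z):
    """Hypothetical density field: a soft sphere of radius 1 centred at the origin."""
    r = math.sqrt(x * x + y * y + z * z)
    return max(0.0, 1.0 - r)

def march_ray(origin, direction, steps=64, step_size=0.05, absorption=2.0):
    """Step along the ray and return the fraction of light that survives."""
    transmittance = 1.0
    ox, oy, oz = origin
    dx, dy, dz = direction
    for i in range(steps):
        t = (i + 0.5) * step_size  # sample at the middle of each step
        density = cloud_density(ox + dx * t, oy + dy * t, oz + dz * t)
        # Beer-Lambert attenuation over one step
        transmittance *= math.exp(-absorption * density * step_size)
    return transmittance

# One ray passes through the middle of the cloud, the other misses it entirely
through_cloud = march_ray((-1.6, 0.0, 0.0), (1.0, 0.0, 0.0))
past_cloud = march_ray((-1.6, 2.0, 0.0), (1.0, 0.0, 0.0))
```

The ray through the centre loses most of its light, while the one that misses the cloud is unaffected. The cost is easy to see: every ray needs dozens of density samples, which is why volumetric effects were so expensive on 2007 hardware.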

Continuing through the early levels provides more evidence of the capabilities of CryEngine 2. In the above image, we can see a rich, detailed environment – the lighting, indirect and directional, is fully dynamic, with trees and bushes all casting shadows on themselves and other foliage. Branches move as you push through them and the small trunks can be shot to pieces, clearing the view.

And speaking of shooting things to pieces, Crysis let you destroy lots of things – vehicles and buildings could be blown to smithereens. Enemy hiding behind a wall? Forget shooting through the wall, as you could in Modern Warfare, just take out the whole thing.

And as with Oblivion, almost anything could be picked up and thrown about, although it's a shame that Crytek didn't make more of this feature. It would have been really cool to pick up a Jeep and lob it into a group of soldiers – the best you could do was punch it a bit and hope it would move enough to cause some damage. Still, how many games would let you take out somebody with a chicken or a turtle?

Even now, these scenes are highly impressive, but for 2007 they were a colossal leap forward in rendering technology. But for all its innovation, Crysis could be viewed as being somewhat run-of-the-mill, as the epic vistas and balmy locations masked the fact that the gameplay was mostly linear and quite predictable.

For example, back then vehicle-only levels were all the rage, and so unsurprisingly, Crysis followed suit. After making good progress with the island invasion, you're put in command of a nifty tank but it's all over very quickly. And perhaps for the best, because the controls were clunky, and one could get through the level without needing to take part in the battle.

There can't be too many people who haven't played Crysis or don't know about the general plot, but we won't say too much about the specifics of the storyline. However, eventually you end up deep underground, in an unearthly cave system. It's very pretty to look at, but frequently confusing and claustrophobic.

It does provide a good showing of the engine's volumetric light and fog systems, with ray-marching being used for both of them again. It's not perfect (you can see a little too much blurring around the edges of the gun) but the engine handled multiple directional lights with ease.

Once out of the cave system, it's time to head through a frozen section of the island, with intense battles and another standard gameplay feature: an escort mission. For the rendering of the snow and ice, Crytek's developers went through four revisions, cutting it very close to some internal production deadlines.

The end result is very pretty, with snow as a separate layer on top of surfaces and frozen water particles glittering in the light, but the visuals are somewhat less effective than the earlier beach and jungle scenes. Look at the large boulder in the left section of the image below: the texturing isn't so hot.

Eventually, you're back in the jungle and, sadly, yet another vehicle level. This time a small scale dropship (with clear influences from Aliens) needs an emergency pilot – which, naturally, is you (is there anything these troopers can't do?) and once again, the experience is a brief exercise in battling against a cumbersome control system and a frantic firefight that you can avoid taking part in.

Interestingly, when Crytek eventually released a console version of Crysis, some four years later, this entire level was dropped in the port. The heavy use of particle-based cloud systems, complex geometry, and draw distances were perhaps too much for the Xbox 360 and PS3 to handle.

Finally, the game concludes with a very intensive battle onboard an aircraft carrier, with an obligatory it's-not-dead-just-yet end boss fight. Some of the visuals in this portion of the game are very impressive, but the level design doesn't hold up to the earlier stages of the game.

All things considered, Crysis was fun to play but it wasn't an overly original premise – it was very much a spiritual successor to Far Cry, not just in terms of location choice, but also with regards to weapons and plot twists. Even the much-touted nanosuit feature wasn't fully realized, as there was generally little specific need for its functionality. Apart from a couple of places when the strength mode was needed, the default shield setting was sufficient to get through.

It was stunningly beautiful to look at, by far the best looking release of 2007 (and for many years after that), but Modern Warfare arguably had far superior multiplayer, BioShock gave us richer storytelling, and Oblivion and Mass Effect offered depth and lots more scope for replay.

But of course, we know exactly why we're still talking about Crysis 13 years later and it's all down to memes...

All those glorious graphics came at a cost and the hardware requirements, for running at maximum settings, seemed to be beyond any PC around that time. In fact, it seemed to stay that way year after year – new CPUs and GPUs appeared, and all failed to run Crysis like other games.

So while the scenery put a smile on your face, the seemingly permanently low frame rates definitely did not.

Why So Serious?

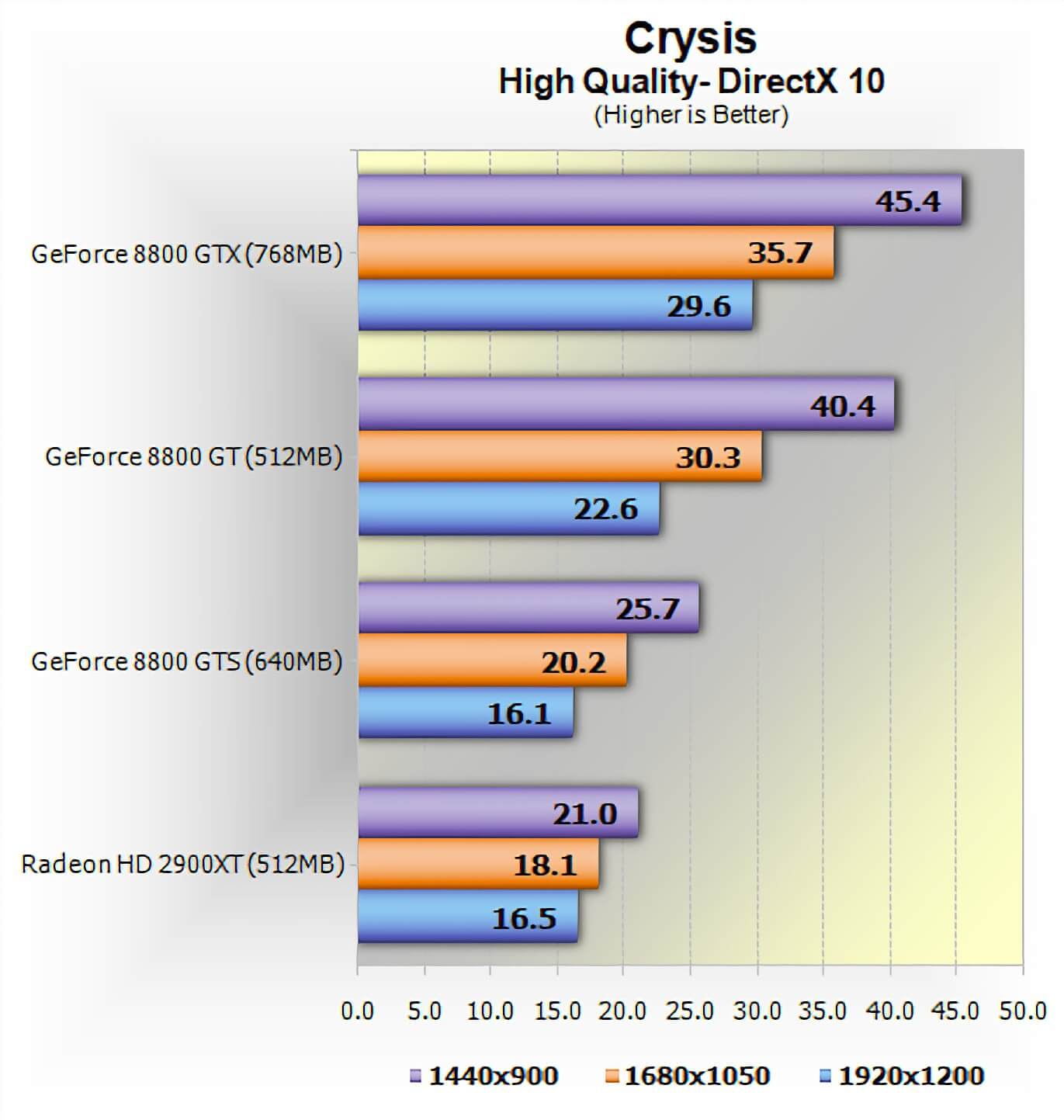

Crysis was released in November 2007, but a one level demo and map editor were made available a month earlier. We were already in the business of benchmarking hardware and PC games, and we tested the performance of that demo (and the 1.1 patch with SLI/Crossfire later on). We found it to be pretty good – well, only when using the very best graphics cards at the time and with medium quality settings. Switching things to high settings completely annihilated mid-range GPUs.

Worse still, the much touted DirectX 10 mode and anti-aliasing did little to inspire confidence in the game's potential. During earlier previews to the press, the less-than-stellar frame rates were already being identified as a potential issue, but Crytek had promised that further refinement and updates would be forthcoming.

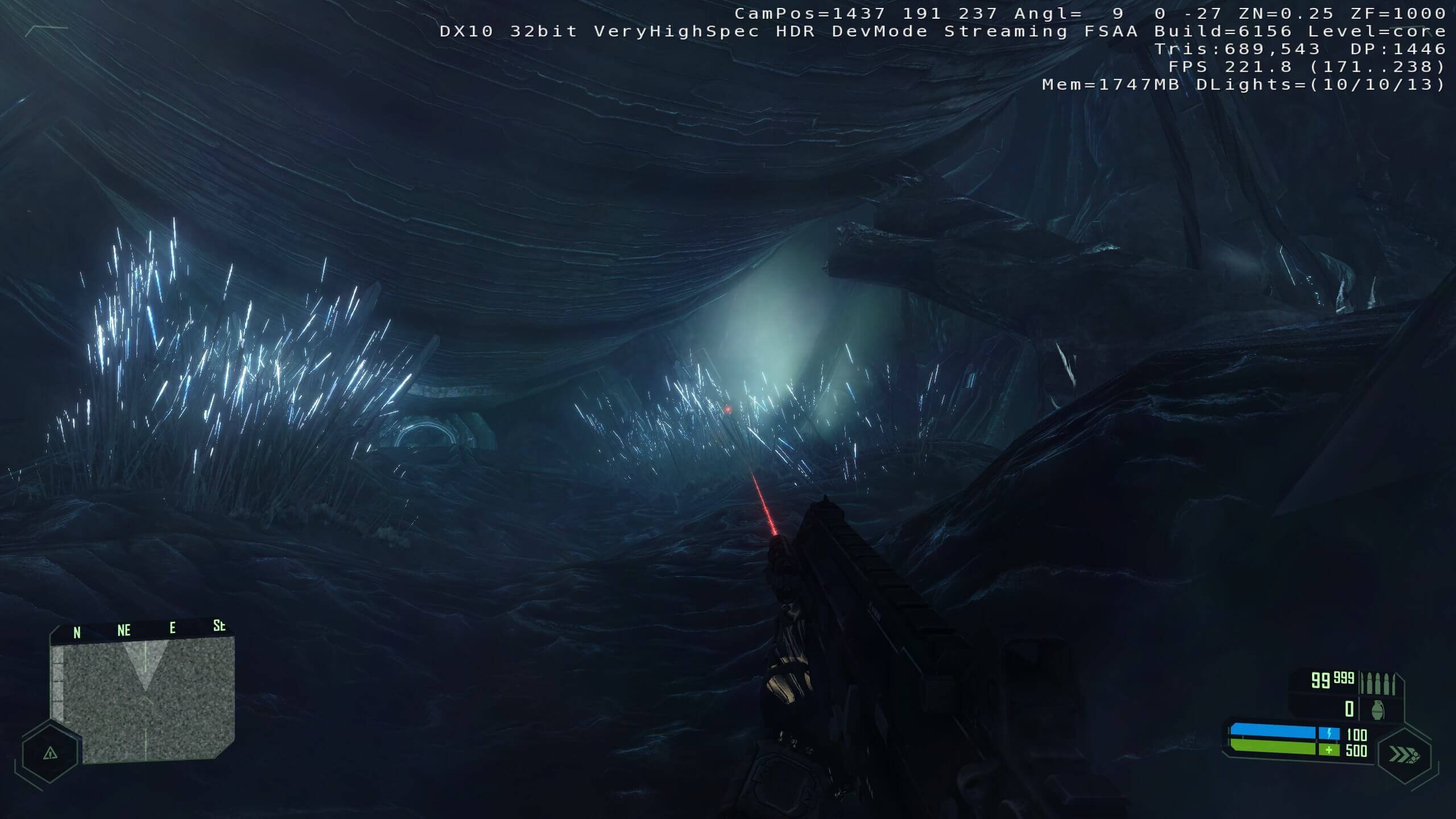

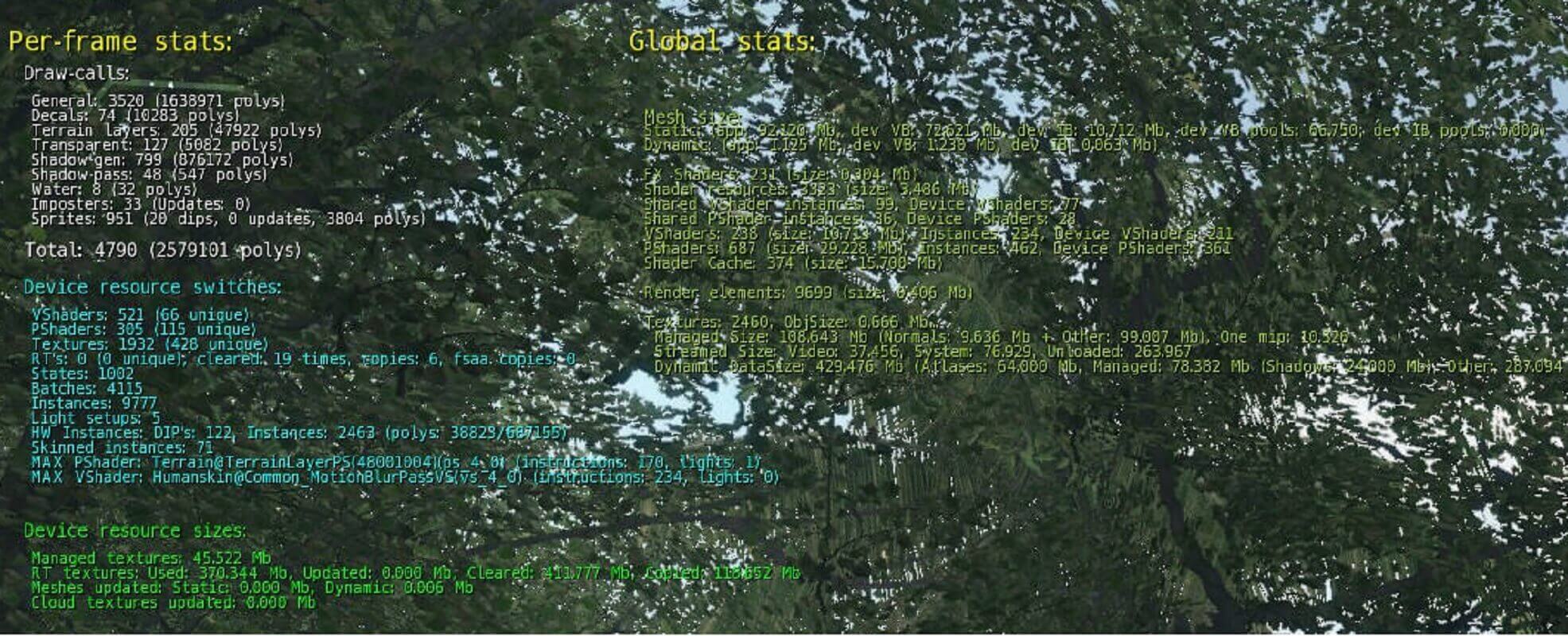

So what exactly was going on to make the game so demanding on hardware? We can get a sense of what's happening behind the scenes by running the title with 'devmode' enabled. This option opens up the console to allow all kinds of tweaks and cheats to be enabled, and it provides an in-game overlay, replete with rendering information.

In the above image, taken relatively early in the game, you can see the average frame rate and the amount of system memory being used. The values labelled Tris, DP, and DLights display the number of triangles per frame, the number of draw calls per frame, and how many directional (and dynamic) lights are being used.

First, let's talk about polygons: more specifically, 2.2 million of them. That's how many were used to create the scene, although not all of them will actually be displayed (non-visible ones are culled early in the rendering process). Most of that count is for the vegetation, because Crytek chose to draw out the individual leaves on the trees, rather than use simple transparent textures.

Even fairly simple environments packed a huge number of polygons (compared to other titles of that time). This image from later in the game doesn't show much, as it's dark and underground, but there are still nearly 700,000 polygons in use here.

Also compare the difference in the number of directional lights: in the outdoor jungle scene, there's just the one (i.e. the Sun) but in this area, there are 10. That might not sound like much, but every dynamic light casts active shadows. Crytek used a mixture of technologies for these, and while all of them were cutting edge (cascaded and variance shadow maps, with randomly jittered sampling, to smooth out the edges), they added a hefty amount to the vertex processing load.
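
As an illustration of one of the techniques named above, the core of variance shadow mapping is a small statistical test. The sketch below shows the standard Chebyshev upper bound in Python. How CryEngine 2 actually combined this with cascades and jittered sampling isn't described here, so the function name, parameters, and the `min_variance` clamp are assumptions for illustration only.

```python
def vsm_light_fraction(mean_depth, mean_depth_sq, receiver_depth, min_variance=1e-5):
    """Upper bound on how much of a filtered shadow-map region is lit.

    mean_depth and mean_depth_sq are the filtered depth and squared depth
    stored in the shadow map; receiver_depth is the surface being shaded.
    """
    if receiver_depth <= mean_depth:
        return 1.0  # surface is in front of the average occluder: fully lit
    # Variance from the two stored moments, clamped to avoid division by zero
    variance = max(mean_depth_sq - mean_depth * mean_depth, min_variance)
    d = receiver_depth - mean_depth
    # One-tailed Chebyshev inequality
    return variance / (variance + d * d)

# Hypothetical region: half the occluder samples at depth 0.2, half at 0.8
mean = (0.2 + 0.8) / 2.0
mean_sq = (0.2 ** 2 + 0.8 ** 2) / 2.0
edge_light = vsm_light_fraction(mean, mean_sq, 0.8)
```

For this region the test reports the surface as about half lit, which is what gives variance shadow maps their soft edges. The trade-off is that the shadow map has to store and filter two values per texel instead of one.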

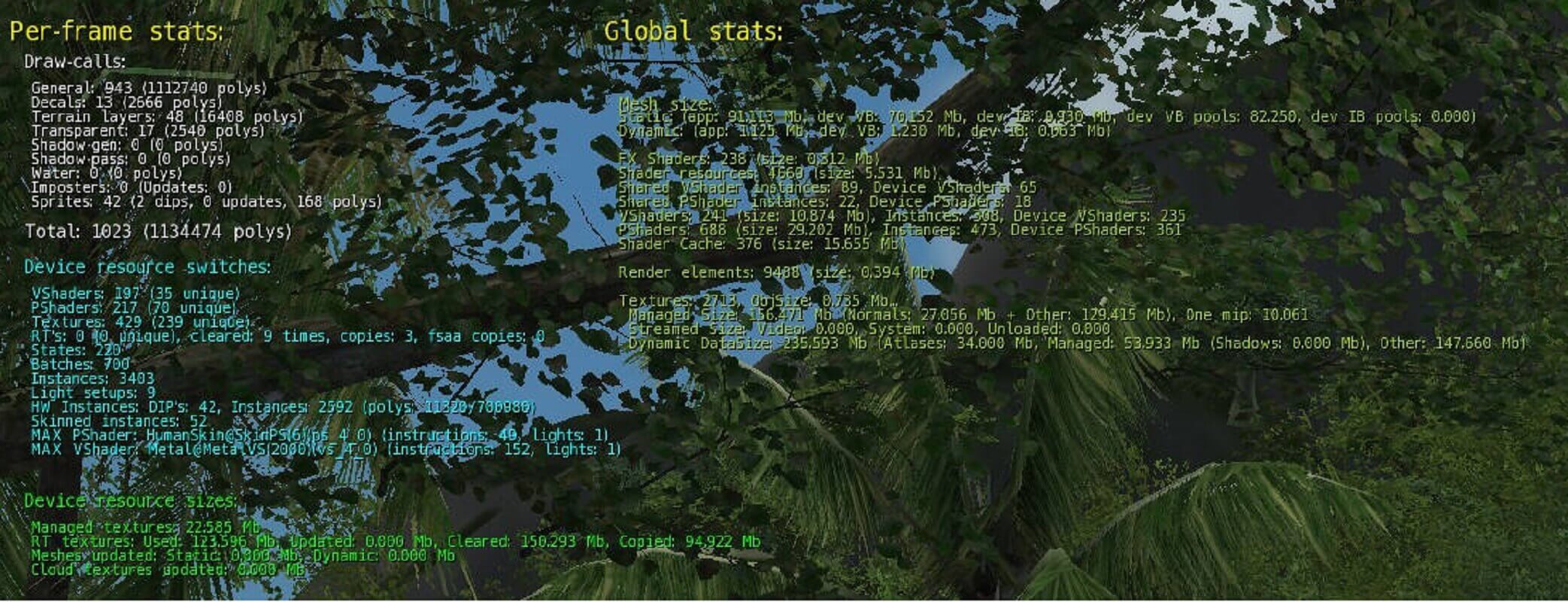

The DP figure tells us how many times a different material or visual effect has been 'called' for the objects in the frame. A useful console command is r_stats – it provides a breakdown of the draw calls, as well as the shaders being used, details on how the geometry is being handled, and the texture load in the scene.

We'll head back into the jungle again and see what's being used to create this image.

We ran the game in 4K resolution, to get the best possible screenshots, so the stats information isn't super clear. Let's zoom in for a closer inspection.

In this scene, over 4,000 batches of polygons were called in, for 2.5 million triangles worth of environment, to apply a total of 1,932 textures (although the majority of these were repeats, for objects such as the trees and ground). The biggest vertex shader was just for setting up the motion blur effect, comprising 234 instructions in total, and the terrain pixel shader was 170 instructions.

For a 2007 game, this many calls was extremely high, as the CPU overhead in DirectX 9 (and 10, although to a lesser extent) for making each call wasn't trivial. Some AAA titles on consoles would soon be packing this many polygons, but their graphics software was far more streamlined than DirectX.

We can get a sense of this issue by using the Batch Size Test in 3DMark06. This DirectX 9 tool draws a series of moving and color changing rectangles across the screen, using an increasing number of triangles per batch. Tested on a GeForce RTX 2080 Super, the results show a marked difference in performance across the various batch sizes.

We can't easily tell how many triangles are being processed per batch, but if the number indicated covers all of the polygons used, then it's just an average of 600 or so triangles per batch, which is far too small. However, the large number of instances (where an object can be used multiple times, for one call) probably counters this issue.
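
The arithmetic behind that estimate, and the reason small batches hurt, can be sketched in a few lines of Python. The triangle and batch counts are the rounded figures quoted for the jungle scene. The per-call cost is a made-up value used purely to show the shape of the problem, not a measured DirectX overhead.

```python
def average_batch_size(triangles, batches):
    """Mean number of triangles submitted per draw call."""
    return triangles / batches

def cpu_bound_fps(draw_calls, cost_per_call_us):
    """Frame rate ceiling if the CPU did nothing but issue draw calls.

    cost_per_call_us is a hypothetical per-call overhead in microseconds,
    not a measured DirectX figure.
    """
    frame_time_ms = draw_calls * cost_per_call_us / 1000.0
    return 1000.0 / frame_time_ms

# Figures quoted for the jungle scene: roughly 2.5 million triangles in 4,000 batches
batch_size = average_batch_size(2_500_000, 4_000)

# Assumed overhead of 10 microseconds per call, purely for illustration
fps_ceiling = cpu_bound_fps(4_000, 10.0)
```

With these inputs the average comes out at 625 triangles per batch, and the assumed per-call cost alone would cap the frame rate at 25 fps before the GPU draws a single pixel. The real overhead varies with driver, CPU, and state changes, but the relationship is the same: more calls per frame means a lower ceiling.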

Some levels in Crysis used even higher numbers of draw calls; the aircraft carrier battle is a good example. In the image below, we can see that while the triangle count is not as high as the jungle scenes, the sheer number of objects to be moved, textured, and individually lit pushes the bottleneck in the rendering performance onto the CPU and graphics API/driver systems.

A common criticism of Crysis was that it was poorly optimized for multicore CPUs. While most top-end gaming PCs sported dual core processors prior to the game being launched, AMD released their quad core Phenom X4 range at the same time and Intel did have some quad core CPUs in early 2007.

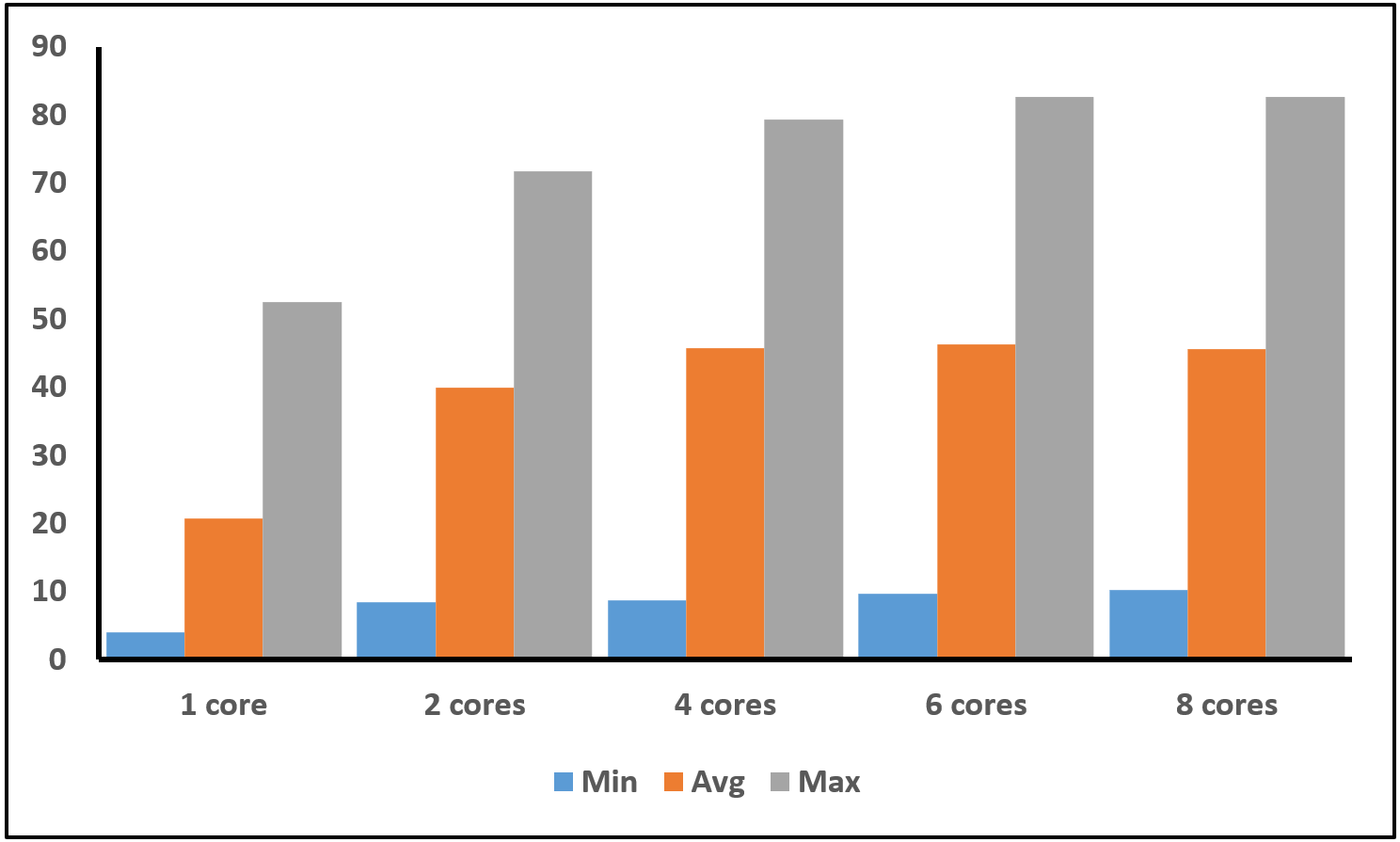

So did Crytek mess this up? We ran the default CPU2 timedemo on a modern 8-core Intel Core i7-9700 CPU, that supports up to 8 threads. At face value, it would seem there is some truth to the accusations, as while the overall performance was not too bad for a 4K resolution test, the frame rate frequently ran below 25 fps and into single figures, at times.

However, running the tests again, but this time limiting the number of available cores in the motherboard BIOS, tells a different side to the story.

There was a clear increase in performance going from 1 to 2 cores, and again from 2 to 4 (albeit a much smaller gain). After that, there was no appreciable difference, but the game certainly made use of the presence of multiple cores. Given that quad core CPUs were going to be the norm for a number of years, Crytek had no real need to try and make use of anything more than that.

In DirectX 9 and 10, the execution of graphics instructions is typically done via one thread (because the performance hit for doing otherwise is enormous). This means that the high number of draw calls seen in many of the scenes results in just one or two cores being seriously loaded up – neither version of the graphics API really allows for multiple threads to be used to queue up everything that needs to be displayed.
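
One simple way to picture why extra cores stop helping is Amdahl's law, which relates speedup to the share of work that has to run on a single thread. The Python sketch below is a simplification and not a model of Crysis itself: the serial fraction of 0.6 is a hypothetical value standing in for work such as single-threaded draw call submission.

```python
def amdahl_speedup(cores, serial_fraction):
    """Theoretical speedup when only the non-serial part scales with core count."""
    return 1.0 / (serial_fraction + (1.0 - serial_fraction) / cores)

# Hypothetical: 60% of each frame's CPU work is stuck on one thread
SERIAL_FRACTION = 0.6
speedups = {cores: amdahl_speedup(cores, SERIAL_FRACTION) for cores in (1, 2, 4, 8)}

# Gain from each doubling of the core count
gain_1_to_2 = speedups[2] - speedups[1]
gain_2_to_4 = speedups[4] - speedups[2]
gain_4_to_8 = speedups[8] - speedups[4]
```

Each doubling of the core count yields a smaller gain than the one before it, and no number of cores can push the speedup past 1 divided by the serial fraction. A game that only spawns a handful of worker threads flattens out even sooner than this idealized curve suggests.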

So it's not really a case that Crysis doesn't properly support multithreading, as it clearly does within the limitations of DirectX; instead, it's the design choices made for the rendering (e.g. accurately modelled trees, dynamic and destructible environments) that are responsible for the performance drops. Remember the image from the game taken in a cave, earlier in this article? Note that the DP figure was under 2,000, then look at the FPS counter.

Beyond the draw call and API issue, what else is going on that's so demanding? Well, everything really. Let's go back to the scene where we first examined the draw calls, but this time with all of the graphics options switched to the lowest settings.

We can see some obvious things from the devmode information, such as the polygon count halving and draw calls cut to almost 25% of the amount when using the very high settings. But notice how there are no shadows at all, nor any ambient occlusion or fancy lighting.

The oddest change, though, is that the field of view has been reduced – this simple trick reduces the amount of geometry and pixels that have to be rendered in the frame, because any that fall out of the view get culled from the process early on. Digging into the stats again shines even more light on the situation.
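
The effect of a narrower field of view on culling can be shown with a toy example in Python. This is a 2D sketch under stated assumptions, not how CryEngine 2 culls geometry: the scene is a hypothetical ring of 360 evenly spaced objects around the camera, and the two field of view values are arbitrary rather than the ones Crysis uses.

```python
import math

def visible_count(points, fov_degrees):
    """Count points inside a horizontal field of view centred on the +x axis.

    The camera sits at the origin; anything outside the view angle is culled.
    """
    half_fov = math.radians(fov_degrees) / 2.0
    count = 0
    for x, y in points:
        angle = math.atan2(y, x)  # signed angle away from the view direction
        if abs(angle) <= half_fov:
            count += 1
    return count

# Hypothetical scene: 360 objects evenly spaced on a circle around the camera
scene = [
    (math.cos(math.radians(a + 0.5)), math.sin(math.radians(a + 0.5)))
    for a in range(360)
]

wide = visible_count(scene, 90.0)
narrow = visible_count(scene, 60.0)
```

Dropping from a 90 degree to a 60 degree view cuts the number of objects that survive culling from 90 to 60 in this toy scene, and every object removed also takes its draw calls, triangles, and pixels with it.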

We can see just how much simpler the shader load is, with the largest pixel shader only having 40 instructions (where previously it was 170). The biggest vertex shader is still quite meaty at 152 instructions, but it's only for the metallic surfaces.

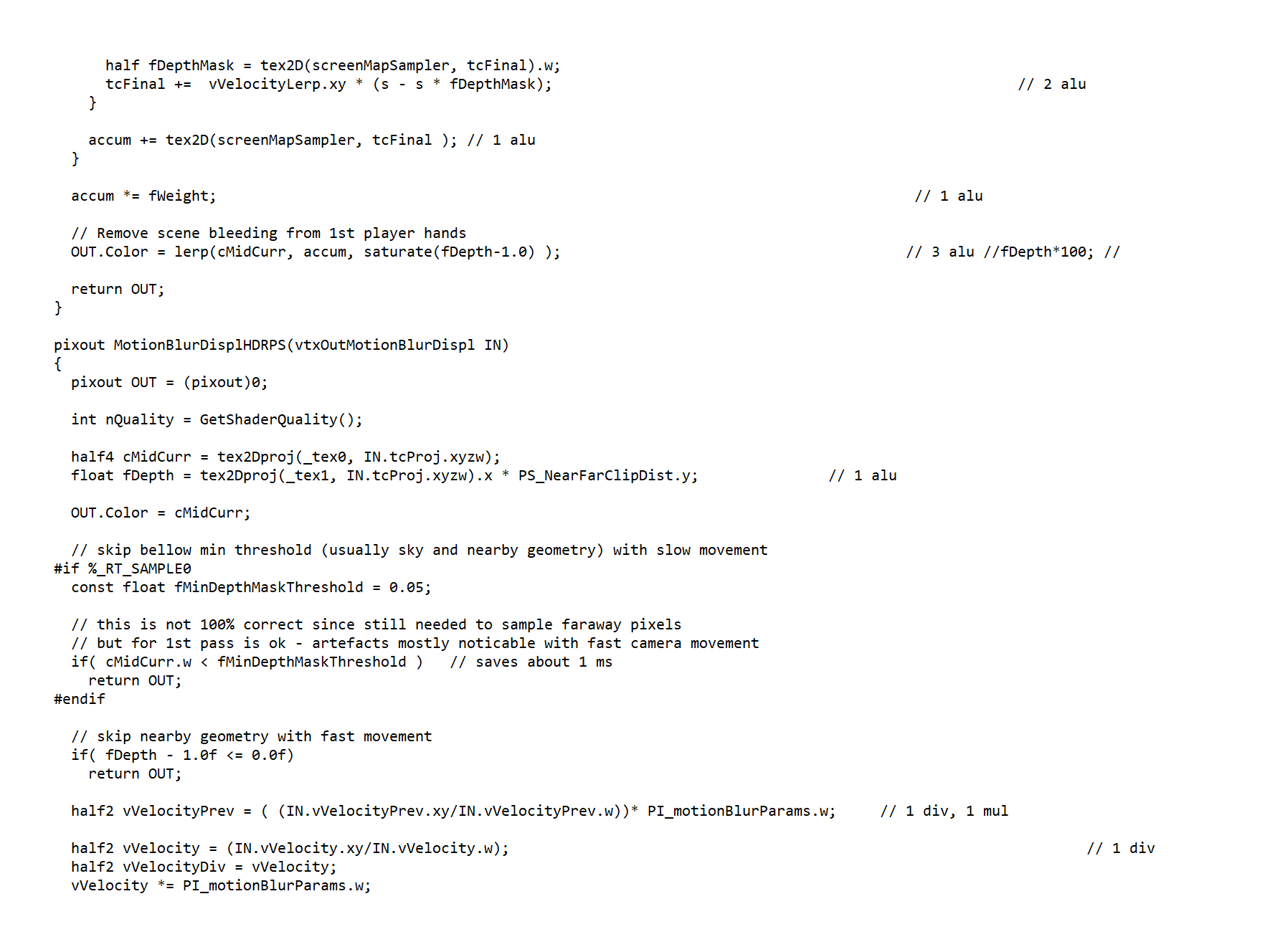

As you increase the graphics settings, the application of shadows hugely increases the total polygon and draw call count, and once into the high and very high quality levels, the overall lighting model switches to HDR (high dynamic range) and fully volumetric; motion blur and depth of field are also heavily used (the former is exceedingly complex, involving multiple passes, big shaders, and lots of sampling).

So does this mean Crytek just slapped in every possible rendering technique they could, without any concern for performance? Is this why Crysis deserves all the jokes? While the developers were targeting the very best CPUs and graphics cards of 2007 as their preferred platform, it would be unfair to claim that the team didn't care how well it ran.

Unpacking the shaders from the game's files reveals a wealth of comments within the code, with many of them indicating hardware costs, in terms of instruction and ALU cycle counts. Other comments refer to how a particular shader is used to improve performance or when to use it in preference to another to gain speed.

Developers don't usually make games in such a way that they're fully supported by all changes in technology over time. As soon as one project has finished, they're on to the next one and it's in future titles that lessons learnt in the past can be applied. For example, the original Mass Effect was quite demanding on hardware, and still runs quite poorly when set to 4K today, but the sequels run fine.

Crysis was made to be the best looking game that could possibly be achieved and still remain playable, bearing in mind that games on consoles routinely ran at 30 fps or less. When it came time to move onto the obligatory successors, Crytek clearly chose to scale things back down and the likes of Crysis 3 run notably better than the first one does – it's another fine looking game, but it doesn't quite have the same wow factor that its forefather did.

Once More Unto the Breach

In April 2020, Crytek announced that they would be remastering Crysis for the PC, Xbox One, PS4, and Switch, targeting a July release date – they missed a trick here, as the original game actually begins in August 2020!

That was rapidly followed by an apology for a launch delay to get the game up to the "standard [we've] come to expect from Crysis games."

It has to be said that the reaction to the trailer and leaked footage was rather mixed, with folks criticizing the lack of visual differences to the original (and in some areas, it was notably worse). Unsurprisingly, the announcement was followed by a raft of comments, echoing the earlier memes – even Nvidia jumped onto the bandwagon with a tweet that simply said "turns on supercomputer."

When the first title made its way onto the Xbox 360 and PS3, there were some improvements, mostly concerning the lighting model, but the texture resolution and object complexity were greatly decreased.

With the remaster targeting multiple platforms all together, it will be interesting to see how Crytek embraces the legacy that they created or if they're willing to take the safe road to keep all and sundry happy. For now, the Crysis from 2007 still lives up to its reputation, and deservedly so. The bar it set for what could be achieved in PC gaming is still aspired to today.
