Today we're taking a look at Far Cry 6 to see how it runs on a variety of PC hardware and GPUs, so expect lots and lots of benchmarks. To be clear, this isn't a review of Far Cry 6, although we have spent several hours playing the game and can confirm it plays like a Far Cry game, but with more interesting gunplay, which is probably what Far Cry fans want.

Graphically, it also looks a lot like other recently released titles in the franchise, think New Dawn and Far Cry 5, but with some visual upgrades. Ubisoft is still using the Dunia 2 game engine introduced way back in 2012 with Far Cry 3, though the engine is meant to be in constant development and the game has certainly evolved visually over the past 9 years.

Now, because Far Cry 6 is an AMD sponsored title, it arrives with support for FSR with four presets to choose from, but for today's benchmark we're going to ignore FSR for a few reasons, chief among them time. Proper FSR testing requires its own dedicated article that not only includes benchmark data, but also detailed image quality analysis, something we simply couldn't do while also benchmarking 30+ current and previous-gen AMD and Nvidia GPUs at three resolutions using two quality presets along with a look at ray tracing.

Speaking of which, Far Cry 6 supports ray tracing at launch, with the ability to enable DXR reflections and DXR shadows. However, because DXR shadows are kind of pointless and not worth the performance hit, we haven't tested with them enabled. I have taken a look at DXR reflections, though, and we'll cover that shortly using supported hardware.

Far Cry 6 includes a built-in benchmark which appears to accurately represent in-game performance, but we've opted for manual benchmark runs, which is how we generally prefer to test. We will refer to the benchmark tool for a few direct visual quality comparisons, though. We've also tested using the HD texture pack, which, according to the settings menu, raises the VRAM requirement at ultra from 5.2 GB to 6.2 GB.

For testing we're using our Ryzen 9 5950X GPU test system, which features 32GB of dual-rank, dual-channel DDR4-3200 CL14 memory. For the GeForce cards we're using Game Ready driver 472.12, and for the Radeons the Adrenalin 21.10.1 early access driver.

Benchmarks

Ultra Quality Performance

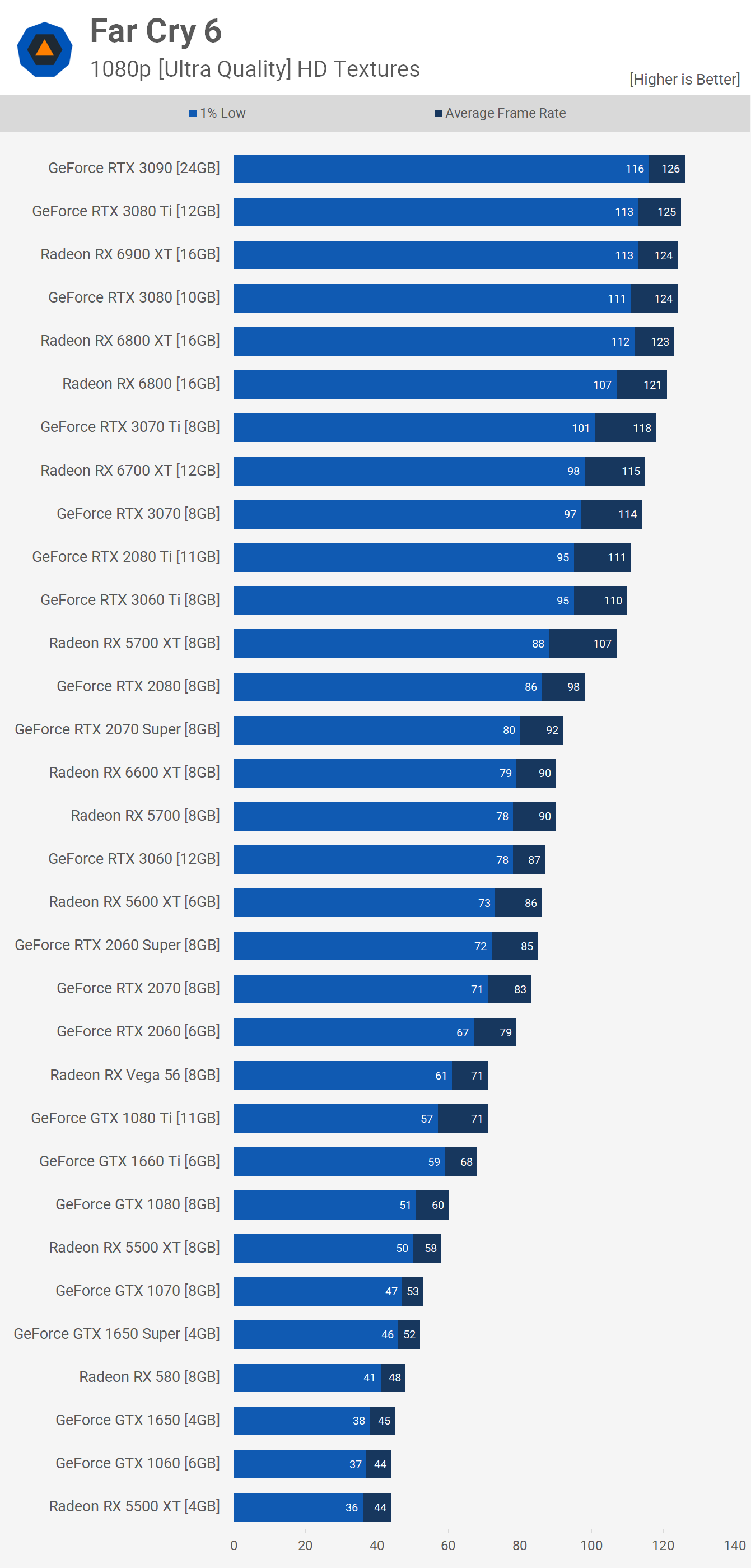

Starting at the top of the 1080p ultra results, we unsurprisingly find the RTX 3090, which is either CPU or game engine limited, peaking at just 126 fps. You'll get the same performance from the RTX 3080 Ti, the 6900 XT and the standard RTX 3080.

Even the 6800 XT and 6800 are right there, so this isn't a game where you can easily drive really high frame rates for super fast panels. That said, 100+ fps is probably more than enough for a Far Cry-style game, at least for most players.

Although this is an AMD sponsored title, and AMD did provide an early access driver, I'd say overall performance looks quite neutral, at least at 1080p on the high-end segment. The RX 6700 and RTX 3070 are evenly matched and Nvidia's Turing-based GPUs are where you'd expect them to be with the RTX 2080 Ti matching the 3060 Ti.

The 5700 XT performed exceptionally well, beating the RTX 2080, which made it 16% faster than the 2070 Super. That's uncharacteristically fast for the old RDNA GPU, but we have seen it do well in other AMD sponsored titles.

Interestingly, the RX 6600 XT, which typically mimics 5700 XT performance, was quite a bit slower, rendering just 90 fps on average, which was only enough to match the vanilla RX 5700. It was able to match the RTX 3060, though, which seems to be its primary competitor at the moment.
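The "X% faster" and "Y% slower" figures in this article are relative differences between average frame rates. As a minimal sketch, assuming the straightforward ratio definition (our assumption about how such percentages are usually derived), here is that arithmetic applied to the 1080p ultra figures quoted above for the RTX 3090 (126 fps) and RX 6600 XT (90 fps). The function names are hypothetical helpers for illustration only.

```python
def percent_faster(fps_a: float, fps_b: float) -> float:
    """How much faster card A is than card B, as a percentage of B's frame rate."""
    if fps_b <= 0:
        raise ValueError("fps_b must be positive")
    return (fps_a / fps_b - 1.0) * 100.0


def percent_slower(fps_a: float, fps_b: float) -> float:
    """How much slower card A is than card B, as a percentage of B's frame rate."""
    if fps_b <= 0:
        raise ValueError("fps_b must be positive")
    return (1.0 - fps_a / fps_b) * 100.0


# 1080p ultra averages quoted in this article.
rtx_3090 = 126.0
rx_6600_xt = 90.0

print(f"RTX 3090 vs RX 6600 XT: {percent_faster(rtx_3090, rx_6600_xt):.0f}% faster")
print(f"RX 6600 XT vs RTX 3090: {percent_slower(rx_6600_xt, rtx_3090):.0f}% slower")
```

Note the asymmetry: the same gap reads as the 3090 being about 40% faster, or the 6600 XT being about 29% slower, depending on which card is the baseline.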

The 5600 XT performs well in Far Cry 6, too, matching the RTX 3060 and 2060 Super, which isn't something we often see. Even Vega does exceptionally well here, with Vega 56 matching the GTX 1080 Ti... a bit of a "what the..." moment when I saw those results. Pascal in general performs poorly in Far Cry 6, at least with the current driver, so that might change. Case in point: the 8GB 5500 XT was able to roughly match the GTX 1080, though the 4GB 5500 XT was a lot less impressive, presumably because it ran out of VRAM.

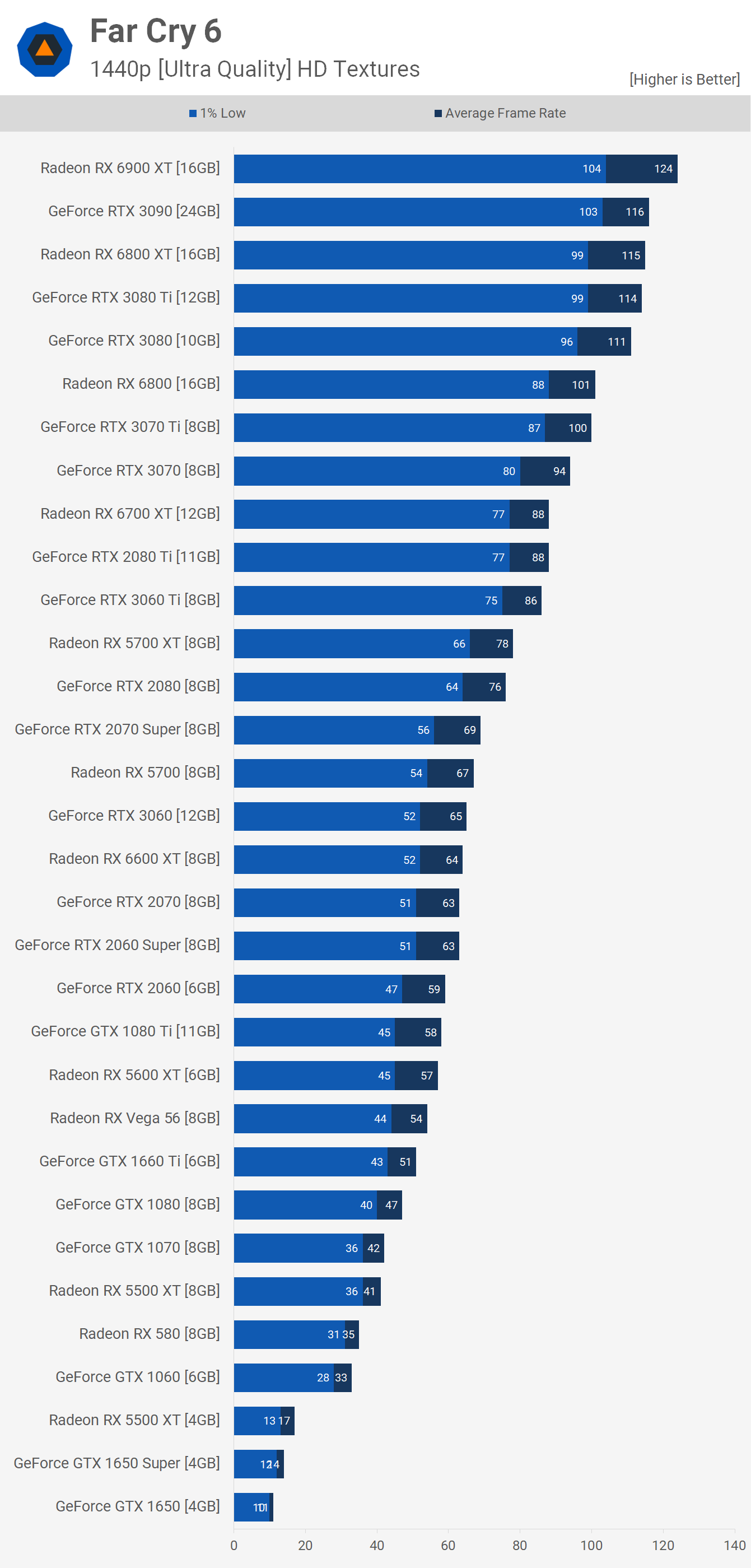

Moving up to 1440p provides a few unexpected results, though the margins remain incredibly small. The 6900 XT overtakes the RTX 3090, whereas you'd normally expect the margin to widen in Ampere's favor as the resolution increases. We also see the 6800 XT nudge ahead of both the RTX 3080 Ti and 3080, albeit marginally.

The RX 6800 also matched the RTX 3070 Ti, while the RX 6700 was able to match the previous-gen flagship GeForce part, the RTX 2080 Ti. Again, the 5700 XT was seen punching above its weight, edging out the RTX 2080, which made it 13% faster than the 2070 Super. Meanwhile, the RX 5700 matched the RTX 3060.

Again, though, the 6600 XT's more limited memory bandwidth appears to be hurting it: the new mid-range Radeon GPU managed just 64 fps on average at 1440p, only enough to match the RTX 3060, RTX 2070 and 2060 Super.

Then we have the 5600 XT, which, relative to the Turing GPUs, is right where you'd expect it to be, trailing the 2060 Super by a 10% margin. However, it's Pascal that really struggles in this title. Typically, you'd expect to find the GTX 1080 shadowing the 2060 Super by less than a 10% margin, but here it was 25% slower.

The Radeon 5500 XT 8GB was also able to match the GTX 1070, though the RX 580 is about where you'd expect it to be – on par with the GTX 1060. Elsewhere, any GPU with a 4GB VRAM buffer can't handle these quality settings at 1440p.

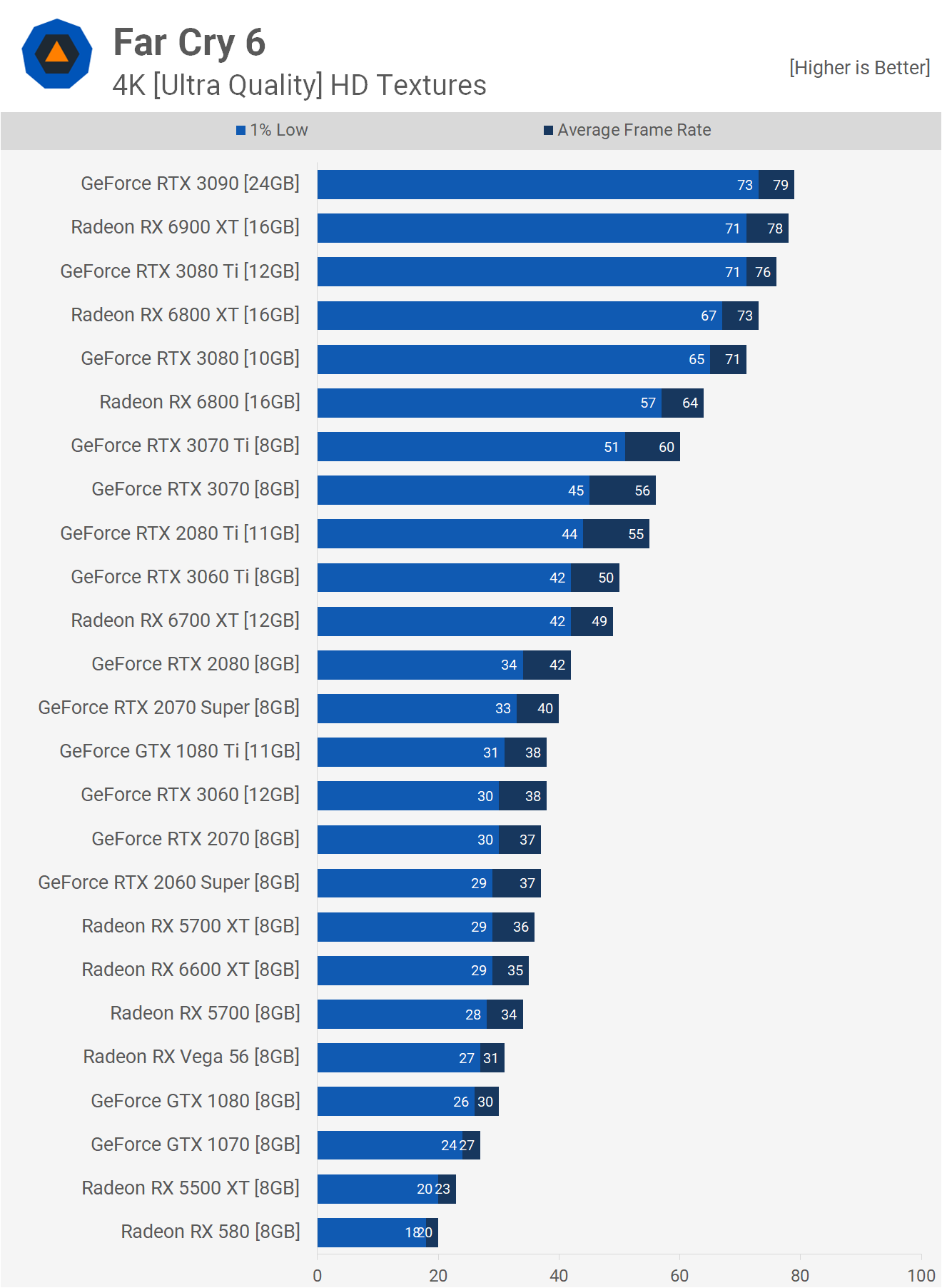

4K is where you'll find gamers seeking the most visually impressive Far Cry 6 experience short of enabling ray tracing, and for that you'll need some pretty serious rendering hardware. The RTX 3090 was back on top here with a 79 fps average, which is great for an open world game of this quality.

In our opinion, Far Cry 6 looks better than Assassin's Creed Valhalla, yet the RTX 3090 runs 34% faster in Far Cry 6 based on our test data for both games. The margin is similar when comparing against Watch Dogs Legion performance.

The 6900 XT also performed well, essentially matching the RTX 3090, while the 6800 XT slotted in between the 3080 Ti and 3080. Below that, frame rates head down towards 60 fps with the RX 6800 and 3070 Ti, while parts like the 3060 Ti and RX 6700 sit around 50 fps. That's still okay and certainly playable, but with anything slower I'd rather drop the resolution to 1440p and trade some visual quality for higher frame rates.

Medium Quality Performance

As noted before, we're using HD textures for both the ultra and medium quality tests, so image quality is pretty similar. The difference in terrain quality is small, as it is for water. The biggest difference is shadow quality, though even that isn't always noticeable, while volumetric fog does look better on ultra.

We think it's fair to say most of you will be just as happy using the medium quality preset, but let's move on to see how much extra performance it offers...

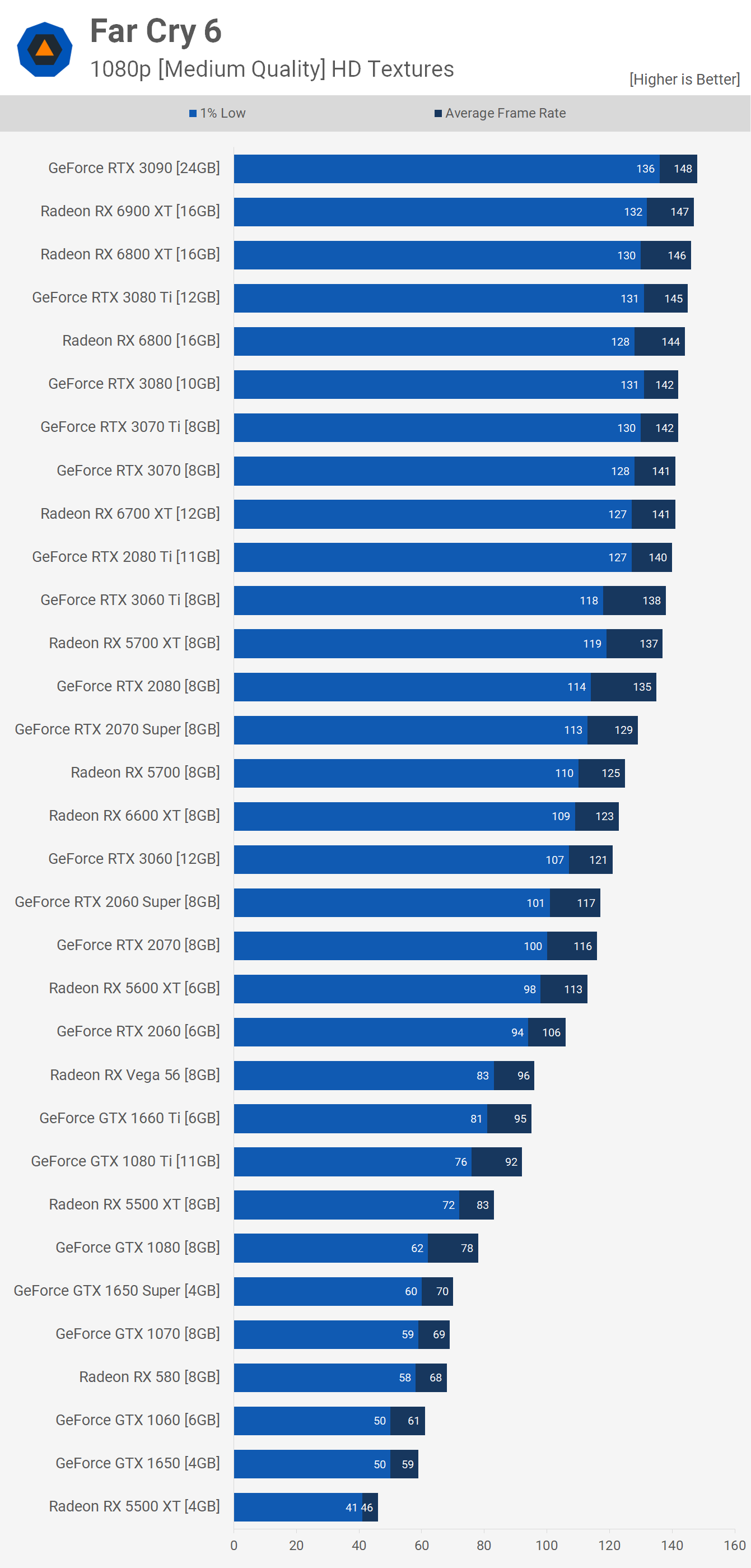

As expected, we're seeing an increase in performance at 1080p using the medium quality preset, even with the flagship GPUs. Lower quality settings can reduce CPU load and even alleviate game engine bottlenecks, so it's not entirely clear which limitation has been eased to raise the performance ceiling by roughly 15%, but we can tell we're still not GPU limited, since the Radeon 6900 XT and 6800 XT deliver the same level of performance.
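The reasoning above, that two GPUs of clearly different strength delivering the same frame rate means the GPU isn't the limiting factor, can be expressed as a simple check. This is a hypothetical heuristic of our own, not a tool used to produce these results: the `looks_cpu_limited` name and the 3% tolerance are assumptions, and the frame rates in the example are made up for illustration.

```python
def looks_cpu_limited(fps_by_gpu: dict[str, float], tolerance_pct: float = 3.0) -> bool:
    """Hypothetical heuristic: return True when GPUs known to differ in rendering
    power all land within tolerance_pct of each other, suggesting a CPU or game
    engine bottleneck rather than a GPU one. The caller must pick GPUs that
    genuinely differ in strength; the function cannot check that itself."""
    if len(fps_by_gpu) < 2:
        raise ValueError("need results for at least two GPUs")
    fastest = max(fps_by_gpu.values())
    slowest = min(fps_by_gpu.values())
    if slowest <= 0:
        raise ValueError("frame rates must be positive")
    # Spread between the fastest and slowest result, as a percentage of the slowest.
    spread_pct = (fastest / slowest - 1.0) * 100.0
    return spread_pct <= tolerance_pct


# Made-up averages for two GPUs of different strength (not measured data).
same_ceiling = {"GPU A": 145.0, "GPU B": 144.0}
gpu_bound = {"GPU A": 145.0, "GPU B": 120.0}

print(looks_cpu_limited(same_ceiling))  # True
print(looks_cpu_limited(gpu_bound))     # False
```

A check like this can only flag a shared ceiling; as noted above, it can't tell you whether that ceiling comes from the CPU or from the game engine.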

As we scroll down the graph, we're seeing strong performance from all the usual suspects and, just as we saw with the ultra quality preset, the Radeon RX 6700 and GeForce RTX 3070 deliver identical performance. The Radeon 5700 XT is also extremely strong, matching the RTX 3060 Ti, which is nuts, and its 137 fps average again meant it edged out the RTX 2080, making it 6% faster than the RTX 2070 Super.

The 6600 XT was only able to match the standard RX 5700, though that did make it a few frames faster than the RTX 3060. Then we have the 5600 XT roughly matching the RTX 2070, which made it a little faster than the RTX 2060, and again Vega 56 can be seen beating the GTX 1080 Ti, making it a whopping 23% faster than the standard GTX 1080.

The Radeon RX 580 also does better with the medium quality preset, pulling ahead of the GTX 1060 by an 11% margin to match the GTX 1070. Some of the 4GB graphics cards struggled, and although the GTX 1650 Super did well, the 4GB 5500 XT can once again be found at the very bottom of our graph.

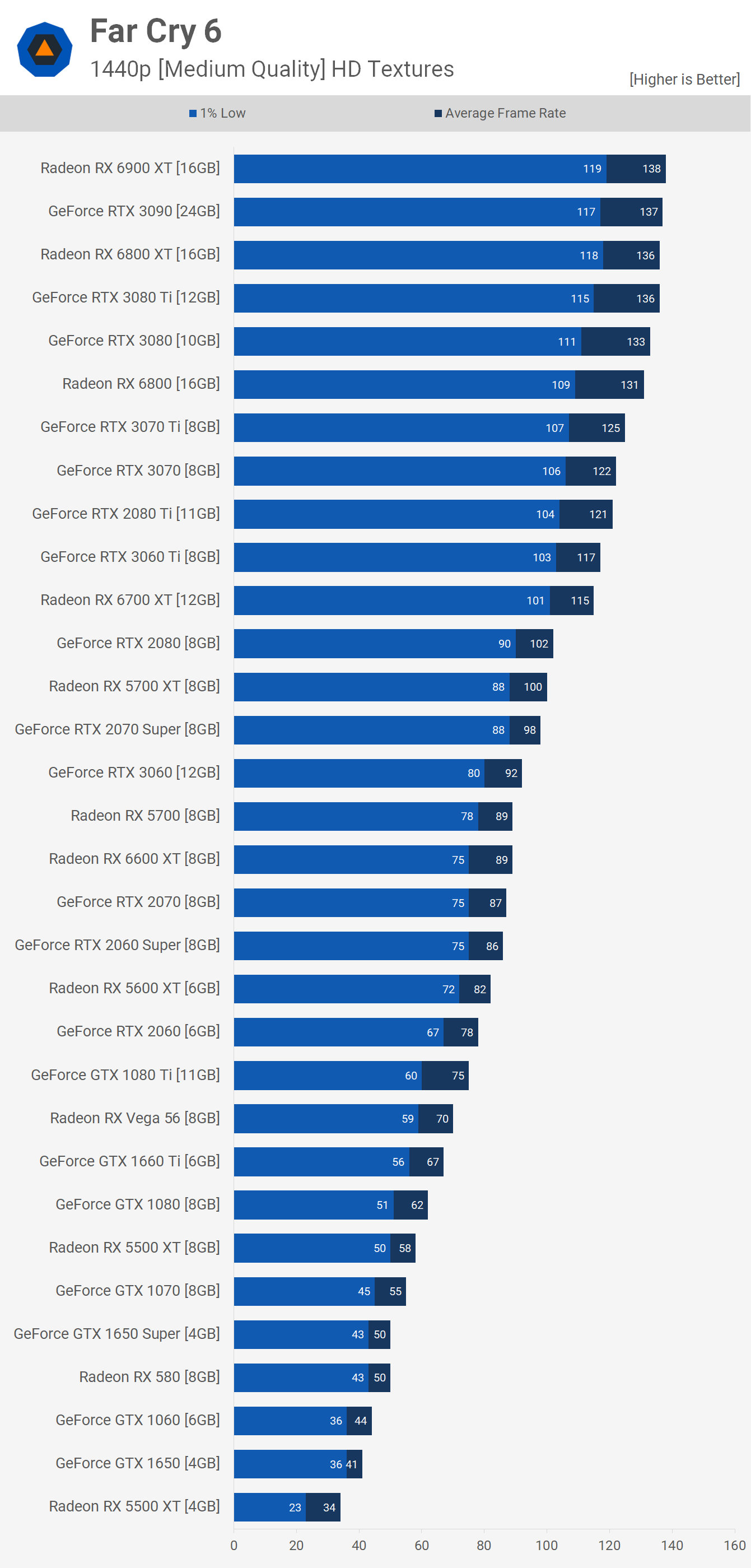

The 1440p medium results see the vast majority of the GPUs tested still able to render over 60 fps on average. At the top, we again find the Radeon 6900 XT matching the RTX 3090, along with the 6800 XT and 3080 Ti, while the RTX 3080 and RX 6800 both averaged over 130 fps.

Then, between 125 fps and the RX 6700's 115 fps, we have a range of Nvidia GPUs: the 3060 Ti, 2080 Ti, 3070 and 3070 Ti. Again, the 5700 XT was able to match the RTX 2080, an incredible result for the first-gen RDNA part. Once again we see the 6600 XT only matching the vanilla 5700, which made it a few frames slower than the standard RTX 3060, a disappointing result for an AMD sponsored title.

It's worth mentioning that mid-range to low-end GPUs saw a 30-40% performance improvement with the medium quality preset compared to ultra, and I've got to say that's a massive increase for what's often only a small difference in visual quality. Parts such as the GTX 1070, 1650 Super and RX 580 delivered playable performance, but depending on the frame rates you're chasing, you might want to lower the quality settings even further.

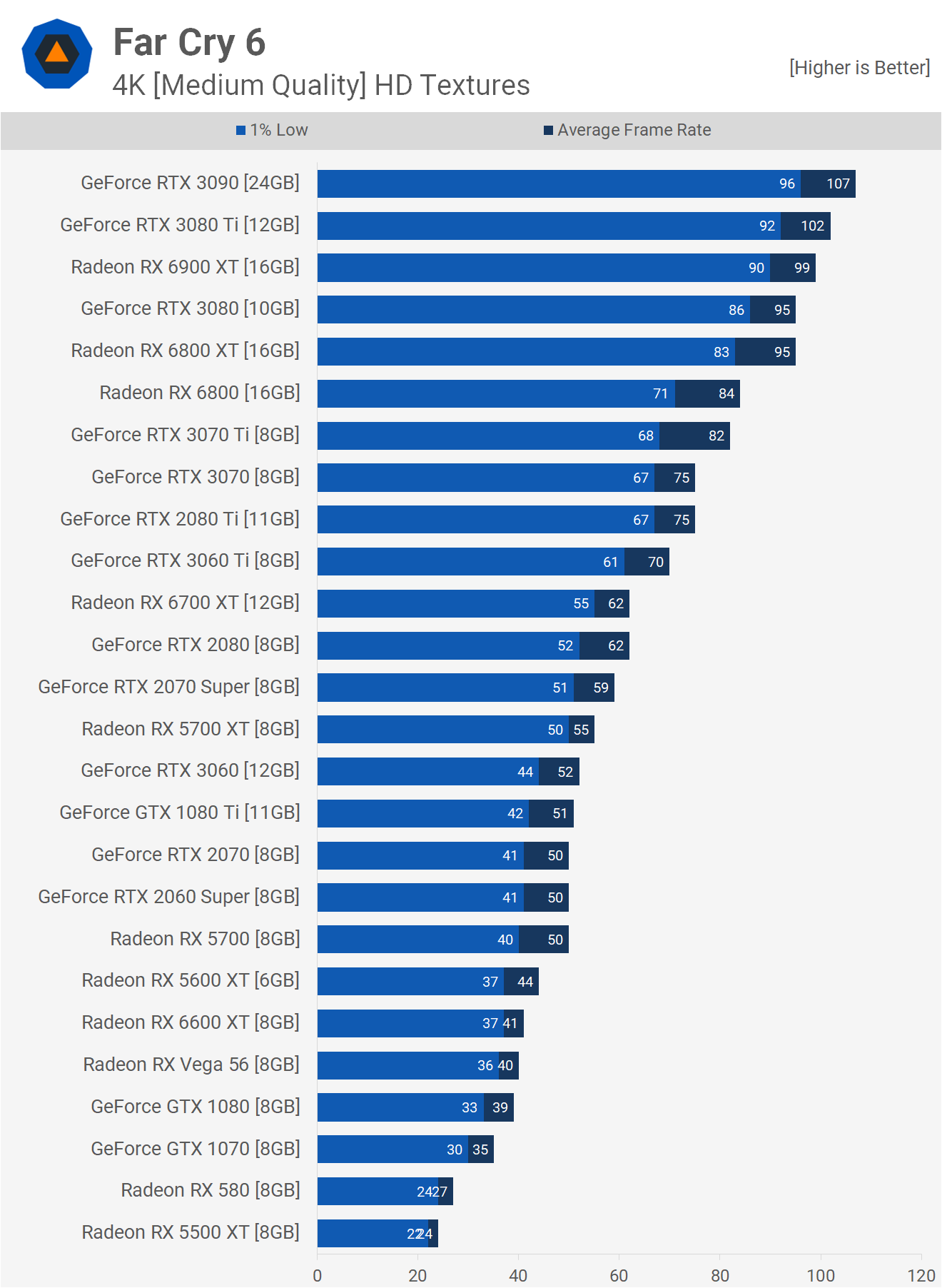

For those of you wishing to game at 4K who are happy to do so with dialed-down quality settings, we've got good news: Far Cry 6 is very playable with most modern graphics cards. Nvidia pulls ahead at the high end with the RTX 3090 and 3080 Ti edging out the 6900 XT, though the RTX 3080 and 6800 XT were comparable, as were the RX 6800 and RTX 3070 Ti.

With the RX 6700 and RTX 2080 we're dipping down towards 60 fps, while several options, such as the RTX 3060 Ti, GTX 1080 Ti, RTX 2070 and 2060 Super, are capable of around 50 fps. Below that, the low frame rate really starts to become noticeable, so you'd want to drop the resolution back down to 1440p or lower.

Ultra vs. Ultra DXR Comparison

Here's a quick look at the visual quality difference, or lack thereof, between ultra with and without DXR reflections enabled. I watched some footage over and over and couldn't spot the difference, so I turned to Tim's trained eye, and he informed me that ray tracing was working and there was a difference.

Below is an example, zoomed in by 400% to best illustrate it. You can see that the car's shadow in the puddle of water is bigger and more realistic, but yeah, that's it...

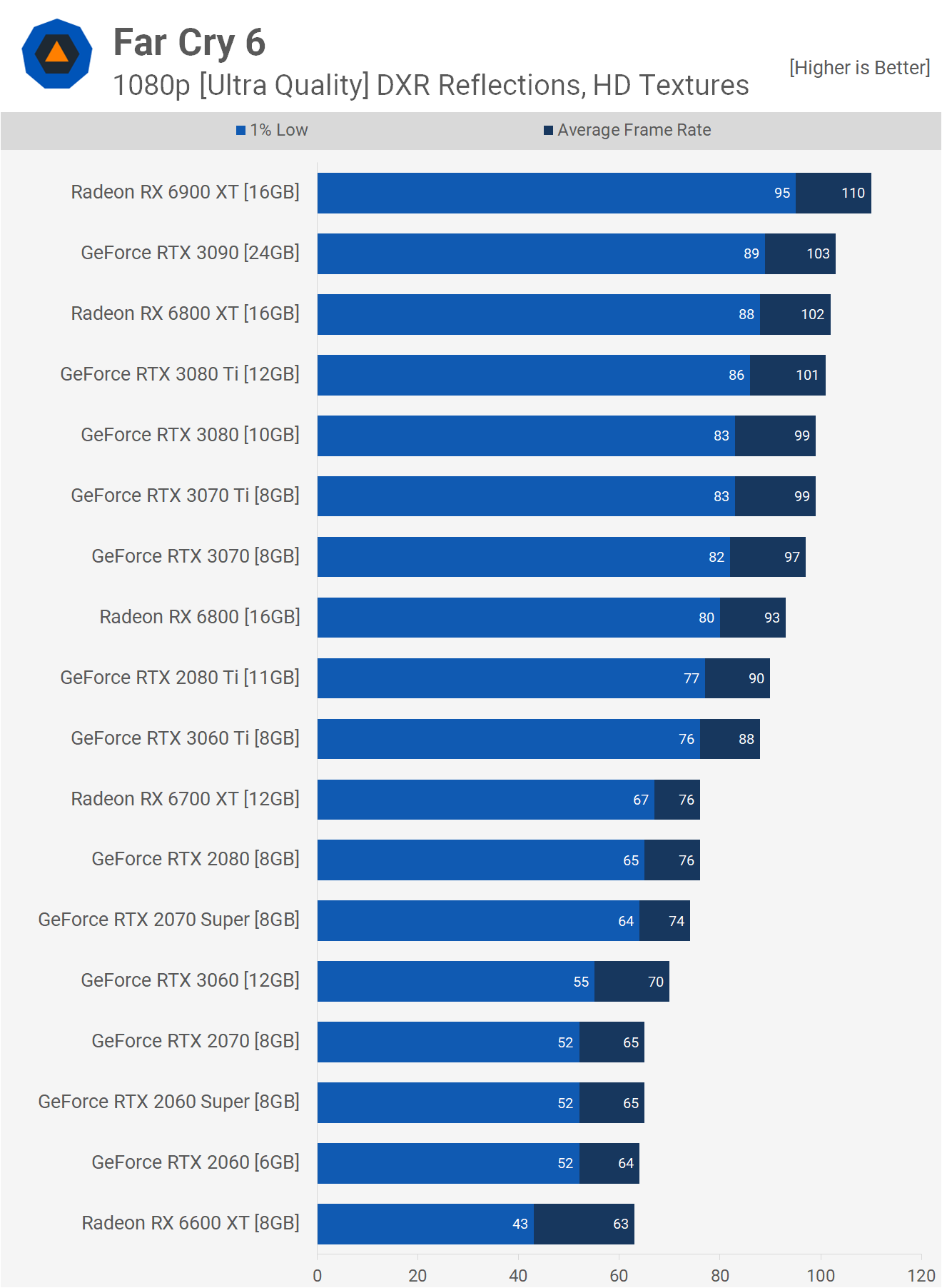

When playing the game, the visual difference is no more noticeable than in the built-in benchmark, and the performance hit is much the same. No surprises here: Radeon GPUs aren't very good at real-time ray tracing yet, but since they're advertised as supporting it and AMD wants to push that marketing angle, the best approach is to use it, just very sparingly, to avoid a serious performance penalty.

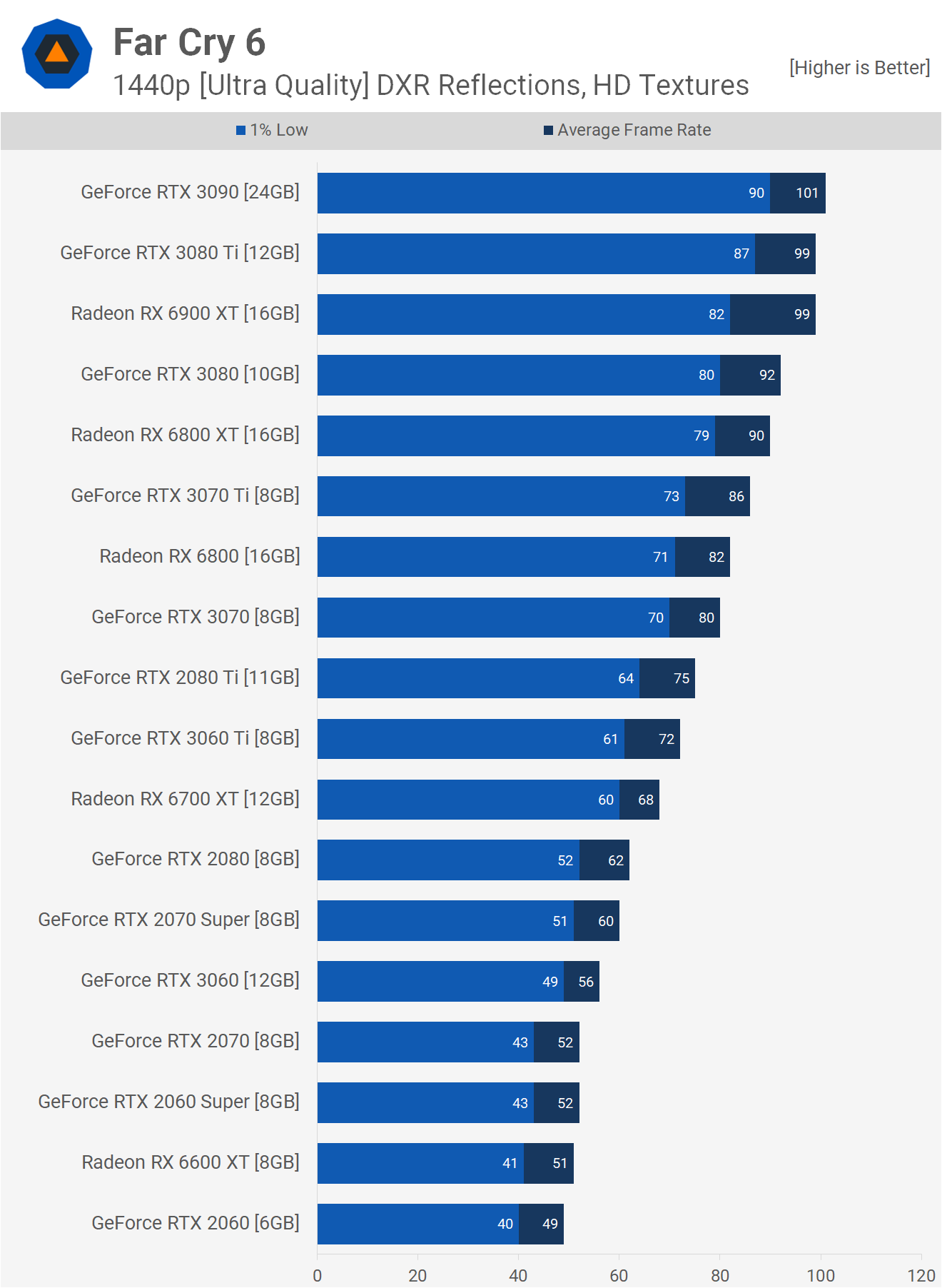

At 1080p, enabling DXR reflections costs only 10-15% of performance, which explains why the effects are so hard to spot. In games with more prominent ray traced effects, a part like the 6900 XT can lose at least 40% of its original performance, so given how small the performance hit is here, it's unsurprising that I really struggled to tell when the feature was enabled. Frankly, I'd run with DXR disabled, but let's take a look at the 1440p results.
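The percentage hits quoted in this section are measured relative to the frame rate with DXR off. A minimal sketch of that arithmetic, assuming the usual definition; the helper names and the 100 fps baseline are hypothetical, chosen as a round number for illustration rather than taken from our results.

```python
def percent_hit(fps_off: float, fps_on: float) -> float:
    """Performance lost by enabling a feature, as a percentage of the frame rate without it."""
    if fps_off <= 0:
        raise ValueError("fps_off must be positive")
    return (1.0 - fps_on / fps_off) * 100.0


def fps_with_hit(fps_off: float, hit_percent: float) -> float:
    """Frame rate left over after a given percentage hit."""
    return fps_off * (1.0 - hit_percent / 100.0)


# Hypothetical baseline, for illustration only (not measured data).
baseline = 100.0
for hit in (10, 15, 40):
    print(f"{hit}% hit: {baseline:.0f} fps -> {fps_with_hit(baseline, hit):.0f} fps")
```

Put that way, the 10-15% hit seen here costs a 100 fps baseline 10-15 fps, whereas a 40% hit in a heavier ray tracing title would leave just 60 fps.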

At 1440p we're looking at a 20% performance hit for the 6900 XT with DXR reflections enabled and a 13% hit for the RTX 3090. Both are small compared to most other titles that support DXR, but even so, given the very minor visual upgrade, at least as seen in my testing, I don't think I'd bother enabling it.

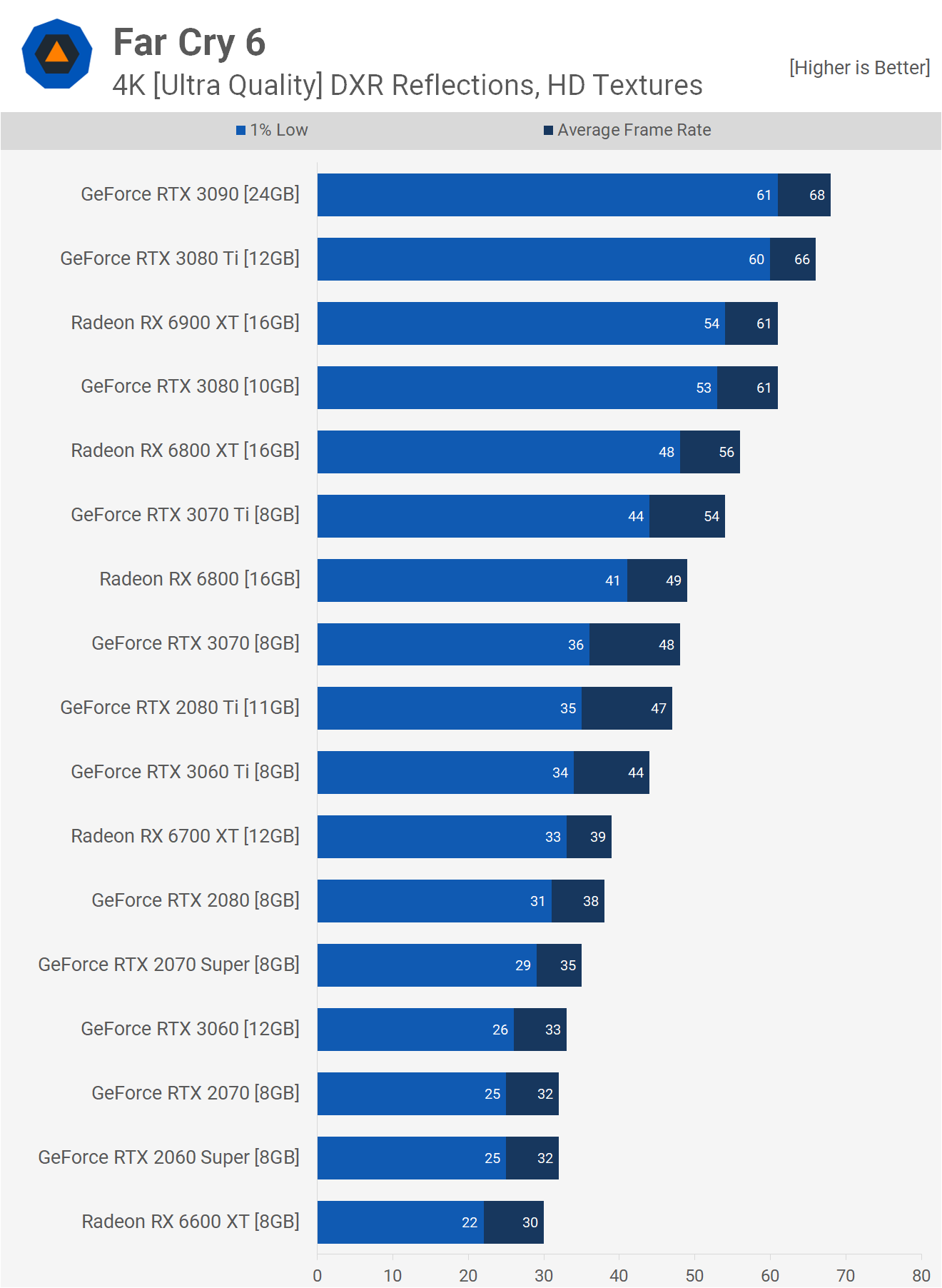

At 4K we're looking at a 22% drop in performance for the 6900 XT, versus just a 13% hit for the RTX 3090. The more rays cast, the wider that margin will become, which is presumably why AMD has opted for extremely limited use of ray tracing in this title.

VRAM Usage

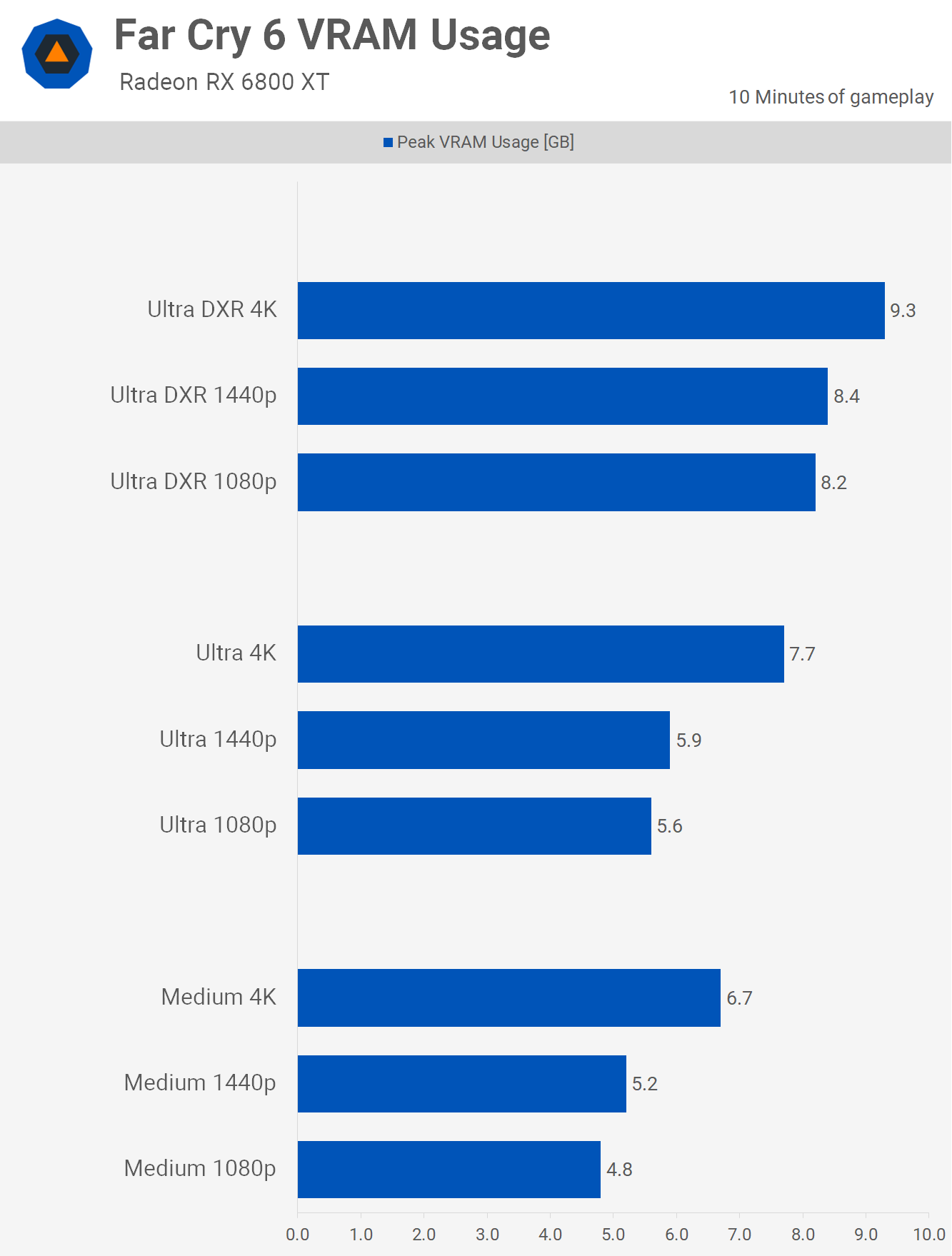

Now here's a look at Far Cry 6 VRAM usage with the HD texture pack using a Radeon RX 6800 XT. These figures were recorded after 10 minutes of gameplay and for each quality setting or resolution change, the game was completely closed and the process repeated for accurate data.

You may have noticed that 4GB and 6GB graphics cards were removed from the 4K results, and that's because they basically didn't work. Some configurations did work, like the RTX 2060, which appeared fine with ray tracing enabled despite the game using over 8GB of VRAM at 1080p and 1440p, though it was broken at 4K.

For 1440p gaming with the ultra or medium quality settings, you'll ideally want a GPU with at least 6GB of VRAM, while 4GB is sketchy even at 1080p using medium.

How Does It Run?

Considering the visual fidelity in Far Cry 6, we'd say the results are quite good. The Far Cry series has never been well optimized for driving high frame rates, but we don't think it needs to be. Since this is a predominantly single-player open-world game, most gamers will be happy with 60 fps, while the pickier among us, such as myself, will be content with 90 fps.

That means those who just want to max out the quality settings, without any tinkering to find the best balance of performance and visuals, can achieve around 90 fps at 1440p with an RTX 3060 Ti or RX 6700, which is pretty decent.

More modest previous-generation GPUs such as the 5700 XT are good for around 80 fps, though you'll need something a lot more expensive from Nvidia, such as the RTX 2080, to achieve the same level of performance.

Dialing the quality preset back to medium can improve frame rates substantially while only slightly downgrading the visuals. For gamers using mid-range to low-end hardware, this is a great option.

Using the medium preset, the 5700 XT, RTX 2080, 2070 Super and RTX 3060 were all good for over 90 fps at 1440p, while 60 fps was achievable with more modest hardware, such as the GTX 1660 Ti or Radeon 5600 XT.


Because this is an AMD sponsored title, you'd expect Radeon GPUs to perform well, and they did. High-end RDNA2 parts such as the 6900 XT, 6800 XT, 6800 and 6700 all performed very well relative to their GeForce competitors. However, the 6600 XT was disappointing, as it could only match the RX 5700.

But maybe it's not a case of the 6600 XT performing worse than expected (after all, it did match the RTX 3060 in most of our testing), but rather of first-gen RDNA GPUs like the 5600 XT, 5700 and 5700 XT performing well beyond expectation. A possible reason for this is memory bandwidth: all three models have more of it than the 6600 XT, whose bandwidth is comparable to the 5500 XT's. Of course, the 6600 XT does have Infinity Cache as well, though it's hard to say how useful its relatively small 32MB buffer is.
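The bandwidth argument is easy to sanity-check with the standard formula: peak bandwidth equals the bus width in bytes multiplied by the per-pin data rate. The sketch below uses the reference GDDR6 configurations as we understand them (worth double-checking against AMD's spec sheets, since board partner cards can differ), and it deliberately ignores the 6600 XT's 32MB Infinity Cache, which is meant to offset its narrower bus.

```python
def bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bus width in bytes times per-pin data rate."""
    return bus_width_bits / 8 * data_rate_gbps


# Reference memory configurations (bus width in bits, GDDR6 Gbps per pin).
# The 5600 XT launched at 12 Gbps; many cards were later updated to 14 Gbps.
cards = {
    "RX 5500 XT": (128, 14.0),
    "RX 6600 XT": (128, 16.0),
    "RX 5600 XT": (192, 14.0),
    "RX 5700": (256, 14.0),
    "RX 5700 XT": (256, 14.0),
}

for name, (bus, rate) in cards.items():
    print(f"{name}: {bandwidth_gb_s(bus, rate):.0f} GB/s")
```

On raw numbers the 6600 XT (256 GB/s) sits far closer to the 5500 XT (224 GB/s) than to the 5700 series (448 GB/s), which fits the theory, but how much the Infinity Cache closes that gap in Far Cry 6 isn't something this calculation can tell us.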

Other surprises include the venerable Vega 56 pumping out 70 fps on average at 1440p using the medium quality preset, making it just 7% slower than the GTX 1080 Ti and faster than the rest of the GeForce 10 series. It'll be interesting to see whether Nvidia improves performance for its older GPUs with an updated driver.

Finally, ray tracing in Far Cry 6 is not as relevant as you may think. We know this isn't a strength of RDNA2, so the average result was more or less expected. However, the game's ray traced graphics are nothing to write home about. Granted, we didn't explore them in depth, but I personally couldn't even tell when the feature was enabled.