It's hard to overstate how far computers have come and how they have transformed just about every aspect of our lives. From rudimentary devices like toasters to cutting-edge ones like spacecraft, you'll be hard-pressed to find a device that doesn't make use of some form of computing capability.

At the heart of every one of these devices is some form of CPU, responsible for executing program instructions as well as coordinating all the other parts that make the computer tick. For an in-depth explainer on what goes into CPU design and how a processor works internally, check out this amazing series here on TechSpot. For this article, however, the focus is on a single aspect of CPU design: the multi-core architecture and how it's driving the performance of modern CPUs.

Unless you're using a computer from two decades ago, chances are you have a multi-core CPU in your system, and this isn't limited to full-sized desktops and servers: mobile and low-power devices have them too. To cite a single mainstream example, the Apple Watch Series 7 touts a dual-core CPU. Considering this is a small device that wraps around your wrist, it shows just how much design innovations have helped raise the performance of computers.

On the desktop side, recent Steam hardware surveys show how much multi-core CPUs dominate the PC market: over 70% of Steam users have a CPU with 4 or more cores. But before we delve any deeper, it's worth defining some terminology. And even though we're limiting the scope to desktop CPUs, most of what we discuss applies equally to mobile and server CPUs in different capacities.

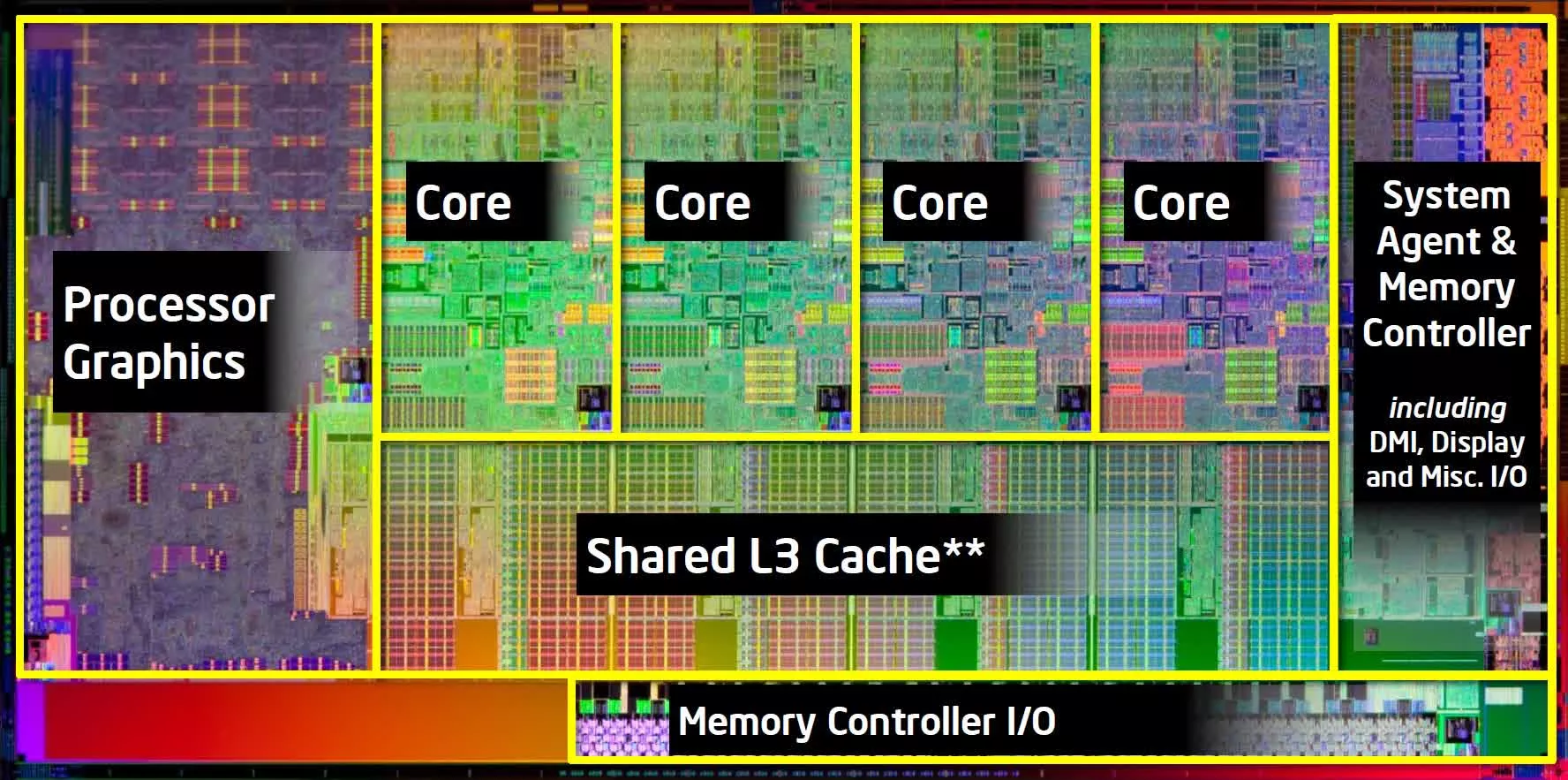

First and foremost, let's define what a "core" is. A core is a self-contained processing unit capable of executing a computer program. It usually consists of an arithmetic logic unit, a control unit, caches, and data buses, which together allow it to execute program instructions independently.

A multi-core CPU is simply one that combines more than one core in a single processor package that functions as one unit. This configuration lets the individual cores share some common resources, such as caches, which helps speed up program execution. Ideally, performance would scale linearly with the number of cores, but this is usually not the case, as we'll discuss later in this article.
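One common way to reason about why extra cores don't translate into proportional speedups is Amdahl's law, a standard model that this article doesn't spell out itself: if only a fraction p of a program's work can run in parallel, the best-case speedup on n cores is 1 / ((1 - p) + p / n). The Python sketch below simply evaluates that formula. The 90% parallel fraction in the example is an arbitrary illustration rather than a measured figure, and real-world scaling also suffers from overheads (synchronization, memory bandwidth) that the formula ignores.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Best-case speedup predicted by Amdahl's law.

    parallel_fraction: share of the runtime (0.0 to 1.0) that can be spread
    across cores; the remainder must run serially on a single core.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial = 1.0 - parallel_fraction
    # The serial part takes the same time no matter how many cores exist;
    # only the parallel part shrinks as cores are added.
    return 1.0 / (serial + parallel_fraction / cores)


if __name__ == "__main__":
    # Illustrative program that is 90% parallelizable.
    for n in (1, 2, 4, 8, 16):
        print(f"{n:2d} cores -> {amdahl_speedup(0.9, n):.2f}x")
```

With 90% of the work parallelizable, 16 cores yield only about a 6.4x speedup, and no core count can push it past 10x, because the serial 10% still runs on one core.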

Another aspect of CPU design that confuses many people is the distinction between a physical and a logical core. A physical core is the actual hardware unit, implemented by the transistors and circuitry that make up the core. A logical core, on the other hand, refers to the core's ability to execute an independent thread. Making use of that ability depends on more than the CPU core itself: the operating system has to schedule the program's threads onto the logical cores, and the program has to be written in a way that lends itself to multithreading. That can be challenging, because the instructions that make up a single program are rarely independent of one another.

In other words, a single physical core can be designed to execute more than one thread concurrently, and the number of logical cores it exposes is the number of threads it can execute simultaneously. Each logical core, however, is a mapping of virtual resources onto the physical core's resources, so when one thread is using a physical resource, other threads that need the same resource have to stall, which hurts performance.
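To make the stall behavior concrete, here is a toy Python model of 2-way SMT. It is a deliberate simplification with hypothetical parameters: the imaginary core has exactly one unit of each kind (the names "alu", "fpu" and "mem" are made up for illustration), every instruction occupies its unit for one cycle, and when both threads want the same unit in the same cycle, one of them stalls, with priority alternating between the threads. Real SMT cores have many more units and far more elaborate scheduling.

```python
from collections import deque


def smt_cycles(thread_a: list[str], thread_b: list[str]) -> int:
    """Cycles for two hardware threads sharing one core (toy 2-way SMT model).

    Each list holds the execution unit each instruction needs, in program
    order. The hypothetical core has one unit of each kind, and every
    instruction occupies its unit for exactly one cycle.
    """
    queues = [deque(thread_a), deque(thread_b)]
    cycles = 0
    priority = 0  # thread that wins a conflict this cycle; alternates for fairness
    while any(queues):
        busy: set[str] = set()  # units already claimed this cycle
        for t in (priority, 1 - priority):
            if queues[t] and queues[t][0] not in busy:
                busy.add(queues[t].popleft())  # issue: claim the unit
            # otherwise the thread stalls (or has finished) this cycle
        priority = 1 - priority
        cycles += 1
    return cycles


if __name__ == "__main__":
    no_conflict = smt_cycles(["alu", "alu", "fpu"], ["fpu", "mem", "alu"])
    all_conflict = smt_cycles(["alu"] * 3, ["alu"] * 3)
    print(no_conflict, all_conflict)
```

Two threads that need different units finish their six instructions in three cycles, while two threads that need the ALU for every instruction take six cycles, no better than running them one after the other.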

Almost all desktop CPU designs from Intel and AMD are limited to 2-way simultaneous multithreading (SMT), while some IBM CPUs offer up to 8-way SMT, though these are mostly found in server and workstation systems. The interplay between the CPU, the operating system, and user applications offers an interesting look at how these independently developed components influence each other, but so as not to get sidetracked, we'll leave that for a future article.

Before multi-core CPUs

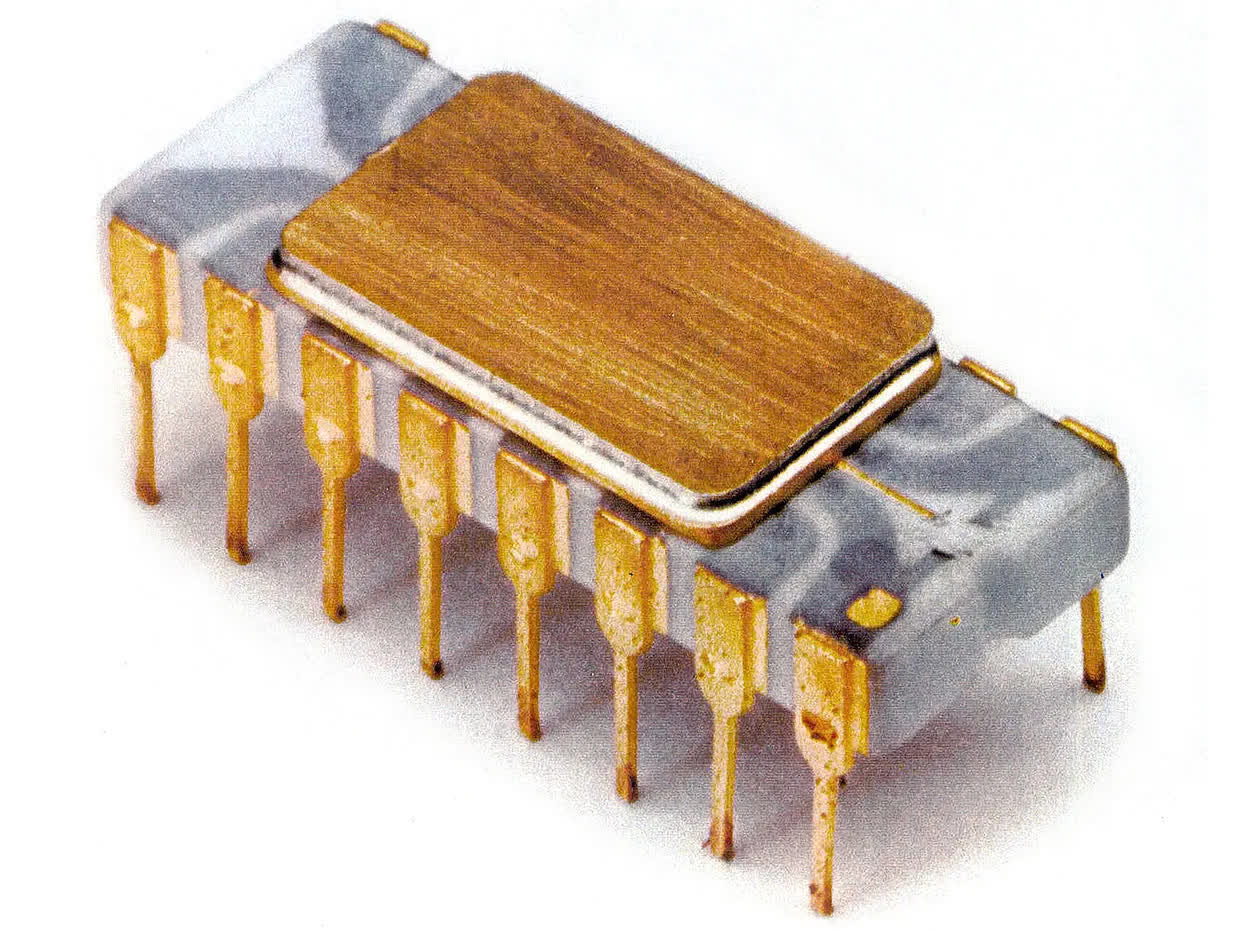

Taking a brief look at the pre-multi-core era will help us appreciate just how far we have come. As the name implies, a single-core CPU has a single physical core. The earliest commercially available microprocessor was the Intel 4004, a technical marvel when it was released in 1971.

This 4-bit, 750kHz CPU revolutionized not just microprocessor design but the entire integrated circuit industry. Around the same time, other notable processors like the Texas Instruments TMS-0100 were developed to compete in similar markets, mainly calculators and control systems. From then on, processor performance improvements came mainly from clock frequency increases and wider data and address buses. This is evident in designs like the Intel 8086, a single-core processor released in 1978 with a maximum clock frequency of 10MHz, a 16-bit data width, and a 20-bit address width.

Going from the Intel 4004 to the 8086 represented more than a 10-fold increase in transistor count, and counts kept climbing steeply in subsequent generations. In addition to the typical frequency and data-width increases, other innovations that helped improve CPU performance included dedicated floating-point units, hardware multipliers, and general instruction set architecture (ISA) improvements and extensions.

Continued research and investment brought pipelined designs such as the Intel i386 (80386). Pipelining splits instruction execution into distinct stages, so while one instruction is in one stage, other instructions can be in the other stages, allowing several instructions to be in flight at once.
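The benefit of pipelining can be estimated with simple arithmetic. In an idealized pipeline with k stages and no stalls, the first instruction needs k cycles to get through, after which one instruction completes every cycle, so n instructions take k + n - 1 cycles instead of n × k. The sketch below assumes no hazards, branch mispredictions, or memory stalls, and the 5-stage depth used in the example is a hypothetical round number, not a claim about the i386.

```python
def unpipelined_cycles(n_instructions: int, stages: int) -> int:
    # Each instruction passes through every stage before the next one starts.
    return n_instructions * stages


def pipelined_cycles(n_instructions: int, stages: int) -> int:
    # Ideal pipeline: the first instruction takes `stages` cycles to fill the
    # pipeline, then one instruction completes on every following cycle.
    if n_instructions == 0:
        return 0
    return stages + n_instructions - 1


if __name__ == "__main__":
    n, k = 100, 5  # illustrative workload and pipeline depth
    before, after = unpipelined_cycles(n, k), pipelined_cycles(n, k)
    print(before, after, f"{before / after:.2f}x")
```

For 100 instructions on a 5-stage pipeline, that is 104 cycles instead of 500, close to the ideal 5x.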

Superscalar architectures were introduced as well, and they can be thought of as precursors to the multi-core design. A superscalar implementation duplicates some instruction execution units, allowing the CPU to run multiple instructions at the same time as long as those instructions don't depend on each other. The earliest commercial CPUs to implement this technique included the Intel i960CA, the AMD 29050 from the 29000 series, and the Motorola MC88110.
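The "no dependencies" condition is what limits a superscalar design. The following toy Python model of a 2-wide, in-order core issues up to two consecutive instructions per cycle, but only if the later one doesn't read a register written by an earlier one in the same group (a read-after-write dependency). The register names and the one-cycle result latency are assumptions made for illustration; real designs also handle other hazards and often reorder instructions.

```python
Instr = tuple[str, tuple[str, ...]]  # (destination register, source registers)


def superscalar_cycles(program: list[Instr], width: int = 2) -> int:
    """Cycles for a toy in-order superscalar core.

    Up to `width` consecutive instructions issue together, unless one reads a
    register written by an earlier instruction in the same group. Every
    result is assumed to be ready on the next cycle.
    """
    cycles = 0
    i = 0
    while i < len(program):
        written: set[str] = set()  # registers produced in this cycle's group
        issued = 0
        while i < len(program) and issued < width:
            dest, sources = program[i]
            if any(src in written for src in sources):
                break  # dependent instruction must wait for the next cycle
            written.add(dest)
            issued += 1
            i += 1
        cycles += 1
    return cycles


if __name__ == "__main__":
    independent = [("r1", ("r0",)), ("r2", ("r0",)), ("r3", ("r0",)), ("r4", ("r0",))]
    chain = [("r1", ("r0",)), ("r2", ("r1",)), ("r3", ("r2",)), ("r4", ("r3",))]
    print(superscalar_cycles(independent), superscalar_cycles(chain))
```

Four independent instructions finish in 2 cycles, while a chain of four dependent ones still takes 4, leaving the duplicated units idle.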

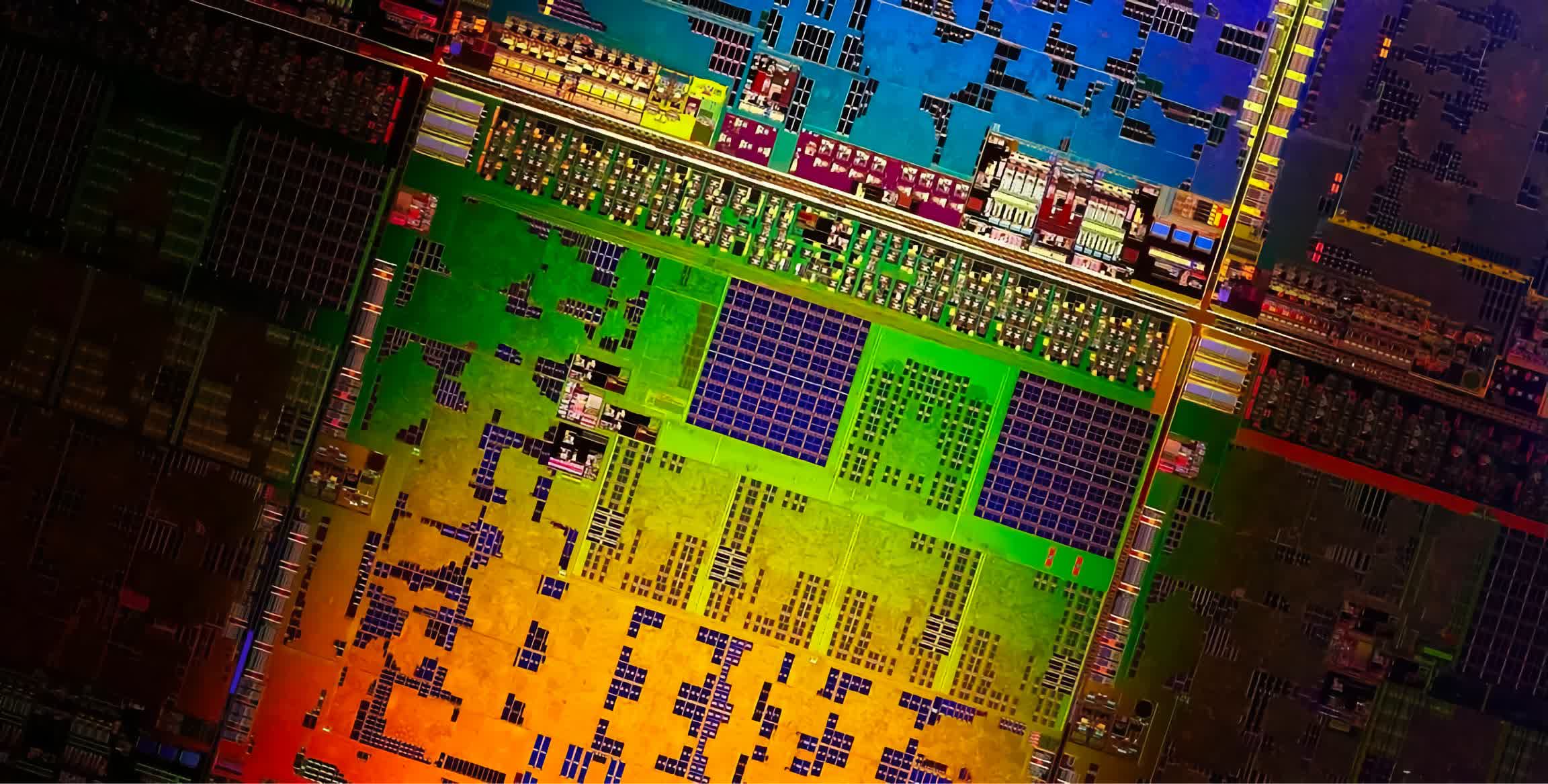

One of the major contributors to the rapid increase in CPU performance with each generation was transistor technology, which kept shrinking the size of the transistor. This significantly lowered transistor operating voltages and let CPUs cram in massive transistor counts and reduce chip area, while making room for larger caches and other dedicated accelerators.

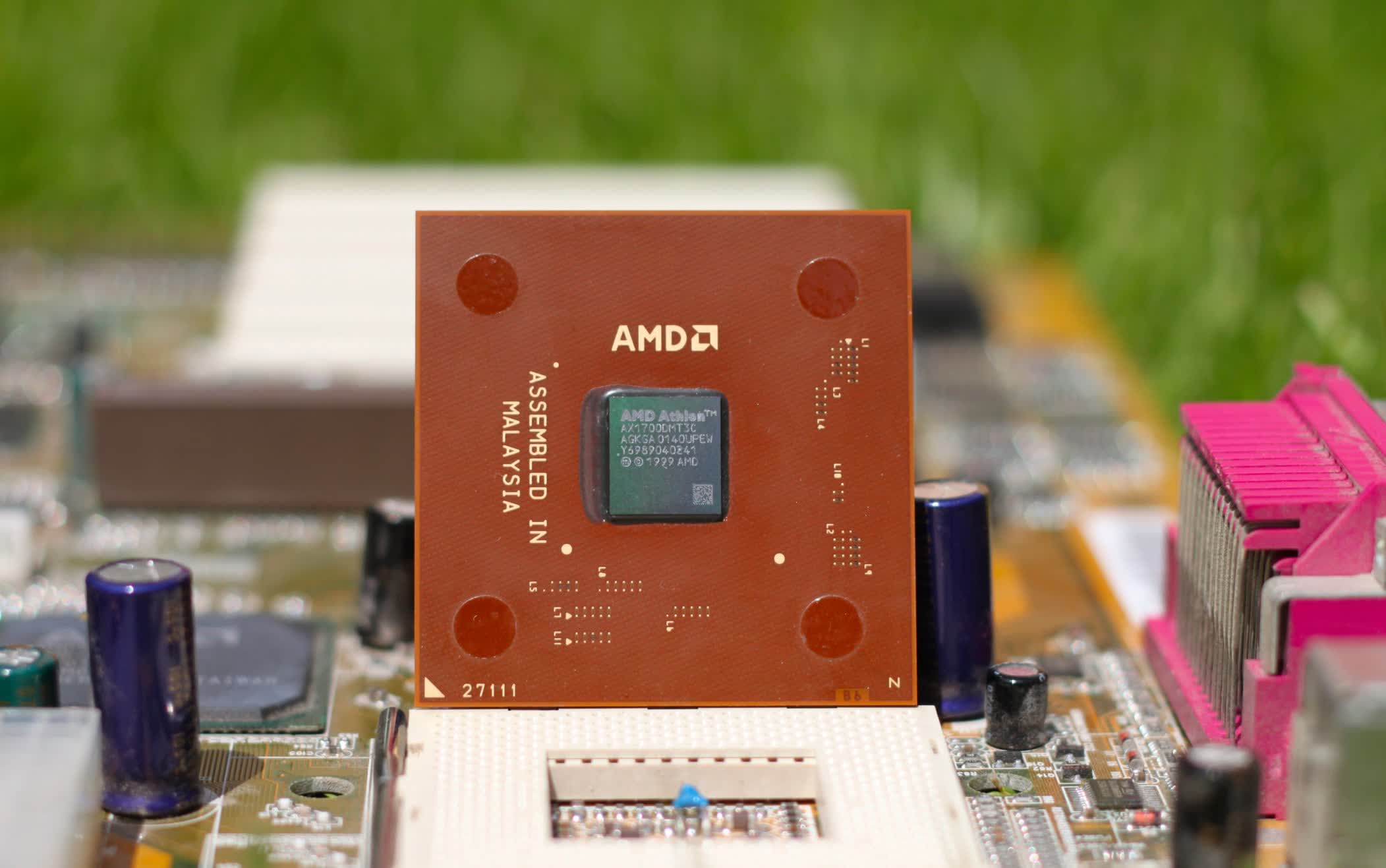

In 1999, AMD released the now-classic, fan-favorite Athlon CPU, which packed in the host of technologies we've talked about so far and hit the mind-boggling 1GHz clock frequency just months later. The chip offered remarkable performance. Better still, CPU designers continued to optimize and innovate with new features such as branch prediction and multithreading.

The culmination of these efforts was what's regarded as one of the top single-core desktop CPUs of its time (and the ceiling of what could be achieved in terms of clock speed): the Intel Pentium 4, running at up to 3.8GHz and supporting 2 threads. Looking back at that era, most of us expected clock frequencies to keep climbing and were hoping for CPUs that could run at 10GHz and beyond, but our ignorance is excusable, since the average PC user was not as tech-informed then as they are today.

Rising clock frequencies and shrinking transistors produced faster designs, but at the cost of higher power consumption: a chip's switching power grows in proportion to its clock frequency, and higher frequencies usually also demand higher voltages, with power rising as the square of voltage. Smaller transistors also leak more current even when they aren't switching, and leakage worsens as the chip heats up. That may not seem like much of a problem for a chip with 25,000 transistors, but for modern chips with billions of transistors, it poses a huge problem.
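The link between clock speed and power is usually described with the first-order formula for dynamic (switching) power in CMOS chips, P = α · C · V² · f, where α is the activity factor, C the switched capacitance, V the supply voltage, and f the clock frequency. The Python sketch below evaluates it relative to a baseline. The 20% frequency and 10% voltage increases in the example are illustrative numbers, and leakage (static) power is not modeled at all.

```python
def dynamic_power(capacitance: float, voltage: float, frequency: float,
                  activity: float = 1.0) -> float:
    """First-order CMOS switching power: activity * C * V^2 * f."""
    return activity * capacitance * voltage ** 2 * frequency


def relative_power(freq_scale: float, volt_scale: float) -> float:
    """Dynamic power relative to a baseline after scaling f and V.

    Capacitance and activity are held constant; leakage is ignored.
    """
    base = dynamic_power(1.0, 1.0, 1.0)
    return dynamic_power(1.0, volt_scale, freq_scale) / base


if __name__ == "__main__":
    # Illustrative: 20% higher clock that needs 10% more voltage.
    print(f"{relative_power(1.2, 1.1):.3f}x baseline power")
```

A 20% clock increase that also needs 10% more voltage raises dynamic power by about 45% (1.2 × 1.1² ≈ 1.45), which is why chasing frequency alone quickly runs into thermal limits.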

When that heat cannot be dissipated effectively, the rising temperature can cause chips to break down. This ceiling on clock frequency meant designers had to rethink CPU design if performance was to keep improving in any meaningful way.

Enter the multi-core era

If we liken a single-core processor with multiple logical cores to a single human with as many arms as there are logical cores, then a multi-core processor would be like a single human with multiple brains and a corresponding number of arms. In principle, having multiple brains means your ability to think could increase dramatically. But before our minds drift too far picturing the character we just imagined, let's step back and look at one more design that preceded the multi-core CPU: the multi-processor system.

These are systems that have more than one physical CPU, along with a shared pool of main memory and peripherals, on a single motherboard. Like most system innovations, these designs were primarily geared towards specialized workloads and applications, such as those found in supercomputers and servers. The concept never took off on the desktop because its performance scaled so poorly for most typical consumer applications. The CPUs had to communicate over external buses and RAM, which meant significant latencies: RAM is "fast", but compared to the registers and caches that reside inside the CPU, it is quite slow. Also, because most desktop programs were not designed to take advantage of these systems, building a multi-processor system for home and desktop use was not worth the cost.
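The cost of going out to RAM can be quantified with the textbook average memory access time (AMAT) formula: AMAT = hit time + miss rate × miss penalty. The latencies in the Python sketch below are hypothetical round numbers chosen only to show the effect; real values vary widely between systems.

```python
def average_access_time(hit_time_ns: float, miss_rate: float,
                        miss_penalty_ns: float) -> float:
    """Average memory access time for one cache level backed by RAM.

    Every access pays the cache hit time; the fraction that misses also
    pays the (much larger) penalty of fetching from RAM.
    """
    return hit_time_ns + miss_rate * miss_penalty_ns


if __name__ == "__main__":
    # Hypothetical latencies: 1 ns cache hit, 80 ns trip to RAM.
    for rate in (0.02, 0.25):
        print(f"miss rate {rate:.0%}: {average_access_time(1.0, rate, 80.0):.1f} ns")
```

With a 1 ns cache hit and an 80 ns trip to RAM, a 2% miss rate averages 2.6 ns per access, while a 25% miss rate averages 21 ns, which illustrates why frequent trips to RAM, like those needed when separate CPUs share data over external buses, are so costly.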

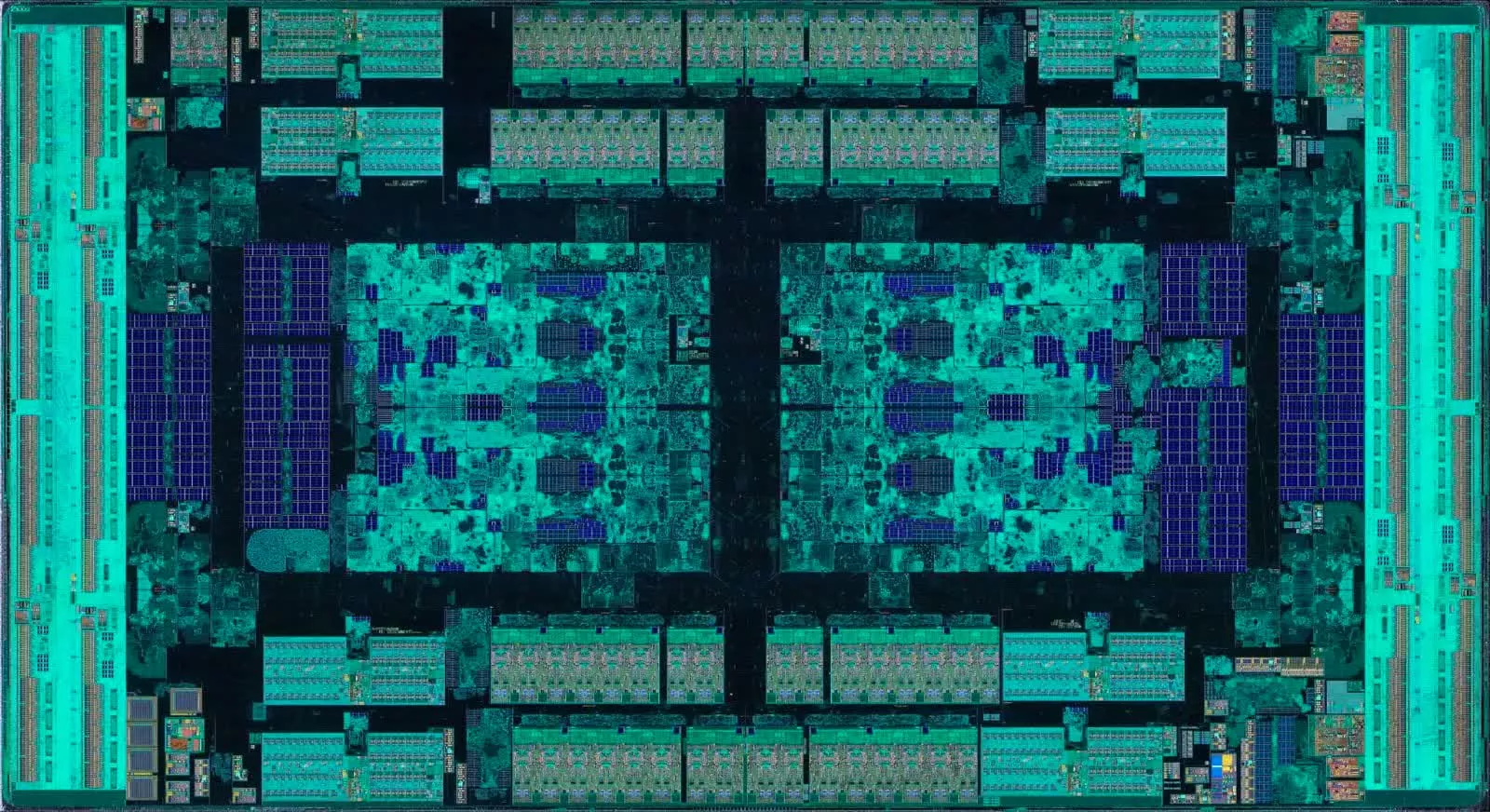

Conversely, because the cores of a multi-core CPU sit much closer together on a single package, they have faster buses to communicate over. Moreover, these cores have shared caches in addition to their individual caches, which improves inter-core communication by dramatically decreasing latency. The tighter coherence and cooperation between cores also meant performance scaled better than on multi-processor systems, and desktop programs could take better advantage of it. In 2001, IBM released the first true multi-core processor with its Power4 architecture and, as expected, it was geared towards workstation and server applications. In 2005, however, Intel released its first consumer-focused dual-core processor, and later that same year AMD released its own with the Athlon 64 X2.

As the GHz race slowed, designers had to look elsewhere for CPU performance gains, mainly through design optimizations and general architecture improvements. A key part of this was the multi-core design, with core counts increasing from one generation to the next. A defining moment was the release of Intel's Core 2 series, which started out with dual-core CPUs and went up to quad-cores in the generations that followed. AMD, for its part, moved from the dual-core Athlon 64 X2 to the Phenom series, which included tri- and quad-core designs.

These days both companies ship multi-core CPU lineups. Intel's 11th-gen desktop Core series topped out at 8 cores/16 threads, while the newer 12th-gen series goes up to 24 threads with a hybrid design that packs 8 performance cores that support multithreading plus 8 efficiency cores that don't. Meanwhile, AMD's Zen 3 powerhouse has a whopping 16 cores and 32 threads. Those core counts are expected to keep increasing and to mix in big.LITTLE approaches, as the 12th-gen Core family just did.

In addition to the core counts, both companies have increased cache sizes, cache levels as well as added new ISA extensions and architecture optimizations. This struggle for total desktop domination has resulted in a couple of hits and misses for both companies.

Up to this point we have ignored the mobile CPU space, but as with most innovations that trickle from one space to another, advancements in the mobile sector, which focuses on efficiency and performance per watt, have led to some very efficient CPU designs and architectures.

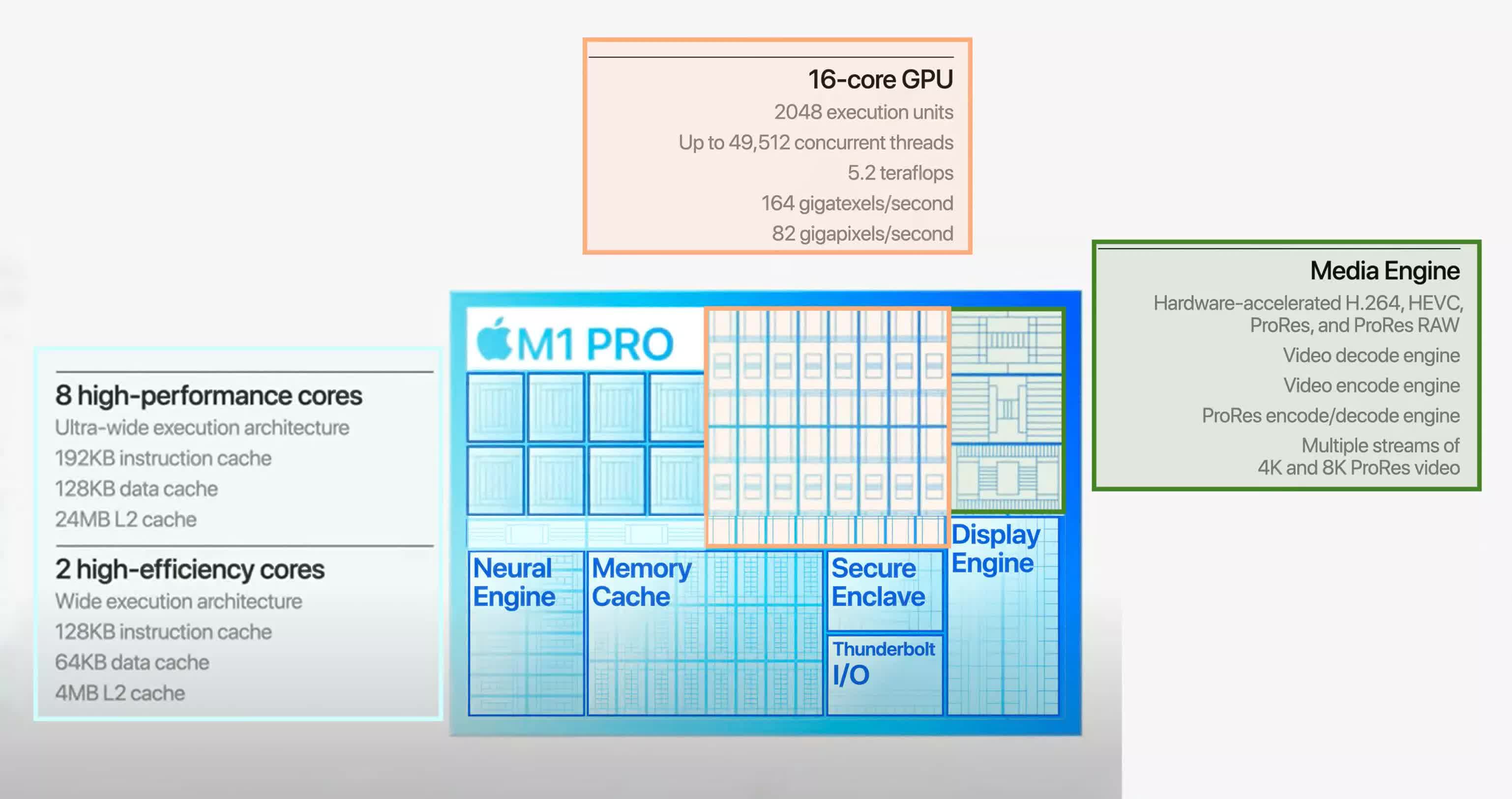

As the Apple M1 chip demonstrates, well-designed CPUs can combine efficient power consumption with excellent performance, and with native Arm support in Windows 11, the likes of Qualcomm and Samsung are likely to try to chip away at some of the laptop market.

The adoption of these efficient design strategies from the low-power and mobile sector has not happened overnight, but has been the result of continued effort by CPU makers like Intel, Apple, Qualcomm, and AMD to tailor their chips to work in portable devices.

What's next for the desktop CPU

Just as the single-core architecture is now one for the history books, today's multi-core architecture could eventually meet the same fate. In the meantime, Intel and AMD seem to be taking different approaches to balancing performance and power efficiency.

Intel's latest desktop CPUs (a.k.a. Alder Lake) implement a hybrid architecture that combines high-performance cores with high-efficiency cores in a configuration that seems taken straight from the mobile CPU market. The top model pairs 8 high-performance cores (16 threads) with 8 low-power cores, for a total of 16 cores and 24 threads.
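Counting cores versus threads in a hybrid design is easy to mix up, since only the performance cores run two threads each. A tiny Python helper makes the arithmetic explicit; the function name is illustrative, and the defaults of 2 threads per performance core and 1 per efficiency core reflect Alder Lake's configuration.

```python
def hybrid_totals(p_cores: int, e_cores: int,
                  p_threads_per_core: int = 2,
                  e_threads_per_core: int = 1) -> tuple[int, int]:
    """Return (total cores, total threads) for a hybrid CPU configuration."""
    cores = p_cores + e_cores
    # Performance cores support SMT; efficiency cores run one thread each.
    threads = p_cores * p_threads_per_core + e_cores * e_threads_per_core
    return cores, threads


if __name__ == "__main__":
    print(hybrid_totals(8, 8))
```

For the top Alder Lake configuration, 8 performance cores and 8 efficiency cores give 16 cores and 24 threads.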

AMD, on the other hand, seems to be pushing for more cores per CPU, and if rumors are to be believed, the company will release a whopping 32-core desktop CPU with its next-generation Zen 4 architecture. That seems pretty believable given how AMD builds its CPUs by grouping multiple core complexes, each with several cores, on the same package.

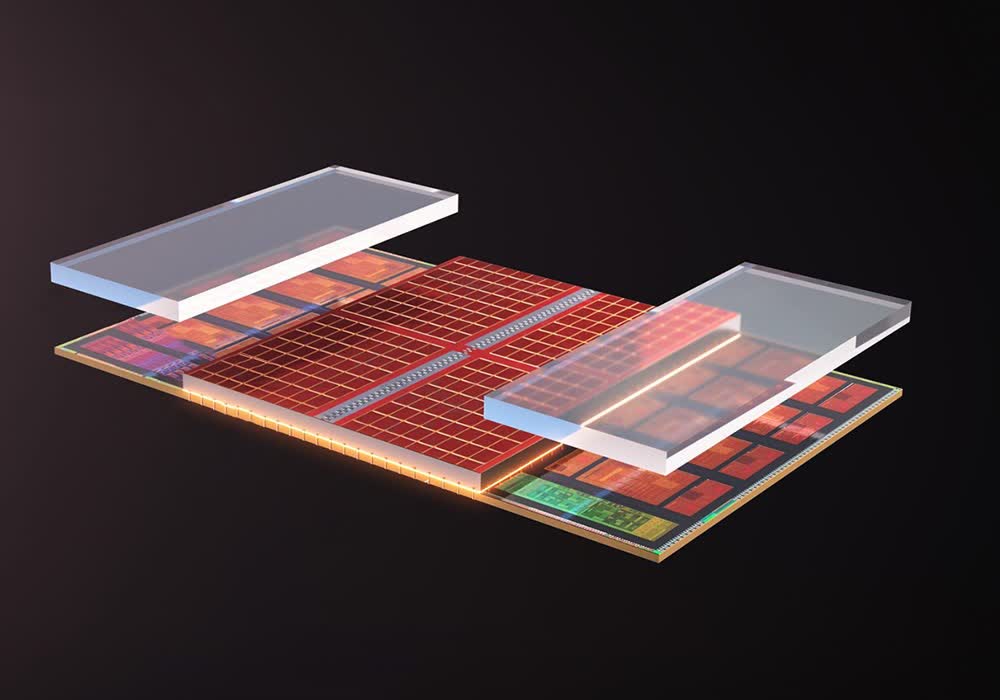

Outside of rumors, though, AMD has confirmed what it calls 3D V-Cache, which stacks a large cache on top of the processor's core die and has the potential to decrease latency and increase performance drastically. This implementation represents a new form of chip packaging and an area of research that holds much potential for the future.

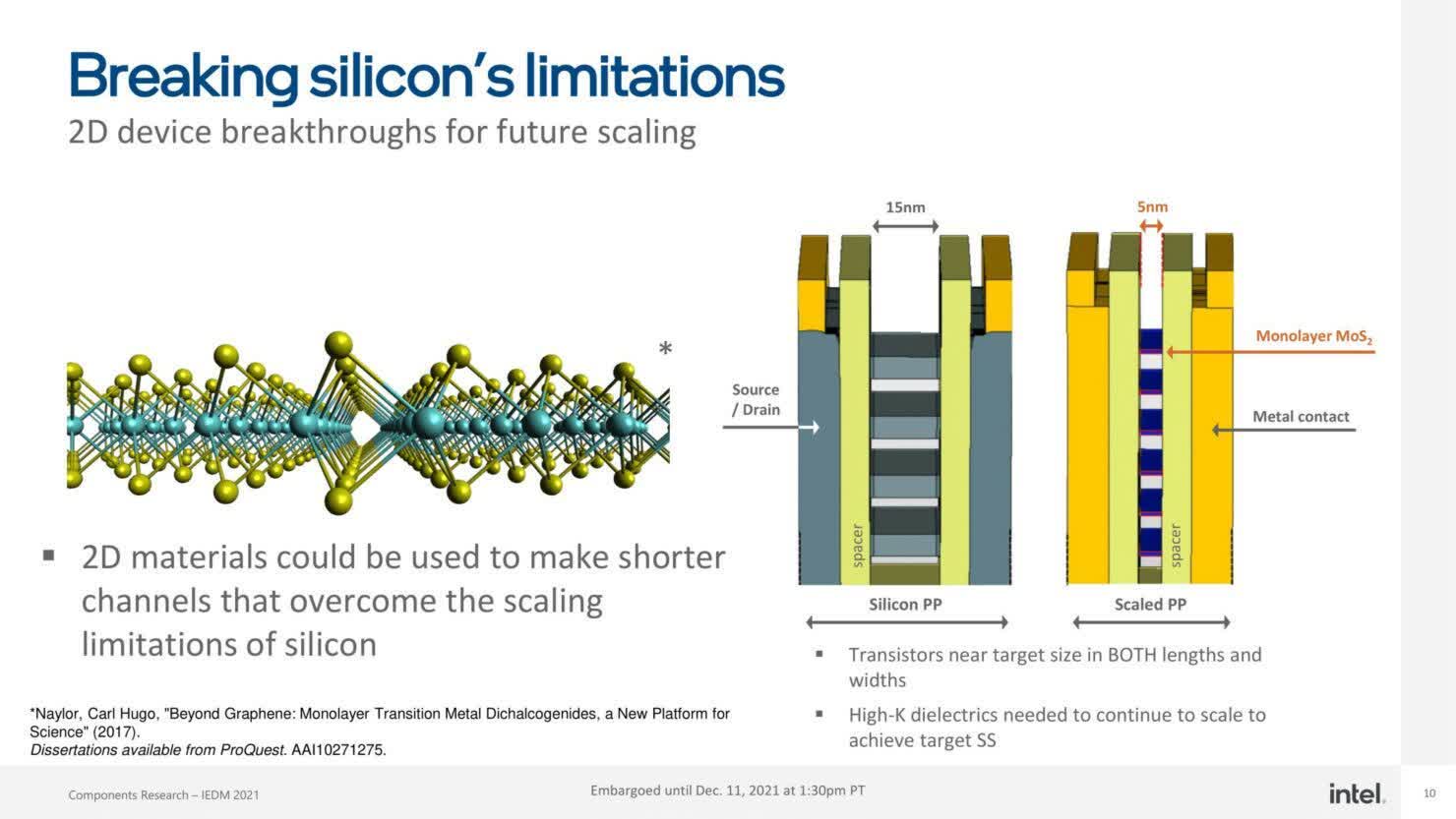

On the downside, however, transistor technology as we know it is nearing its limits as sizes keep shrinking. 5nm currently seems to be the cutting edge, and even though the likes of TSMC and Samsung have announced trials at 3nm, we seem to be approaching the 1nm limit very fast. What follows after that remains to be seen.

For now, a lot of effort is going into researching suitable replacements for silicon, such as carbon nanotubes, which could enable transistors smaller than silicon allows and keep the shrinking going for a while longer. Another area of research concerns how transistors are structured and packaged into dies, as with AMD's 3D V-Cache stacking and Intel's Foveros 3D packaging, which can go a long way toward improving IC integration and increasing performance.

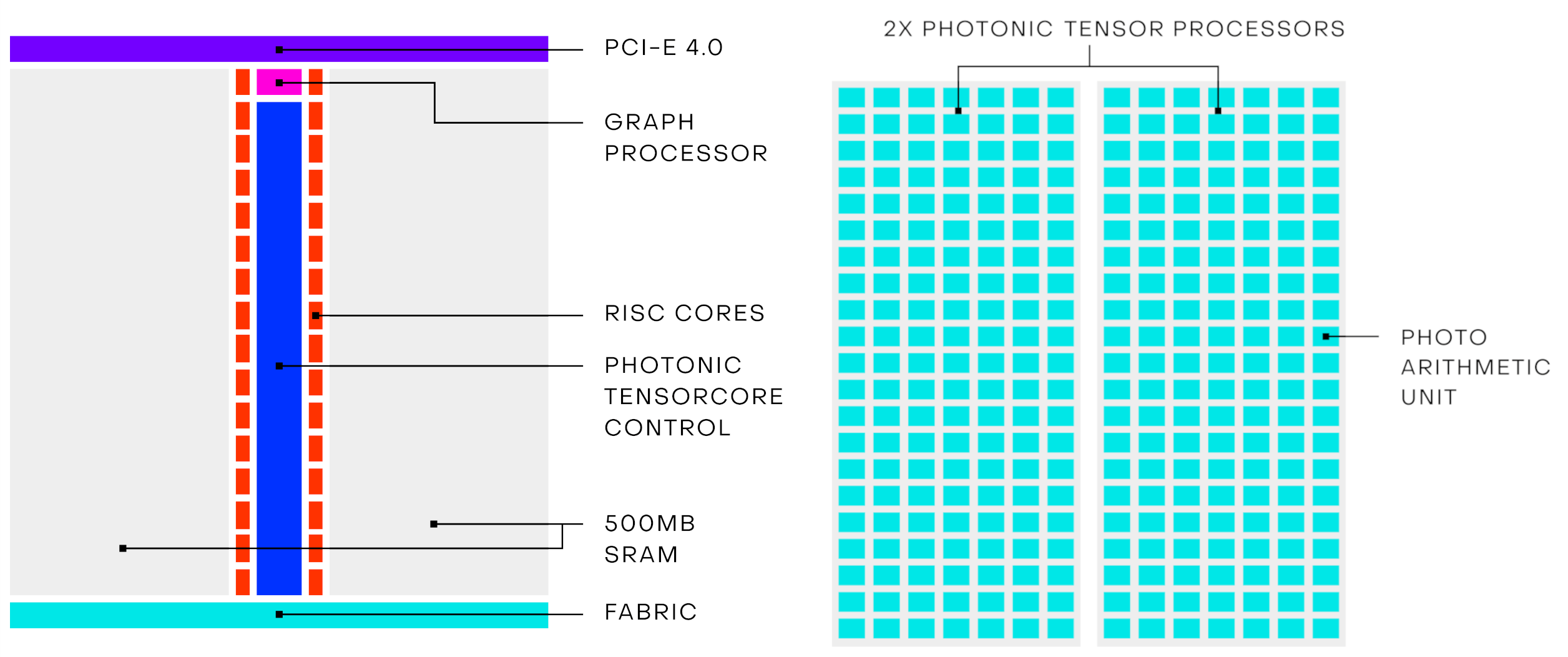

Another area that holds promise to revolutionize computing is photonic processors. Unlike traditional semiconductor technology, which is built around electronics, photonic processors use light (photons) instead of electrons, and because light signals avoid much of the resistance that electrons face when travelling through metal wiring, this approach has the potential to dramatically improve processor speeds. Realistically, we may be decades away from fully optical computers, but in the next few years we could well see hybrid computers that combine photonic CPUs with traditional electronic motherboards and peripherals to deliver the performance uplifts we desire.

Lightmatter, Lightelligence and Optalysys are a few of the companies working on optical computing systems in one form or another, and surely there are many others working in the background to bring this technology to the mainstream.

Another popular, yet dramatically different, computing paradigm is quantum computing, which is still in its infancy, but the amount of research and progress being made there is tremendous.

The first 1-qubit processors were announced not too long ago, and yet in 2019 Google announced a 54-qubit processor and claimed to have achieved quantum supremacy, which is a fancy way of saying its processor can do something a traditional computer cannot do in a realistic amount of time.

Not to be outdone, a team of Chinese researchers unveiled their 66-qubit quantum computer in 2021, and the race keeps heating up, with IBM announcing its 127-qubit quantum computing chip and Microsoft announcing its own efforts to develop quantum computers.

Even though you likely won't be using any of these systems in your gaming PC anytime soon, there's always a chance that at least some of these novel technologies will make it into the consumer space in one form or another. Mainstream adoption has generally been one of the ways to drive down the cost of new technologies and pave the way for more investment into better ones.

That's been our brief history of the multi-core CPU, the designs that preceded it, and the forward-looking paradigms that could replace the multi-core CPU as we know it today. If you'd like to dive deeper into CPU technology, check out our Anatomy of the CPU (and the entire Anatomy of Hardware series), our series on how CPUs work, and the full history of the microprocessor.