Nvidia's latest generation of graphics cards might look familiar on the surface, but dig into the specs and a different story emerges. Earlier this year, we were discussing how the GeForce RTX 5080 is actually closer to an RTX 5070 based on its hardware configuration. Since then, Nvidia has released more graphics cards, and the shrinkflation problem continues.

The underwhelming RTX 5060 is effectively an RTX 5050, and we're going to show you the data to back that up. Even then, it is arguably an under-equipped 50-class product.

Granted, Nvidia is free to brand its products however it chooses. But based on the historical record of previous releases, the problem is so obvious that if you adjust the lineup to reflect what you're actually getting, the GeForce 50 series becomes a lot more palatable. Well... palatable at better prices for gamers, not so much for Nvidia.

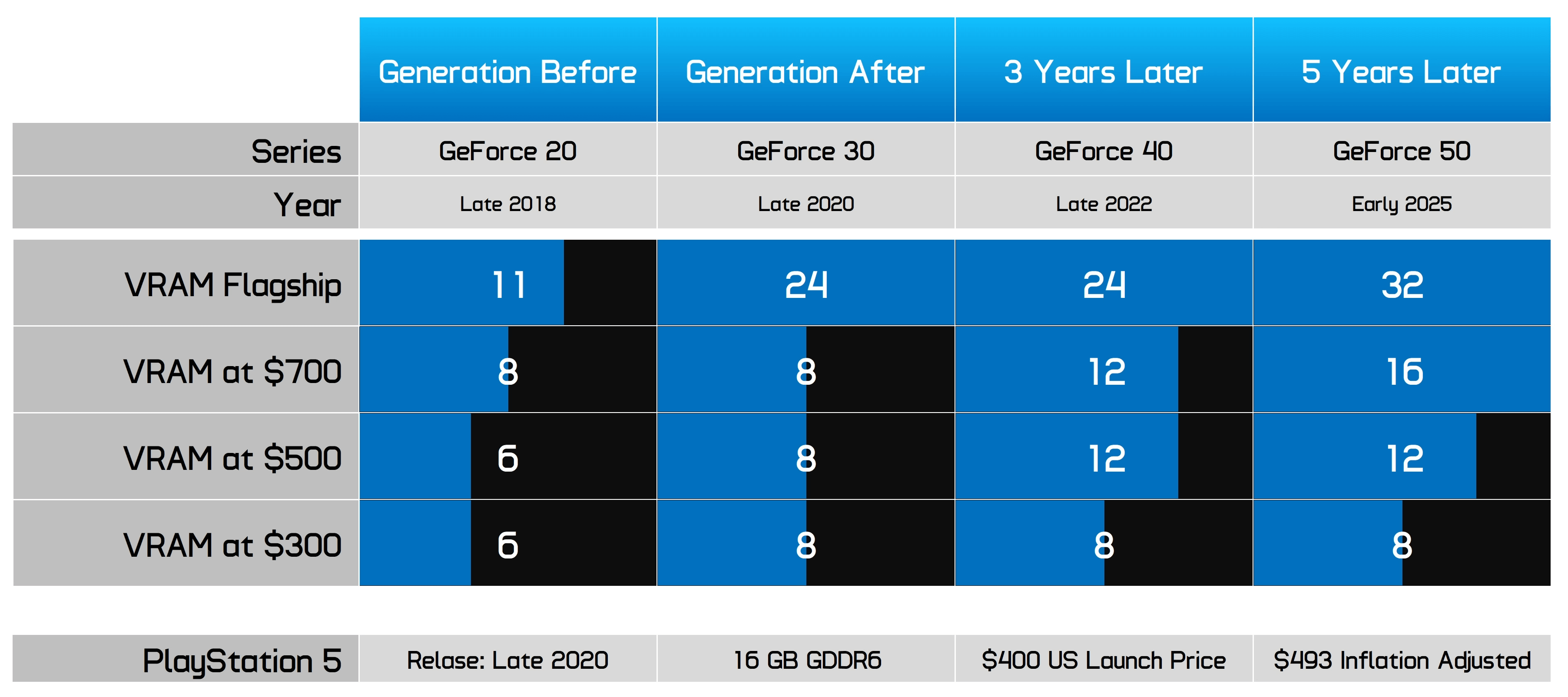

We'll also examine VRAM stagnation and show just how badly Nvidia has failed PC gamers compared to console gamers over this console generation.

The RTX 5060 is not only more like a "5050" based on core configuration and memory bandwidth, but it also offers far less VRAM than a modern mainstream graphics card should, considering the history of PC GPUs versus console hardware – and we're going to look at 20 years of data to illustrate just that.

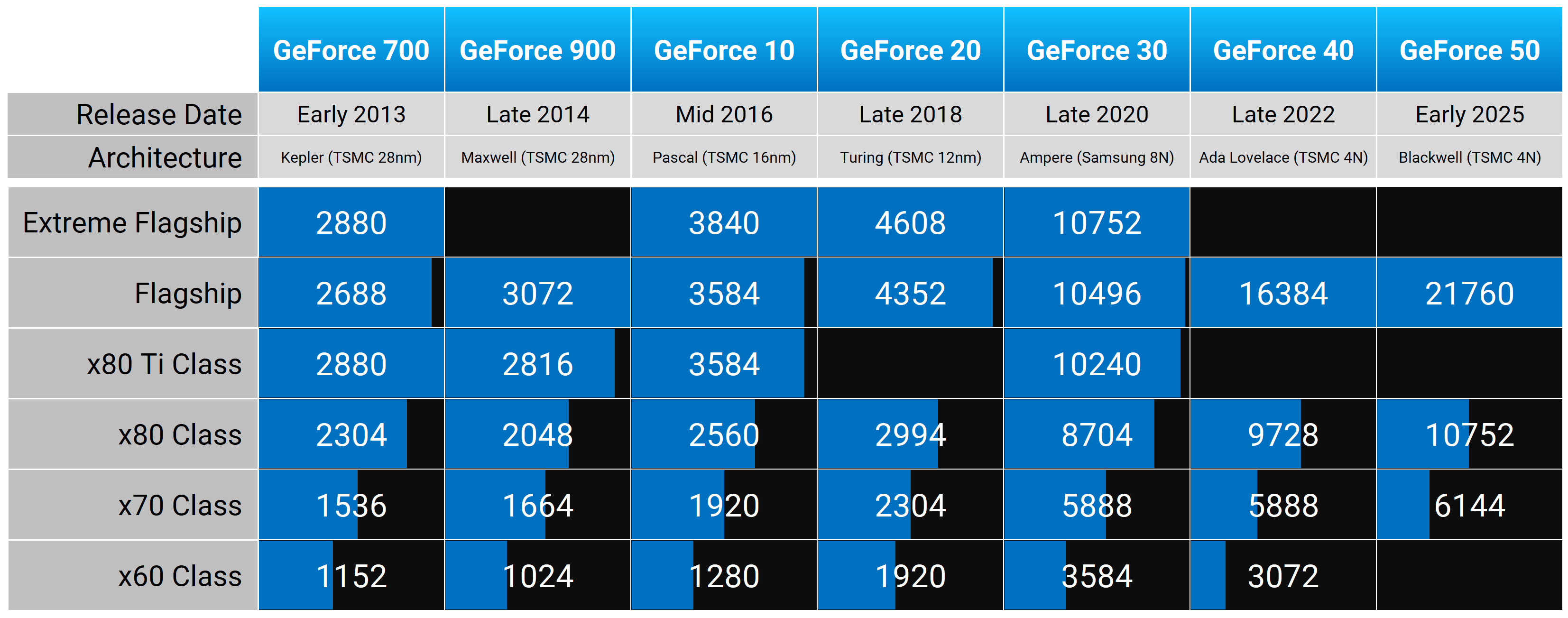

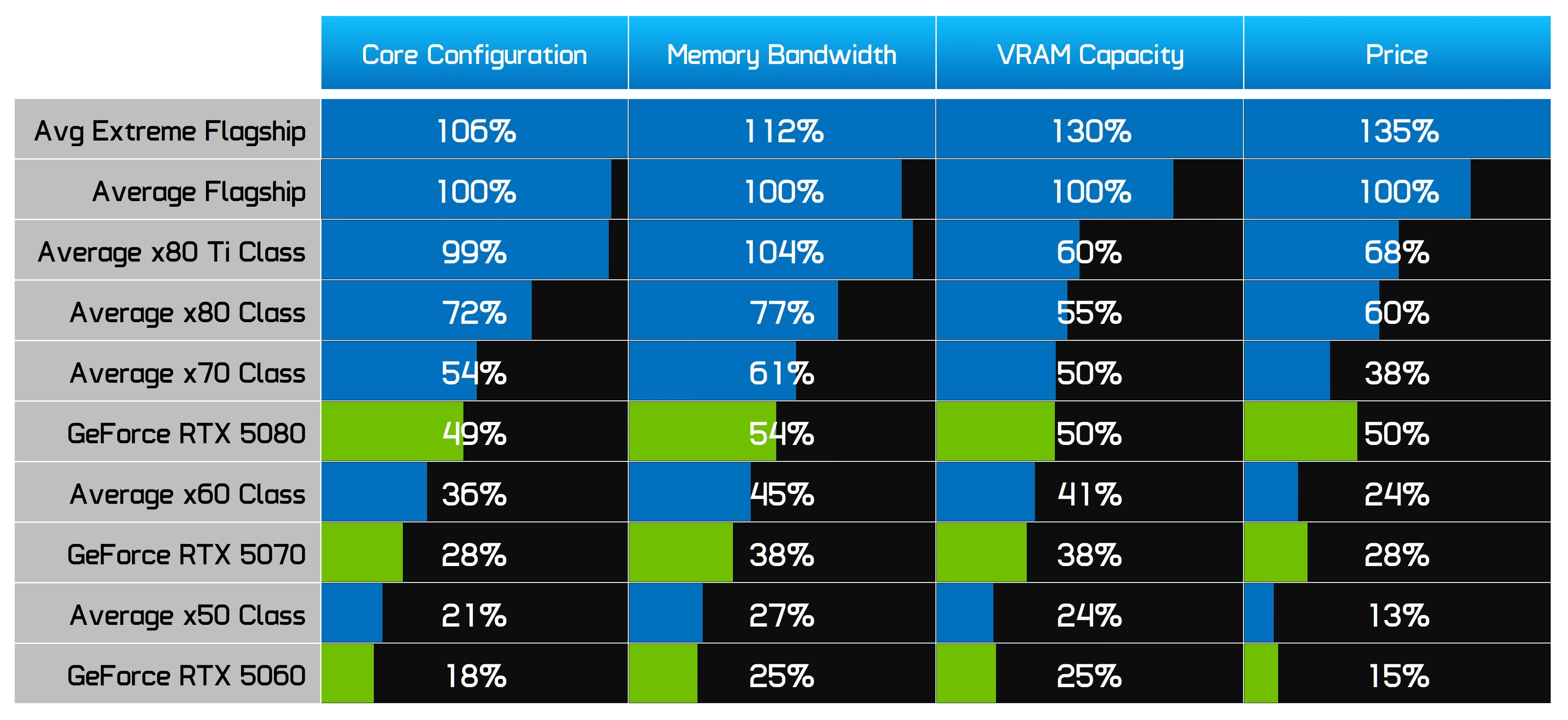

Nvidia Core Configurations

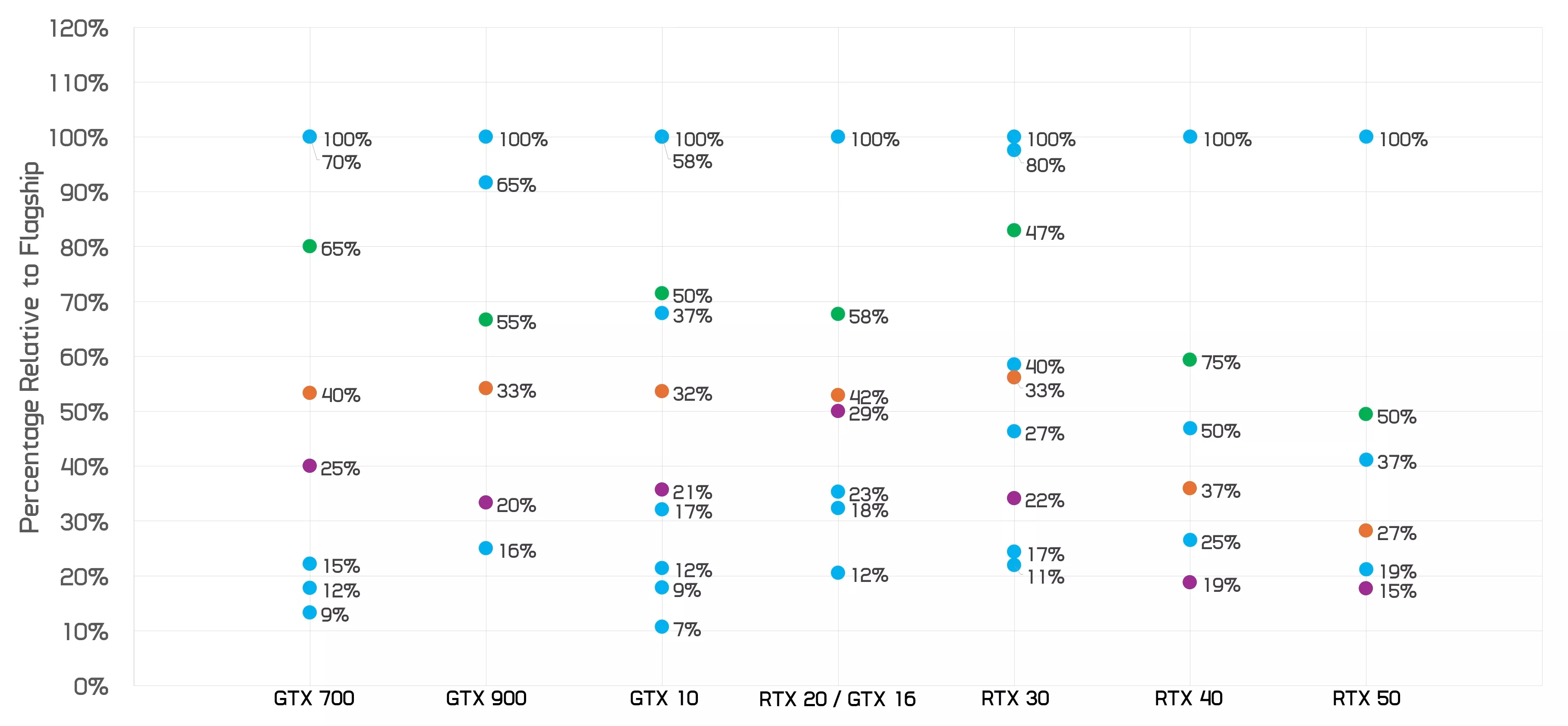

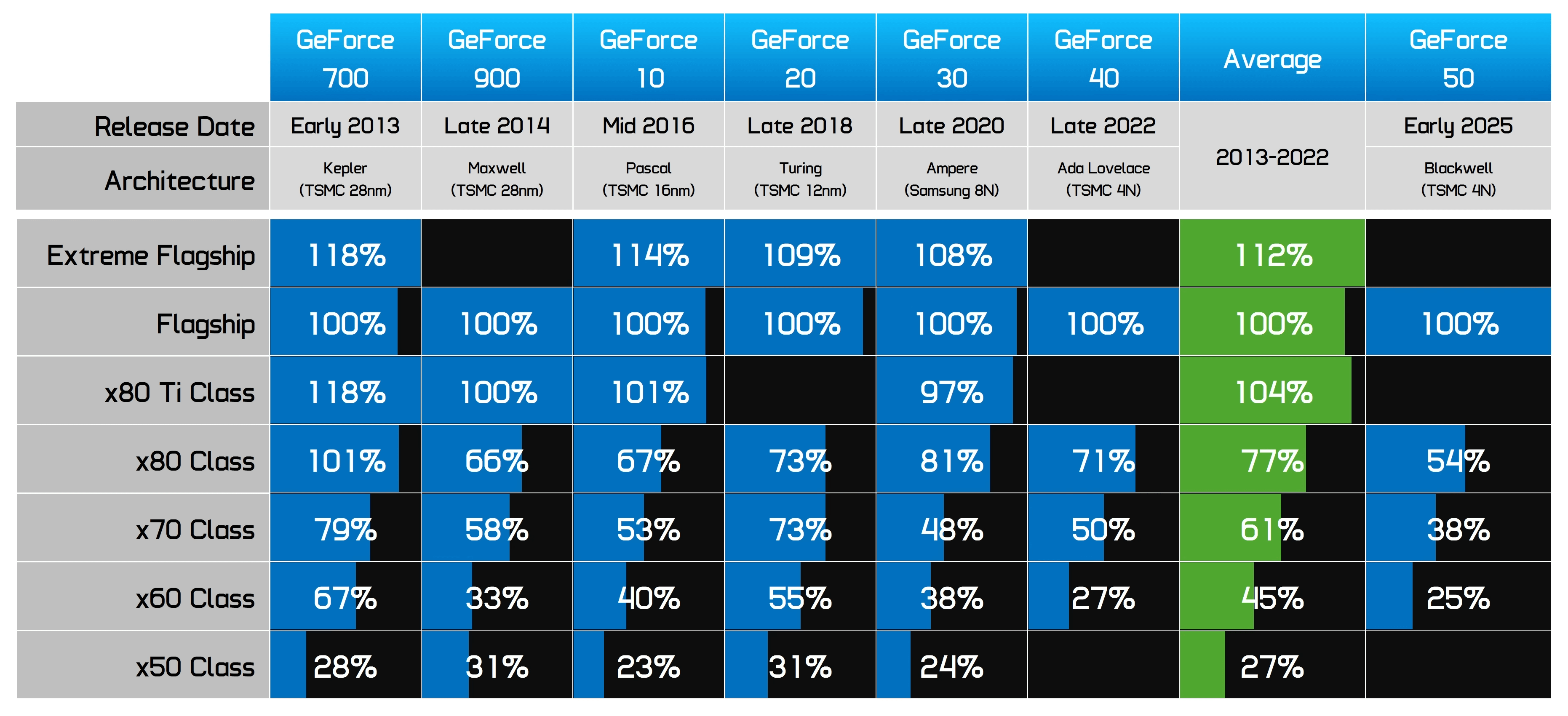

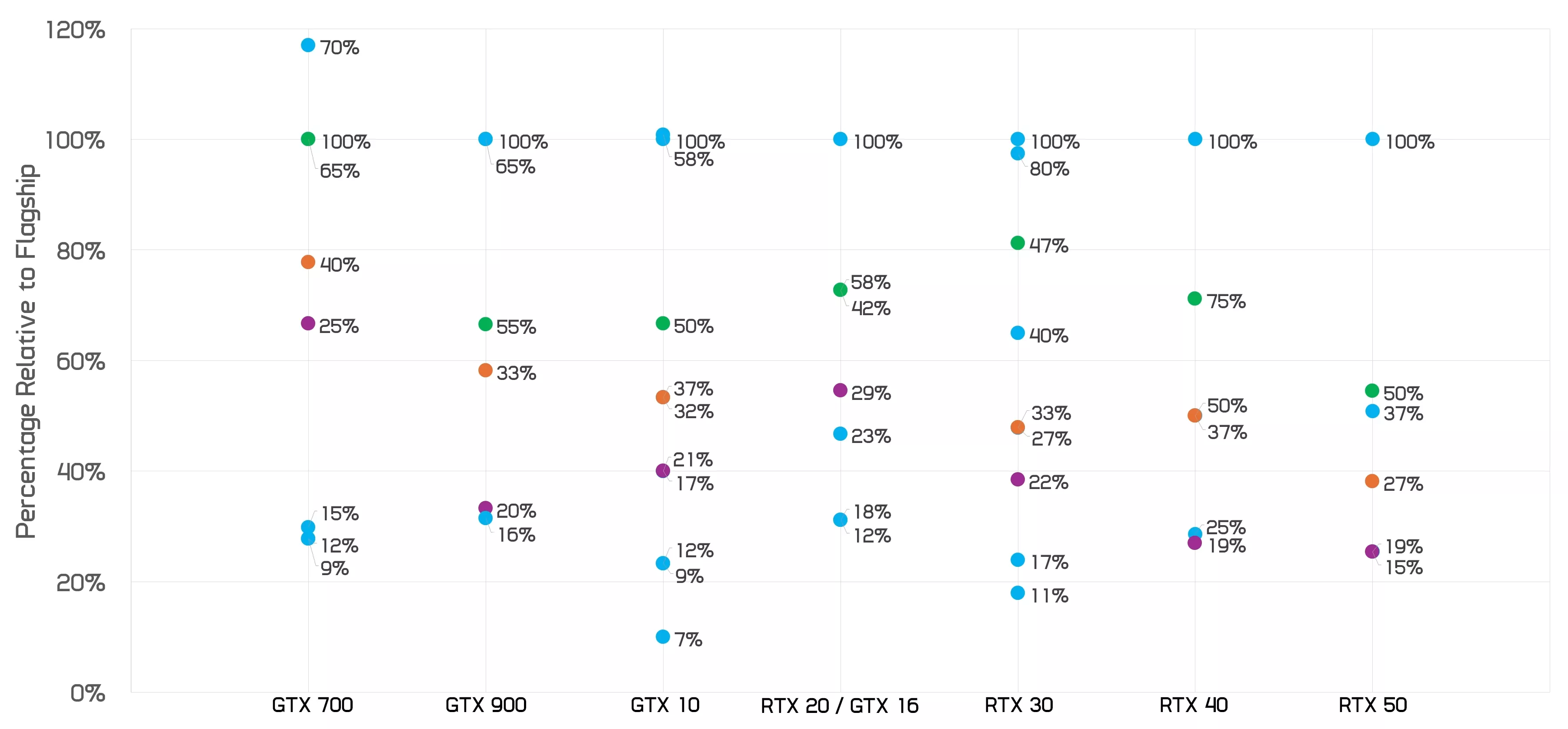

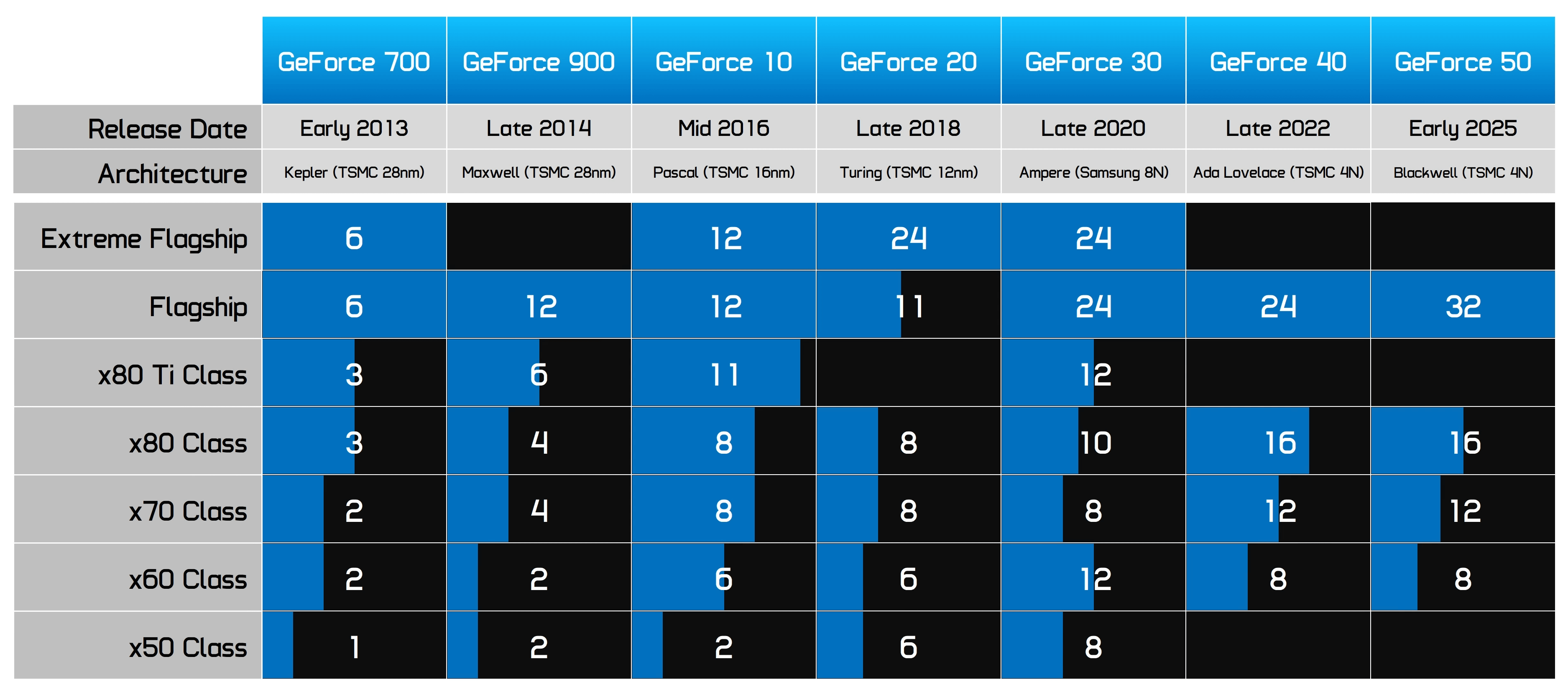

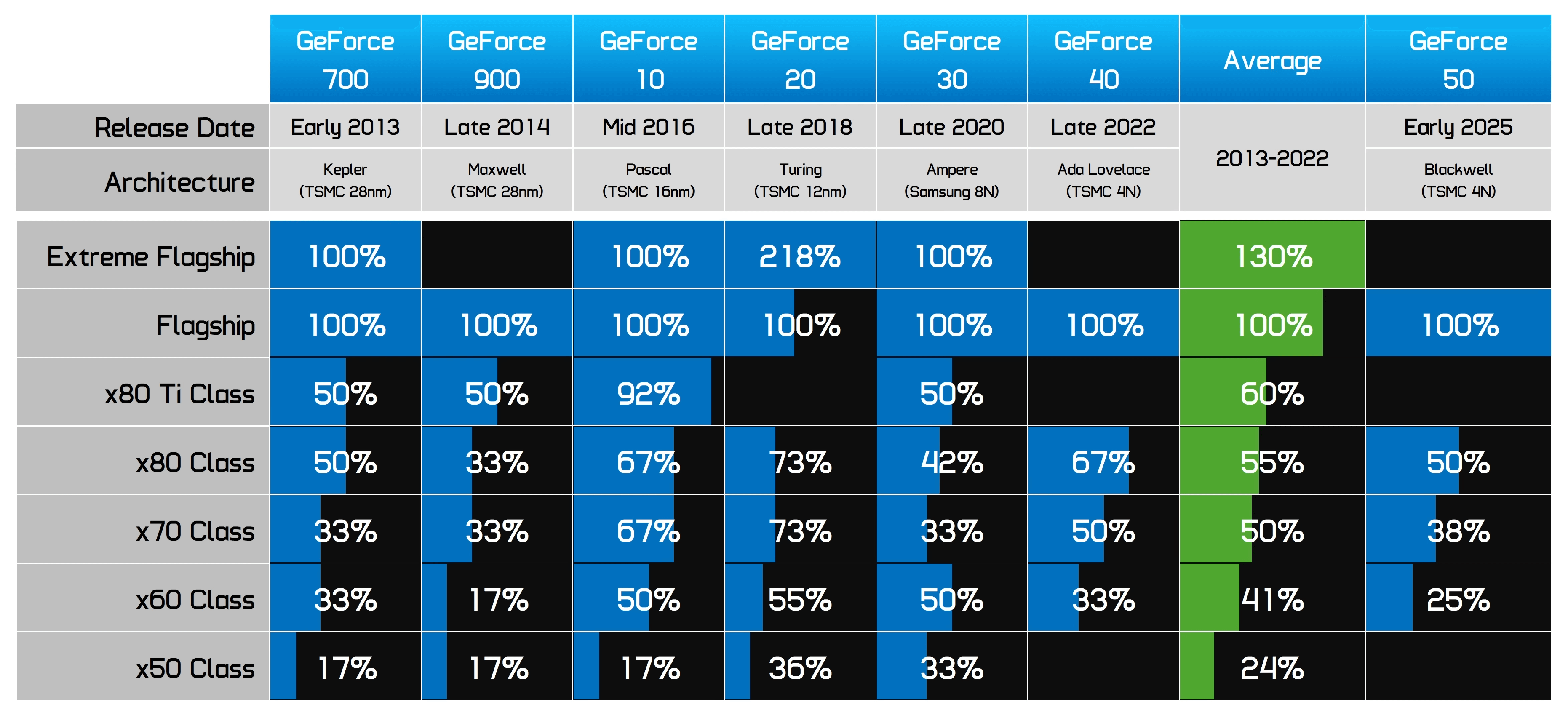

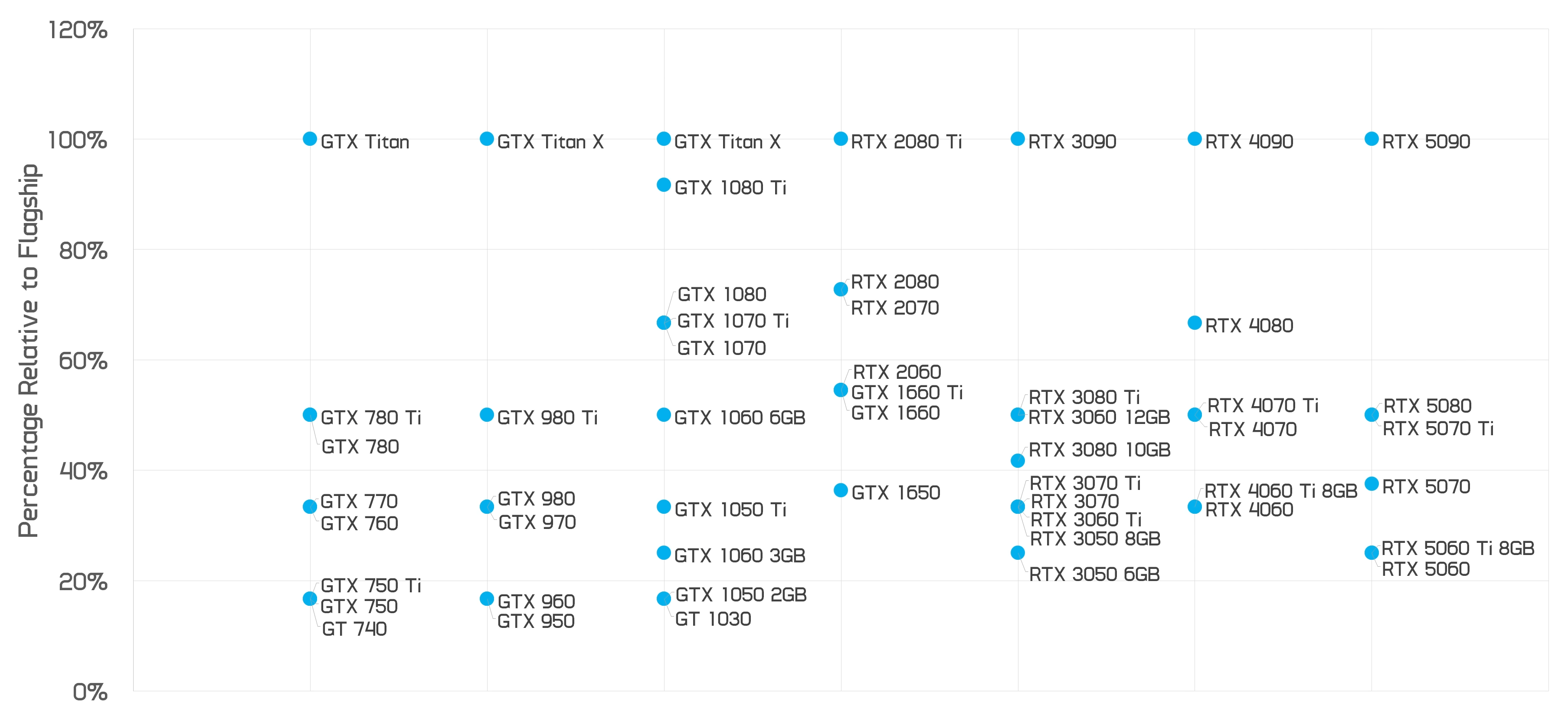

Last time we examined Nvidia GPU hardware configurations, we used data going back to 2013's GeForce 700 series to show how each product class compared to the flagship of that era. We then used that data to create a "typical" GPU generation – an average of what we observed over the last six generations.

This showed us how much each product class is typically scaled down compared to the flagship model across a decade of GeForce GPU history. And when you slot in the RTX 50 series and compare it to a typical generation, it doesn't hold up well.

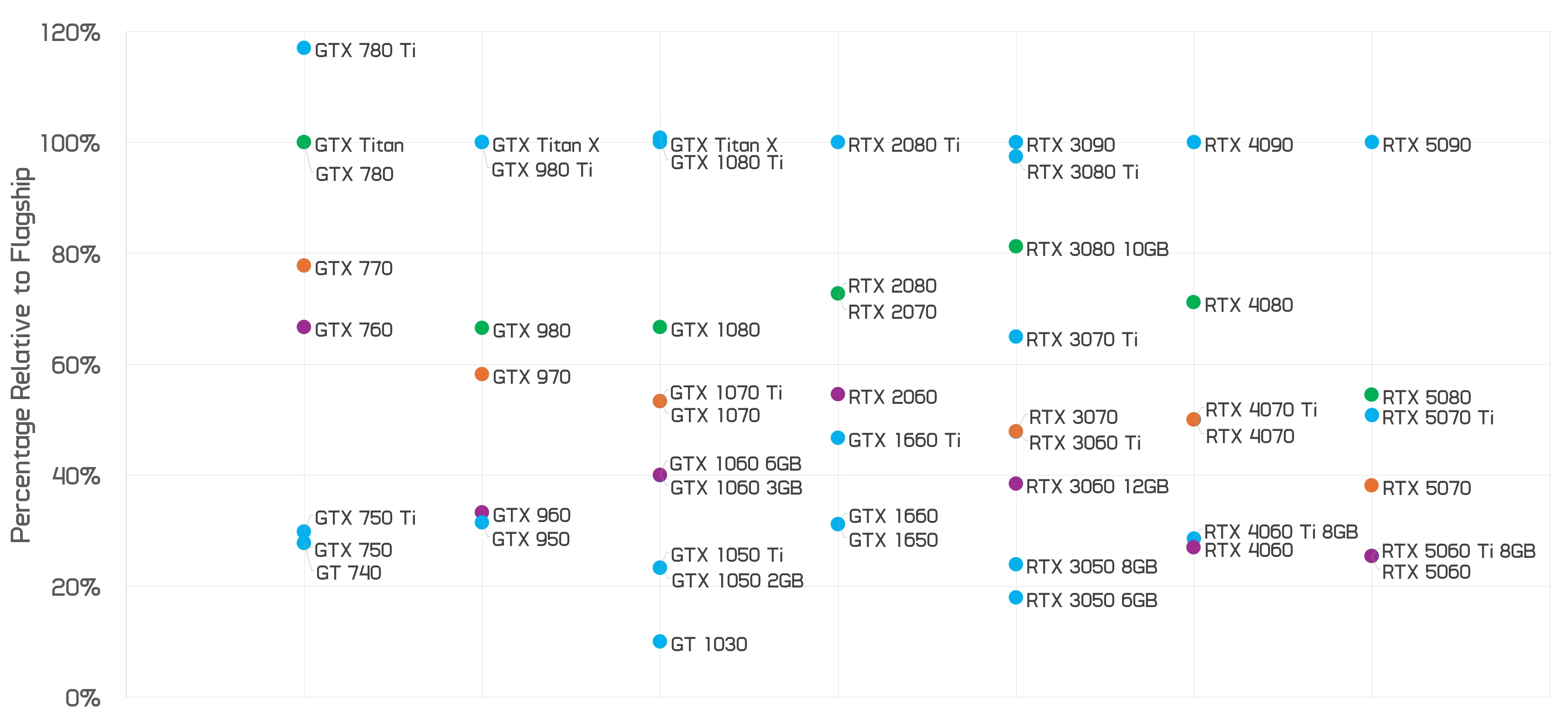

Nvidia GPUs: Shader (CUDA) Core Count

Above is the core configuration that Nvidia has offered over the years: with the 50 series, the RTX 5090 offers 21,760 CUDA cores, which is reduced in successive steps to 10,752 cores for the RTX 5080, 6,144 cores for the RTX 5070, and, as we now know, 3,840 cores for the RTX 5060.

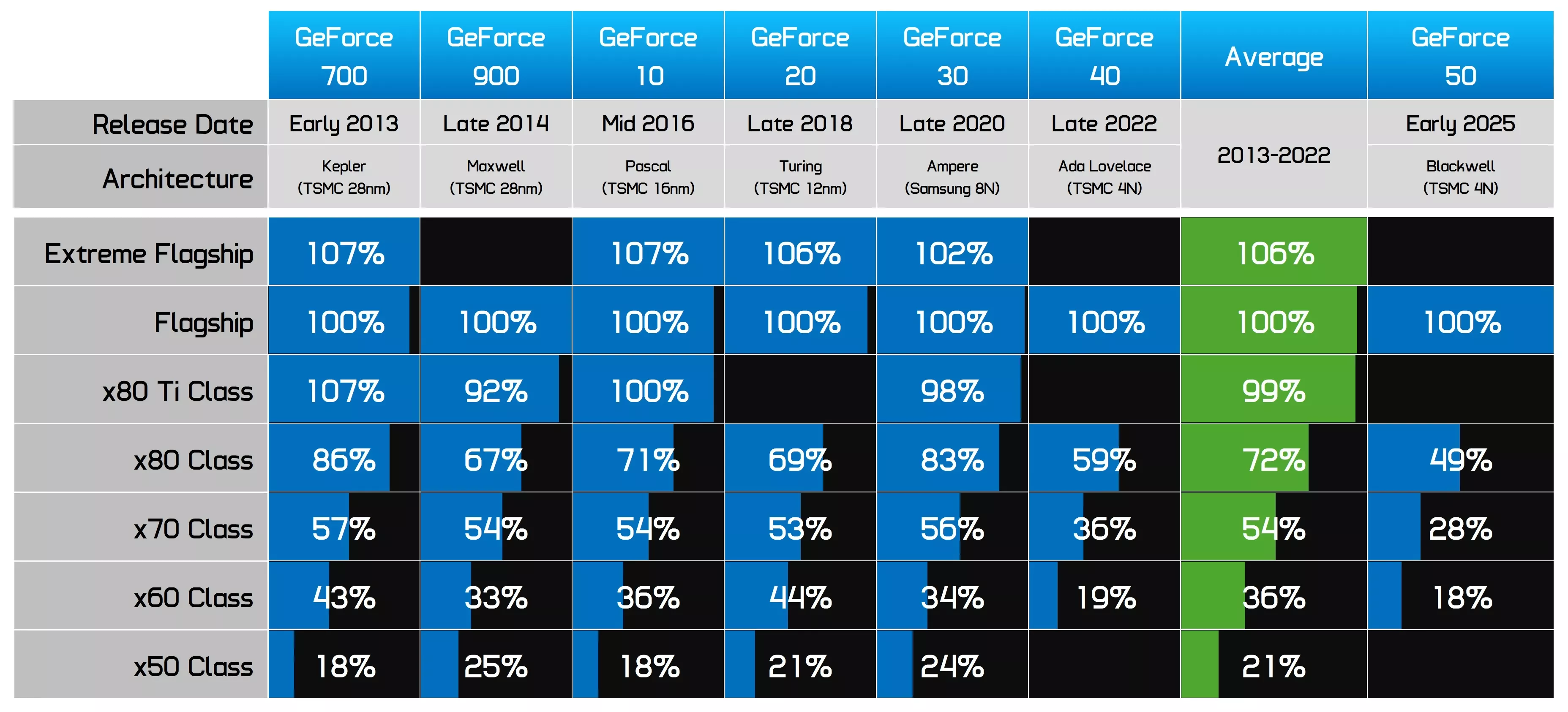

Shader (CUDA) Core Count in %

As a percentage relative to Nvidia's flagship, the RTX 5060 delivers just 18% of the core count – well below the average for 60-class GPUs. Historically, this class delivers 36% of the flagship's cores on average, but both the 4060 and 5060 fall far short of that mark.
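The relative figures are simple ratios against the flagship's core count. Here is a minimal sketch using the RTX 50 series CUDA core counts quoted above and the 36% historical 60-class average. It is an illustration only, not the tooling behind our charts; the dictionary and function names are hypothetical.

```python
# Relative shader (CUDA) core count versus the flagship, using the
# RTX 50 series figures quoted in this article.
CORE_COUNTS = {
    "RTX 5090": 21_760,  # flagship
    "RTX 5080": 10_752,
    "RTX 5070": 6_144,
    "RTX 5060": 3_840,
}

# Average share of the flagship's cores historically given to 60-class GPUs.
HISTORICAL_60_CLASS_AVG = 0.36


def relative_share(counts: dict[str, int], flagship: str) -> dict[str, float]:
    """Return each model's core count as a fraction of the flagship's."""
    base = counts[flagship]
    return {model: cores / base for model, cores in counts.items()}


if __name__ == "__main__":
    shares = relative_share(CORE_COUNTS, "RTX 5090")
    for model, share in shares.items():
        print(f"{model}: {share:.0%}")
    # Shortfall of the RTX 5060 versus the historical 60-class average.
    gap = HISTORICAL_60_CLASS_AVG - shares["RTX 5060"]
    print(f"RTX 5060 is {gap * 100:.0f} percentage points below the 60-class average")
```

Run as-is, it prints 49% for the RTX 5080, 28% for the RTX 5070 and 18% for the RTX 5060, matching the figures in the text.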

Scaling the GPU size down to just 18% aligns much more closely with what Nvidia has provided in the 50-class segment in the past. In fact, it's a slightly worse-than-average 50-class configuration, comparable to the GTX 750 and GTX 1050. It's actually worse than the RTX 3050 8GB from a few generations ago.

When we plot the data like this, we get a clearer picture of how this generation compares. You can clearly see that the RTX 5060 is hovering around the level of previous 50-class products and fails to reach the heights of the 50 Ti models we used to see many generations ago.

Nvidia CUDA Relative Core Count: 2013 to 2025

It's also interesting to track the purple dots over time, which represent the 60-class GPUs. For about five generations, the 60-class product was quite consistent. The RTX 2060 was actually somewhat over-specced, more like a 60 Ti-type GPU, probably reflecting its higher-than-usual price.

But in the last two generations, there's been a steep decline in the relative size of the 60-class models. What used to be a 60-class GPU (GTX 1060 6GB and RTX 3060 12GB) is now more akin to an RTX 5070. In fact, the 5070 is configured below those older 60-class models and is realistically positioned somewhere between a 60-class and 50-class GPU.

This type of chart also allows us to better analyze the Ti models, which haven't always been a feature of Nvidia's lineup. Based on core count, the RTX 5070 Ti is actually more like an RTX 5060 Ti: its configuration falls between historical 70-class and 60-class configurations and is weaker than the 3060 Ti was relative to the 3090.

The RTX 5060 Ti is more comparable to an RTX 5050 Ti or even just an RTX 5050, depending on which generation you compare it to.

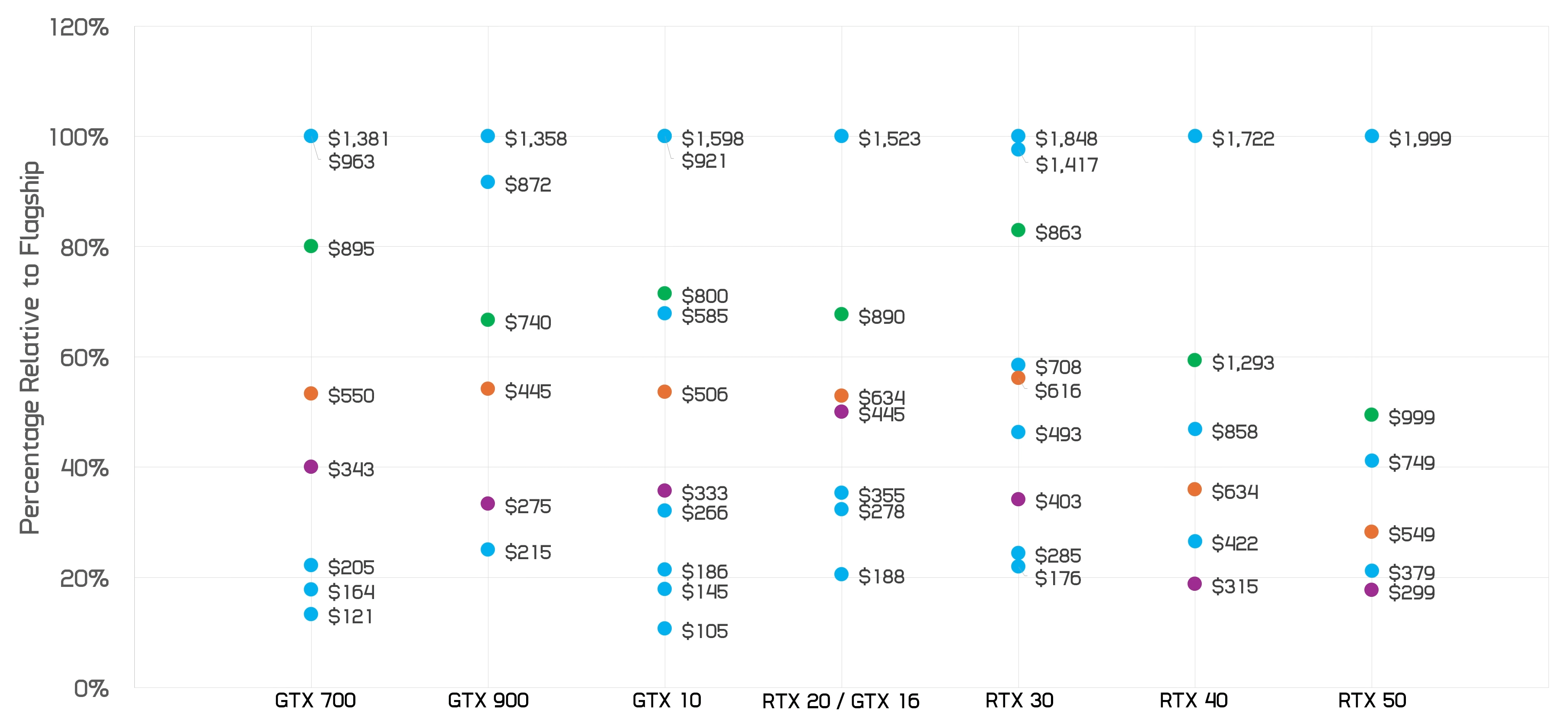

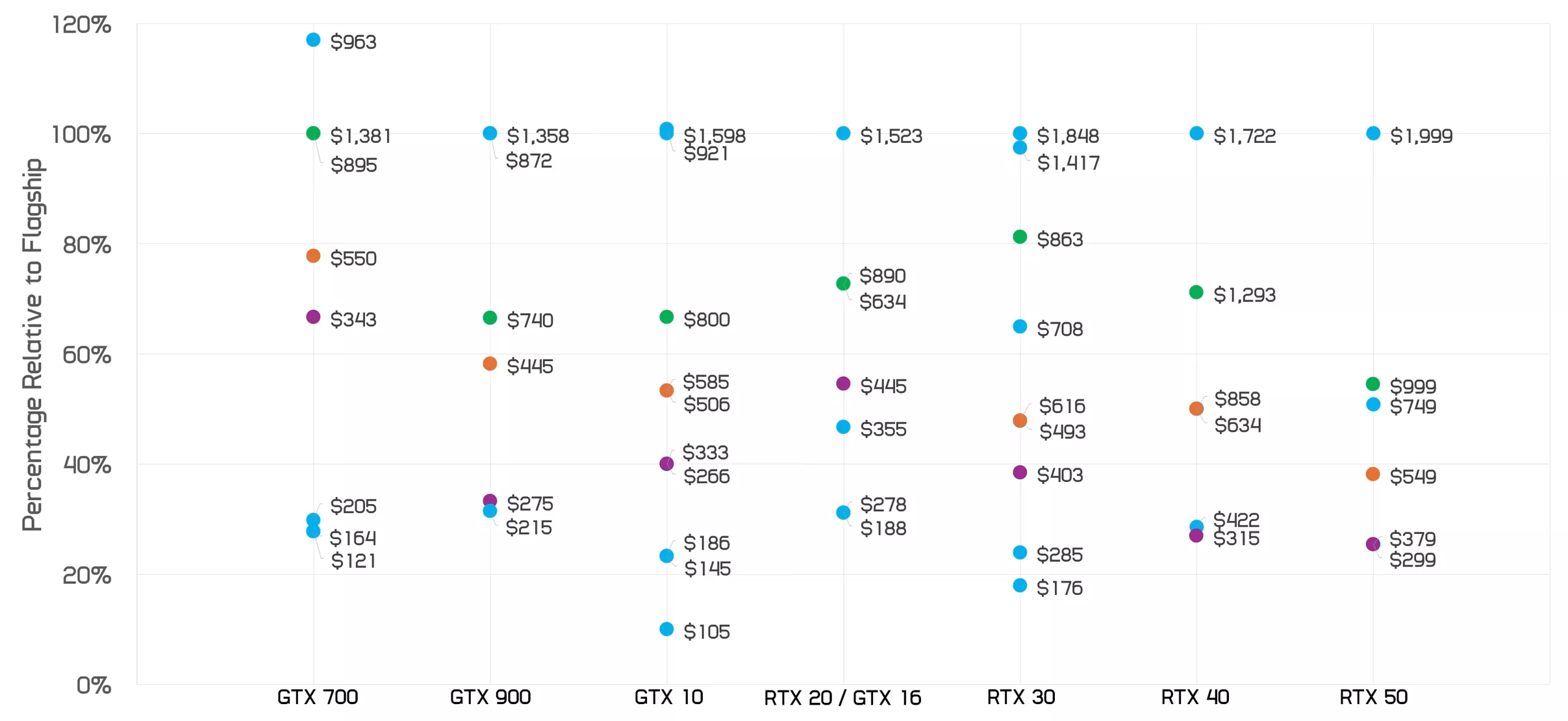

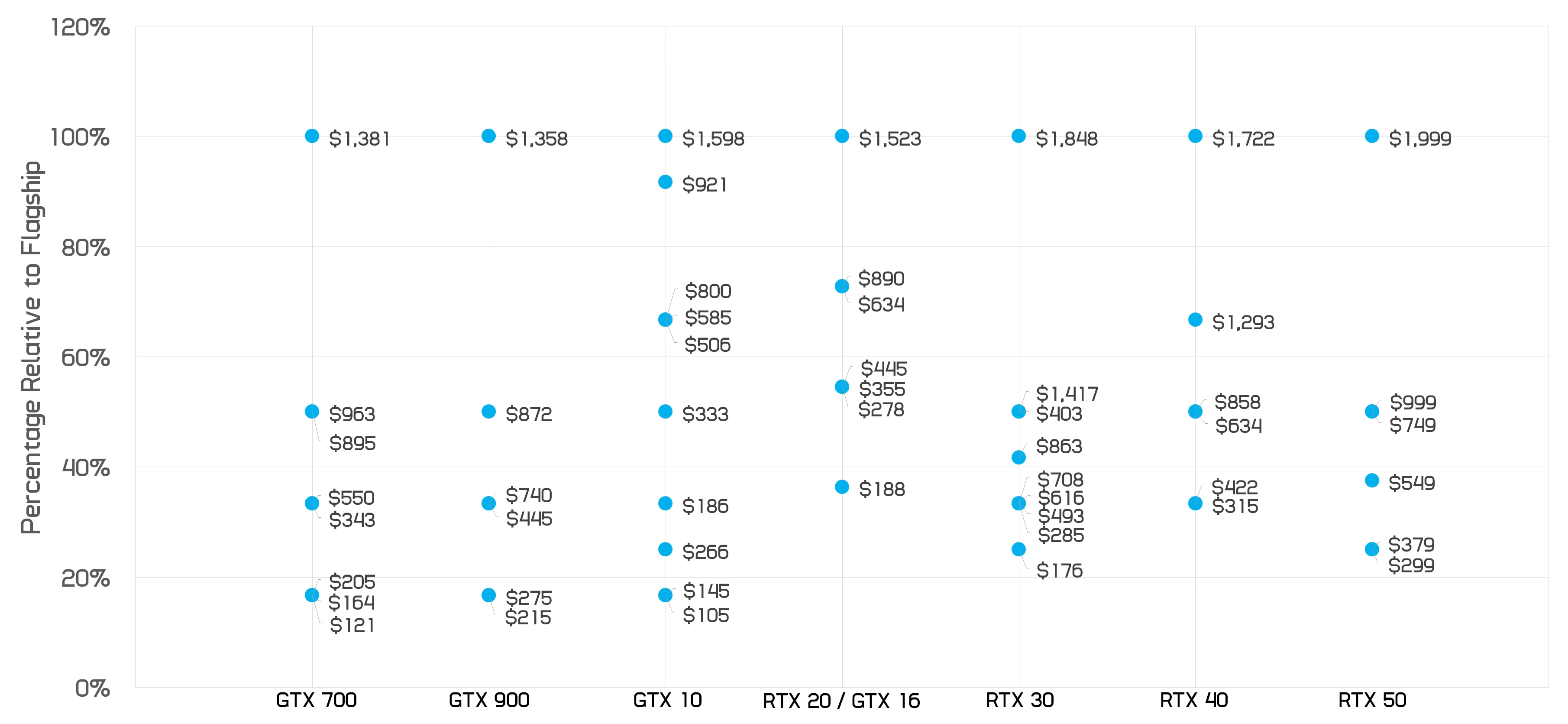

Next, we've replaced each GPU's label on the chart with its relative price.

The percentage listed next to each marker now represents relative price, not relative core count. For example, the RTX 4080 cost $1,200 relative to the RTX 4090 at $1,600; it's listed as 75% relative price on the chart.

This view allows us to see the extent of shrinkflation across these models. Historically, a GPU configured to about 20% the size of the flagship GPU was available for roughly 10% to 15% of the flagship's price. The last two generations have disrupted that relationship: the RTX 4060 was 19% of the price, and the RTX 5060 is 15% of the price, an improvement over last gen, but still on the high side.
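The relative-price labels are the same kind of ratio, applied to launch prices. A minimal sketch using the rounded prices quoted in this section; the RTX 4060's $300 is its $299 launch price rounded, a figure the article doesn't state directly. The function and list names are illustrative.

```python
import math

# Relative price versus the flagship, using rounded launch prices:
# RTX 4090 $1,600, RTX 4080 $1,200, RTX 4060 $300 (rounded from $299),
# RTX 5090 $2,000, RTX 5060 $300.


def relative_price(price: float, flagship_price: float) -> float:
    """Fraction of the flagship's price that a given model costs."""
    return price / flagship_price


# (model, model price, flagship price of the same generation)
EXAMPLES = [
    ("RTX 4080", 1_200, 1_600),
    ("RTX 4060", 300, 1_600),
    ("RTX 5060", 300, 2_000),
]

if __name__ == "__main__":
    for model, price, flagship in EXAMPLES:
        share = relative_price(price, flagship)
        print(f"{model}: {share:.0%} of the flagship's price")
```

This yields 75%, 19% and 15% respectively, the values labeled on the chart.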

But this issue goes deeper, because the flagship GPU has become much more expensive over time. In the GeForce 700 series, you could get a GTX Titan for $1,000. Today, the RTX 5090 is $2,000. So perhaps a more appropriate way to look at this is by adjusting GPU prices for inflation. Everything in this chart is shown in 2025 dollars.

This is a particularly damning chart for Nvidia. Between 2013 and 2020, a GPU 20% the size of the flagship cost about $180 to $200. Today, that same configuration is 50% more expensive, at $300. In prior years (adjusted for inflation) $300 would have bought you a configuration with around 30% to 35% of the flagship's cores.
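The size of that jump is a plain percentage increase on inflation-adjusted prices. A quick sketch using the 2025-dollar figures from the paragraph above; the constant and function names are hypothetical.

```python
# Price increase for a GPU with ~20% of the flagship's cores, using the
# inflation-adjusted (2025 dollar) figures quoted in the article.
HISTORICAL_RANGE_USD = (180, 200)  # 2013 to 2020, inflation-adjusted
TODAY_USD = 300                     # RTX 5060 price point


def percent_increase(old: float, new: float) -> float:
    """Increase from old to new, as a fraction of old."""
    return (new - old) / old


if __name__ == "__main__":
    for old in HISTORICAL_RANGE_USD:
        print(f"${old} -> ${TODAY_USD}: +{percent_increase(old, TODAY_USD):.0%}")
```

Measured against the $200 end of the range, the increase is the 50% quoted above; against $180 it is closer to two thirds.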

What Nvidia has done here – and previously with the RTX 4060 – is offer the same name and price point as a typical 60-class GPU. In fact, the RTX 5060 is the second-cheapest 60-class model out of the seven generations shown, so that part isn't too bad. But they're simply giving you less. It's a 50-class configuration with a 60-class name and price – clear evidence of shrinkflation.

This affects other models as well. What used to cost $350 to $400 (inflation-adjusted) now costs $550, and you're not even getting as much as you used to. GPUs that once sold for $500 to $600 prior to 2020 are now $1,000. Everything has gone up by 40% to 50% on top of overall inflation.

Memory Bandwidth Comparison

Another important component of GPU performance is memory bandwidth, determined by the memory bus width and the speed of the memory modules. This is also heavily reduced in lower-tier products, as seen with the RTX 5060, which has 448 GB/s of bandwidth compared to 1,792 GB/s on the RTX 5090.
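Peak bandwidth follows directly from those two numbers: bus width (in bits) times the per-pin data rate (in Gbps) gives total gigabits per second, and dividing by eight converts that to GB/s. The sketch assumes the published configurations of a 128-bit bus for the RTX 5060 and a 512-bit bus for the RTX 5090, both with 28 Gbps GDDR7; those bus widths and speeds are not stated in the article.

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Theoretical peak memory bandwidth in GB/s.

    bus_width_bits * data_rate_gbps is the total gigabits per second
    across all data pins; dividing by 8 converts bits to bytes.
    """
    return bus_width_bits * data_rate_gbps / 8


if __name__ == "__main__":
    # Assumed configurations: 128-bit and 512-bit buses at 28 Gbps GDDR7.
    rtx_5060 = peak_bandwidth_gbs(128, 28)  # 448.0
    rtx_5090 = peak_bandwidth_gbs(512, 28)  # 1792.0
    print(f"RTX 5060: {rtx_5060:.0f} GB/s")
    print(f"RTX 5090: {rtx_5090:.0f} GB/s")
    print(f"Ratio: {rtx_5060 / rtx_5090:.0%}")  # 25%
```

Because both cards use the same memory speed in this assumption, the bandwidth ratio is simply the bus-width ratio, 128/512.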

Nvidia GPUs: Memory Bandwidth (GB/s)

As a percentage, we are getting 25% of the bandwidth on the 60-class product, which historically aligns more with a 50-class GPU – on average, 50-class models have delivered 27% of the flagship's bandwidth.

Memory Bandwidth in %

It is no surprise to see memory bandwidth follow the same downward trend as core count, and the latest 60-class products are no exception.

When we plot the overall lineups, it becomes clear that both the RTX 5060 Ti and RTX 5060 sit within the typical range of historic 50-class GPUs, such as the RTX 3050 and GTX 1050. In other words, the relative bandwidth now offered in the 60 class was previously typical of the 50 class, representing a degradation in product quality relative to the flagship, a trend we have been highlighting for some time.

Nvidia GPU Memory Bandwidth (GB/s): 2013 to 2025

This leads to similar conclusions when we examine pricing, both relative to the flagship and as an inflation-adjusted dollar amount.

The RTX 5060 Ti, for example, offers 25% of the 5090's bandwidth at a starting price of $379. That is significantly more expensive than most previous 25% configurations, with the exception of the RTX 4060 Ti.

VRAM Configuration

Along with memory bandwidth, we also need to examine VRAM capacity, which continues to be a major issue in 2025. Typically, 60-class GPUs have come with 41% of the VRAM of the flagship model, although there is much more variance here than with other hardware specs.

Nvidia GPUs: VRAM Capacity (GB)

For example, the GTX 960, with just 2 GB of VRAM compared to 12 GB on the flagship, was below average in this class. However, five of the six prior generations provided at least 30% of the relative VRAM capacity. The RTX 5060 sits at just 25%, offering 8 GB compared to 32 GB on the flagship RTX 5090.

In terms of capacity, this positions the 5060 somewhere between the 50 and 60 classes when viewed in the context of historical configurations. It is quite common to see a significant reduction in VRAM from the flagship to the 80-class models, but previous generations balanced VRAM capacity across the rest of the lineup more effectively.

Nvidia VRAM Capacity: 2013 to 2025 - Base Config Listed

When we look at pricing, the RTX 5060 and 5060 Ti stand out as very expensive graphics cards relative to their VRAM size. The 5060 Ti 8 GB, in particular, is disappointing.

At that price, it should be offering 30% to 40% of the VRAM capacity – which in this case would mean 12 GB. In some of the better generations, such as the 10 series, that would have translated to a 16 GB graphics card.
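Turning a relative-capacity target into an actual card takes two steps: apply the 30% to 40% range to the flagship's 32 GB, then keep only capacities a card in this class could plausibly ship with. The candidate list of 8, 12 and 16 GB is an illustrative assumption for this sketch, not a statement about which configurations Nvidia could build.

```python
# Which practical VRAM capacities fall inside a target share of the
# flagship's memory? The candidate capacities are an assumption.
FLAGSHIP_VRAM_GB = 32                  # RTX 5090
CANDIDATE_CAPACITIES_GB = [8, 12, 16]  # assumed options for this class


def capacities_in_range(flagship_gb: float, low: float, high: float,
                        candidates: list[int]) -> list[int]:
    """Return candidates between `low` and `high` share of flagship_gb."""
    lo_gb, hi_gb = flagship_gb * low, flagship_gb * high
    return [c for c in candidates if lo_gb <= c <= hi_gb]


if __name__ == "__main__":
    # 30% to 40% of 32 GB spans 9.6 GB to 12.8 GB.
    print(capacities_in_range(FLAGSHIP_VRAM_GB, 0.30, 0.40,
                              CANDIDATE_CAPACITIES_GB))  # [12]
```

Only 12 GB lands in the window: 8 GB falls below 30%, and 16 GB would be 50% of the flagship.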

Nvidia GPU VRAM vs Gaming Consoles

However, VRAM analysis goes beyond internal product line relationships because one of the most critical factors in whether a PC gaming GPU has enough VRAM is how it compares to gaming consoles of the era and their memory capacity.

Game developers build their titles around console specs and optimize for the memory available on those systems. Unlike shader performance, which can be scaled with resolution and effects, memory usage is harder to scale when developers are targeting a certain level of detail and features. Having PC GPUs with at least as much VRAM as consoles has historically been crucial to ensuring the PC experience isn't compromised.

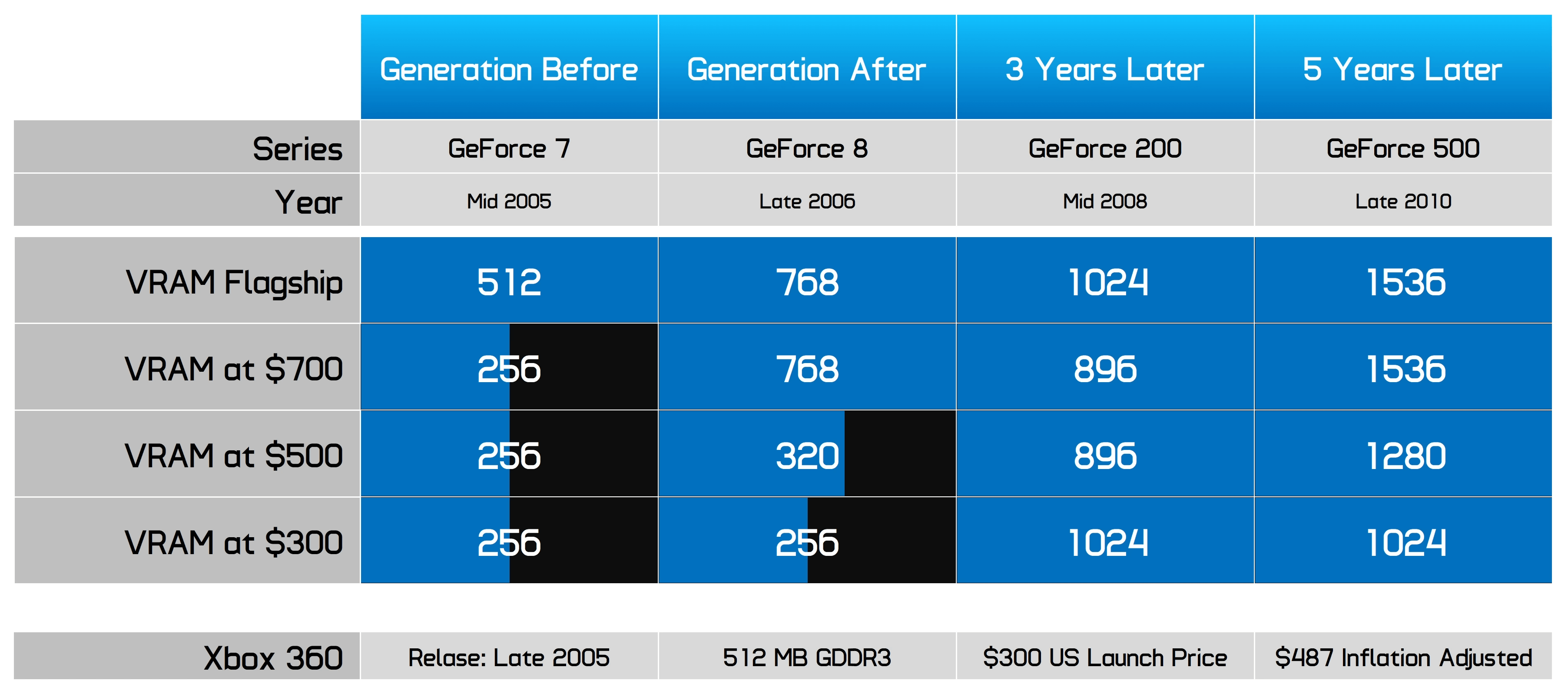

One of the most telling trends over time is how quickly PC GPUs have caught up to and exceeded console memory capacity. Let's look back at the Xbox 360 era. The Xbox 360 had 512 MB of GDDR3 memory, launching in 2005 for $300 – roughly $500 today.

Xbox 360 Era Memory Configurations

At that time, Nvidia's GeForce 7 series was available. To match the Xbox 360's VRAM, you had to purchase the top-tier GeForce 7800 GTX with 512 MB (also available in a 256 MB version). In the rest of the lineup, GPUs at the Xbox 360's price point typically had just 256 MB of VRAM – half the console's capacity.

In the generation following the Xbox 360 launch, the GeForce 8 series (late 2006) brought a slight increase. The high-end 8800 GTX offered 768 MB, while lower-priced models provided either 320 MB or 256 MB. But three years after the Xbox 360 launched, Nvidia had dramatically increased VRAM. The GTX 260 and above provided at least 896 MB, with higher-tier models starting at 1,024 MB. Even budget models like the GT 220 offered 512 MB, matching the Xbox 360.

Five years later, with the GeForce 500 series, the gap had widened. The minimum was 1,024 MB of memory, and at price points comparable to the Xbox 360, you were getting more than double the console's VRAM – making these GPUs well-suited for both current and future games.

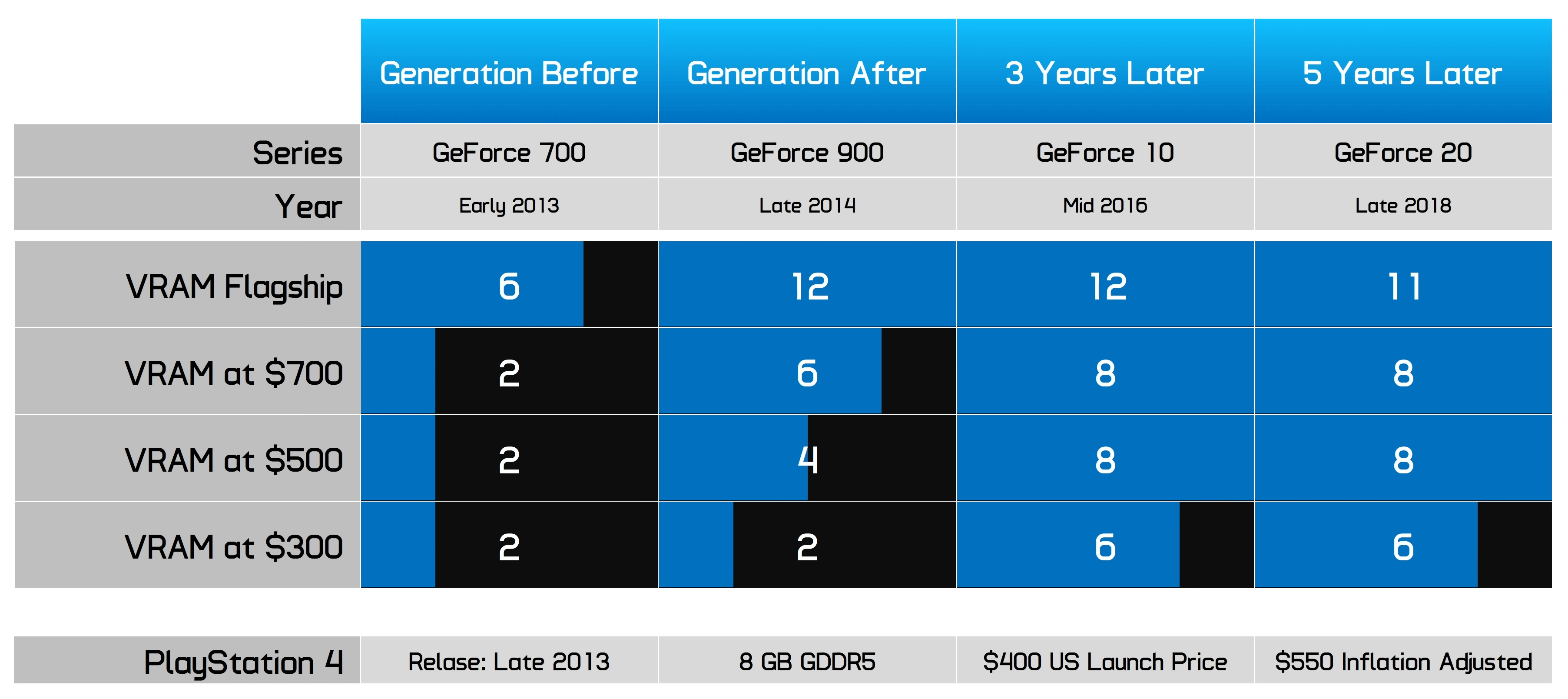

Fast forward to the PlayStation 4 era: this console launched in 2013 with 8 GB of GDDR5 memory at a price equivalent to about $550 today. Before its release, Nvidia's GPUs typically had just 2 GB of VRAM – outside the top-end models. However, over the next two generations, VRAM capacities rapidly improved to meet next-gen gaming demands. One year after the PS4 launch, Nvidia's GeForce 900 series was offering 4 GB at a similar $550 price point, 6 GB or more on higher-end cards, and just 2 GB on the entry-level GTX 960.

PlayStation 4 Era Memory Configurations

Three years later, with more "next-gen" games arriving, Nvidia responded. At similar price points, you were now getting 8 GB – matching consoles. Mainstream cards like the GTX 1060 offered 6 GB, which was slightly below the PS4's capacity but sufficient for gaming during that era.

We're now in the PlayStation 5 era. The PS5 launched in 2020 with 16 GB of GDDR6 memory for $400 – roughly $500 today. Again, before its release, similar-priced PC GPUs offered only 6 GB of VRAM – falling short of next-gen requirements.

PlayStation 5 Era Memory Configurations

Around the PS5 launch, Nvidia released the GeForce 30 series. Most cards at typical price points offered 8 GB, with 10 GB or more only available if you spent the equivalent of $860 on an RTX 3080. The RTX 3060 12 GB was an exception, but at the $300 level, the RTX 3050 still only offered 8 GB – consistent with earlier console generations where some PC GPUs had less VRAM than the latest consoles.

Where the trend starts to deviate is in the crucial post-launch years, when newer games demand more VRAM. In the 2022-2023 timeframe, a GPU priced similarly to the consoles offered only 12 GB (RTX 4070). While a 16 GB RTX 4060 Ti variant was available, most mid-range models, including the 4070, 4070 Ti, and 4070 Super, came with just 12 GB. Mainstream RTX 4060 models shipped with only 8 GB.

Compared with the PS4 and Xbox 360 eras, this is the point at which most PC gaming GPUs historically matched or exceeded console VRAM, within a few years of the console's launch. Nvidia, however, fell short this time, with only 12 GB in mid-range models. Entry-level cards in the RTX 40 series fell even further behind: 8 GB versus 16 GB in the PS5, a 50% reduction in VRAM!

This trend continues with the RTX 50 series. Once again, GPUs around the price of a console should offer at least the same VRAM capacity, but Nvidia is still only providing 12 GB in that class and reserving 16 GB for higher-tier models, such as the RTX 5060 Ti 16 GB. Entry-level cards priced at $300 are still stuck at 8 GB.

This is very obvious stagnation and a failure of modern GeForce GPUs to meet current VRAM demands. While the pace of VRAM gains has slowed for both consoles and GPUs, the key milestone for previous successful generations was achieving VRAM parity with consoles at similar price points within a couple of years of their launch. The GeForce 40 and 50 series have failed to do that.
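The parity test used throughout this section boils down to comparing a GPU's VRAM with the console's memory at a similar price point. The sketch below encodes only the pairings quoted in this article. Console memory is a unified pool shared by the CPU and GPU, so, like the article, it compares total console memory with dedicated VRAM. The class and labels are illustrative.

```python
from dataclasses import dataclass


@dataclass
class Comparison:
    label: str
    console_gb: float  # total console memory (unified CPU/GPU pool)
    gpu_gb: float      # dedicated VRAM on the comparable GPU

    @property
    def ratio(self) -> float:
        """GPU VRAM as a fraction of the console's memory."""
        return self.gpu_gb / self.console_gb

    @property
    def at_parity(self) -> bool:
        """True when the GPU has at least as much memory as the console."""
        return self.gpu_gb >= self.console_gb


# Pairings quoted in this article.
COMPARISONS = [
    Comparison("PS4 era, ~3 years in, console-price GPU", 8, 8),
    Comparison("PS5 era, RTX 4070 (console-price class)", 16, 12),
    Comparison("PS5 era, RTX 4060 (entry level)", 16, 8),
    Comparison("PS5 era, RTX 5060 ($300)", 16, 8),
]

if __name__ == "__main__":
    for c in COMPARISONS:
        status = "parity" if c.at_parity else "short"
        print(f"{c.label}: {c.gpu_gb:g} GB vs {c.console_gb:g} GB "
              f"({c.ratio:.0%}, {status})")
```

Only the PS4-era pairing reaches parity; the PS5-era cards land at 75% and 50%.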

Based on this historical data, the GeForce RTX 5060 should have a minimum of 12 GB of VRAM to be a suitable $300 product in 2025. Anything above this tier should be equipped with 16 GB.

This further highlights the shrinkflation that has plagued modern PC graphics cards. We now have an RTX 5050-class configuration being branded and sold as an RTX 5060 – at a price point 50% higher than typical 50-class GPUs. What you used to get for $300 now delivers significantly less – including VRAM. Historically, these cards should have 12 GB to match console advancements. Today, for the same money, you're getting just 8 GB: clear shrinkflation.

Why Have GPUs Shrinkflated?

Nvidia's lineup would make a lot more sense – and be far more appealing to gamers – if every model were shifted down the stack. The RTX 5080 becomes an RTX 5070, the RTX 5070 becomes an RTX 5060, and the RTX 5060 becomes an RTX 5050.

We shudder to think what the actual incoming RTX 5050 will look like. With those adjustments, and accompanying price changes appropriate for each tier, the GeForce 50 series could have been a massive success. It would not have disappointed as many gamers because their expectations regarding performance and value improvements would have been met generation over generation.

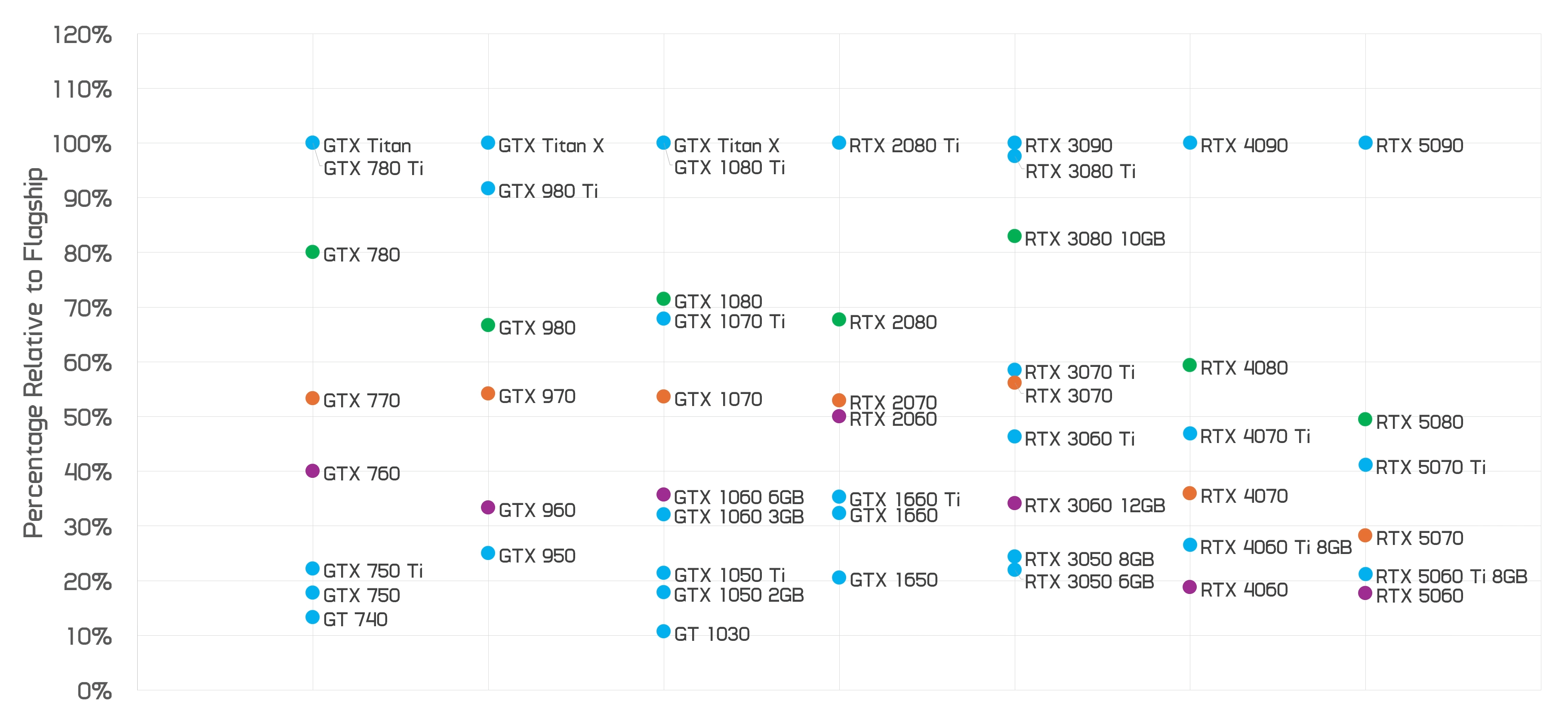

Nvidia GPUs: Average Configuration Summary

The RTX 5060 would not have looked so bad as a relatively weak hardware configuration with just 8 GB of VRAM if it had been branded as an RTX 5050 and priced closer to $200.

To fill the 5060 segment, the RTX 5070 should have taken its place, offering a historically suitable 12 GB of VRAM for around $300 to $350, along with a more reasonable core count relative to the flagship model.

It might sound crazy to suggest that a $550 graphics card should actually be priced closer to $300 – or generously, $350 – but the data suggests that is what GeForce used to offer.

So why is the GeForce lineup suffering from such significant shrinkflation? Why are consumers getting less value now than ever? The obvious answer: seemingly unlimited demand for AI GPUs, and profit. Nvidia is a company; it wants to make more money, and shrinkflating PC gaming GPUs (while selling far more AI GPUs at even higher prices to enterprise clients) is how you achieve that.

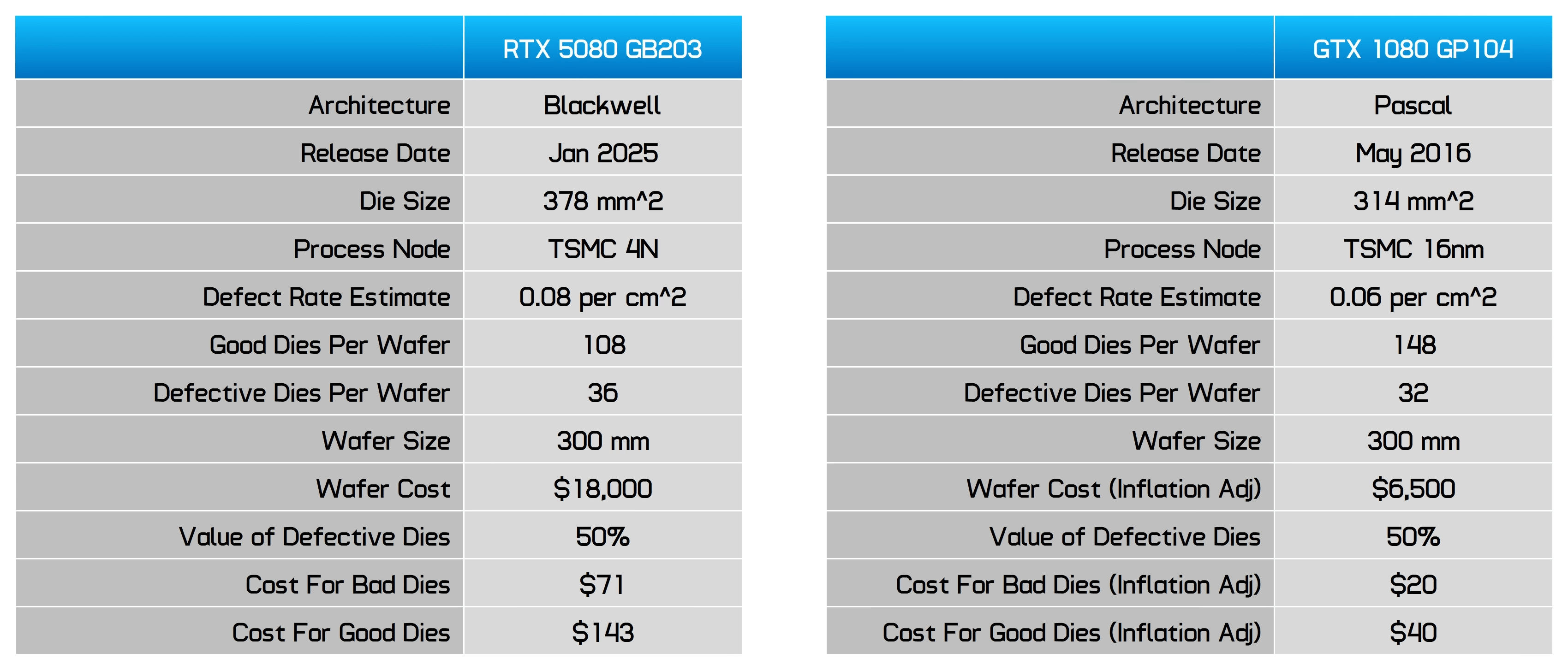

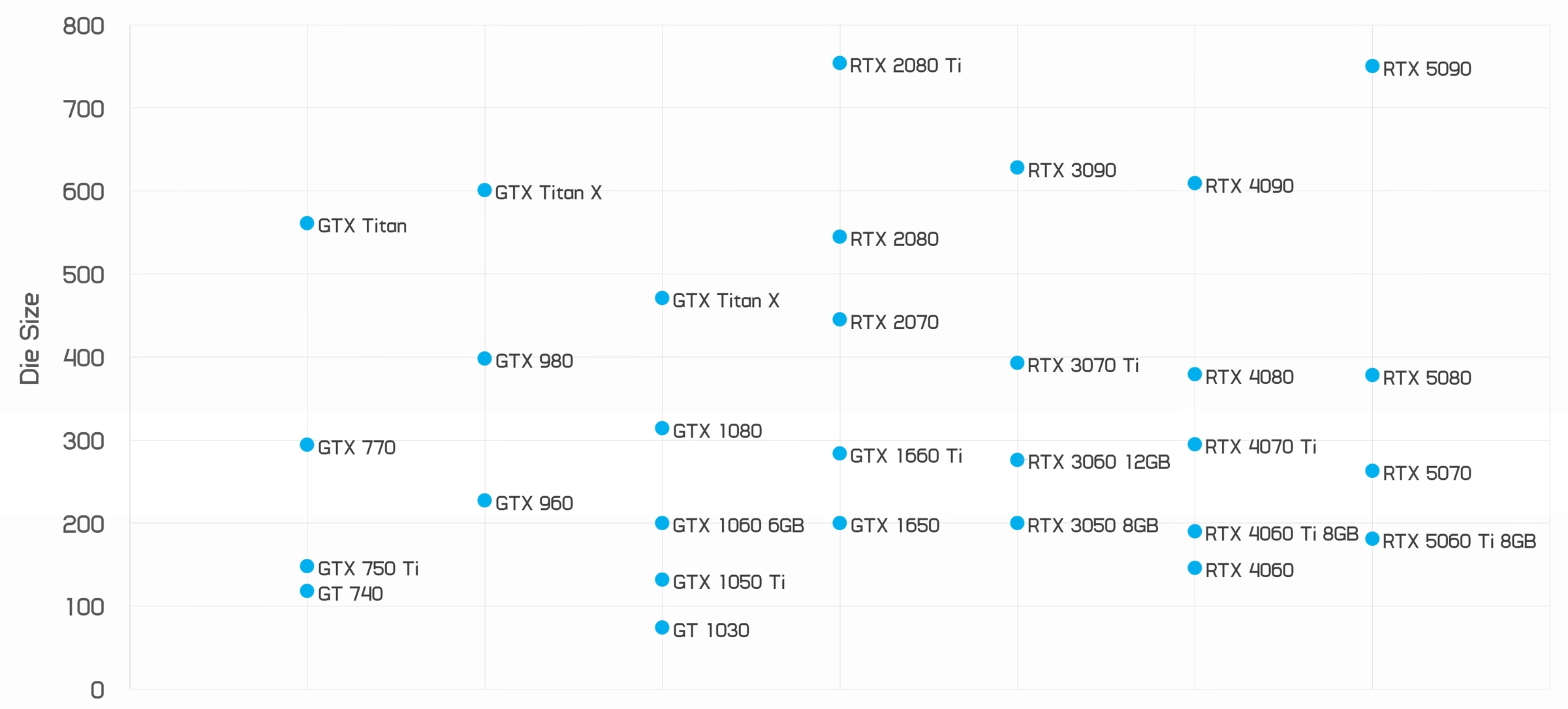

But as we also pointed out in our previous article where we argued the RTX 5080 is really an RTX 5070, the situation is more nuanced. The reality is that graphics cards are more expensive to manufacture today than they were in the past.

Based on rough estimates, we believe the cost of 70-class silicon has risen from around $40 during the Pascal era (when the GTX 1070 was built on TSMC's 16nm process) to about $140 today with TSMC's 4nm process.
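Estimates like these generally start from a dies-per-wafer approximation and a yield model. The sketch below uses the widely cited gross-dies-per-wafer formula and a simple Poisson yield model; it is not necessarily how our estimate was produced. The wafer prices, die area and defect density are hypothetical placeholders, not TSMC pricing.

```python
import math

WAFER_DIAMETER_MM = 300.0


def dies_per_wafer(die_area_mm2: float,
                   wafer_diameter_mm: float = WAFER_DIAMETER_MM) -> int:
    """Gross dies per wafer (common approximation; ignores scribe lines)."""
    radius = wafer_diameter_mm / 2
    gross = ((math.pi * radius ** 2) / die_area_mm2
             - (math.pi * wafer_diameter_mm) / math.sqrt(2 * die_area_mm2))
    return int(gross)


def poisson_yield(die_area_mm2: float, defects_per_cm2: float) -> float:
    """Poisson yield model Y = exp(-D * A), with A converted to cm^2."""
    return math.exp(-defects_per_cm2 * die_area_mm2 / 100.0)


def cost_per_good_die(wafer_cost_usd: float, die_area_mm2: float,
                      defects_per_cm2: float) -> float:
    """Wafer cost spread over the expected number of working dies."""
    good = dies_per_wafer(die_area_mm2) * poisson_yield(die_area_mm2, defects_per_cm2)
    return wafer_cost_usd / good


if __name__ == "__main__":
    # HYPOTHETICAL inputs for illustration only.
    area_mm2, defect_density = 300.0, 0.1
    for wafer_cost in (6_000, 18_000):
        cost = cost_per_good_die(wafer_cost, area_mm2, defect_density)
        print(f"wafer ${wafer_cost:,}: ~${cost:.0f} per good die")
```

Holding die area and defect density fixed isolates the point made below: if die sizes don't shrink, the cost per die scales directly with the wafer price.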

Nvidia GPUs: Die Cost Rough Estimate

The reality is that silicon wafer costs have risen substantially, but die sizes haven't shrunk to compensate. The RTX 5070, which we argue is more like an RTX 5060 in relative configuration, is similar in size to (if not slightly larger than) most previous 60-class dies.

The same is true of the RTX 5060 Ti 8 GB compared to other 50-class dies. But since the cost of each die is now significantly higher, Nvidia can no longer sell a "60-class die" at the price of previous 60-class GPUs... so it becomes a 70-class product.

Nvidia GeForce Die Size (sq.mm): 2013 to 2025

Models using full / near-full silicon listed

If Nvidia wants to maintain certain profit margins, rising production costs inevitably trickle down to the consumer. If the GPU die costs $100 more to manufacture and Nvidia aims to maintain a 50% gross margin (meaning the selling price is double the cost), the graphics card ends up costing the consumer $200 more. Convenient, isn't it?
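The $200 figure follows from how gross margin is defined: margin = (price - cost) / price, so price = cost / (1 - margin). At 50%, each extra dollar of cost becomes two dollars of price, whereas a 50% markup on cost would give only $150. A minimal sketch; it deliberately ignores board-partner and retailer margins, which would stack on top.

```python
def price_for_margin(cost: float, gross_margin: float) -> float:
    """Selling price that yields the given gross margin.

    Gross margin is (price - cost) / price, so price = cost / (1 - margin).
    """
    if not 0 <= gross_margin < 1:
        raise ValueError("gross_margin must be in [0, 1)")
    return cost / (1 - gross_margin)


if __name__ == "__main__":
    extra_die_cost = 100.0
    # At a 50% gross margin, $100 of extra cost becomes $200 of extra price.
    print(price_for_margin(extra_die_cost, 0.50))  # 200.0
```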

Another key factor is the lack of meaningful competition. This isn't just a story about gaming GPUs – it extends to AI chips and semiconductor manufacturing as well. In markets where Nvidia faces little resistance, it can keep margins high and pass both production costs and extra profit straight to buyers. As long as demand holds and products continue to sell, there's little reason for Nvidia to change course.

But competition has a way of forcing the issue. AMD's more capable Radeon RX 9000 series is starting to put pressure on Nvidia's pricing, and that's good news for gamers. Yes, manufacturing costs are up – and yes, that limits how cheap some GPU configurations can be. But the inflated margins layered on top? Those still have room to fall.