Expect corpodrones praising a $1000 card in 3, 2, 1...

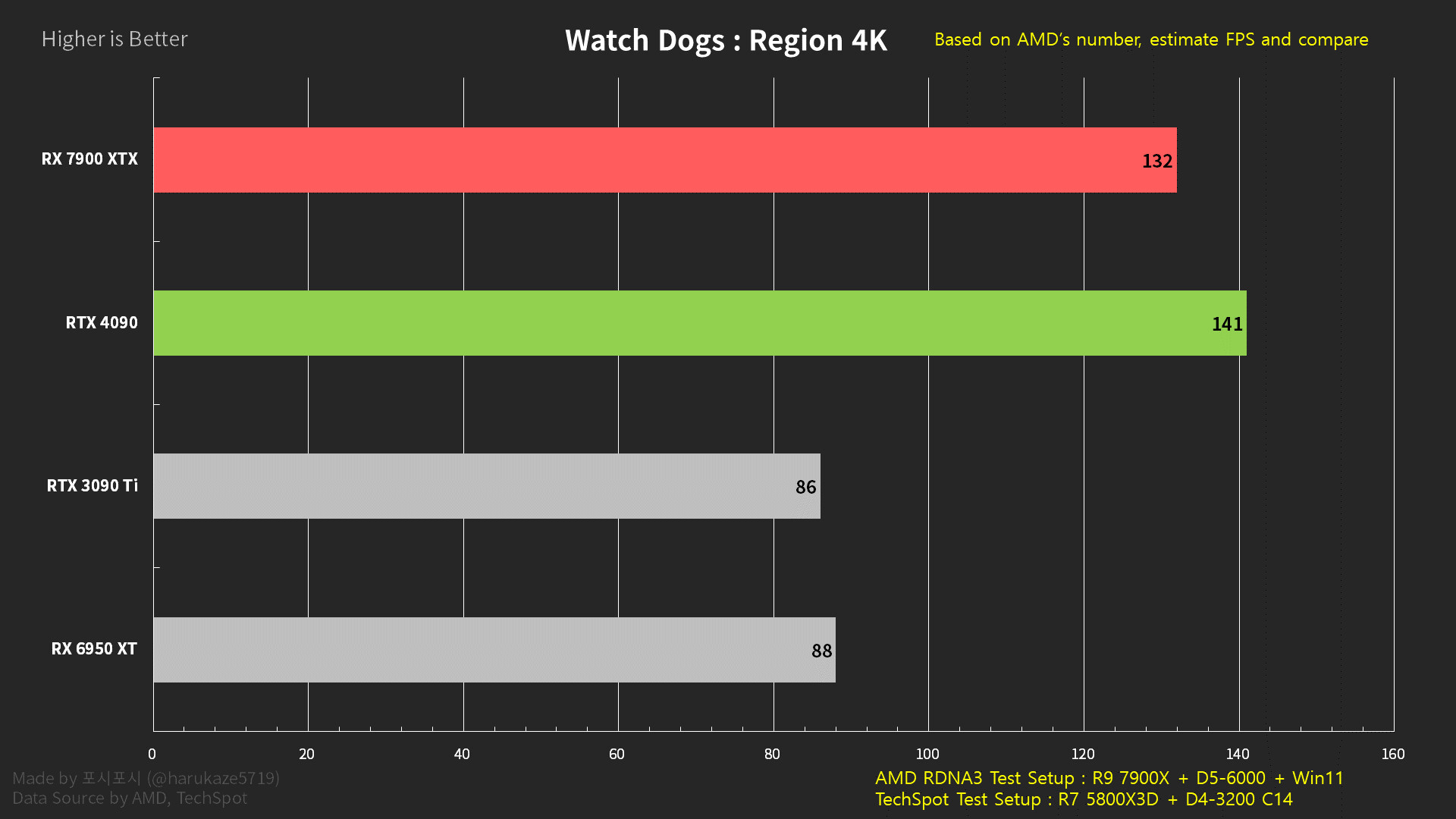

I understand your sentiment, but your view is too narrow. Any ATi product with a 9 as the second digit in its model number is a halo product that most people don't buy anyway. What AMD has shown is that this generation's cards should be $100 less expensive than their last-gen counterparts. The RX 7900 XTX is $100 less than the RX 6950 XT, and the RX 7900 XT is $100 less than the RX 6900 XT.

This means that the RX 7800 XT could be $549. Is that more palatable to you? I'll tell ya, it sure is more palatable to me!
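For anyone who wants the arithmetic spelled out, here's a minimal Python sketch of the "$100 less than last gen" rule. It assumes the last-gen US launch MSRPs ($1099 for the RX 6950 XT, $999 for the RX 6900 XT, $649 for the RX 6800 XT); the RX 7800 XT entry and its predecessor mapping are speculation, not anything AMD has announced.

```python
# Sketch of the speculative "new card = predecessor's MSRP minus $100" rule.
# Last-gen launch MSRPs in USD.
LAST_GEN_MSRP = {
    "RX 6950 XT": 1099,
    "RX 6900 XT": 999,
    "RX 6800 XT": 649,
}

# Assumed mapping of each new card to the last-gen card it replaces.
SUCCESSOR = {
    "RX 7900 XTX": "RX 6950 XT",
    "RX 7900 XT": "RX 6900 XT",
    "RX 7800 XT": "RX 6800 XT",  # speculative: not announced
}

DISCOUNT = 100  # the assumed generational price cut, in USD


def projected_price(new_card: str) -> int:
    """Return the predecessor's launch MSRP minus DISCOUNT."""
    return LAST_GEN_MSRP[SUCCESSOR[new_card]] - DISCOUNT


for card in SUCCESSOR:
    print(f"{card}: ${projected_price(card)}")
```

The first two results match the announced $999 and $899; the third is only what the same rule would give if AMD sticks to it.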

Better than nothing, anyway: not an insane $1600 and $1200 for an x80 card, especially if these two beat the 4080 in raster performance, or even match Ampere in RT. I'll be glad to see Ngreedia's mug fall in the dirt and 2020 cards drop significantly in price.

Check my previous post for what could be the pricing structure of this generation and a little instruction manual on how to read Radeon model numbers.

Do you know how inflation works? Genuine question.

Inflation doesn't affect tech the way it affects everything else, because tech is unique in that it gets less expensive over time to both produce and purchase. That's why the cost of CPUs and GPUs hasn't increased all that much in the last 20 years. Sure, the 2017 mining craze caused GPU prices to jump temporarily, but they did settle down. I think that in this case, nVidia wants to keep prices in the stratosphere where the market manipulation of the latest mining craze put them, but AMD can tell that prices like that aren't sustainable and would ultimately result in people just not buying them any more. AMD recognises that, sure, people can buy things they can't afford, but only for so long before...

"BACK TO REALITY, WHOOPS! THERE GOES GRAVITY!"

On the other hand, nVidia doesn't care and never did.

It will be interesting to see how important RT is in the long run. I've used it on the PS5 and seen others use it on PC. It looks awesome, but the difference is no bigger than, say, going from "high quality" to "highest quality". It seems to be something that Nvidia is pushing hard but only the enthusiast crowd is genuinely excited about.

It's only the young enthusiasts who are excited about RT, because veteran gamers like me recognise RT for what it is: a gimmick. We saw real game-changers like hardware tessellation engines, so we don't find RT all that impressive.

Wow, I thought they would be $999 and $1199 respectively. I think $899 is too close to the XTX's price, but maybe the performance isn't that different. Looks like this is gonna be a winner regardless.

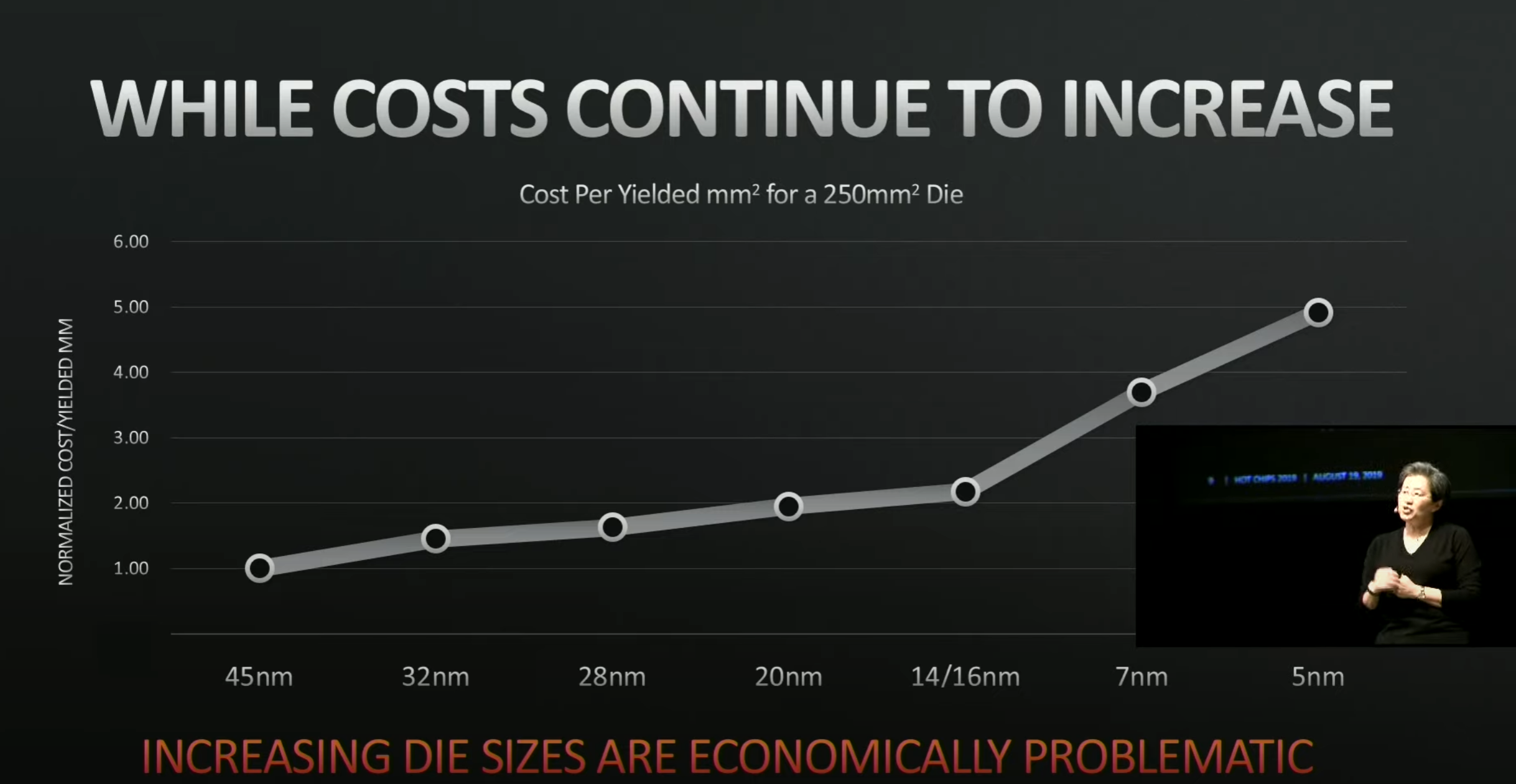

Remember that these are halo products. It's the cards with 6, 7 or 8 as the second digit that most people buy, and it's looking like they'll be $100 less expensive. That's all it'll take for AMD to win this generation and win BIG. By dropping the prices like this, they're also being strategic, because nVidia's GPU is far more expensive to produce than ATi's GPU based on die size alone. Add the economic advantage of using chiplets and it's obvious that AMD's pricing strategy is not only to increase their market and mind share but also to damage nVidia, because nVidia can't make GPUs as cheaply as ATi can.

There is no question the RTX 4090 is a monster of a card; it has no rival in performance or in price, but it's also a card that will end up in only a tiny number of people's hands.

Keep in mind that the same thing could be said about the 7900 XT/XTX cards because those are level 9 halo products. Most people buy cards at levels 6-8 and those cards will be significantly less expensive than the level 9 halo cards.

It's those cards that matter most, because marketshare = mindshare. I had a co-worker who had been gaming for well over a decade, and only after getting to know me did he finally decide to try a Radeon card. In the beginning, he was like "I only like nVidia" (even though he'd never had an ATi card in his life). I asked him how he was liking his RX 6600 XT and he admitted, "It's like you said, there's no real difference between them except that nVidia costs more." That means he's no longer averse to buying red.

If Radeons are significantly less expensive for the same (or better) performance (which they almost always are), more and more people will make the same discovery he did and that will do far more to increase profitability than an extra $100 per card in a single generation. It looks like AMD has finally figured this simple fact out.

Very humble pricing for a flagship card. I'm skeptical about why they kept the price of the 7900 XTX so far below the 4090's and, at the same time, excited to see how it will turn out.

The way I see it, the reasons the RX 7900 XTX is priced the way it is are twofold.

Reason #1 - From what we've seen, the RTX 4090 is way out on a level that most people don't care about (in both performance AND price), so why would AMD bother incurring the cost of getting ATi to produce a GPU like that? Developing extreme models like that is disproportionately expensive and requires things like huge power draw and exotic cooling solutions.

Remember that people are so dumb that they actually paid MORE for RTX 3080s with only 10GB of VRAM than for the RX 6900 XT, despite the Radeon being faster and having 6GB more VRAM. I'm sure that AMD noticed this and said to themselves, "If they're THAT brainwashed, then we're wasting our time," and so didn't bother getting ATi to make anything that would cost more than it would be worth.

It's a far better business decision to instead save that development money and use it to offer lower prices in market segments where people will actually buy. This fixation on halo products is just plain stupid, because they have absolutely no impact on the cards that most people will actually buy.

Looking at how the 6900 XT and 6950 XT gave the 3090s a run for their money, trading blows almost evenly, AMD should keep up the tempo this generation too.

The problem is, as I said, too many fools would rather pay more for a slower 10GB 3080 than less for a faster 16GB 6900 XT. Hell, even I was astonished by this, because I thought for sure people would say "Oh hell, good enough!" but they didn't.

You know, you can't fix stupid, and so AMD isn't even going to try. They're just going to leverage the cost savings of using chiplets to flood the market and gain mindshare. Then, when the efficiency of chiplets causes Radeon performance to exceed that of GeForce, they'll be in a much better position to actually get sales at the halo level. Remember that ATi's principal source of revenue isn't RDNA, it's CDNA, and Radeon Instinct is making them an absolute killing. They have no need to kill themselves chasing the retail halo crown. As things are now, it would be a fool's errand anyway.

Lest they risk disappointing all those waiting for these 7-series Radeons.

I don't get why anyone would be disappointed. Having great cards that people can actually afford to buy and use is, to me, a much better outcome than having two halo-level products with halo-level pricing that few, if any, can afford. That would severely damage PC gaming in general. I truly believe that Radeon will eventually surpass GeForce, just like Zen did with Core.

Remember that AMD was much further behind Intel than ATi was behind nVidia but still came back from the brink to slap Intel down. Now, with AMD being a much stronger company than they were then (EPYC completely OWNS the server space these days), there's no reason why they can't do the same to nVidia.

Chiplet design certainly seems more elegant on paper than the old-school monolithic approach. Whether this translates to more reasonable prices in the mid-tier (via improved wafer yields) remains to be seen.

Well, it worked amazingly with Zen so it should work with both CDNA and RDNA.

Yes, their chiplet design will give them higher yields, too, so it will be easier for them to keep supply up and costs down versus Nvidia in this generation.
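To put the yield argument in numbers, here's a minimal sketch using the textbook Poisson yield model, Y = exp(-A * D0), where A is die area and D0 is defect density. The defect density and die sizes below are hypothetical round numbers chosen for illustration, not AMD or Nvidia figures, and the model assumes chiplets are tested before packaging so a defect only scraps the small die it lands on.

```python
import math

# Hypothetical defect density in defects per cm^2 (illustrative assumption).
DEFECT_DENSITY = 0.1


def poisson_yield(area_cm2: float, d0: float = DEFECT_DENSITY) -> float:
    """Fraction of dies with zero defects under the simple Poisson model Y = exp(-A * D0)."""
    return math.exp(-area_cm2 * d0)


def silicon_per_good_die(area_cm2: float, d0: float = DEFECT_DENSITY) -> float:
    """Average wafer area consumed to obtain one defect-free die of this size."""
    return area_cm2 / poisson_yield(area_cm2, d0)


# Hypothetical die sizes in cm^2 (6.0 cm^2 = 600 mm^2).
MONOLITHIC = 6.0
COMPUTE_DIE = 3.0
MEMORY_DIE = 0.37
MEMORY_DIES_PER_GPU = 6

# One big die: a single defect anywhere scraps the whole thing.
mono_cost = silicon_per_good_die(MONOLITHIC)
# Chiplets: each small die is scrapped (or kept) on its own.
chiplet_cost = silicon_per_good_die(COMPUTE_DIE) + MEMORY_DIES_PER_GPU * silicon_per_good_die(MEMORY_DIE)

print(f"monolithic: {mono_cost:.2f} cm^2 of wafer per good GPU")
print(f"chiplet:    {chiplet_cost:.2f} cm^2 of wafer per good GPU")
```

With these made-up inputs the chiplet design needs roughly 40% less wafer per good GPU. The sketch ignores packaging cost, the chiplets possibly using a cheaper process node, salvaging partly defective dies as cut-down models, and wafer-edge losses, so treat it as a direction, not a real cost estimate.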

Exactly. This is a huge opportunity for them.

Whether the supply will be enough, whether the rasterization performance will be high enough, and whether RT makes a huge impact will be the deciding factors for most people.

Increased yields mean increased supply and the rasterisation performance of even the previous generation was overkill for most people. There's no way that it won't be good enough now that it's better than even that.

These cards are quite affordable compared to Nvidia's, so I expect that from a sales standpoint they will be a strong winner for AMD. As long as they can slightly beat the 4080 in performance, these cards may also become the go-to for the content creator crowd: lots of memory for a lot less money, still enough compute to get the job done, and lower energy costs.

I think what AMD wants is to get as many Radeon cards into as many gamer hands as possible to increase their mindshare as much as possible. Pricing like this will do that.

Yes, because we should always trust AMD's and Nvidia's slides to show 100% accuracy and transparency.

I know, eh? That presentation was GAWD-AWFUL! I was like "Why are they only showing frame rates with FSR? I don't care about FSR, I want to know how good the HARDWARE is!". It was just plain stupid, but these presentations usually are. All I wanted from this presentation was to actually see the cards, learn the pricing and learn the release date. I have no intention of buying one because my RX 6800 XT will do me fine for many years to come but I do enjoy discussions like this and so I wanted to be informed. I also like to see the bigger picture and to do that, one must always be paying attention.

Nah, I'm mostly complaining about 80-tier cards; they could've been around the $500-700 mark. I don't really care what they want for the XTX 9999 Super Ti Halo Edition. The only reason I worry about halo prices is that corps use halo products to dictate price tags for more "reasonable" products. If that weren't the case, and the 4080 had the top-tier chip and cost $599-699, I couldn't care less if they priced the 4090 north of 10 grand.

Anyway, I'm fine with AMD's flagship going for $999 and not $1.5K; that should bring prices down. Too bad about the 7900 XT, though: $100 less is insignificant given that performance gap, and it still guarantees AIBs will go north of $1000 for a non-top-tier card.

It seems that their plan is to price new cards for $100 less than the previous generation. That would put the RX 7800 XT at $549, right where the RX 6800 XT should have been to begin with.

I've been a lawyer and a .NET dev since the start of my careers, and despite all the raises in my gross income, every year I've ended up earning less. But maybe it's just because I was born in Ukraine, so I was ****ed in life from the start, and things are fair in "americas and europes".

No, it's more a matter of "In the USA, if you're already rich, you get richer, but if you're not already rich, you get poorer." Where the private sector is involved, the people at the very top make a mint while everyone else gets squeezed to death.

It's even worse in the USA than in most places in the developed world, because people get hit with medical bills that force them to declare bankruptcy. The thing is that Americans tend to be very short-sighted and say things like "Why should I pay someone else's medical bills?" because they have no concept of the fact that one day, someone else will be paying theirs. They seem to have this idea that they'll never get old and sick. Now, elderly people in the USA have become impoverished because of the same attitude that they had when they were young. I guess it's a kind of poetic justice, because they're being punished for their own greedy actions in the past.

To me, the most exciting thing about these new AMD graphics cards is that they support DisplayPort 2.1. If one is going to get a monster graphics card, one ought to be able to actually enjoy the high frame rates it makes possible!

Yeah, but looking at the performance of the RTX 4090, is it really that hampered when it's still on another level with only DP 1.4 while the Radeons have DP 2.1?
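Whether DP 1.4 is a bottleneck comes down to link bandwidth, so here's a minimal sketch comparing a 4-lane DP 1.4 link (HBR3, 8.1 Gbit/s per lane, 8b/10b encoding) with a 4-lane DP 2.1 link at UHBR20 (20 Gbit/s per lane, 128b/132b encoding). UHBR20 is the top DP 2.1 rate; I'm not assuming any particular card actually runs its ports at it. The 4K, 144 Hz, 10-bit target is just an example.

```python
# Effective (post-encoding) payload rate of a 4-lane link, in Gbit/s.
LINKS = {
    "DP 1.4 (HBR3)": 4 * 8.1 * 8 / 10,        # 8b/10b encoding
    "DP 2.1 (UHBR20)": 4 * 20 * 128 / 132,    # 128b/132b encoding
}


def video_gbps(width: int, height: int, refresh_hz: int, bits_per_pixel: int) -> float:
    """Uncompressed pixel data rate in Gbit/s, ignoring blanking intervals (an underestimate)."""
    return width * height * refresh_hz * bits_per_pixel / 1e9


need = video_gbps(3840, 2160, 144, 30)  # 4K, 144 Hz, 10-bit RGB (30 bits per pixel)

for name, capacity in LINKS.items():
    verdict = "fits" if need <= capacity else "needs DSC"
    print(f"{name}: {capacity:.2f} Gbit/s available, {need:.2f} needed -> {verdict}")
```

Since the estimate leaves out blanking, the real signal needs even more than this, so if it already exceeds DP 1.4's capacity, a real 4K 144 Hz 10-bit signal does too. That's why DP 1.4 cards lean on Display Stream Compression (DSC) at those settings; the GPU's rendering speed doesn't change either way.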

So the big feature, chiplets, is a bit of a disappointment to me.

What were you expecting? You should remember that when Zen first came out, people were also "disappointed" that it didn't completely mop the floor with Intel Core on the first try. Today, though, AM4 outsells AM5 and 13th-gen COMBINED.

Also, I'm not surprised their ray tracing performance is likely to be 'way behind Nvidia's. Sure, that doesn't sound great from a marketing perspective. But they've managed to make their ray tracing and upscaling at least adequate, and for serious competitive gamers, frame rates matter more than being able to turn settings up to make the game look prettier.

Nobody complained about the RT performance of the RTX 3000-series so if the Radeons are capable of that, nobody should be complaining about them either.

So AMD is giving people the choice of not paying for ray tracing they don't need.

That's a good way of looking at it.

A big reason, though, that will lead many people to choose Nvidia over AMD is that Nvidia Broadcast is a good free solution for replacing the background for streamers.

I don't agree with that assessment, because 99% of gamers are not streamers. Sure, it's a great advantage for streamers, but they make up such a small share of the market that it's like talking about extreme overclockers. They exist, but they're so few as to be irrelevant.

AMD doesn't have anything like that; there's one deficiency in their software suite that they should be addressing.

Again, it's because there aren't enough streamers out there for it to be a priority.

Of course they don't have tensor cores, but since there are non-Nvidia solutions (either paid software, or awkward kludges) at present, AMD could still provide something that was nearly as good.

Sure, they could, but the difference made by RT is still so small that I really don't care about it and I'm thinking that most people wouldn't care if they actually thought logically about it instead of just thinking "I WANT IT, I WANT IT!".

The changes mentioned are neat. Is it just me, or does this feel like a stopgap?

There's a sense of something much bigger brewing in the AMD labs; they are successfully tweaking and adding features they didn't have before. It's busy over there... Great work, if you ask me.

You're right, it IS a stop-gap. Zen was a stop-gap before Zen2 and Zen3 came along to topple Intel. The first chiplet Zen models weren't better in most cases than Intel Core but Zen had room to improve. RDNA has that same room to improve.

Honestly very tempted by the 7900 XTX. As usual I'll wait for reviews, but it probably performs better than anything else other than the 4090, which it's probably nipping at the heels of anyway. For near enough half the price (in the UK you can't really get hold of a 4090 for less than £2k), I might actually pick one up for my new build.

If more people used their brains like you do, I think this world would be a better place, because you're absolutely right.