Highly anticipated: AMD Radeon RX 7000 series graphics cards have just been unveiled and we've got all the details you need to know, plus our general thoughts on what AMD has shown off. The graphics card market this late in 2022 and into 2023 is certainly going to be very exciting, with a boatload of competition between these new Radeon GPUs and Nvidia's RTX 40 series.

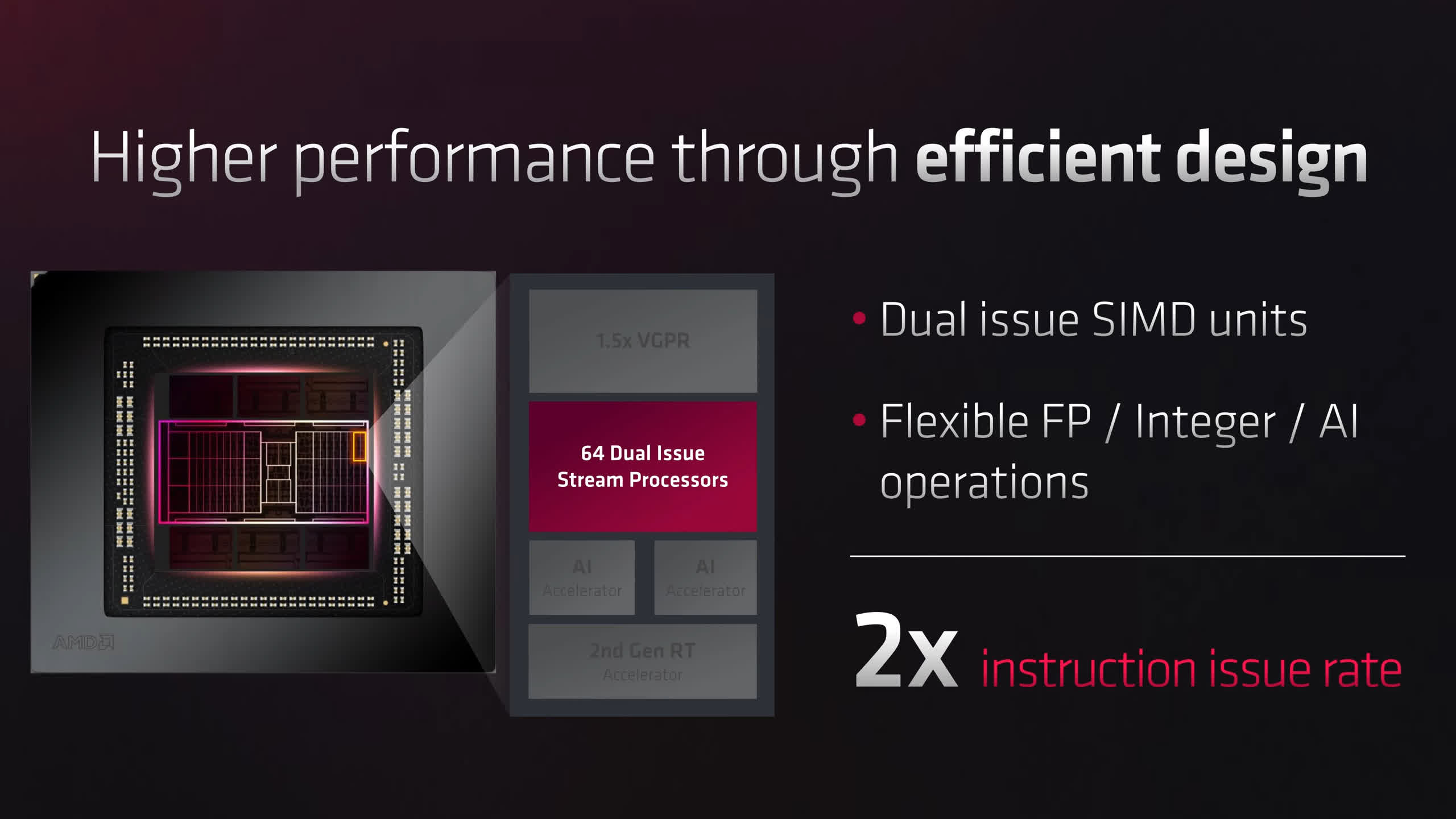

Let's start with the models AMD announced today. The flagship GPU is the Radeon RX 7900 XTX, packing some beefy specs: 48 workgroup processors (WGPs, for short), or 96 compute units if you want to use that language, with a total of 12,288 shader units. The 7900 XTX packs 20 percent more compute units than the 6950 XT, but AMD has moved to a dual-shader design within each compute unit, effectively doubling the shader unit count inside each CU. Nvidia's Ampere architecture introduced a similar design principle which ended up boosting gaming performance by over 20 percent at a given SM count, so it's a positive to see RDNA3 introducing something similar.

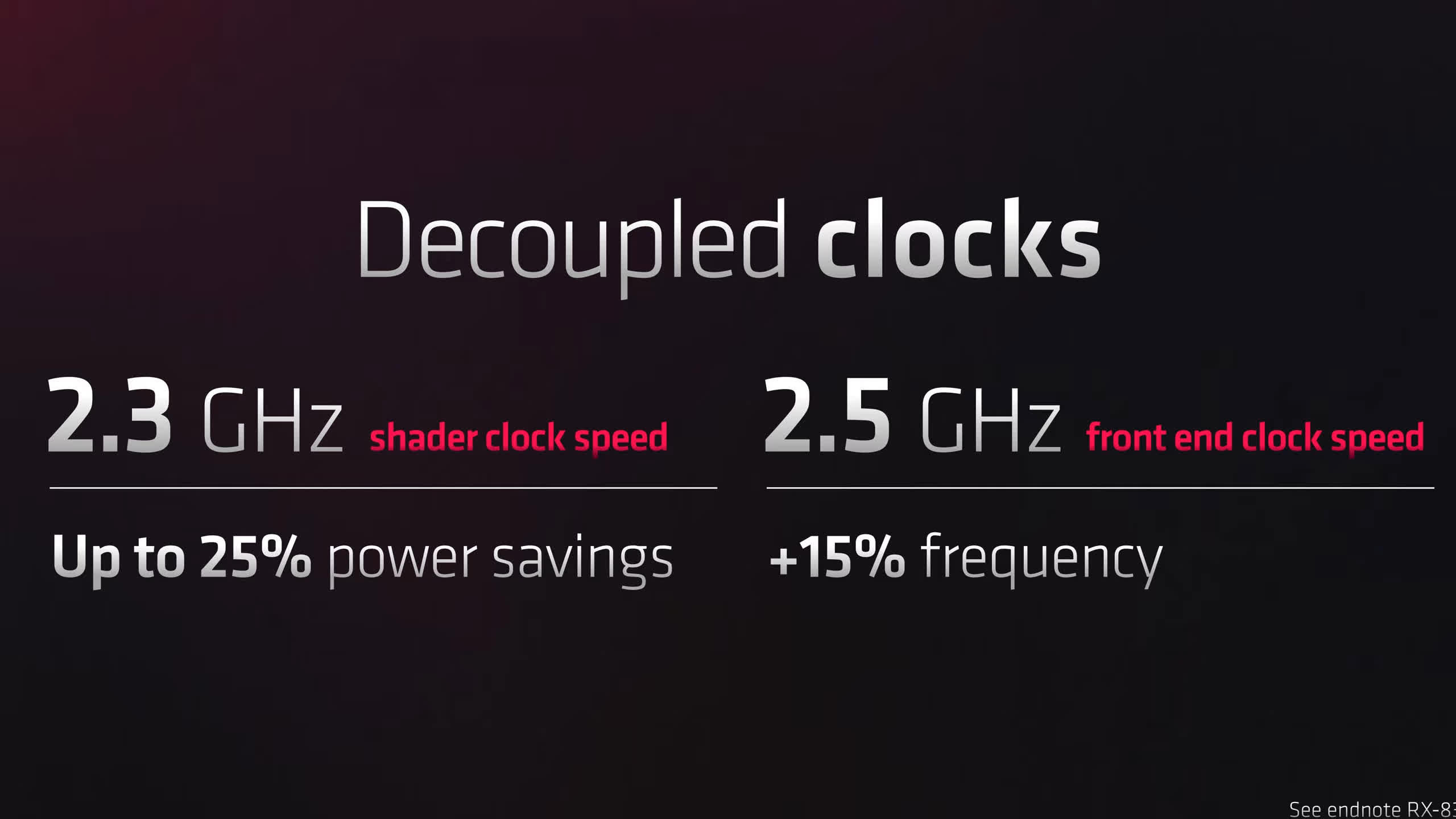

There were rumors that RDNA3 would be a much higher clocked architecture, but that hasn't come to fruition based on AMD's official specifications. The 7900 XTX is clocked at a 2.3 GHz game clock, which is pretty similar to the 6950 XT. AMD is still touting much higher performance, of course, but clocks have remained modest.

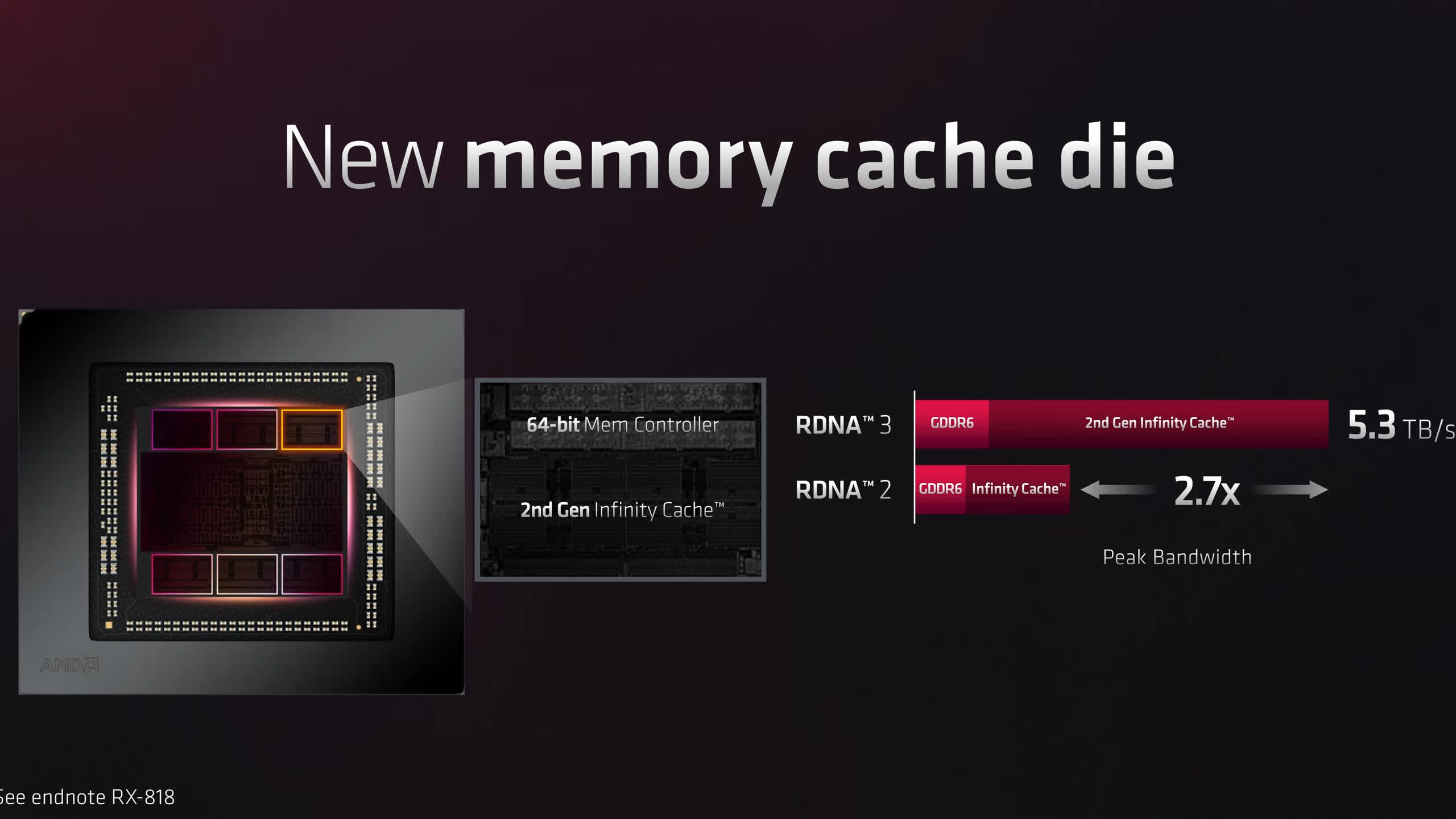

The memory subsystem is also improved relative to flagship RDNA2. While AMD is continuing to use GDDR6 memory, they've moved up to a 384-bit bus here, and reports have suggested an increase in memory speed to 20 Gbps, though AMD didn't confirm that in the presentation.

Previously AMD was using up to 18 Gbps memory on the 6950 XT with a 256-bit bus, so we could be seeing the 7900 XTX with over 60% more memory bandwidth depending on where final clocks lie. There's also more memory, up to 24GB, which matches what Nvidia offers on the RTX 4090.
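To put a number on that bandwidth claim, here is a minimal sketch of the usual peak-bandwidth arithmetic (bus width times per-pin data rate, divided by eight). The 20 Gbps figure for the 7900 XTX is the rumored speed, not something AMD confirmed, and the function name is our own.

```python
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s.

    Each pin of the bus transfers `data_rate_gbps` gigabits per second,
    so the total is bus width x data rate, converted from bits to bytes.
    """
    return bus_width_bits * data_rate_gbps / 8


# 6950 XT: 256-bit bus, 18 Gbps GDDR6 (from the article)
rx_6950_xt = memory_bandwidth_gbs(256, 18)

# 7900 XTX: 384-bit bus, 20 Gbps GDDR6 (ASSUMPTION: rumored, unconfirmed speed)
rx_7900_xtx = memory_bandwidth_gbs(384, 20)

uplift = rx_7900_xtx / rx_6950_xt - 1

print(f"6950 XT:  {rx_6950_xt:.0f} GB/s")
print(f"7900 XTX: {rx_7900_xtx:.0f} GB/s")
print(f"Uplift:   {uplift:.0%}")
```

If final memory clocks land at 20 Gbps, this works out to roughly two thirds more bandwidth, which is where the "over 60%" figure comes from.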

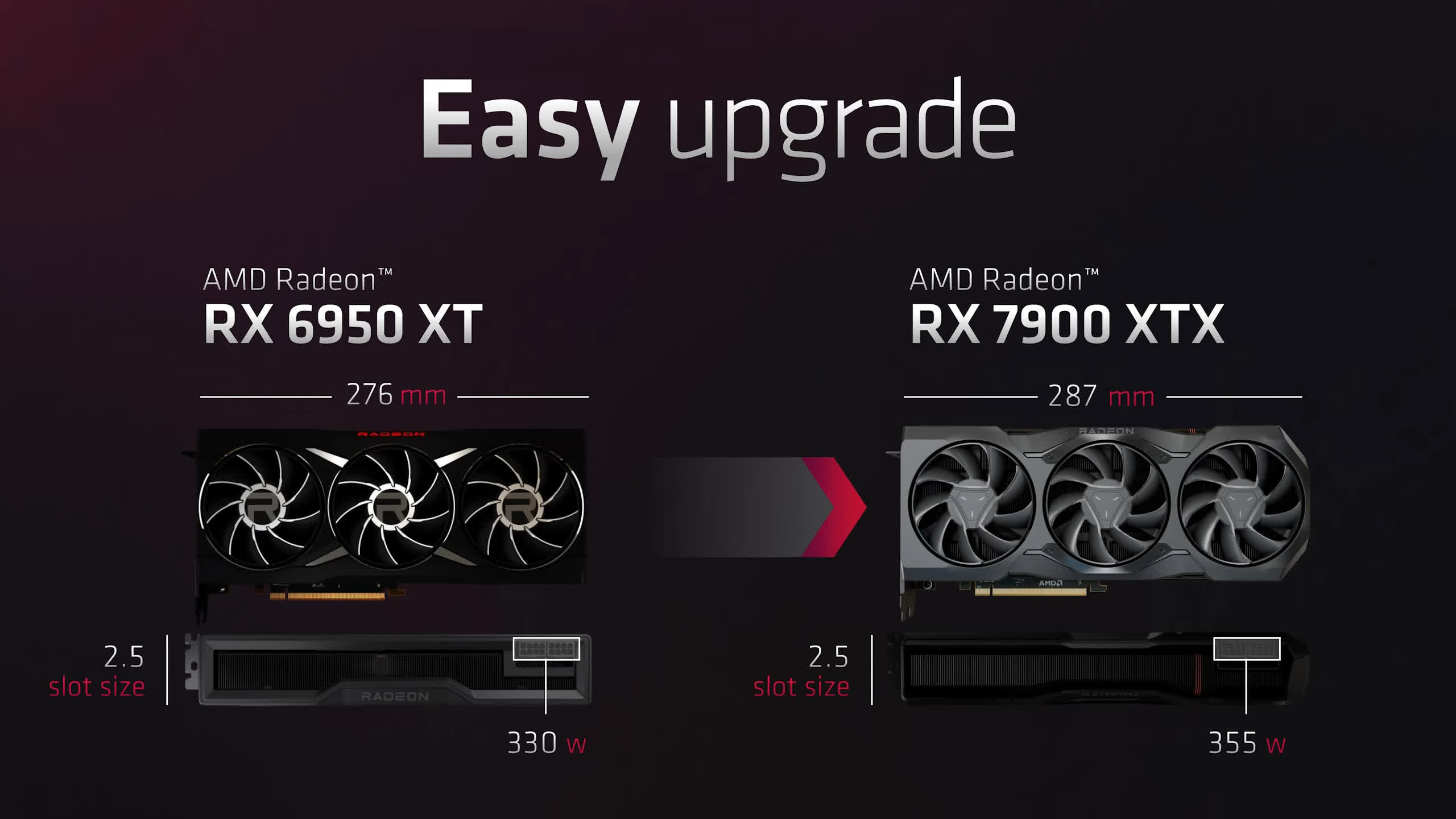

All of this ends up with a total board power rating of 355 watts, which is a modest increase over flagship RDNA2 that topped out at 300 watts for the 6900 XT and 335 watts for the 6950 XT. It is lower than the RTX 4090's whopping 450W rating, so perhaps AMD has the more efficient design this time around.

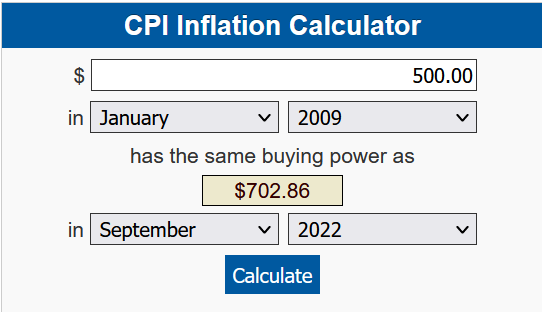

The Radeon RX 7900 XTX will be available on December 13 priced at $999 – and we'll get to discussing advertised value in a moment. For now, let's look at the second graphics card AMD announced.

Coming in a step below the 7900 XTX is the Radeon RX 7900 XT, a name similar enough to the XTX model that it might confuse everyday shoppers. We're not sure why these parts are so closely named given the wealth of numbers on offer, but here we are.

This card is essentially a cut down version of the 7900 XTX. Instead of 48 WGPs we get 42, for a total of 84 compute units – still an increase on the highest end RDNA2 model. This leads to 10,752 shader units. Clock speeds are also a little lower, at a game clock of 2 GHz with a total board power rating of 300W.

The memory bus is cut to 320-bit, but we still get a healthy 20 GB of GDDR6. In terms of core and memory configuration the 7900 XT ends up at roughly 85% of the flagship model, a much smaller gap than Nvidia offers between the RTX 4090 and RTX 4080, the latter of which is more like a 60 to 70 percent version of the flagship. However, the XTX vs. XT naming is a bit unusual given the gap in hardware is more like the difference between the 6950 XT and 6800 XT in the previous generation.
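The relationship between the two cards can be sketched from the numbers above. The per-CU shader count of 128 is our inference from the quoted totals (12,288 shaders across 96 CUs), and the `Card` class is purely illustrative.

```python
from dataclasses import dataclass

CUS_PER_WGP = 2       # each workgroup processor holds two compute units
SHADERS_PER_CU = 128  # ASSUMPTION: inferred from 12,288 shaders / 96 CUs


@dataclass(frozen=True)
class Card:
    name: str
    wgps: int
    bus_width_bits: int
    memory_gb: int

    @property
    def compute_units(self) -> int:
        return self.wgps * CUS_PER_WGP

    @property
    def shader_units(self) -> int:
        return self.compute_units * SHADERS_PER_CU


xtx = Card("RX 7900 XTX", wgps=48, bus_width_bits=384, memory_gb=24)
xt = Card("RX 7900 XT", wgps=42, bus_width_bits=320, memory_gb=20)

for card in (xtx, xt):
    print(f"{card.name}: {card.compute_units} CUs, {card.shader_units:,} shaders")

# How much of the flagship configuration the XT retains
print(f"Compute units: {xt.compute_units / xtx.compute_units:.1%}")
print(f"Bus width:     {xt.bus_width_bits / xtx.bus_width_bits:.1%}")
print(f"Memory:        {xt.memory_gb / xtx.memory_gb:.1%}")
```

The compute ratio comes out a little above 85% and the memory ratios a little below, so "roughly 85%" is a fair summary of the whole configuration.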

The Radeon RX 7900 XT will also become available on December 13 priced at $899, representing a 10% price reduction over the flagship model.

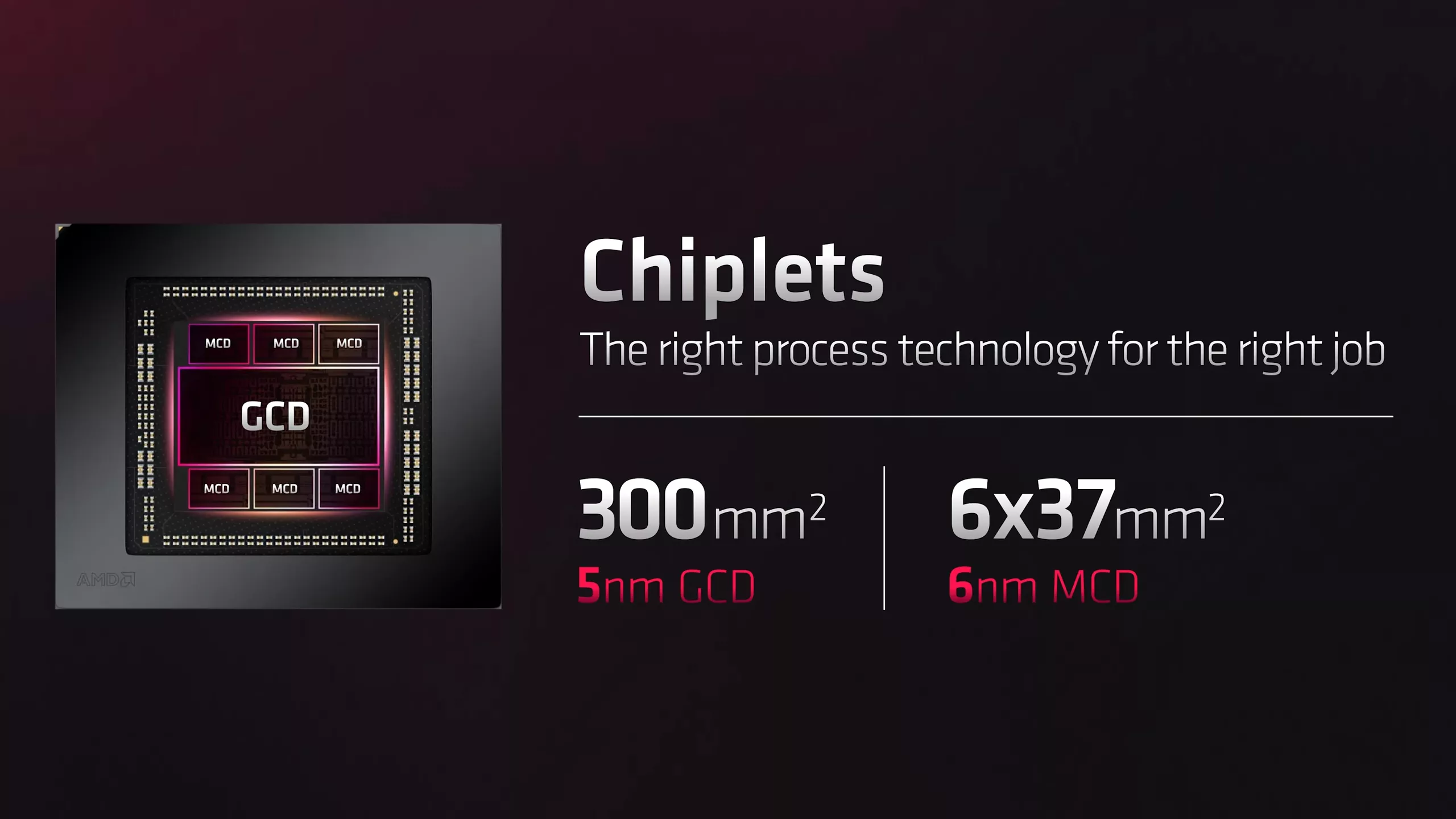

But AMD's RDNA3 GPU isn't just interesting from a graphics card specification perspective – it's also the very first high performance consumer GPU design to use chiplet technology.

Rather than going all out with a massive monolithic die like most companies have been doing for ages, RDNA3 uses a combination of smaller dies in a similar (though not that similar) way to AMD's latest Ryzen CPUs. This means that to get top-end GPU configurations, AMD no longer needs to make a single 520+ mm2 die like RDNA2, or approach the behemoth that is the AD102 600 mm2 die used in the RTX 4090.

AMD's RDNA3 chiplet approach includes two components: the Graphics Compute Die or GCD, which houses the main WGPs and processing hardware; plus multiple Memory Cache Dies or MCDs, which include the infinity cache and memory controllers. On the flagship 7900 XTX we get one GCD plus six MCDs, while the 7900 XT features a cut down GCD plus five MCDs.

AMD's architecture benefits in multiple ways from this move to chiplet technology. One is that they can now split their GPU design over multiple nodes, reducing the amount of expensive leading edge silicon they need in every GPU.

The GCD is built on TSMC's latest N5 node, but the MCDs are built on TSMC N6, which is a derivative of their older and less costly 7nm process. This ends AMD's reliance on needing huge chunks of the latest silicon for every high-end GPU.

The other main advantage is yields. Large monolithic dies have lower yields than smaller dies, so whenever a design can be split into multiple smaller dies, there's a good chance yields will increase substantially.

AMD has found great success with this approach on Ryzen, where modern Zen 4 chiplets are about 70 mm2, tiny enough that yields should be well over 90%. RDNA3 won't have as much of an advantage as Zen 4 as the GCD is still relatively large compared to a CPU, but at 300 mm2 it's half the size of AD102 and just 60% as large as Navi 21. That sort of size saving will have big implications for yields.

Flanking the GCD are six MCDs about 37 mm2 in size, so these are tiny chiplets just half the size of a Zen 3 CPU core chiplet. Each has a 64-bit GDDR6 controller and 16MB of cache. On a mature node like N6 these should have extremely high yields, so AMD will benefit here a lot even though they need six of them for the 7900 XTX. All up, while flagship Navi 31 RDNA3 GPUs still need over 500 mm2 of total silicon, this chiplet approach split across two nodes should reduce manufacturing costs and increase yields. Whether or not AMD will benefit the most through margins, or consumers will benefit through a great value product, we'll have to explore later on in our review.
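To illustrate why die size matters so much for yields, here is a rough sketch using the simple Poisson yield model, where yield falls off exponentially with die area. The defect density is a hypothetical value we picked for illustration, not a TSMC or AMD figure, and using the same value for N5 and N6 is a simplification. The die areas are the approximate ones quoted above.

```python
import math

# ASSUMPTION: hypothetical defect density, chosen only for illustration.
DEFECTS_PER_CM2 = 0.1


def poisson_yield(area_mm2: float, defects_per_cm2: float = DEFECTS_PER_CM2) -> float:
    """Fraction of defect-free dies under the Poisson model: exp(-area * D0)."""
    area_cm2 = area_mm2 / 100.0
    return math.exp(-area_cm2 * defects_per_cm2)


# Approximate die areas in mm2, as quoted in the article
dies = {
    "Navi 31 MCD": 37,
    "Zen 4 chiplet": 70,
    "Navi 31 GCD": 300,
    "Navi 21": 520,
    "AD102": 600,
}

for name, area in dies.items():
    print(f"{name:14s} {area:4d} mm2  estimated yield {poisson_yield(area):.1%}")

# Total silicon in a full Navi 31 package: one GCD plus six MCDs
navi31_total_mm2 = dies["Navi 31 GCD"] + 6 * dies["Navi 31 MCD"]
print(f"Navi 31 total silicon: {navi31_total_mm2} mm2")
```

Real yields depend on the node's actual defect density, redundancy in the design and how partially defective dies are salvaged (the cut down 7900 XT is one example), so treat the absolute percentages as illustrative. The ordering is the point: the smaller the die, the better the yield.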

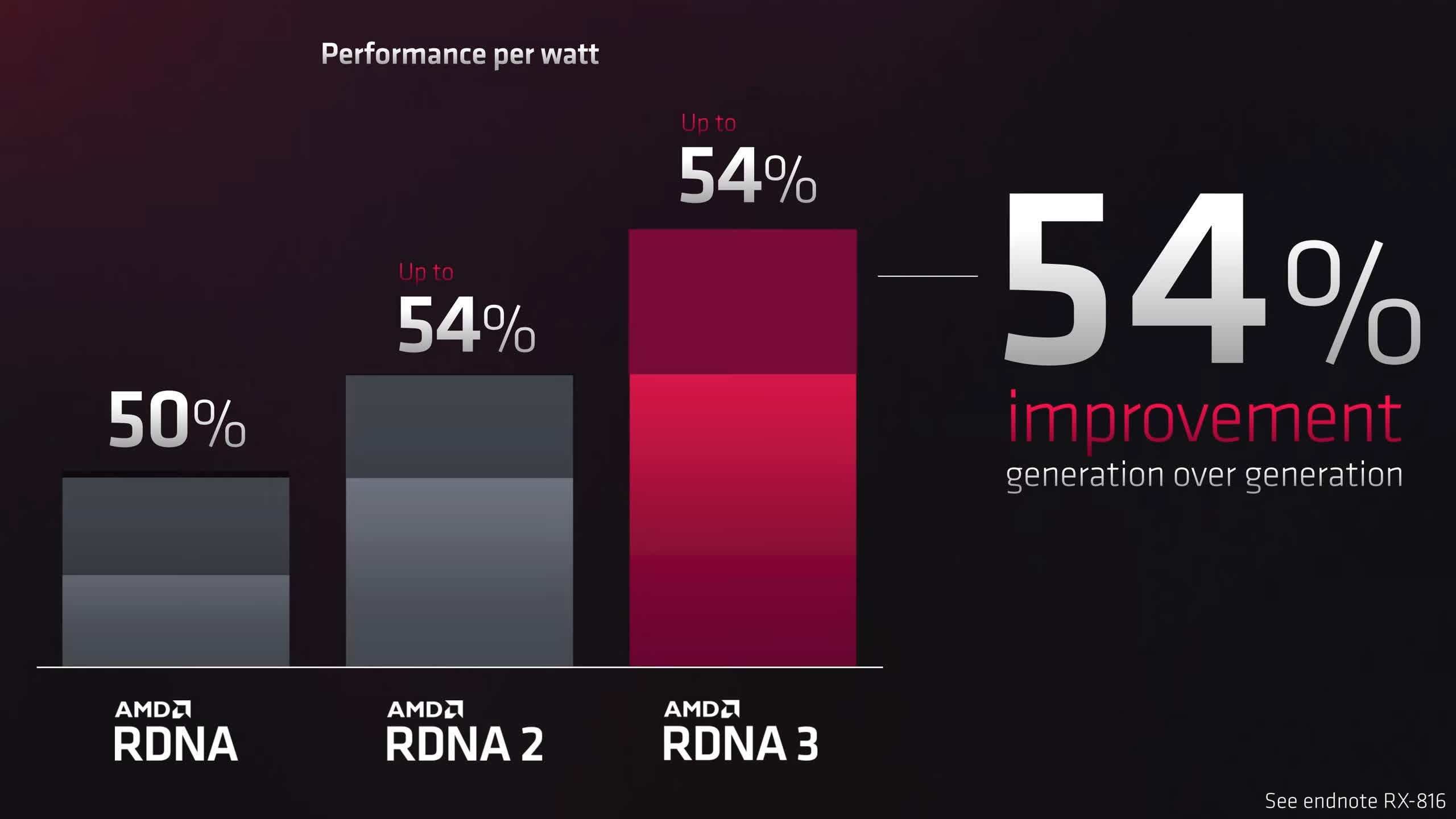

Aside from the new chiplet design, AMD is touting a significant improvement to performance per watt. Previously AMD revealed a greater than 50% gain in this figure comparing RDNA3 and RDNA2, and they've revised that during today's announcement to a figure of 54%.

While of course it's nice to see a substantial gain like this, it is somewhat expected given the generational shift in process tech for the GCD. Both RDNA and RDNA2 were manufactured using 7nm technology, while RDNA3 moves up to 5nm; you'd definitely hope for a big improvement to efficiency.

AMD also isn't pushing power to ridiculous levels with the models announced so far, which is a big win for the energy conscious shoppers out there.
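As a back-of-the-envelope check, we can combine the 54% performance-per-watt claim with the board power ratings to see what overall performance gain it would imply. This assumes both cards run at their rated board power and that the 54% figure holds at those operating points, neither of which AMD confirmed, so treat it as a rough sketch only.

```python
def implied_performance_ratio(
    perf_per_watt_gain: float, new_power_w: float, old_power_w: float
) -> float:
    """Performance ratio implied by an efficiency gain and a change in power.

    performance = (performance per watt) x watts, so the ratio of the two
    cards is the efficiency ratio multiplied by the power ratio.
    """
    return perf_per_watt_gain * new_power_w / old_power_w


# ASSUMPTION: the 1.54x perf/W claim applies at each card's rated board power.
vs_6950_xt = implied_performance_ratio(1.54, new_power_w=355, old_power_w=335)

print(f"Implied 7900 XTX performance vs 6950 XT: {vs_6950_xt:.2f}x")
```

Under those assumptions the implied uplift lands at a little over 1.6x.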

Another big question mark around RDNA3 is ray tracing performance, and AMD is claiming their new architecture does feature enhanced ray tracing capabilities. This includes 50% more ray tracing performance per compute unit thanks to features like ray box sorting and traversal, plus 1.5x more rays in flight.

There are also new dedicated AI accelerators, two per compute unit, delivering up to 2.7x the performance, which is a vague claim as it's not at all clear what this refers to. AMD is also claiming new AI instruction support, but again there were no details on that.

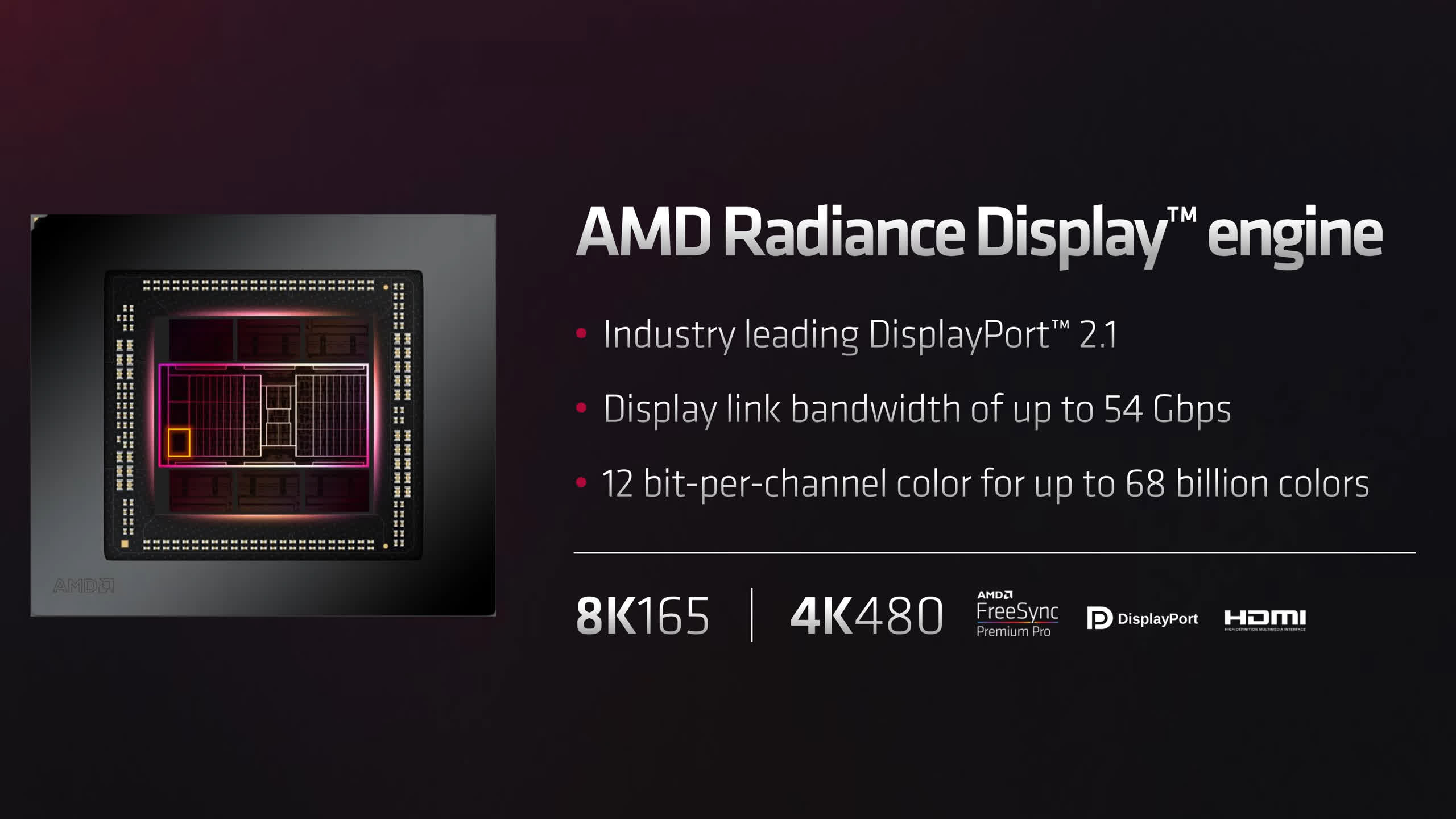

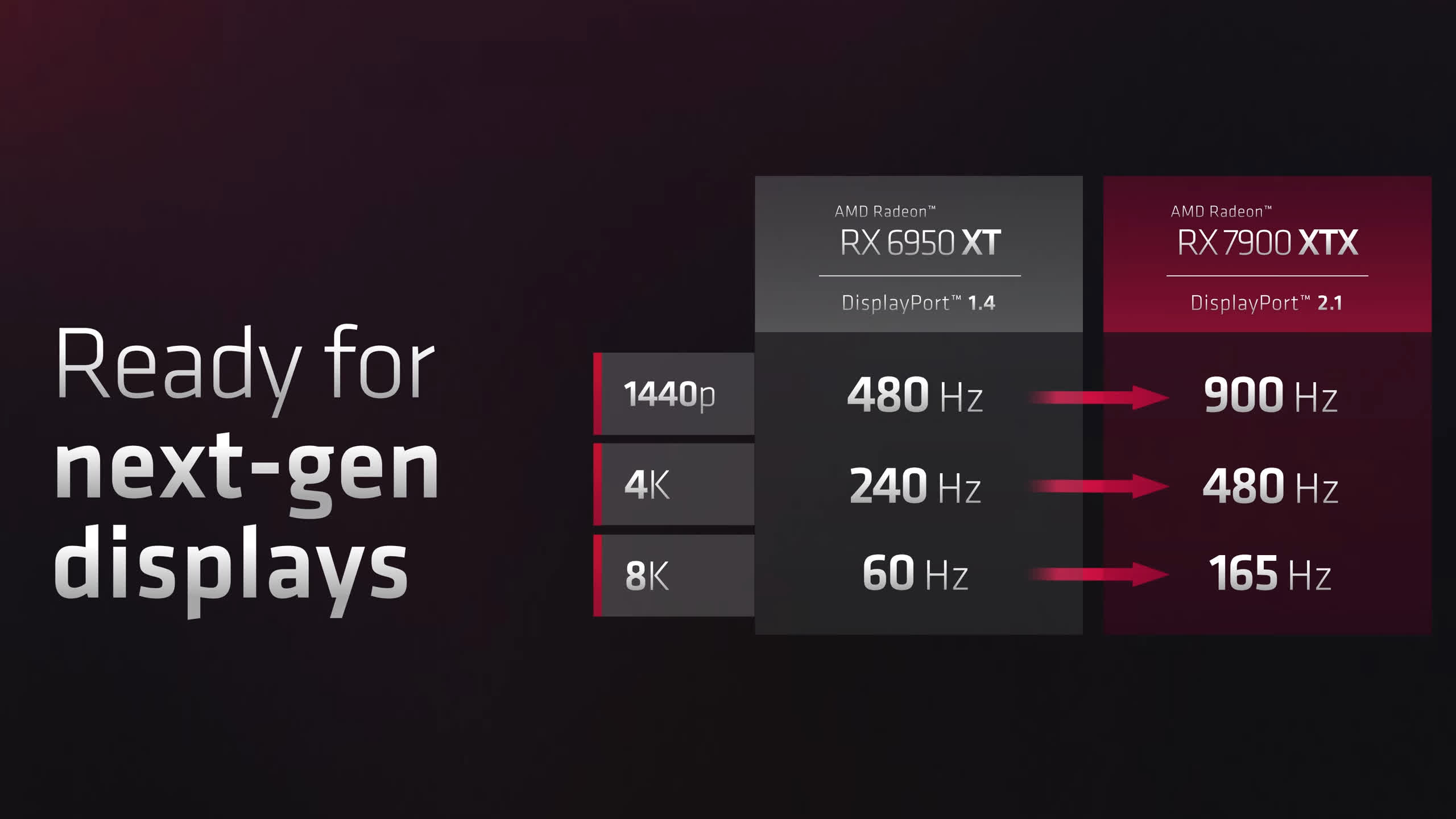

RDNA3 also supports the latest display technologies including DisplayPort 2.1 with a link bandwidth of up to 54 Gbps – not the full 80 Gbps that DisplayPort 2 can provide, but a huge bandwidth upgrade over DP 1.4.

This allows for future display types such as 8K 165Hz and 4K at a whopping 480Hz, though that's forward-looking stuff for now. AMD is claiming the first DisplayPort 2.1 displays will be available in early 2023, but we'll have to wait and see whether these displays actually require DP 2.1 to work at their maximum capabilities.
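For a sense of scale, here is a rough sketch of the uncompressed pixel data rate for those display modes. It assumes 10-bit RGB (30 bits per pixel), ignores blanking intervals and link encoding overhead, and compares against the raw 54 Gbps link figure, so real requirements would differ somewhat.

```python
LINK_GBPS = 54.0  # raw DisplayPort 2.1 link bandwidth quoted for RDNA3


def uncompressed_gbps(
    width: int, height: int, refresh_hz: int, bits_per_pixel: int = 30
) -> float:
    """Raw pixel data rate in Gbps (ASSUMPTION: 10-bit RGB, no blanking)."""
    return width * height * refresh_hz * bits_per_pixel / 1e9


modes = {
    "8K 165Hz": (7680, 4320, 165),
    "4K 480Hz": (3840, 2160, 480),
}

for name, (w, h, hz) in modes.items():
    rate = uncompressed_gbps(w, h, hz)
    print(f"{name}: {rate:.1f} Gbps uncompressed, {rate / LINK_GBPS:.1f}x the link rate")
```

Both modes come out well above 54 Gbps when uncompressed, which suggests they would lean on some form of compression or chroma subsampling to fit over the link.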

As for hardware encoding and decoding we get AV1 encoding and decoding at up to 8K 60 FPS and simultaneous decoding and encoding of other codecs. AMD is claiming a "1.8x engine frequency" too, which again could probably do with further elaboration, but it's good to see substantial upgrades to their media block and hopefully we'll see performance equivalent to Nvidia's Ada Lovelace. It certainly has the capabilities with both architectures supporting AV1 encoding.

Other architectural improvements include decoupled clocks, so that the shader and front end clocks can be different, and a huge uplift in infinity cache bandwidth rising up to 5.3 TB/s this generation.

Obviously the big point of interest is how these new RDNA3 GPUs will perform, so let's explore AMD's claims via their first-party benchmarks. These are provided by AMD so you should take them with a grain of salt, but it's what they are claiming these products will do, so it should at least show us a best-case scenario.

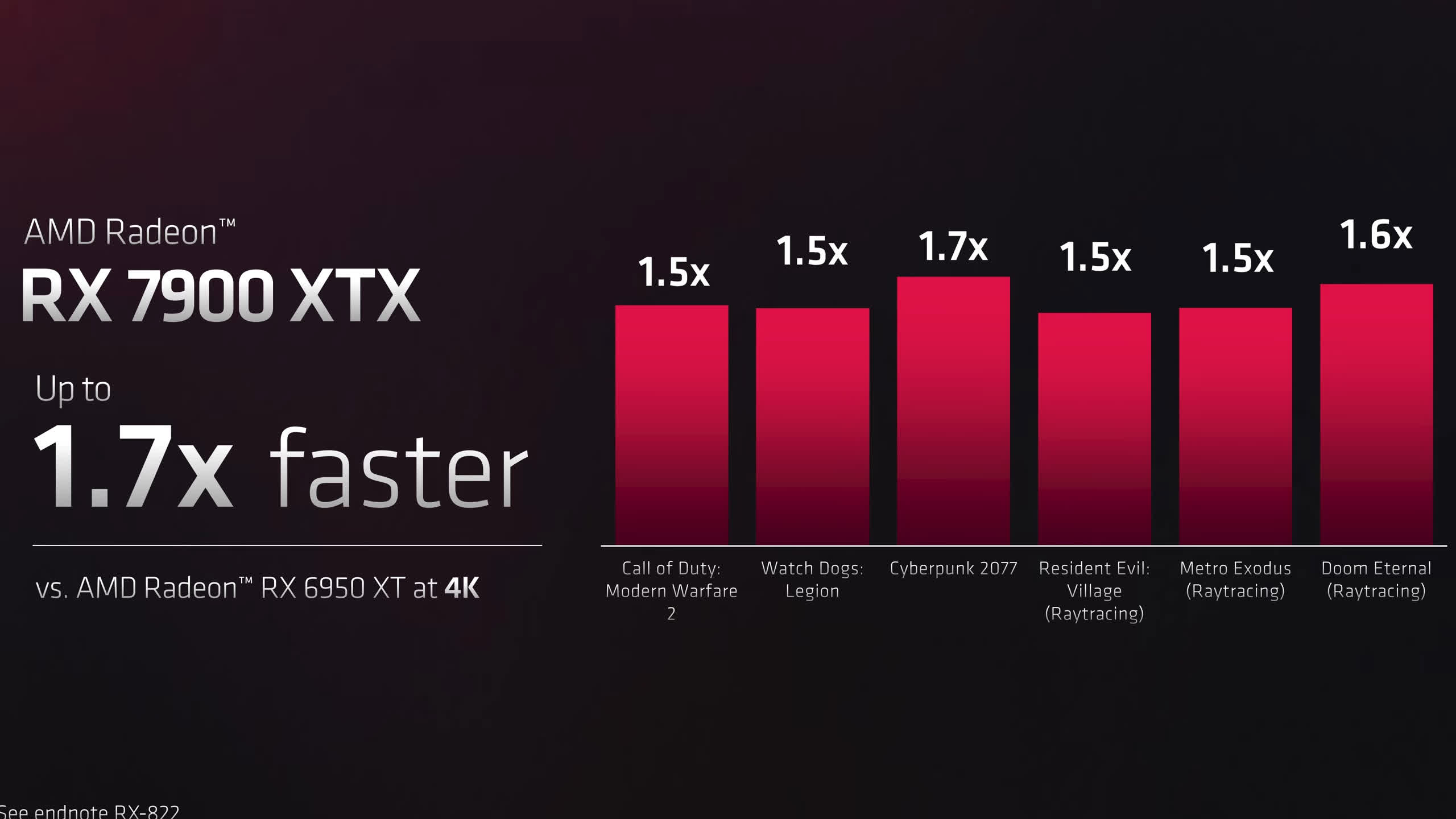

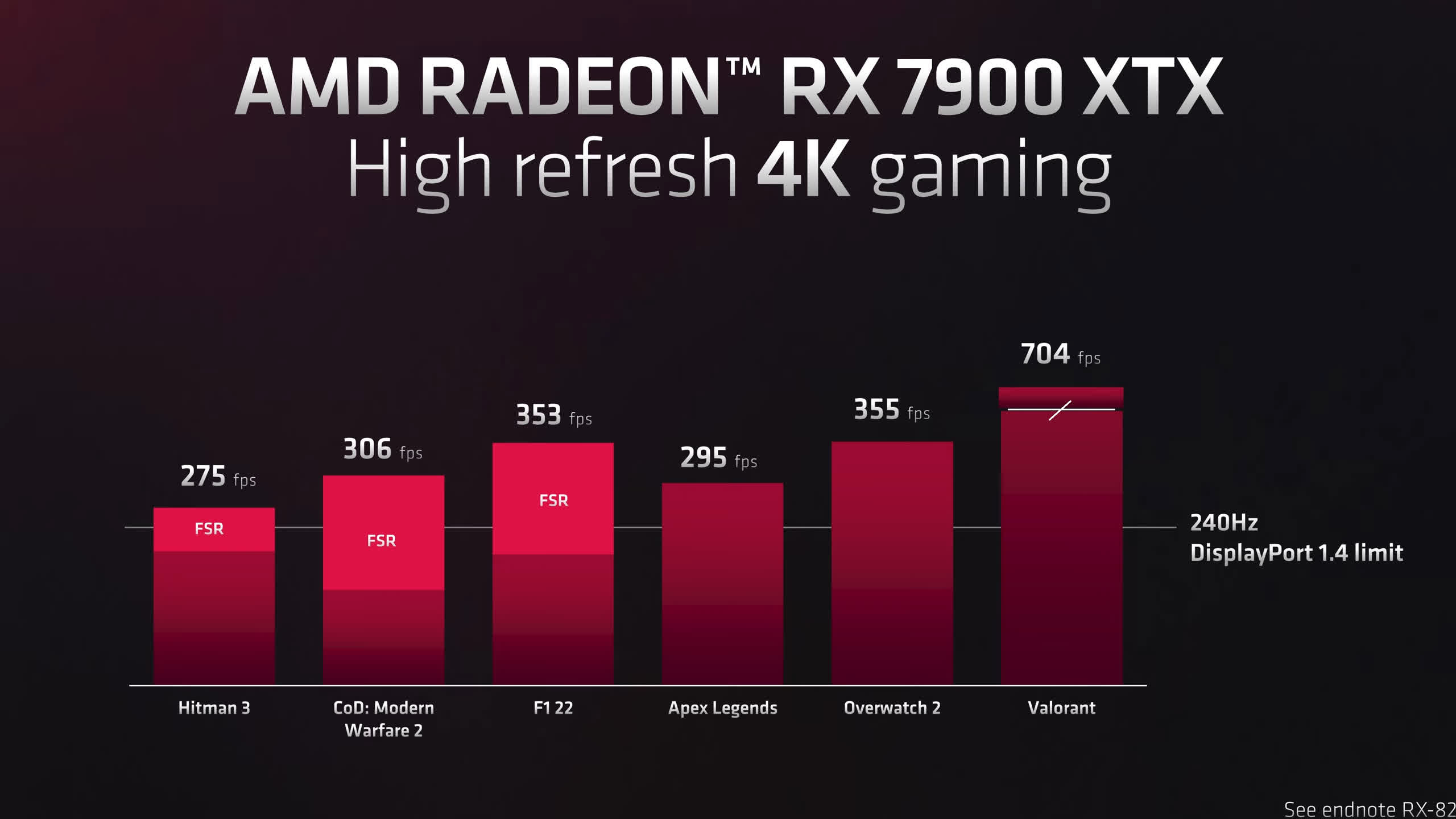

All of AMD's performance figures were comparisons to existing AMD graphics hardware. The biggest claim made was up to a 1.7x performance improvement for the 7900 XTX compared to the Radeon RX 6950 XT at 4K, though the average performance gain across the titles shown appeared to be a bit lower than this.

That's a strong result compared to AMD's own hardware, given the 6950 XT launched at an MSRP of $1,100. These days, though, AMD's best RDNA2 GPU can be found for more like $790, with the 6900 XT sitting at just $660. The 7900 XTX still looks to be reasonable value if it can offer 1.5x to 1.7x the performance of the 6950 XT while being priced around 50% higher than the 6900 XT.

We guess it depends on where the final figures lie. Like Nvidia, AMD appears to be providing a large FPS-per-dollar gain at MSRP pricing, but less so when looking at current GPU pricing.
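The gap between MSRP and street pricing is easy to see with a quick calculation. This sketch uses AMD's claimed 1.5x to 1.7x range over the 6950 XT and the prices quoted above; the function name is our own, and the results are only as good as AMD's first-party claims.

```python
def value_gain(perf_ratio: float, new_price: float, old_price: float) -> float:
    """Performance-per-dollar of the new card relative to the old one."""
    return perf_ratio * old_price / new_price


RX_7900_XTX_PRICE = 999
RX_6950_XT_PRICES = {"launch MSRP": 1100, "current street price": 790}

# AMD's claimed performance range over the 6950 XT (first-party numbers)
for perf_ratio in (1.5, 1.7):
    for label, old_price in RX_6950_XT_PRICES.items():
        gain = value_gain(perf_ratio, RX_7900_XTX_PRICE, old_price)
        print(f"{perf_ratio}x performance vs 6950 XT at {label}: "
              f"{gain:.2f}x the FPS per dollar")
```

Against launch MSRP the value gain is large in either scenario, but against today's discounted 6950 XT it shrinks considerably, which is the same pattern we saw with Nvidia's launch.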

There are some concerning aspects though. First is ray tracing performance. AMD is only claiming a 1.5 to 1.6x improvement in ray tracing titles such as Metro Exodus and Doom Eternal. This likely won't be anywhere near enough ray tracing performance to match the RTX 4090 at resolutions such as 4K, where often the 4090 is 2x to 3x faster than the 6950 XT.

This may only be enough to get the 7900 XTX into the ballpark of the RTX 3090 Ti, which isn't a bad result, but it seems the gap between rasterization and ray tracing performance with RDNA3 will remain.

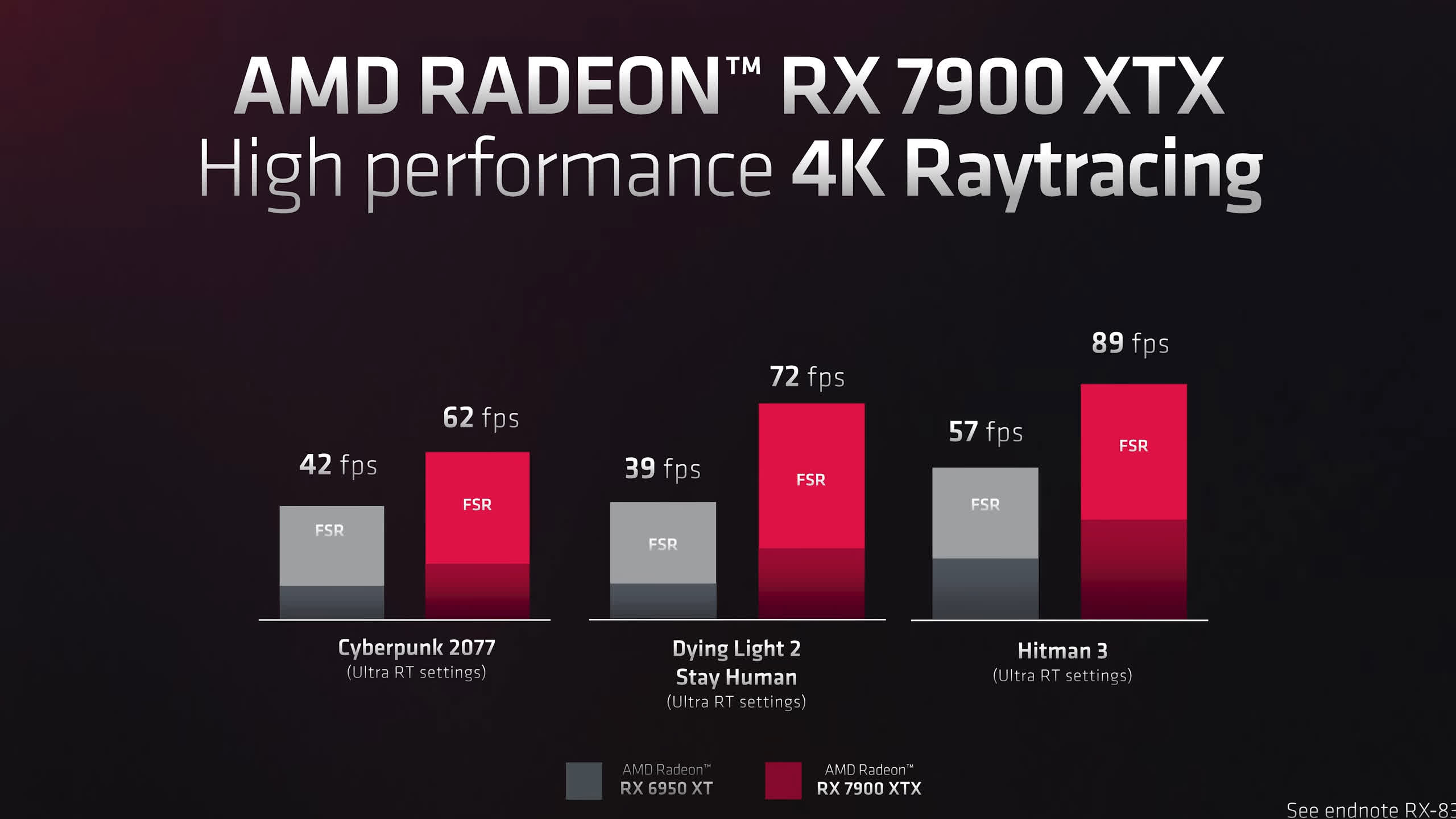

AMD also showed further ray tracing numbers confirming this sort of performance gain is to be expected, although these numbers also use FSR technology for the final FPS figures. The best reported result is an 84% improvement to Dying Light 2 ultra ray tracing, but Cyberpunk 2077 only sees a 48% gain over the 6950 XT. AMD is claiming high performance 4K ray tracing from these numbers, but it seems that's with a heavy reliance on FSR tech.

It's also disappointing to see AMD provide zero performance numbers relative to Nvidia GPUs such as the RTX 4090. A 1.7x performance gain over the 6950 XT would deliver similar performance to the RTX 4090 at 4K across non-ray traced titles, but a 1.5x or 1.6x gain would see it fall short.

With that said, value looks significantly better than the RTX 4090 for rasterization, given the 4090 is priced at $1,600, whereas the 7900 XTX comes in at $1,000. Even if the 7900 XTX was only 1.5x faster than the 6950 XT, it would deliver much better value than the RTX 4090.
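Here is that comparison worked through for the pessimistic case. It leans on the estimate above that the RTX 4090 sits around 1.7x the 6950 XT in 4K rasterization, which is an approximation rather than a measured result, and it uses the rounded $1,000 and $1,600 price points.

```python
# Performance is expressed relative to the 6950 XT (= 1.0) in 4K rasterization.
# ASSUMPTION: the RTX 4090 is roughly 1.7x the 6950 XT, per the estimate above.
RTX_4090 = {"perf": 1.7, "price": 1600}
RX_7900_XTX_WORST_CASE = {"perf": 1.5, "price": 1000}


def perf_per_dollar(card: dict) -> float:
    return card["perf"] / card["price"]


advantage = perf_per_dollar(RX_7900_XTX_WORST_CASE) / perf_per_dollar(RTX_4090)
print(f"7900 XTX value advantage at 1.5x: {advantage:.2f}x")

# How much faster than the 7900 XTX the 4090 would need to be to match its value
break_even = RTX_4090["price"] / RX_7900_XTX_WORST_CASE["price"]
print(f"4090 needs to be {break_even:.1f}x as fast to break even on value")
```

Even in the 1.5x scenario the 7900 XTX comes out roughly 40% ahead on rasterization performance per dollar. None of this accounts for ray tracing, where the picture is likely to look quite different.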

But it doesn't seem like the 7900 XTX is designed to compete with the RTX 4090. Its price tag suggests this is more like an RTX 4080 competitor, or even something more around the now cancelled RTX 4080 12GB. The RTX 4080 is slotting in at $1,200 with Nvidia claiming performance around 25% better than the RTX 3090 Ti.

AMD's claims for the 7900 XTX would see it outpace the RTX 4080 in rasterization at a lower price, though it likely will fall short in ray tracing. We guess this is why AMD felt they could only price the 7900 XTX at $1,000 – ray tracing will be a key battle this generation, and they can't charge a premium price if they don't have better performance in all areas.

We also got no performance numbers for the 7900 XT variant, though the hardware configuration suggests it should be around 85% that of the 7900 XTX. Where value lies for this card is questionable and hinges on how it performs in the end, but with roughly 85% of the hardware at 90% of the price, it's possible that the higher-end XTX model will be better value.

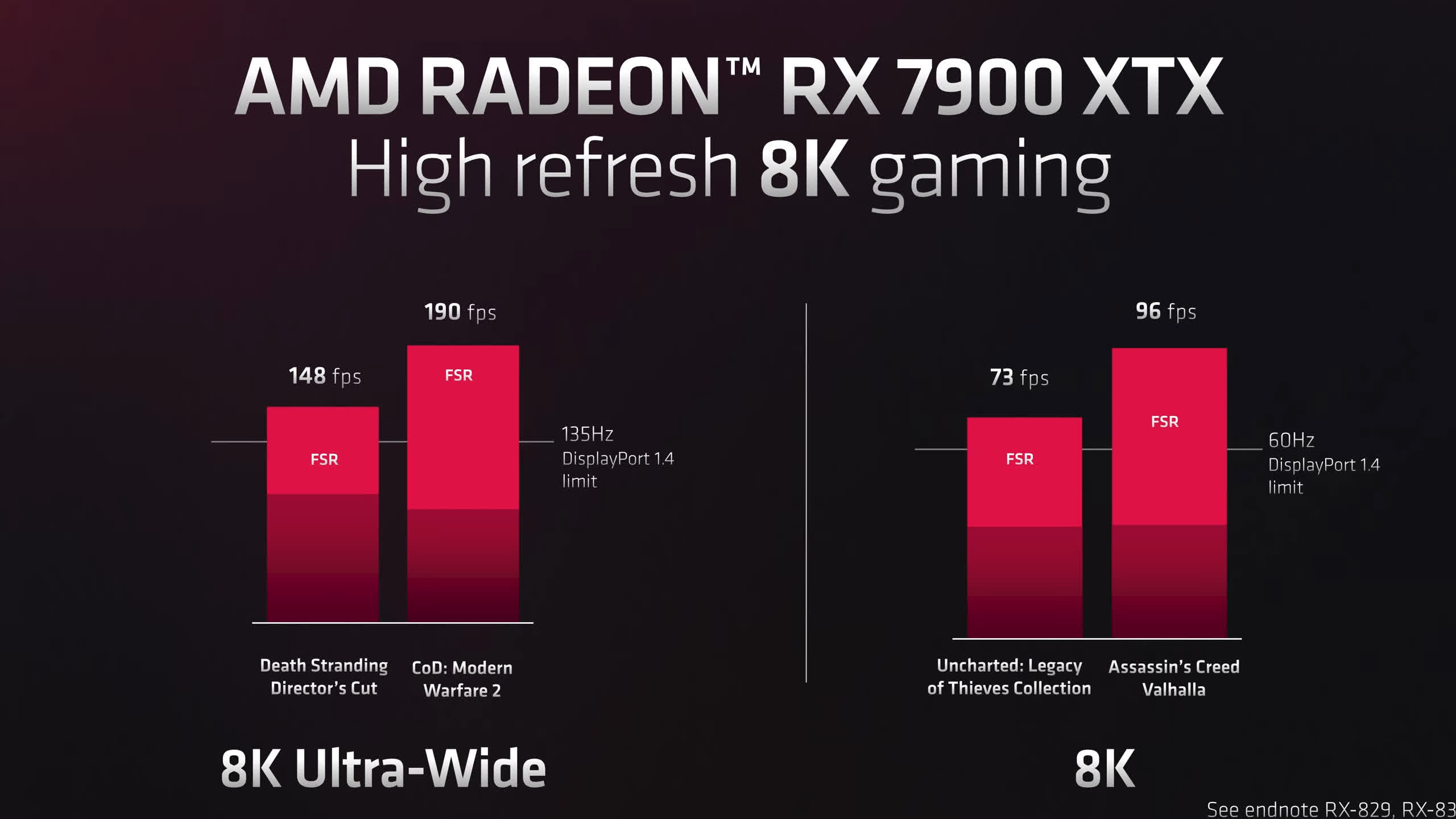

AMD also decided to go down the Nvidia "8K gaming" path now that RDNA3 GPUs utilize DisplayPort 2.1 vs DisplayPort 1.4 on Nvidia's Ada Lovelace. In several games AMD showed their 7900 XTX breaking past the DP 1.4 limit, though again we'll have to wait and see whether this is actually relevant as we'll need new displays for that to matter.

AMD announced several new features at their presentation although details on all of them were pretty light.

We have FSR 3 technology coming in 2023, providing up to 2x more FPS than FSR 2 technology. All we got to see was a single slide, so we truly have no idea whether this is supposed to be a DLSS 3-like frame generation technology or some other approach.

With the new FSR due in 2023, we're keen to see how that shakes out, as AMD has very strong competition to contend with in DLSS and now XeSS on Intel GPUs as well.

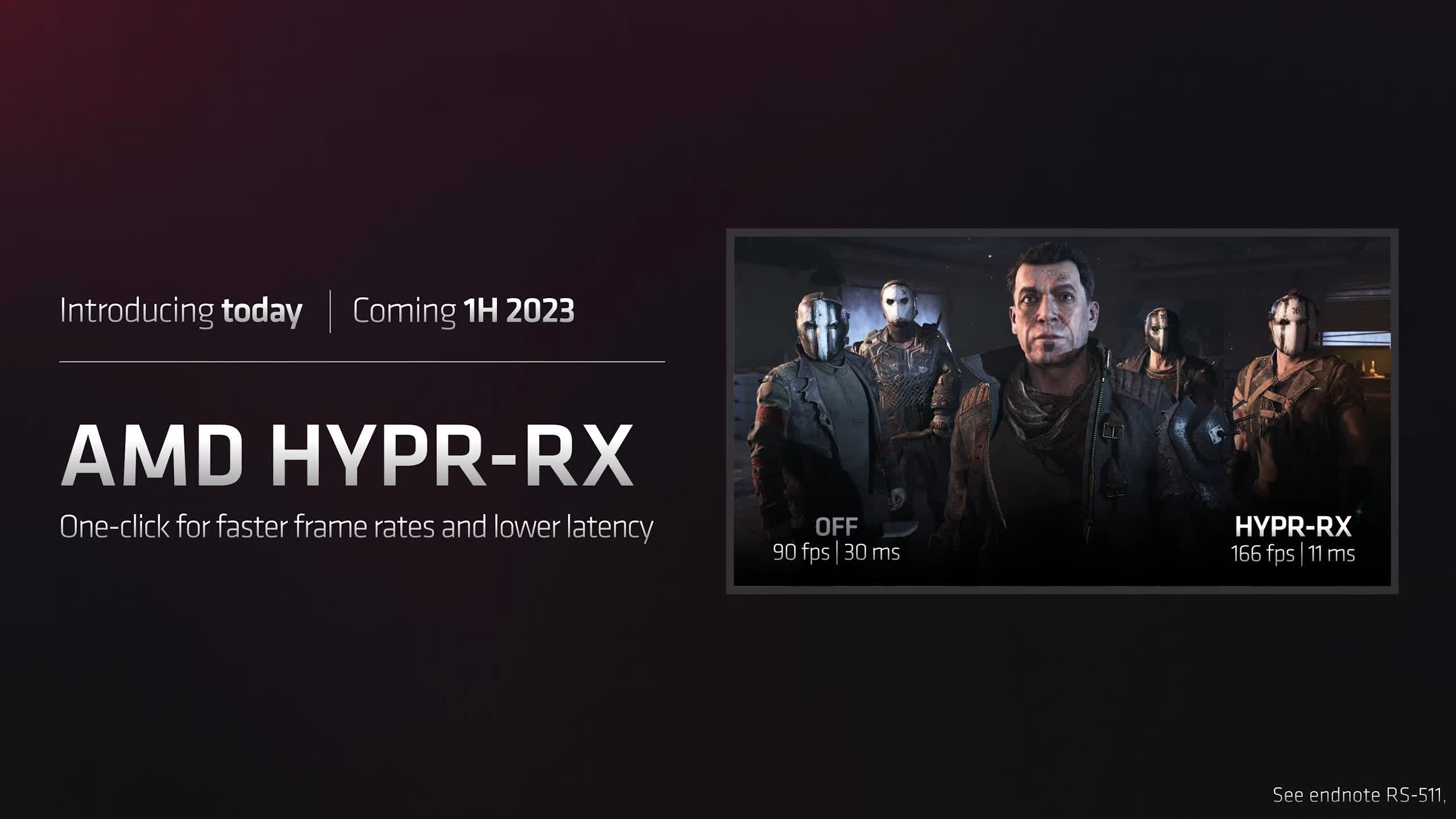

AMD also announced Hyper-RX which appears to be some sort of technology to both increase frame rates and reduce latency – perhaps a combination of FSR to boost performance from lower resolution rendering and a low latency setting similar to Nvidia's Reflex.

This is coming in the first half of 2023 and details were quite light, too. Perhaps AMD showed more in their presentation that just ended, but we opted not to travel to the United States for this one.

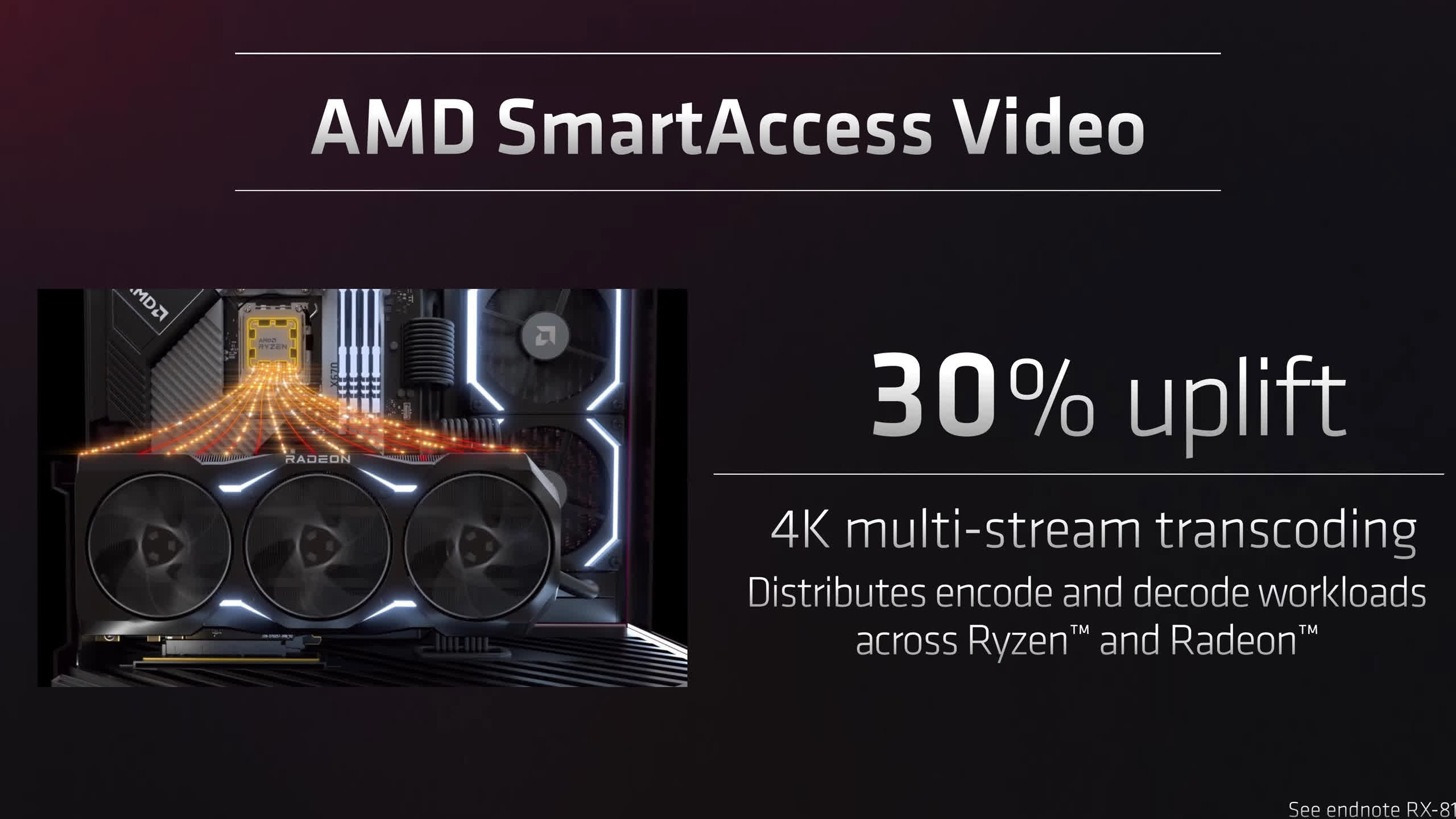

On the video encoding side AMD announced SmartAccess Video, with a 30% uplift in 4K multi-stream transcoding.

It appears to be a solution that will combine the video encoding and decoding performance of an AMD Ryzen CPU – now that they have an iGPU that supports these things – with the performance of a Radeon GPU. We assume this will need to be integrated into various workstation and creator type applications for the biggest benefit.

AMD will be producing a reference model for both RDNA3 GPUs, which looks something like the picture below. It's a slightly larger overall design than the Radeon 6900 design but retains its triple-fan layout. The front shroud is more angular and it's 11mm longer than the previous design, but it should occupy a similar number of slots. It doesn't appear as large as the RTX 4090 design, though of course we don't have a 7900 XTX in-house just yet to compare, so that's just a guess based on what has been shown.

In a relief to a lot of buyers, AMD has also decided not to use the new 12VHPWR connector because they simply don't need 600W of power input. Instead the reference cards go with the standard two 8-pin PCIe connectors, no need for adapters, just something that will work in most setups without a heightened risk of melting. I'm sure some AIB models will opt for a third 8-pin to increase overclocking potential, but right now AMD's design doesn't call for more power than can be supplied through two 8-pins and PCIe slot power. The expectation is that AIBs won't use the 12VHPWR connector either.
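The power budget math behind that connector choice is straightforward. This sketch uses the standard PCIe limits of 150W per 8-pin connector and 75W from the slot; the function name is our own.

```python
PCIE_SLOT_W = 75    # power available from the PCIe x16 slot
EIGHT_PIN_W = 150   # power available per 8-pin PCIe connector
TOTAL_BOARD_POWER_W = 355  # 7900 XTX rating from AMD


def board_power_budget(eight_pin_connectors: int) -> int:
    """Maximum in-spec power for a card with the given number of 8-pins."""
    return PCIE_SLOT_W + eight_pin_connectors * EIGHT_PIN_W


reference_budget = board_power_budget(2)
aib_budget = board_power_budget(3)

print(f"Two 8-pins + slot:   {reference_budget}W "
      f"({reference_budget - TOTAL_BOARD_POWER_W}W headroom over 355W)")
print(f"Three 8-pins + slot: {aib_budget}W "
      f"({aib_budget - TOTAL_BOARD_POWER_W}W headroom over 355W)")
```

The reference design fits within spec with only a little room to spare, which is why a third 8-pin makes sense for AIB models chasing higher overclocks.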

Overall, AMD's RDNA3 presentation was interesting. It's exciting to see chiplet technology come to gaming GPUs for the first time, and from a technical standpoint there are a lot of interesting aspects to the GPUs AMD showed off today, including new hardware blocks like AI accelerators and AV1 encoding, better ray tracing capabilities and wider memory buses.

We think gamers will also be pleased to see AMD being aggressive on pricing and efficiency. The Radeon 7900 series is much cheaper than the RTX 4090 and also cheaper than the RTX 4080, while the top end model is only a 355W card, again much less than the 4090.

AMD made a lot of comments about this GPU being more efficient, and the reference design is physically smaller than the RTX 4090 by the looks of things and uses just two 8-pin power connectors. We've been hearing a lot of concerns from you guys over efficiency and power connectors in the last few weeks, with some calling the 4090's 450W power rating ridiculous. Well, AMD is producing a GPU that seems to be what you want, so we'll have to see whether this actually ends up being a selling point, or whether raw performance wins out.

The performance claims are where we still have a lot of question marks over the 7900 XTX and 7900 XT. It's great these cards are priced at or below $1,000, and AMD is claiming a large performance gain over the 6900 XT and 6950 XT at a similar MSRP.

But how they fare compared to current GPU pricing and Nvidia GPUs is up in the air. We were disappointed with the ray tracing performance claims, and it doesn't seem like AMD has been able to deliver the huge uplift that would be required to match Nvidia's Ada Lovelace. But this could be a moot point if AMD's GPU ends up significantly better value. After all, it's cheaper than both the 4090 and 4080, and whether you care about ray tracing will be a big factor there.

All in all, this is shaping up to be a very competitive generation with both Nvidia and AMD having battlegrounds on multiple fronts, including pricing, rasterization performance, ray tracing performance, power consumption, efficiency and features.

Very keen to see how these GPUs fare in our testing come December 13, which is still over a month away but hey, it'll be here before Christmas.

https://www.techspot.com/news/96554-amd-radeon-rx-7900-xtx-7900-xt-launched.html