TL;DR: Geoffrey Hinton, one of the most respected names in the artificial intelligence community, has left his job at Google to speak out about the dangers that AI poses now and in the future.

Some call Hinton the Godfather of AI, and for good reason. His work in the field began in the early 1970s, when he was a grad student at the University of Edinburgh and was drawn to the idea of neural networks. Few researchers at the time believed the concept had merit, but as The New York Times notes, Hinton made it his life's work.

In 2012, Hinton, then a professor, and two of his students created a breakthrough neural network that could analyze images and identify common objects in them. The following year, Google acquired Hinton's company, DNNresearch, for $44 million and brought him on to continue his work.

Last year, however, Hinton's view of AI and its capabilities changed. He came to believe that increasingly capable systems, while still not on par with human thinking in some areas, had already surpassed the brain's abilities in others.

"Maybe what is going on in these systems is actually a lot better than what is going on in the brain," Hinton told The New York Times. As this trend continues and companies take advantage of ever more powerful AI systems, he believes, those systems are becoming increasingly dangerous.

"Look at how it was five years ago and how it is now," Hinton said of the state of AI. "Take the difference and propagate it forwards. That's scary," he added.

Until recently, Hinton felt Google was a responsible steward of the technology, holding back from releasing anything that could cause harm. But Google and other tech giants are now locked in an AI race that he believes could be impossible to stop.

> In the NYT today, Cade Metz implies that I left Google so that I could criticize Google. Actually, I left so that I could talk about the dangers of AI without considering how this impacts Google. Google has acted very responsibly.
>
> – Geoffrey Hinton (@geoffreyhinton) May 1, 2023

In the short term, Hinton worries that the internet will be flooded with fake photos, videos, and text, leaving people unable to tell what is real anymore. Over the longer term, AI could replace many jobs done by humans, and it could eventually outpace its human creators in other areas, too.

"The idea that this stuff could actually get smarter than people – a few people believed that," Hinton said. "But most people thought it was way off. And I thought it was way off. I thought it was 30 to 50 years or even longer away. Obviously, I no longer think that."

Hinton told the publication that part of him now regrets his life's work. "I console myself with the normal excuse: If I hadn't done it, somebody else would have," he said.

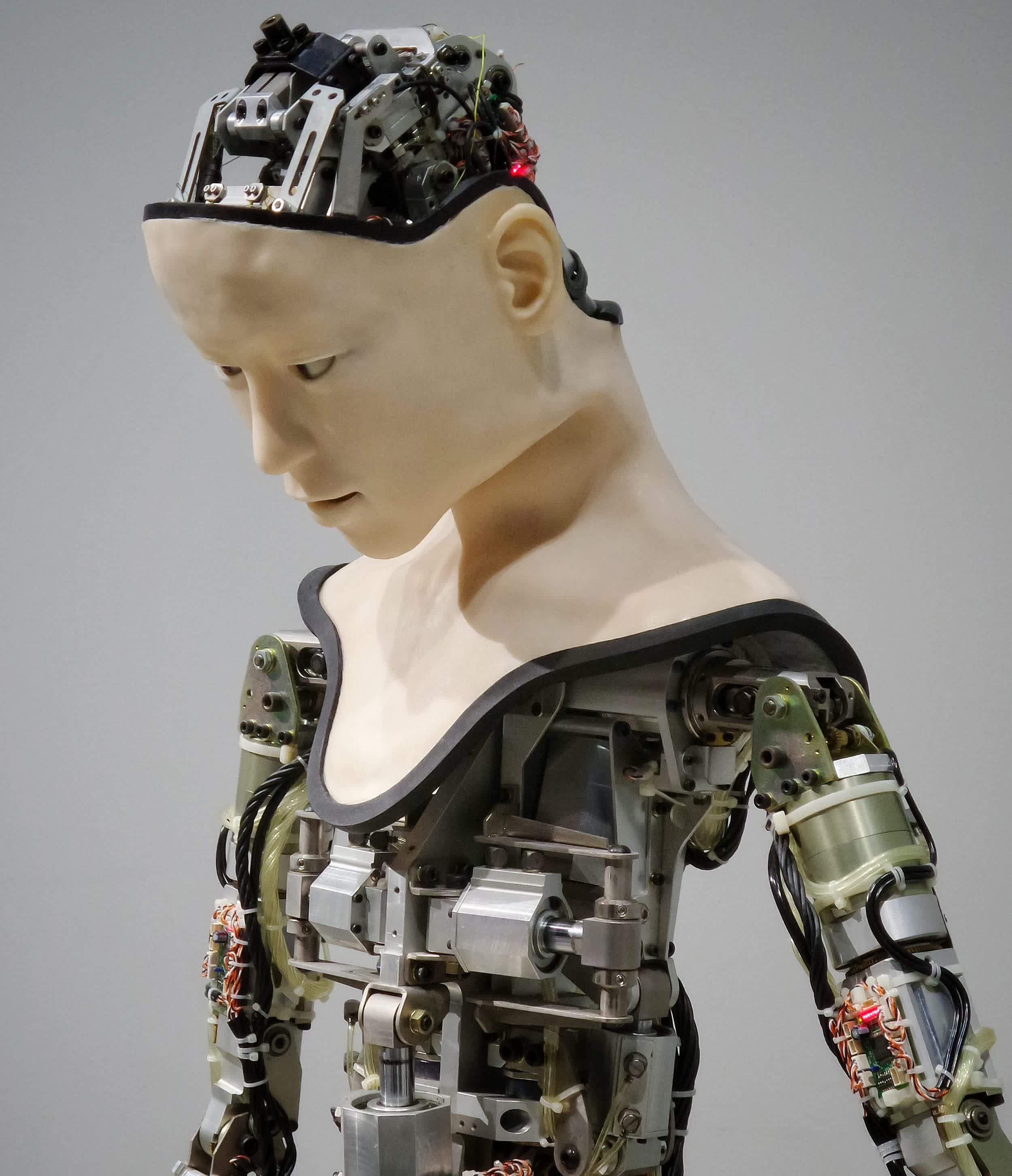

Image credit: Hinton by Noah Berger / AP, Robot by Possessed Photography