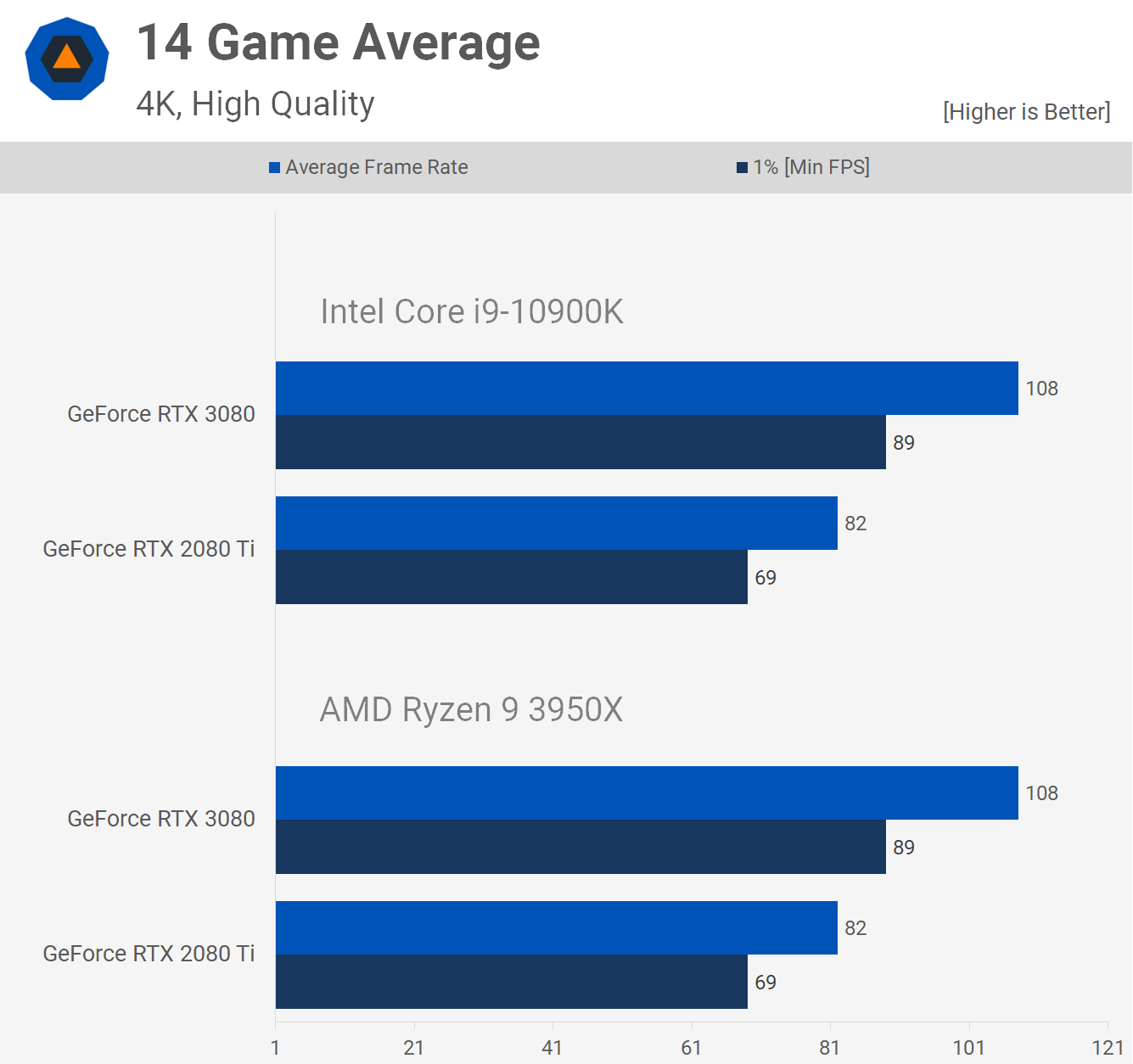

The fact that the 3080 gets 5-10% more FPS on an Intel system means one thing: either the GPU isn't being utilized to 100% on AMD, or something in the drivers/software is better optimized for Intel. Also, there are quite a few competitive players who want high FPS, and where should they get their information? The argument doesn't hold up... all the self-respecting tech outlets are still using an Intel rig because it is simply better for stressing the highest-end GPUs. It doesn't have anything to do with fanboyism, prices or anything else.

@Lew Zealand: In a GPU review I want to see how many FPS I get in modern titles at all of today's common resolutions, namely 1080p, 1440p and 4K. To see how many FPS the 3080 can push, you need the best CPU there is, which is the 10900K. It is pretty logical, and this is why most other reviewers use the 10900K. As for AMD, we know it is usually 5-10% behind Intel, sometimes closer, sometimes farther, but in a GPU review you remove any bottleneck (CPU/RAM/whatever) to give the GPU the best possible platform. Get it?

Peace!

