The big picture: Intel recently invited two leading overclockers to its headquarters to discuss achieving the world's first 9 GHz CPU overclock. The duo of Pieter-Jan Plaisier (SkatterBencher) and Jon Sandström (ElmorLabs) also looked back at CPU frequency overclocking milestones along the way, highlighting an obvious wall that was hit roughly 17 years ago.

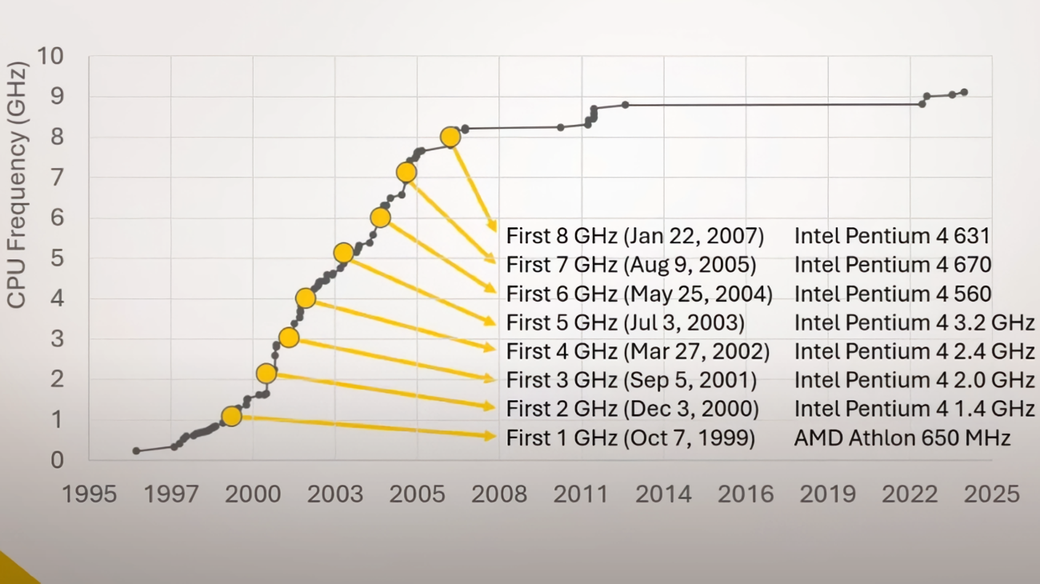

Record-high CPU overclocks feel like a pretty common occurrence, but according to the data, that is far from the case. A chart accompanying the overclockers' presentation highlights the first chip to be pushed to 1 GHz – AMD's legendary 650 MHz Athlon – way back in October 1999.

It would take about 14 more months for overclocking enthusiasts to breach the 2 GHz barrier, and just another nine months to crack 3 GHz. The 4 GHz mark was crossed in early 2002, and the first 5 GHz overclock followed roughly 16 months later.

With the odds in their favor (and Intel Pentium 4 chips in their motherboards), overclockers did not look back. The world's first 6 GHz overclock came in May 2004, the 7 GHz wall came down in August 2005, and 8 GHz was achieved in early 2007. Then, it all came to a screeching halt.

It wouldn't be until the end of 2022 – more than 15 years later – that the 9 GHz barrier finally came down. The current world record, 9,117 MHz set by Elmor with a Core i9-14900KS, could very well be the new ceiling for the foreseeable future. And that elusive 10 GHz mark? Well, good luck with that.

That's not to say that CPUs have not improved since the mid-2000s. Today's chips are way faster and more efficient than CPUs of yesteryear, and base clock speeds on air cooling are significantly higher than what you would get with a retail chip a decade and a half ago. But in terms of sheer clock speed on a single core with liquid nitrogen or liquid helium cooling, it seems the ceiling has not budged much.

CPU overclockers hit a ceiling, and it happened about 17 years ago