The microprocessor made personal computing possible by opening the door to more affordable machines with a smaller footprint. The 1970s supplied the hardware base, the 1980s introduced economies of scale, and the 1990s expanded the range of devices and made user interfaces more accessible.

The new millennium brought a closer relationship between people and computers. Increasingly portable devices became the conduit through which people fulfilled the basic human need to connect. It's no surprise that, as connectivity proliferated, computers transitioned from productivity tool to indispensable companion.

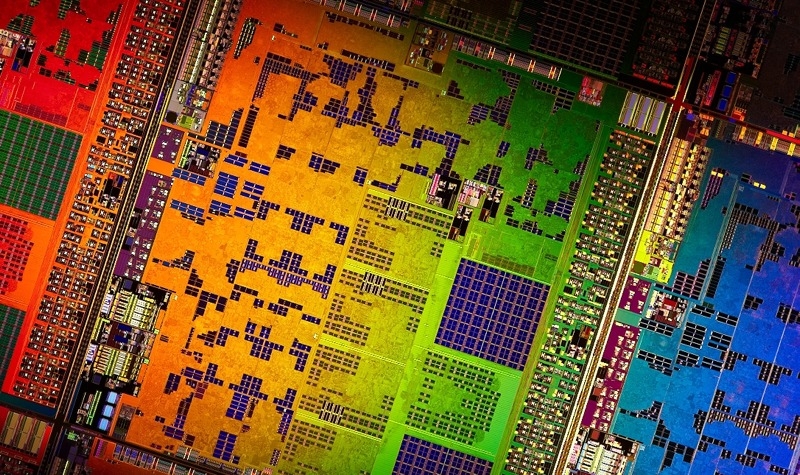

This is the fifth and final installment in the series, where we look at the history of the microprocessor and personal computing, from the invention of the transistor to the modern-day chips powering our connected devices.