What just happened? Intel's Arc B580 graphics card has been a massive hit with both reviewers and users, so much so that we hailed it as the best value GPU on the market in our review. However, new reports indicate that the card's impressive performance depends on pairing it with a newer CPU.

Update (Jan 11, 2025): A few weeks after we published our day-one review of Intel's Arc B580, reports began to surface indicating that the graphics card's impressive performance was largely dependent on newer CPUs. In response, we have published a new review featuring extensive benchmark data that delves into the B580's overhead issues, which cause performance to degrade in CPU-limited gaming scenarios. This updated analysis includes not just the Intel Arc B580, but also the RTX 4060, Radeon RX 7600, and Radeon RX 7600 XT. All of these GPUs were retested using the Ryzen 5 5600, allowing us to compare the new results against the original data gathered with the 9800X3D.

The original story follows below:

As discovered by Hardware Canucks, the Arc B580 suffers from severe performance issues when paired with CPUs more than five years old. The problem appears in many games, causing stuttering and low frame rates that did not show up in earlier reviews, which usually test with more modern CPUs.

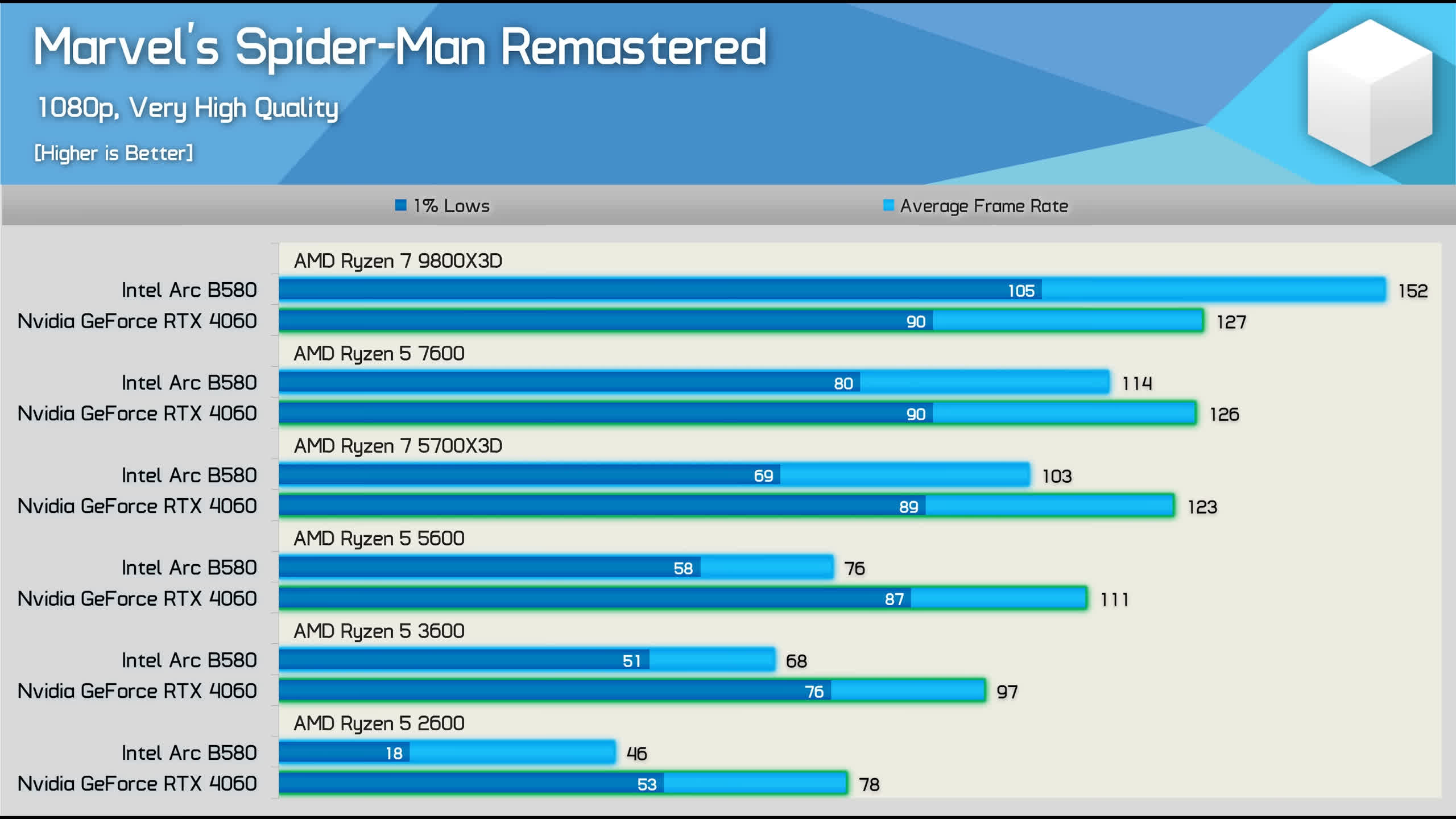

The channel tested the Arc B580 with an Intel Core i5-9600K – an older processor released in 2018 – and found that performance in some games, including Marvel's Spider-Man Remastered and Starfield, was so poor that the games were nearly unplayable.

Unfortunately, the B580's reduced performance is not limited to 9th-gen Intel Core CPUs, as the issue persists with other older processors. In separate testing on Hardware Unboxed, our very own Steve Walton confirmed that the GPU has similar problems when paired with a Ryzen 5 2600.

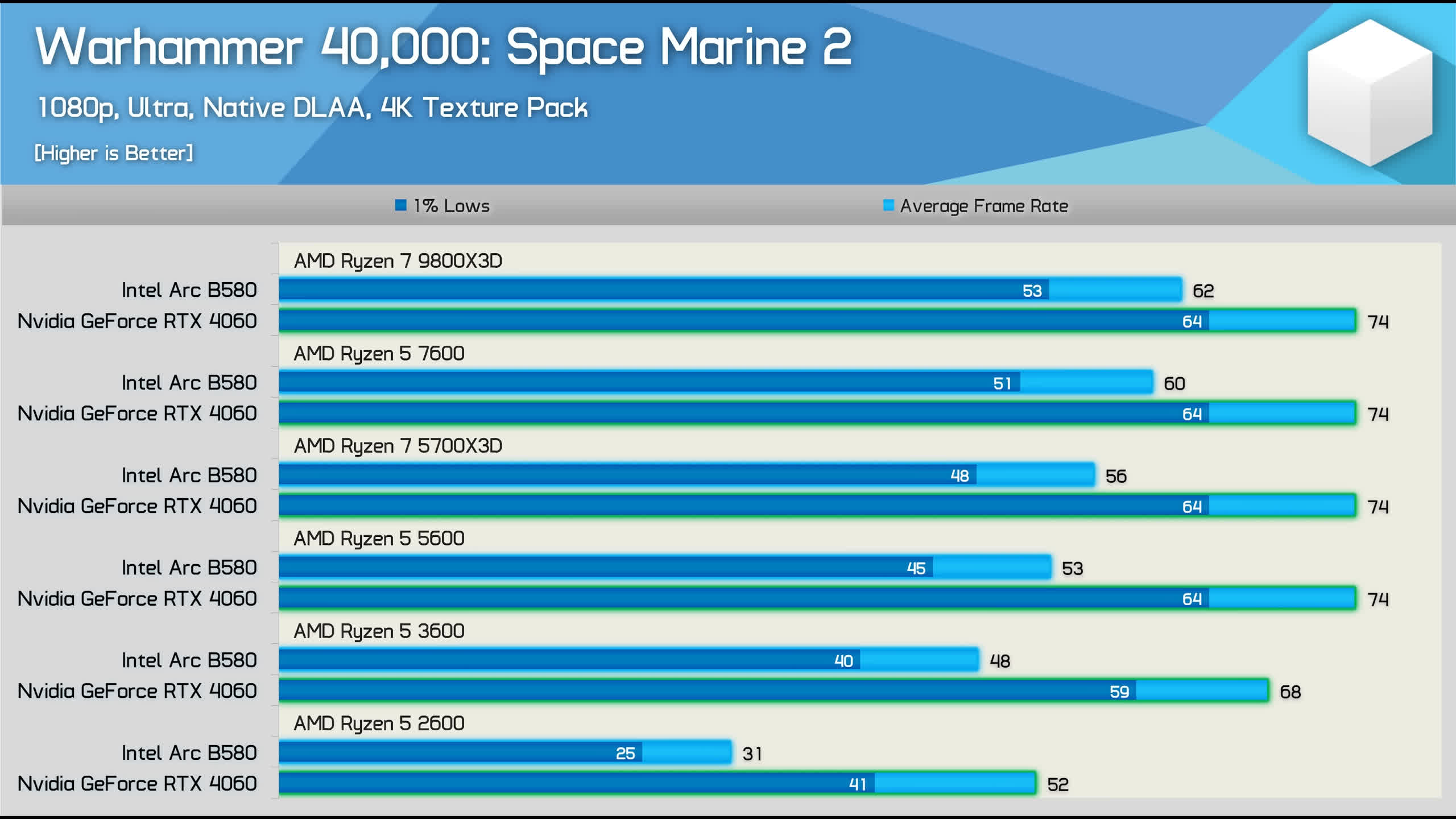

As shown in Hardware Unboxed's testing, the B580 performed much worse than the RTX 4060 in games like Warhammer 40,000: Space Marine 2 when paired with either a Ryzen 7 9800X3D or a Ryzen 5 2600. With the latter, the average frame rate was just 31 FPS, while the 1 percent lows dipped to 25 FPS, making the game almost unplayable.

A similar result was seen in Hogwarts Legacy, where the B580 was around 46 percent slower than the RTX 4060, averaging only 24 FPS. Starfield also yielded poor results for the B580, which was about 45 percent slower than the RTX 4060 when paired with the Ryzen 5 2600.

However, these problems seem limited to a handful of titles. In many other games, the B580's performance is in line with expectations. For instance, in games such as Alan Wake 2, Doom Eternal, Horizon Forbidden West, and even Call of Duty: Black Ops 6, the B580 delivers playable frame rates when paired with the i5-9600K.

It is worth noting that all Arc graphics cards require support for Resizable BAR (ReBAR), which AMD brands as Smart Access Memory (SAM). For this reason, Intel recommends pairing its Alchemist and Battlemage GPUs only with 10th-generation Core CPUs or newer, or with AMD Ryzen 3000 series CPUs or newer. However, the systems tested above had ReBAR backported and enabled, suggesting that the issue lies elsewhere.

It is unclear whether this is an architectural issue or a driver-related problem that can be addressed via a future update. Intel is aware of the issues and is already investigating, so perhaps we can expect an answer sooner rather than later. Needless to say, we also plan to re-review the B580 in the coming days, so stay tuned for more information.

Intel Arc B580 massively underperforms when paired with older CPUs