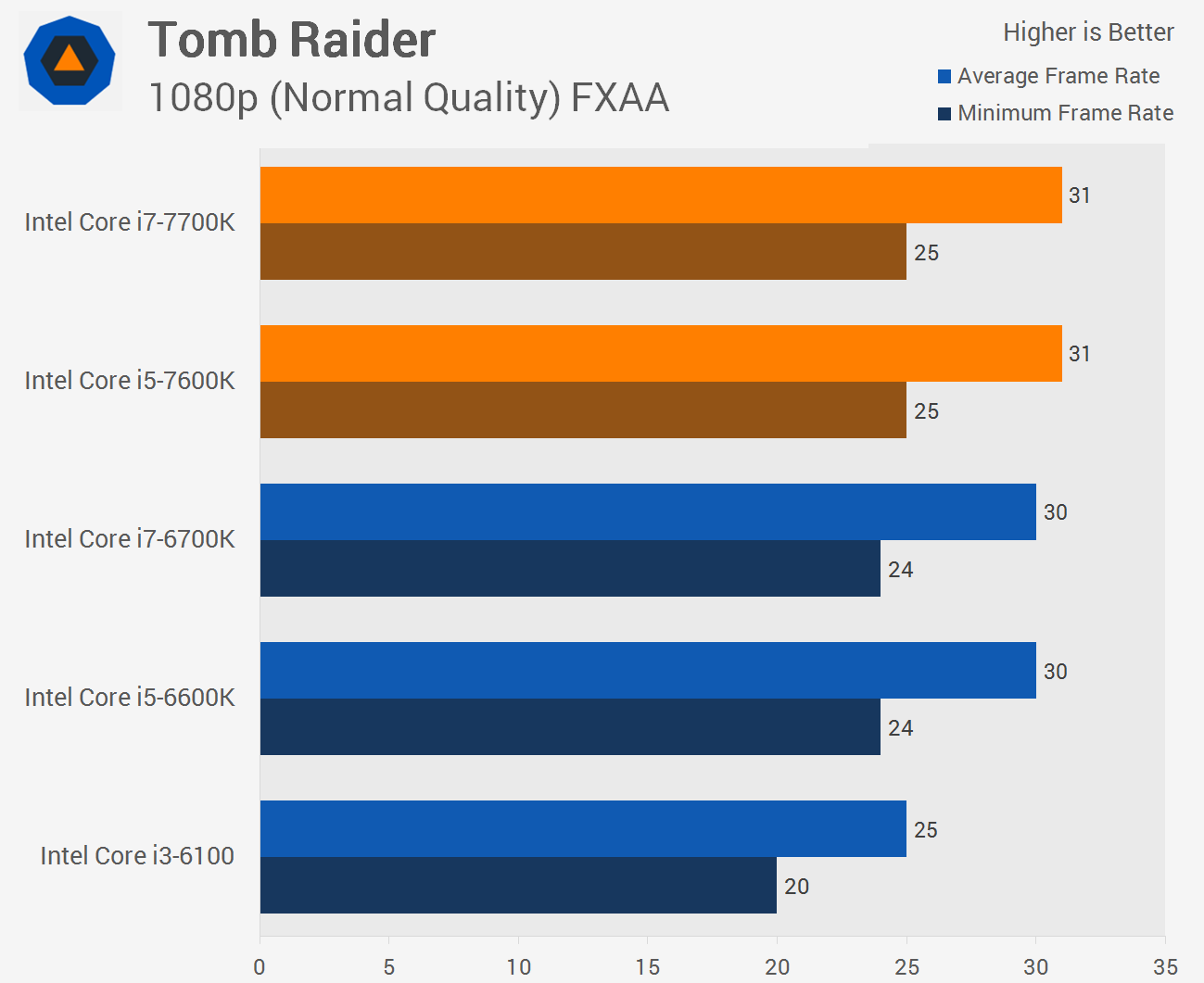

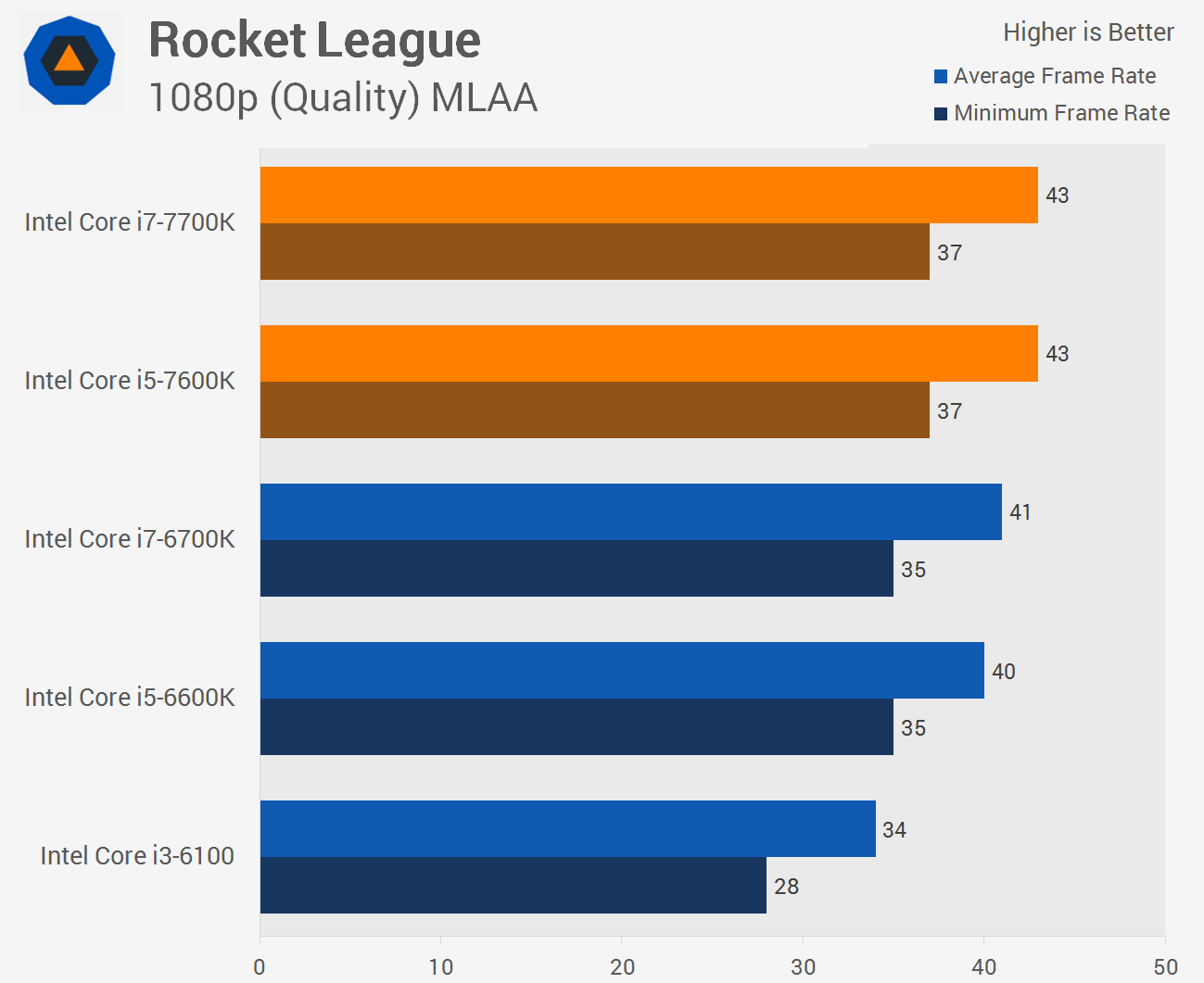

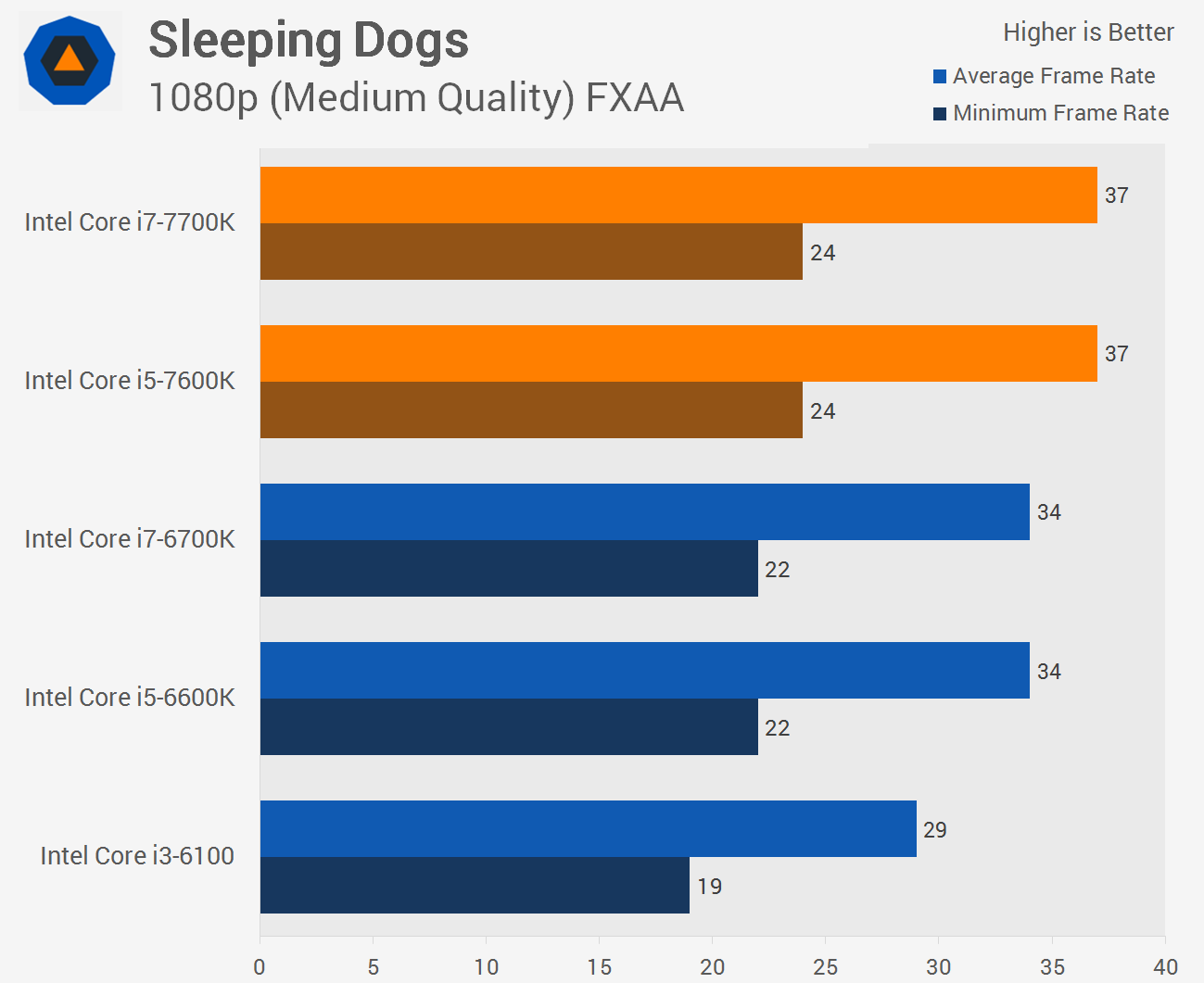

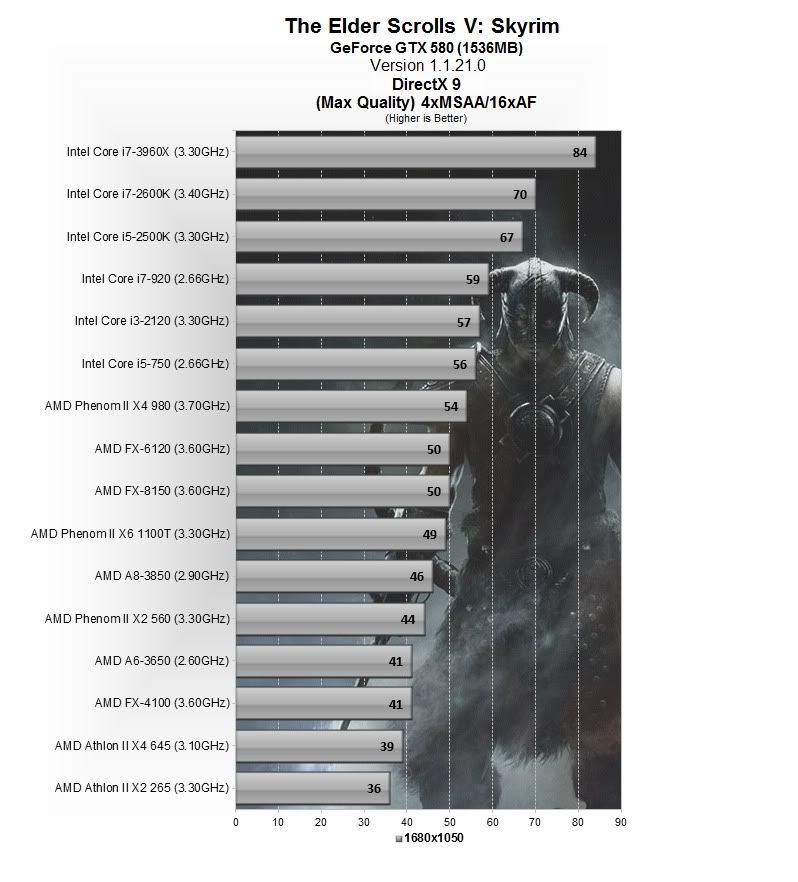

A dual-core CPU keeping up with and matching a quad-core CPU is not doing wonders?

Are you looking at the same thing I am!? The Core i3 is neck and neck with the i5.

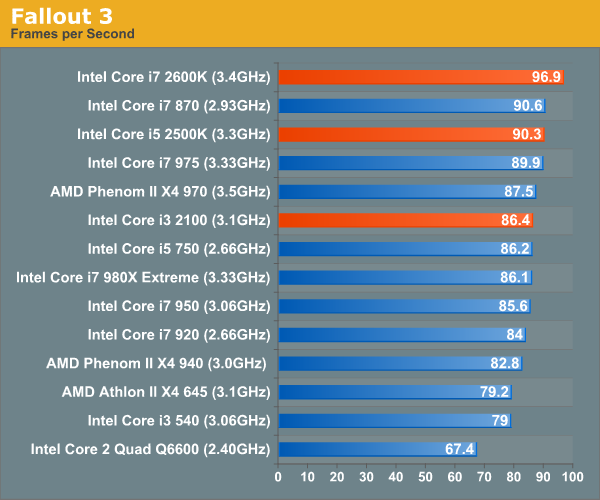

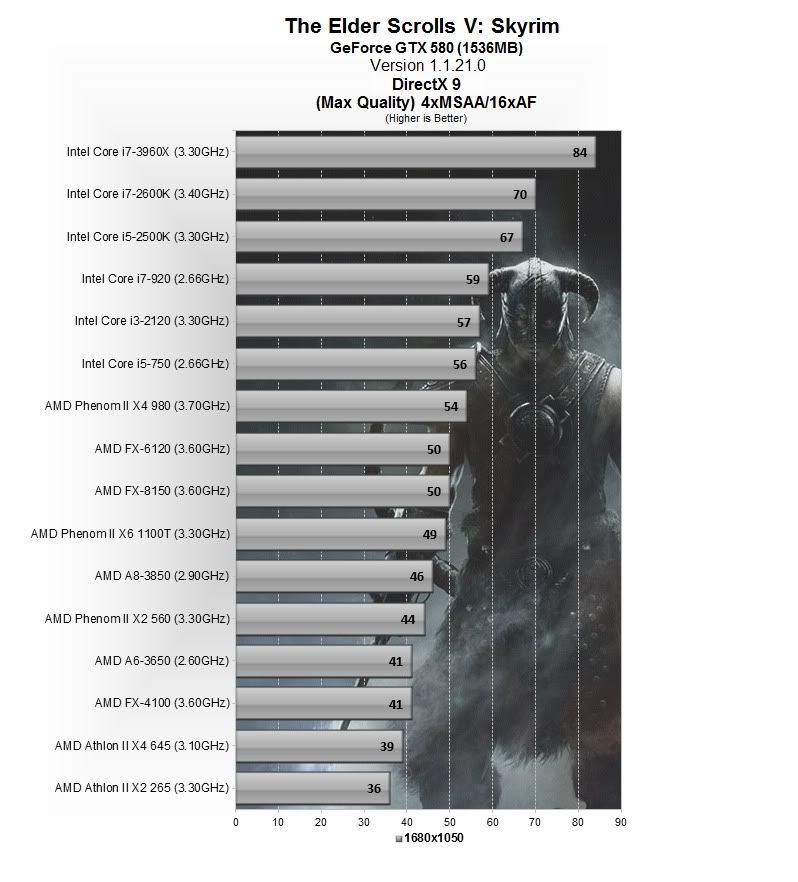

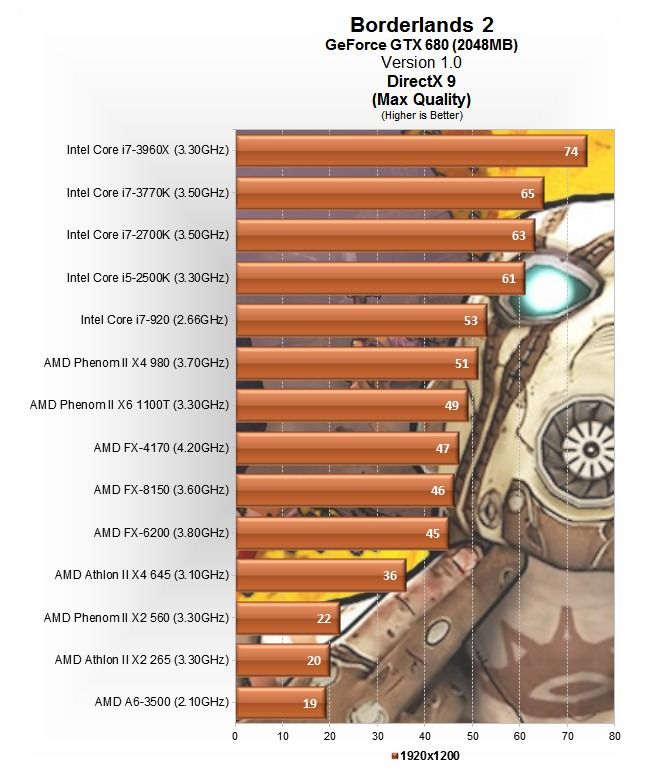

And in the Fallout graph, the i7 870 with a 2.93GHz clock speed is beating the i5 2500K at 3.3GHz.

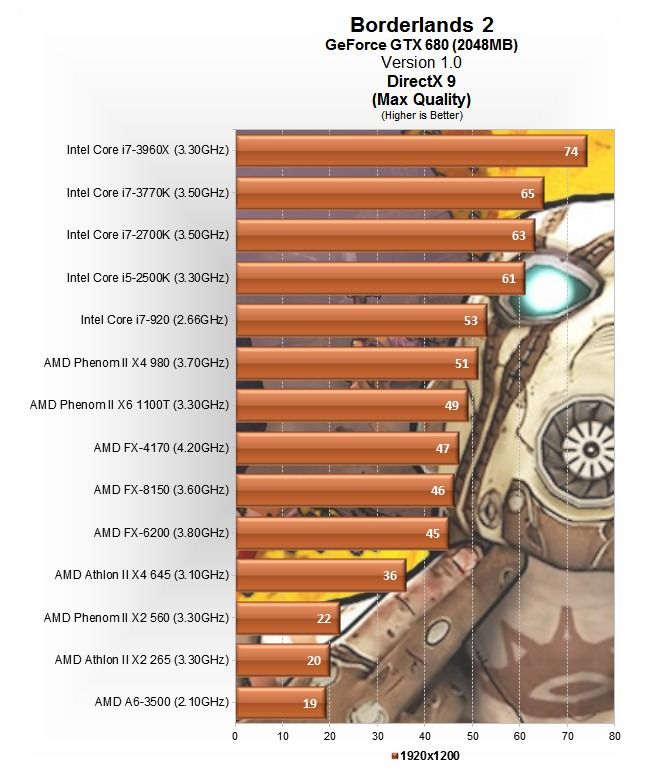

Those graphs display exactly what I said.

In any game that uses 4 cores, the i3 will match or just about match the i5, because HT (Hyper-Threading) gives its 2 physical cores 4 'logical' cores.

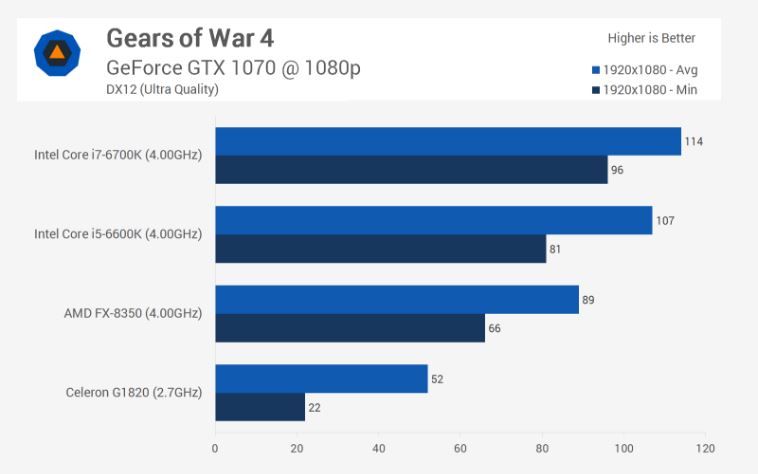

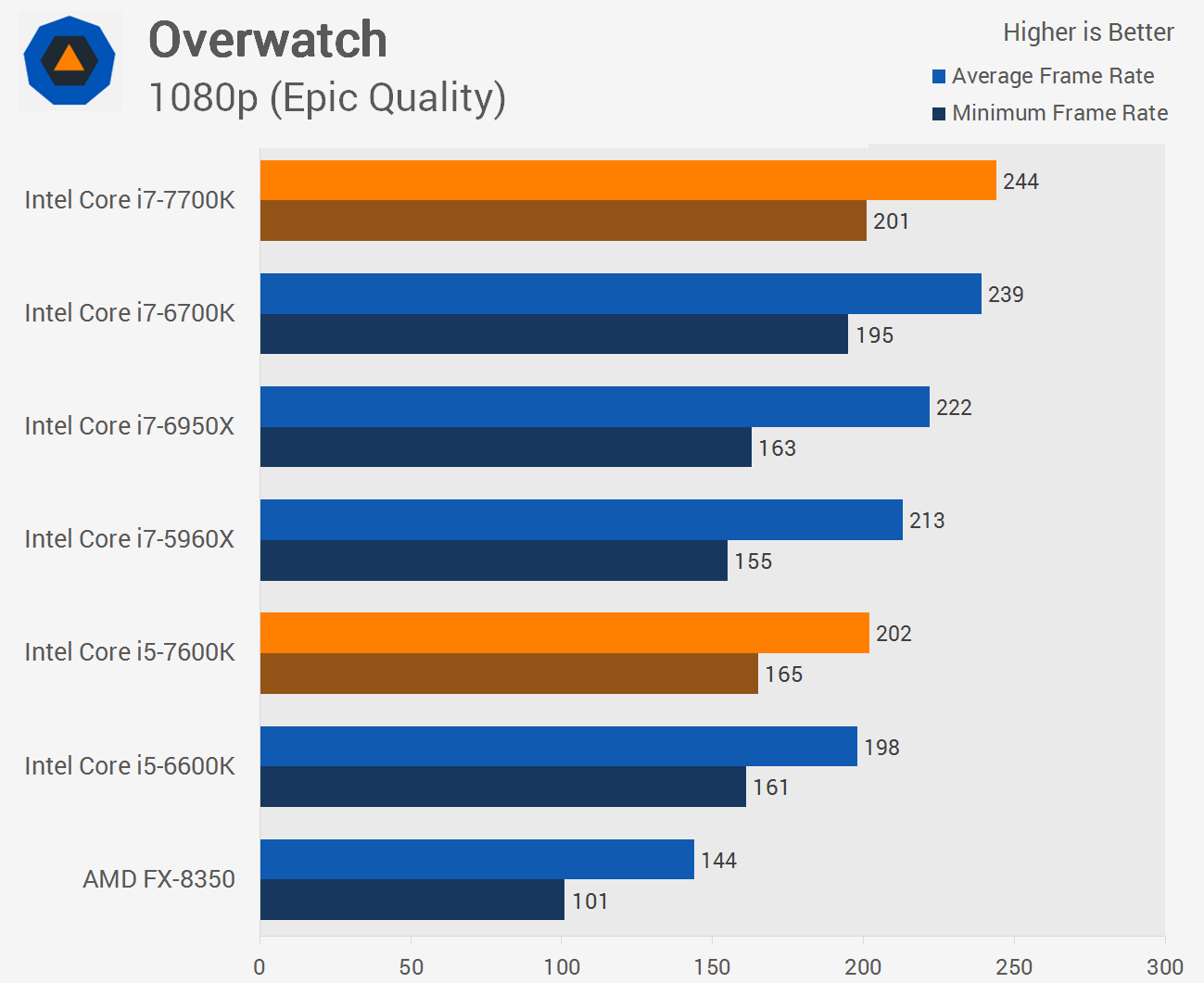

If an i5 keeps up with an i7, it's because the game can only use a certain amount of CPU power, not because the i5 is as good. When a game is programmed to use more power, the i7 will kick its tail, like in Gears, Fallout and many other games.

I love the 920 beating the 750 at the same clock speed here, and how in both graphs the 6-core i7 is untouched, even at the same or lower clock speed.

The i5 is a bargain.

But in many cases, the i7 will kick its ***, and not just the 6-core version.

i7s are also better for running multiple apps at once. You can overwhelm the i5's cores if you ask a PC to do more than game while you're gaming. i5s are very nice chips, but you still get what you pay for.