The big picture: One of the recurring themes in computer technology in recent years is the dramatic slowdown of Moore’s Law, and how big companies are scrambling to find creative ways around the limitations of silicon. Now Intel has revealed a neuromorphic system that is more than 1,000 times faster than traditional CPUs at specific tasks. It’s not going to run your games any faster, but it is a boon for self-driving cars and the Internet of Things.

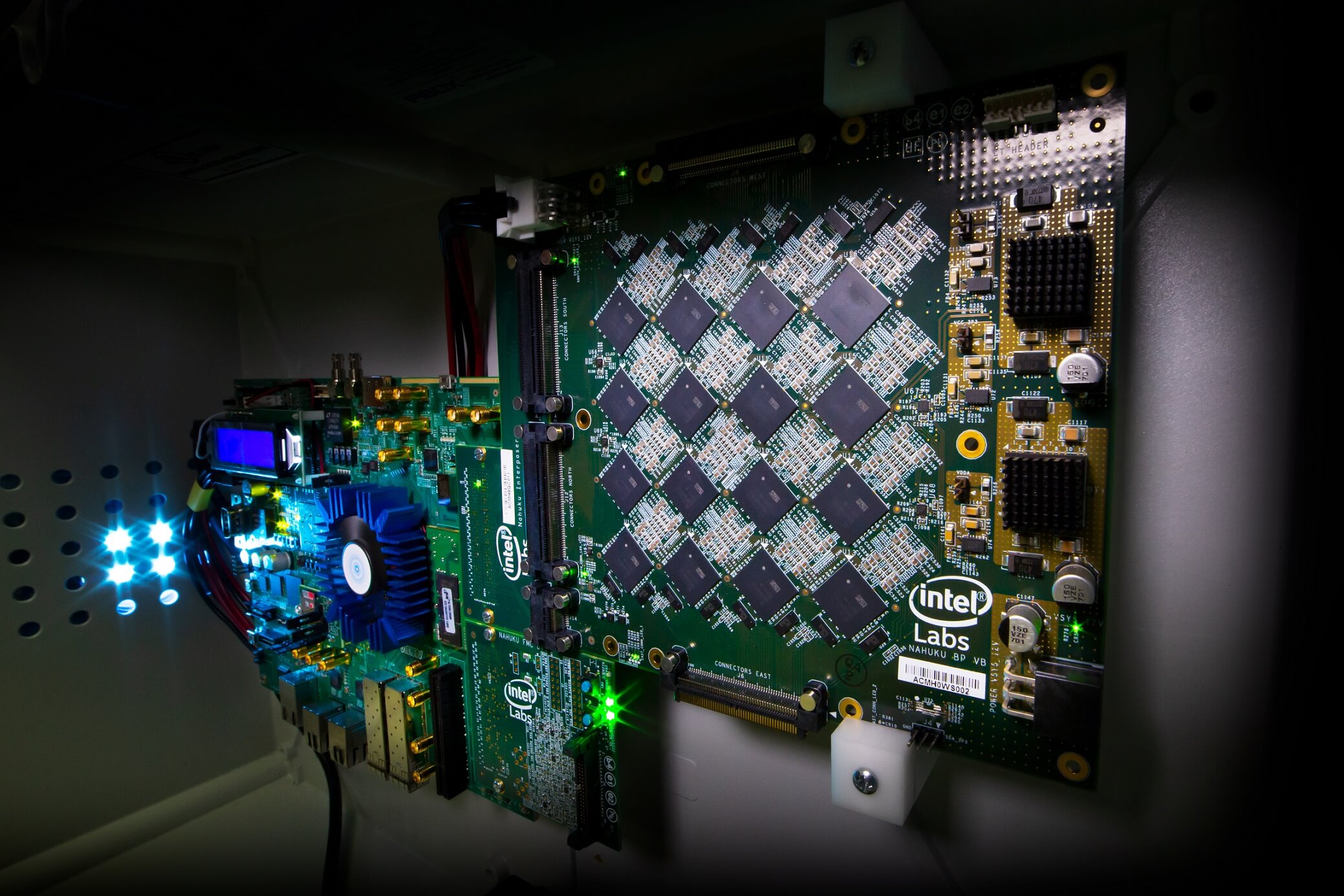

Intel recently showed off a project called Pohoiki Beach at DARPA’s Electronics Resurgence Initiative 2019 summit in Detroit. It is essentially a cluster of 64 of the company’s Loihi chips networked on a Nahuku board, capable of mimicking the self-learning abilities of eight million neurons. The system can process information much faster than a traditional CPU+GPU architecture, all while sipping 10,000 times less power than that setup.

The tech giant says its neuromorphic chips aren’t a suitable replacement for traditional CPU architectures; instead, their potential lies in accelerating specialized applications such as constraint-satisfaction problems, graph searches, and sparse coding. Simply put, those algorithms are essential for technologies like autonomous cars, object-tracking cameras, prosthetics, and artificial skin, to name a few.

Pohoiki Beach is now headed to more than 60 research partners, some of which have already started adapting it to real-world problems. The chipmaker claims that even when the system was scaled up 50 times, it still used five times less power. In another application, mapping and location tracking on Loihi chips was just as accurate as a CPU-based solution that’s popular in the industry, while consuming 100 times less power.

Intel has promised to scale Pohoiki Beach up to 100 million neurons later this year, paving the way for tiny supercomputers that accelerate AI and other complex tasks. The company is also looking at cramming as many Loihi chips as it can into a USB form-factor system codenamed Kapoho Bay, ideal for low-power applications.

If you’ve been following the discussion around computing technology, you may already know that exotic dreams like MESO (magnetoelectric spin-orbit) logic and quantum computing are still far from becoming a reality. Intel has been hard at work trying to get around the limits of silicon, but its strategy remains centered on chiplets.

https://www.techspot.com/news/80974-intel-unveils-pohoiki-beach-chip-cluster-mimics-human.html