In brief: Microsoft's new Xbox Series X console is a beast, courtesy of the biggest generational leap in SoC power and new APIs. The company has worked together with AMD to make a console that can rival some of the most powerful PC gaming rigs on GPU performance and game loading times.

Today, Microsoft revealed the full specifications and design details of its upcoming Xbox Series X console, after a series of leaks and teasers that made PC gamers a little envious. That's because the new Xbox isn't just chock-full of powerful AMD hardware, but also has expandable NVMe storage and ray-tracing support, while maintaining excellent backwards compatibility with older titles.

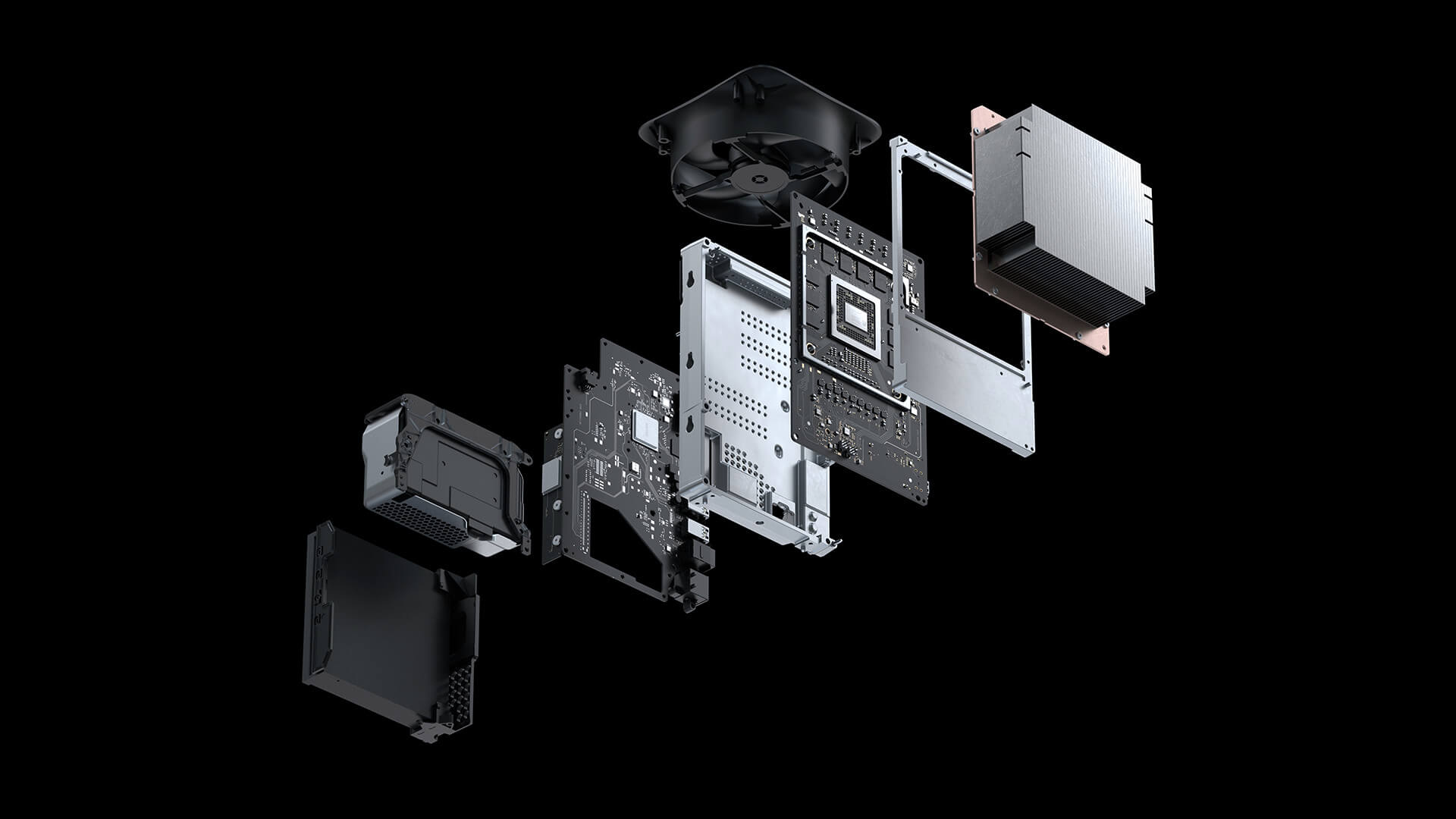

Microsoft is using a custom-designed, 8-core AMD Zen 2 CPU clocked at 3.8 GHz (3.6 GHz with SMT enabled) paired with an RDNA 2-class GPU that can achieve 12 teraflops through its 52 compute units clocked at 1.825 GHz. As expected, this is all built on TSMC's 7nm process node and tuned for power efficiency and silent operation.
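For readers wondering where the 12 teraflops figure comes from, here is a rough back-of-the-envelope check. It assumes 64 shader lanes per compute unit and two FP32 operations per lane per clock (one fused multiply-add), which is how AMD's RDNA designs are usually counted; neither number comes from Microsoft's announcement, and the function name is purely illustrative.

```python
def peak_fp32_tflops(compute_units, clock_ghz,
                     lanes_per_cu=64, flops_per_lane_per_clock=2):
    """Theoretical peak FP32 throughput in teraflops.

    lanes_per_cu and flops_per_lane_per_clock are assumptions
    (typical RDNA counting), not figures from the article.
    """
    gigaflops = compute_units * lanes_per_cu * flops_per_lane_per_clock * clock_ghz
    return gigaflops / 1000.0


# Figures quoted in the article: 52 compute units at 1.825 GHz.
series_x_tflops = peak_fp32_tflops(52, 1.825)
print(f"Estimated peak: {series_x_tflops:.2f} TFLOPS")
```

Under those assumptions the estimate lands just above 12 teraflops, in line with the quoted number. It is a theoretical ceiling, not a measure of real-world game performance.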

But more importantly, the company installed 16 GB of GDDR6 RAM along with a 1 TB custom NVMe SSD that can achieve raw speeds of up to 2.4 GB/s - a first for consoles. The RAM is split into 10 GB of fast memory for the GPU and 6 GB of slightly slower memory, of which 2.5 GB is reserved for the OS.
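The memory split is easier to follow as simple arithmetic. The sketch below uses only the figures quoted above and assumes the OS reservation comes entirely out of the slower pool, as the wording suggests; the variable and function names are illustrative.

```python
FAST_POOL_GB = 10.0    # fast memory, intended for the GPU
SLOW_POOL_GB = 6.0     # slightly slower memory
OS_RESERVED_GB = 2.5   # assumed to be carved out of the slower pool


def game_memory_budget(fast_gb, slow_gb, os_reserved_gb):
    """Return (fast, slow, total) memory in GB left for games."""
    if os_reserved_gb > slow_gb:
        raise ValueError("OS reservation exceeds the slower pool")
    slow_for_games = slow_gb - os_reserved_gb
    return fast_gb, slow_for_games, fast_gb + slow_for_games


fast, slow, total = game_memory_budget(FAST_POOL_GB, SLOW_POOL_GB, OS_RESERVED_GB)
print(f"Games get {fast} GB fast + {slow} GB slower = {total} GB of {FAST_POOL_GB + SLOW_POOL_GB} GB")
```

That leaves 13.5 GB for games: the full 10 GB fast pool plus 3.5 GB of the slower one.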

There's also an expansion slot for a 1 TB Seagate SSD, for those who need somewhere to move their games when they run short of space for the ones they play more often.

And if that isn't enough, there's also support for two additional USB 3.2 external storage drives.

To achieve this compact vertical design, Microsoft split the hardware across two mainboards and placed one 130 mm fan at the top to keep the new console cool. The air is sucked in at the bottom and pulled through a large heatsink, which the company says works best for the hot SoC that's inside the Series X.

Microsoft has a performance target of 4K 60 fps or up to 120 fps - even cutscenes now run at 60 fps for smoother transitions. Digital Foundry saw benchmarks on Gears 5 that show the Xbox Series X delivering similar performance to an RTX 2080, with The Coalition's Mike Rayner saying there's still room for improvement.

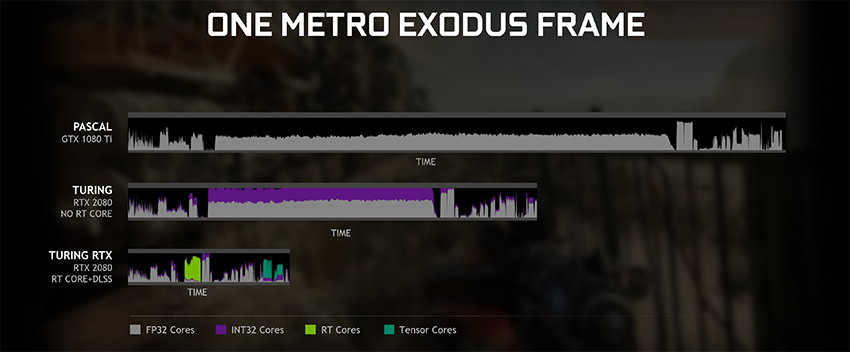

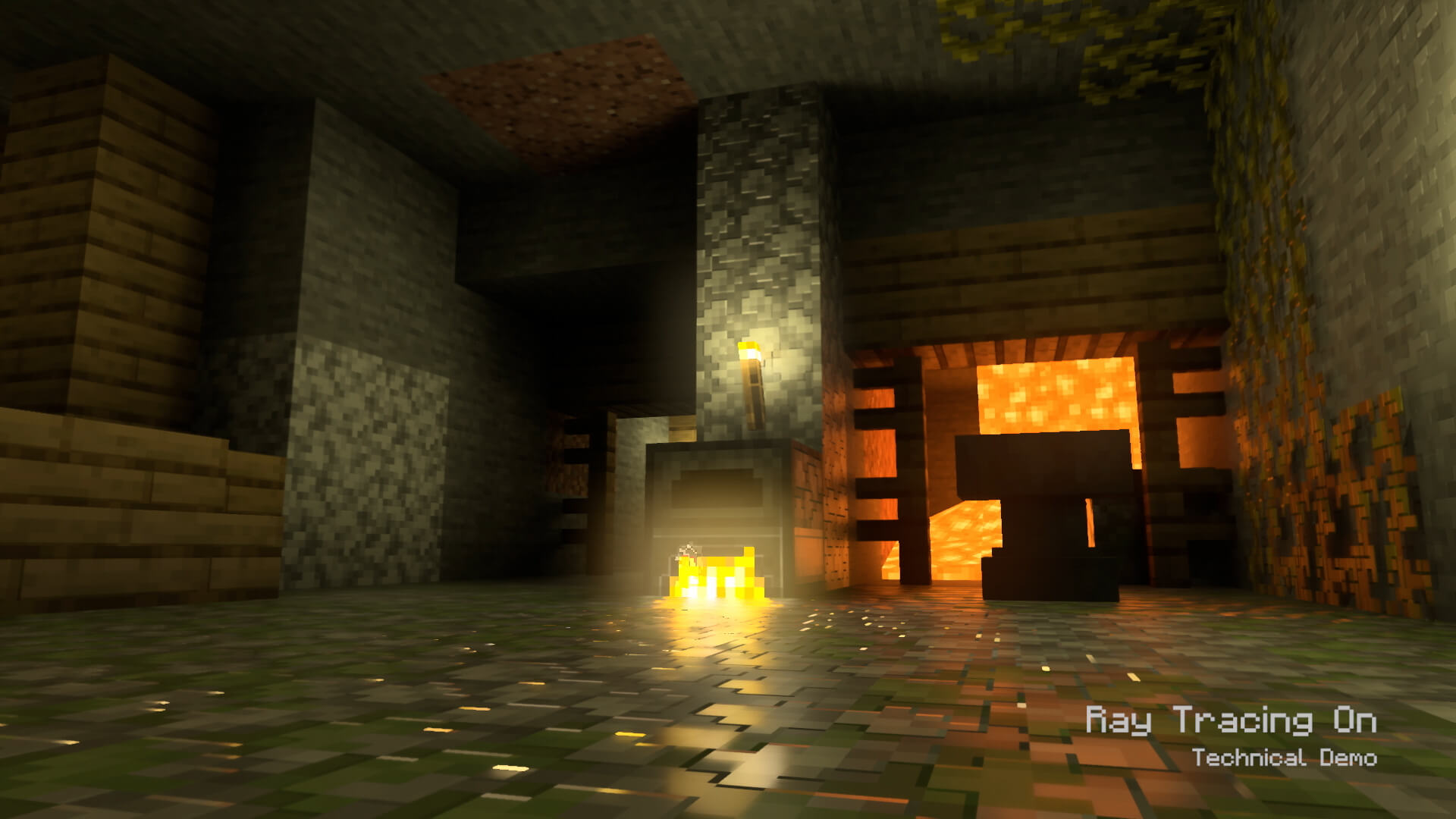

There's DirectX ray tracing support at an equivalent compute performance of over 25 teraflops (12 teraflops for FP32 compute), and Microsoft and AMD have developed a solution that uses standard shader cores instead of Nvidia's dedicated RT core approach.

Ray tracing aside, Microsoft wanted the Xbox Series X to be fast at loading games, too. The company built a system called Velocity Architecture, which makes 100 GB of game assets on the SSD act like "extended memory." Whenever the game needs some of that data, there's a dedicated hardware decompression block that can deliver it at over 6 GB/s, which is especially useful for open world games like Assassin's Creed Odyssey and Red Dead Redemption 2.
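To put those throughput figures in perspective, the sketch below compares how long a chunk of game data would take to arrive at the SSD's raw 2.4 GB/s versus the 6 GB/s decompressed rate. It treats 6 GB/s as a flat illustrative value even though Microsoft only says "over 6 GB/s", and the 10 GB payload is an arbitrary example size, not a figure from the announcement.

```python
RAW_GBPS = 2.4           # raw SSD throughput quoted in the article
DECOMPRESSED_GBPS = 6.0  # illustrative; the article says "over 6 GB/s"


def seconds_to_deliver(size_gb, throughput_gbps):
    """Idealized transfer time, ignoring seek and request overhead."""
    if throughput_gbps <= 0:
        raise ValueError("throughput must be positive")
    return size_gb / throughput_gbps


payload_gb = 10.0  # hypothetical amount of asset data to stream in
raw_time = seconds_to_deliver(payload_gb, RAW_GBPS)
decompressed_time = seconds_to_deliver(payload_gb, DECOMPRESSED_GBPS)
implied_ratio = DECOMPRESSED_GBPS / RAW_GBPS

print(f"Raw: {raw_time:.1f} s, with decompression: {decompressed_time:.1f} s")
print(f"Implied compression ratio: about {implied_ratio:.1f}:1")
```

The gap between the two rates implies assets compressed at roughly 2.5:1. Actual numbers will depend on how well a given game's data compresses.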

That means that developers won't have to set aside a significant performance budget for I/O operations, as that is covered through the Velocity Architecture along with DirectStorage and Sampler Feedback Streaming, which Microsoft says will come to PC users in the near future.

Another interesting feature is Quick Resume, which caches data from RAM to the NVMe SSD to make switching between three or four games as fast as possible. The game state will be preserved even after a reboot or a system update, but it'll also eat a sizeable chunk of storage space, depending on the games that are being cached.

Other things that should make a visible difference in the overall gaming experience are mesh shading, variable rate shading, and variable refresh rate for HDMI 2.1 displays. These allow developers to use the GPU compute units more efficiently, avoid screen tearing, and reduce input latency.

Backwards compatibility wasn't an afterthought, as Microsoft wanted to use the additional power in the Series X to add an HDR mode for older games, even those from an era when HDR wasn't a thing. The company says SDR games will now be enhanced with the help of a reconstruction technique that doesn't require developers to put in any effort.

Existing games that were made for the older Xbox consoles will run at full 4K thanks to an improved version of the Heutchy Method used in previous consoles.

The new Xbox Series X is full of improvements in every department, but if there's one thing that is still a mystery, it's the price.

https://www.techspot.com/news/84405-microsoft-reveals-xbox-series-x-internal-design-specs.html