In the video linked in that news article, the only facts given about the GPU or RDNA2 were as follows:

- 36 CUs

- Variable clock rate for a fixed power consumption

- Clock capped to a maximum of 2.23 GHz

- 10.28 TFLOPS of peak FP32 throughput

- Primitive shaders are now part of the geometry engine (back again after being nixed in Vega)

- Ray tracing is supported (which we knew from the XBSX details)

- Transistor count of the CUs is 62% higher than that of the PS4 CUs
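
For anyone wanting to sanity-check the throughput figure, it follows directly from the CU count and the clock cap. The sketch below assumes 64 FP32 lanes per CU (two SIMD32 units) and counts a fused multiply-add as 2 FLOPs per lane per clock; neither number was stated in the video, and the function name is just something I made up for illustration. With those assumptions it comes out at roughly 10.28 TFLOPS.

```python
def peak_fp32_tflops(cus, clock_ghz, lanes_per_cu=64, flops_per_lane_per_clock=2):
    """Theoretical peak FP32 throughput in TFLOPS.

    lanes_per_cu=64 assumes two SIMD32 units per CU (not stated in the video).
    flops_per_lane_per_clock=2 assumes one fused multiply-add per lane per clock.
    """
    gflops = cus * lanes_per_cu * flops_per_lane_per_clock * clock_ghz
    return gflops / 1000.0


# 36 CUs at the 2.23 GHz clock cap
print(f"{peak_fp32_tflops(36, 2.23):.2f} TFLOPS")
```

Bear in mind this is a ceiling at the maximum clock; with a variable clock rate under a fixed power budget, sustained throughput can be lower.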

We can combine the above with the information gleaned from the XBSX details, but none of it says anything explicitly about whether or not the SIMD32 units have been configured to be INT32 or FP32 only, which is what the part of my message you quoted refers to.

However, the fact that both Sony and Microsoft have made a big deal over backwards compatibility strongly points to the CUs, and the overall SE structure, in RDNA2 being generally the same as those in RDNA; this is because that architecture's structure is designed to ensure that code written for GCN (especially the old version in the likes of the PS4) is not disadvantaged by the changes made in the newer design.

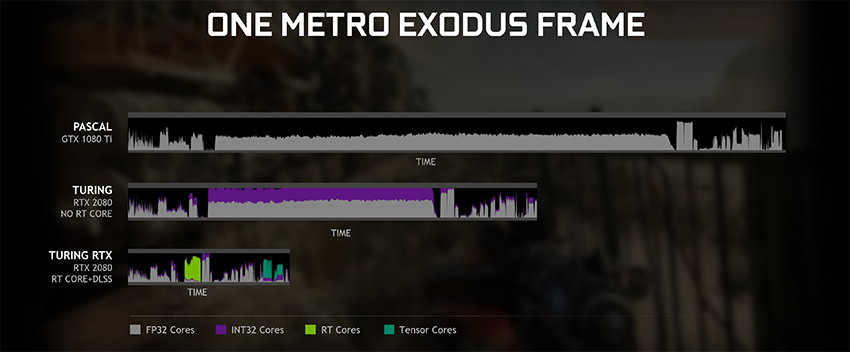

We do know from the Xbox details that the SIMD32 units support more data formats, specifically low precision INT - in Turing, this work is done by the Tensor units, and not the FP32 nor the INT32 shader units. So AMD have improved the overall flexibility of the SIMD32 units in the CUs, but all evidence thus far says they haven't followed Nvidia's route of having separate SIMD32 units for different data formats:

RDNA/RDNA2

INT4, INT8, INT32, FP16, FP32, FP64 calculations = all done via the SIMD32 units

Turing

FP16, FP32 calculations = done via the FP32 shader units

FP64 = done by the FP64 units

INT32 = done via the INT32 shader units

INT4, INT8 = done by the Tensor units