I'm not terribly interested in how people too lazy to educate themselves should be given special dispensation.

Those too-lazy people are quite a large group. I'm also not interested in tablet users, but looking at how many sites are designed for tablets, quite a few people are.

Their averaged scores are aggregated from sites that vary from the valid to little more than blogs and poorly disguised marketing. Again, there's no cure for stupid.

No cure for stupid, yes, but if everything is made for the stupid ones, stupidity will eventually increase.

So you'd like the score to better cater to people who can't be bothered to spend a few minutes researching their prospective purchase, while also catering to their Twitter/ADHD tendencies by eliminating the need to read the review. I hope I never have to see an era where enthusiast sites have to dumb down content to Twitter-sized word bites liberally sprinkled with emojis for those whose attention strays after half a dozen words.

Personally, I hope these people just bypass TechSpot and move on to sites fully geared for 2 minute attention spans and a low level of mental acuity.

Those who read the review do not actually need any rating. For those who just check the rating, that rating is everything. So: the article for enthusiasts, the rating for the lazy. Or perhaps even a different, short conclusion section for the lazy ones?

Your hope is admirable but still doomed. Many think they "know" something after they have checked the score on an UltraHighTech enthusiast site. After that, they think they are gurus.

I have a system for that. It's called adding to their knowledge base when I can. I'll provide further reading links and maybe a synopsis of the information. I'd prefer to offer information rather than have the site kowtow to the lowest level of interest.

What if they have no time or interest to read further links? Most people are not interested in video cards the way you and I are. We still have to get along with those people, so why make it harder?

So the site should better tailor their articles to people who can't be bothered reading said articles so these people, who can't be bothered researching their possible hardware buy will feel....what exactly? A 95/100 is also pretty much a must buy. You think these people are then going to go back and re-read the entire article just to see if the missing 5% impacts their purchase? They couldn't be bothered in the first instance.

I have no sympathy for anyone who makes a substantial purchase without researching it beforehand. Anyone who relies on snippets from a single review (let alone its final distilled score) and blames anyone but themselves for the outcome AND is too stupid to return the item if dissatisfied really deserves everything they get.

95/100 means it's not perfect, so there must be some reason why it's NOT perfect. At least someone will check where that 5/100 is lost. Most will not, but again, while there is no cure for stupid, that doesn't mean everything should be done for the stupid ones.

I have no sympathy either, but the fact is that most people in the world are stupid when it comes to video card knowledge. And while I'm not saying articles should be made for them, that big group should be considered when writing articles.

You missed the point. The AMD lead is less than half the average it posts in other games (aggregated) at 4K. What I posted was the best case scenario for AMD bearing in mind 4K's love of high texture fill rates. If I were to choose the middle ground where Nvidia's TAUs weren't limiting their cards, and AMD's cards were likewise unaffected by raster op inefficiencies at much lower resolutions, the difference is more noticeable.

Looking more carefully, it seems that GTX 970 SLI loses to a single GTX 970, so I would not draw any big conclusions from that one. AMD cards are generally better at high resolutions, true. Just Cause 3 is also quite a crappy game and not a good one to draw performance conclusions from. The main problem is that DX12 games are still very rare.

These things are cyclic. Fermi was a compute-centric architecture, and still stands as one of the best archs for compute efficiency. Yes, during Fermi's reign (and GT200 before it) Nvidia fanboys paid no attention to perf/watt. But you know who held it as paramount? AMD fanboys. When Evergreen arrived, perf/watt and perf/mm (a newly important metric that arrived overnight) were the sole points of interest. As soon as AMD pushed "always on" compute and wattage climbed with the GCN architecture, perf/watt suddenly became irrelevant to AMD fanboys.

It cuts both ways. Always has.

I don't really remember AMD fanboys ever hyping power efficiency as much as Nvidia fanboys did when Maxwell came out.

Nope. AMD's R&D couldn't sustain multiple developments and AMD had too many irons in the fire. Console development, an expensive to run logic layout business (since sold to Synopsys), a poorly thought out attempt at making a splash in the ARM server architecture market, and very likely a substantial ongoing investment in HBM integration which began at least 5 years ago....not to mention a long running APU/CPU architecture development.

If you want to distill AMD's woes down to a single point, it is their management's lack of strategic planning, goal setting, and a reliance upon being reactive rather than proactive in the industry. Too busy trying to imitate those more successful, but putting little thought into how to achieve goals and the actual returns on investment and time (see the SeaMicro acquisition for a prime example) once a course of action is embarked upon.

The ARM server architecture market is mostly a backup plan in case ARM really becomes strong in servers. AMD can do it; Intel cannot. AMD has already integrated HBM into a GPU; Nvidia has not. Remembering the many manufacturing problems of past years, that is important. HBM2 can also finally resolve the APUs' memory bandwidth problems.

SeaMicro's idea looked good but doesn't seem to work right now, so AMD pulled out of it. For a while at least.

That is one interpretation, but I don't think it is correct. As a serial upgrader myself, I find the best time to sell old hardware is just before the new series arrives. You still recoup a reasonable amount of your original purchase cost and can use the funds to offset the new purchase.

The GTX 980 Ti was launched just a year ago. And while it was made using very old 28nm tech, it was very expensive. Generally it's quite funny that GPU prices basically went higher as the technology became more obsolete. While I agree that selling old hardware is best done before new tech arrives, we are still talking about a product that was released one year ago. I would expect a $700 card to last much longer than one year. Many seem not to. It's not hard to claim that most GTX 980 Ti buyers did not listen to those who said 16nm cards would be much better, and now they are in panic mode. In any case, the GTX 980 Ti ranks very high (or even takes the top spot) on the "worst video card buys ever" list.

Not just DX11 software. If that were solely the case, why did it need Nvidia to publicize frame pacing, ShadowPlay, GeForce Experience, and a host of other software that AMD has eagerly tried to adapt to its own uses? I'm guessing that Ansel and MSP will also find themselves with AMD analogues in the not too distant future.

Those are just software-side additions that are quite easy to copy if they become successful. Not all software features are successful, and developing them costs something.

I've been hearing a near constant stream of this marketing since Raja Koduri claimed that Polaris and 14nm was well ahead of Pascal and 16nm....

The overall target is still "console-class gaming on a thin-and-light notebook."

Nvidia's 16nm offerings for console class gaming and notebooks are coming when?

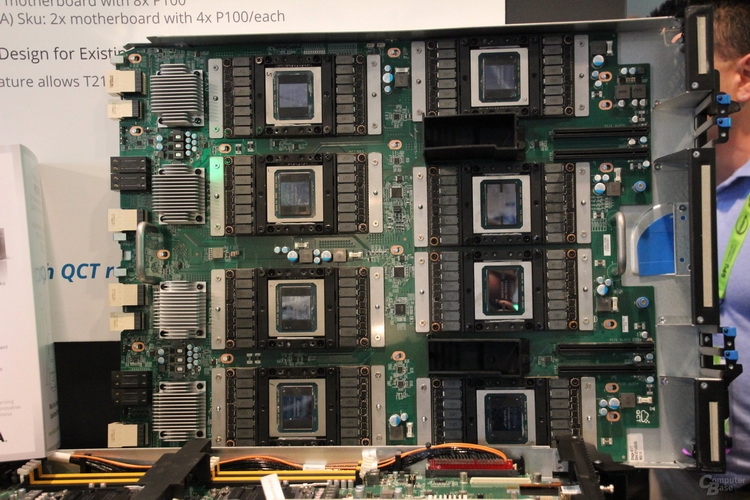

...Yet Nvidia have demonstrated the largest non-Intel GPU in the world on 16nm with series production underway (with over 4500 pre-sold at $10K/per), have the GTX 1080 reviewed and a week from retail availability, the volume market GTX 1070 basically ready to go (holding it back is obviously a marketing strategy), and mass market GP106 due to arrive in a month.

And the GTX 1080 is not a mainstream product; it's for the enthusiast/high-end market. GP106 will likely compete with Polaris 10, but still, where are Nvidia's 16nm low-end offerings? It also takes much more capacity to launch a large volume of notebook and low-end/mid-range chips than to launch one high-end card with very limited availability.