Things used to move further and faster in the early days of GPUs. In fact, they moved pretty quickly until just four years ago, when we made the transition from the 40nm manufacturing process used by Nvidia’s Fermi architecture to the 28nm process that is still in use today. This extended cycle has had AMD and Nvidia squeezing every last bit of performance and efficiency out of 28nm.

But as good as the Titan X and Fury X are, it is time to move on in the quest for greater efficiency and even greater performance. First to market with a true next-generation GPU is Nvidia. Codenamed Pascal, this latest architecture promises big things and could very well be the biggest step we've seen in recent years.

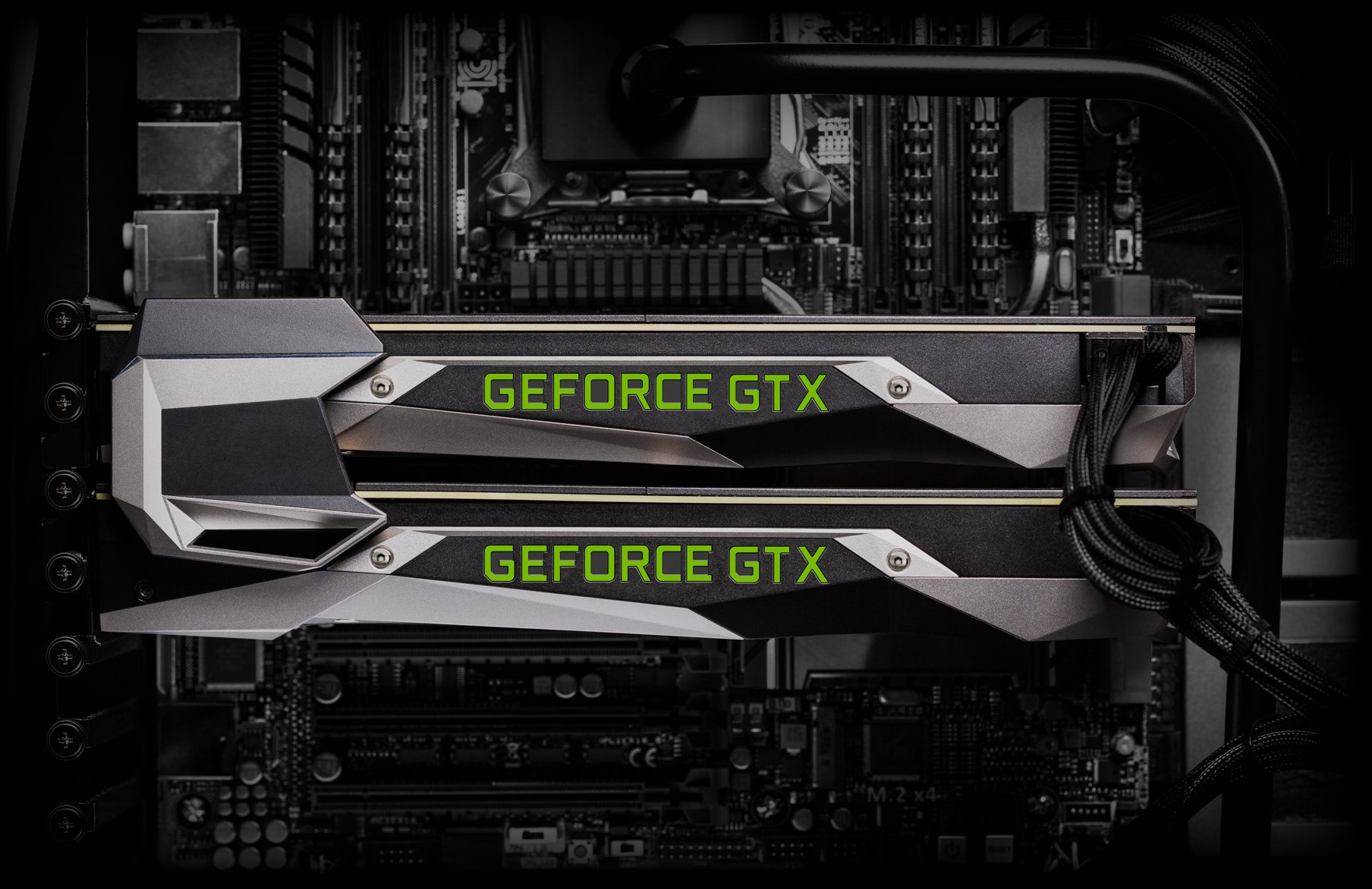

Built on a 16nm manufacturing process and packed with GDDR5X memory, the new GeForce GTX 1080 promises to outperform the Titan X while consuming less power than the GTX 980 Ti. We put this and other bold claims to the test.