I'm kinda confused and this is why:

"A big 70% performance jump over the RTX 2080 at 4K is impressive, and a huge improvement in cost per frame, so that's a job well done by Nvidia."

Steve, I respect you and Tim to death and watch Hardware Unboxed religiously but this statement is unintentionally misleading. The reason that I say this is because I seriously doubt that anyone can picture the performance of the 2080 the way we can with the 2080 Ti. There are two cards here that people are looking at to see what the generational performance difference is and the 2080 isn't one of them.

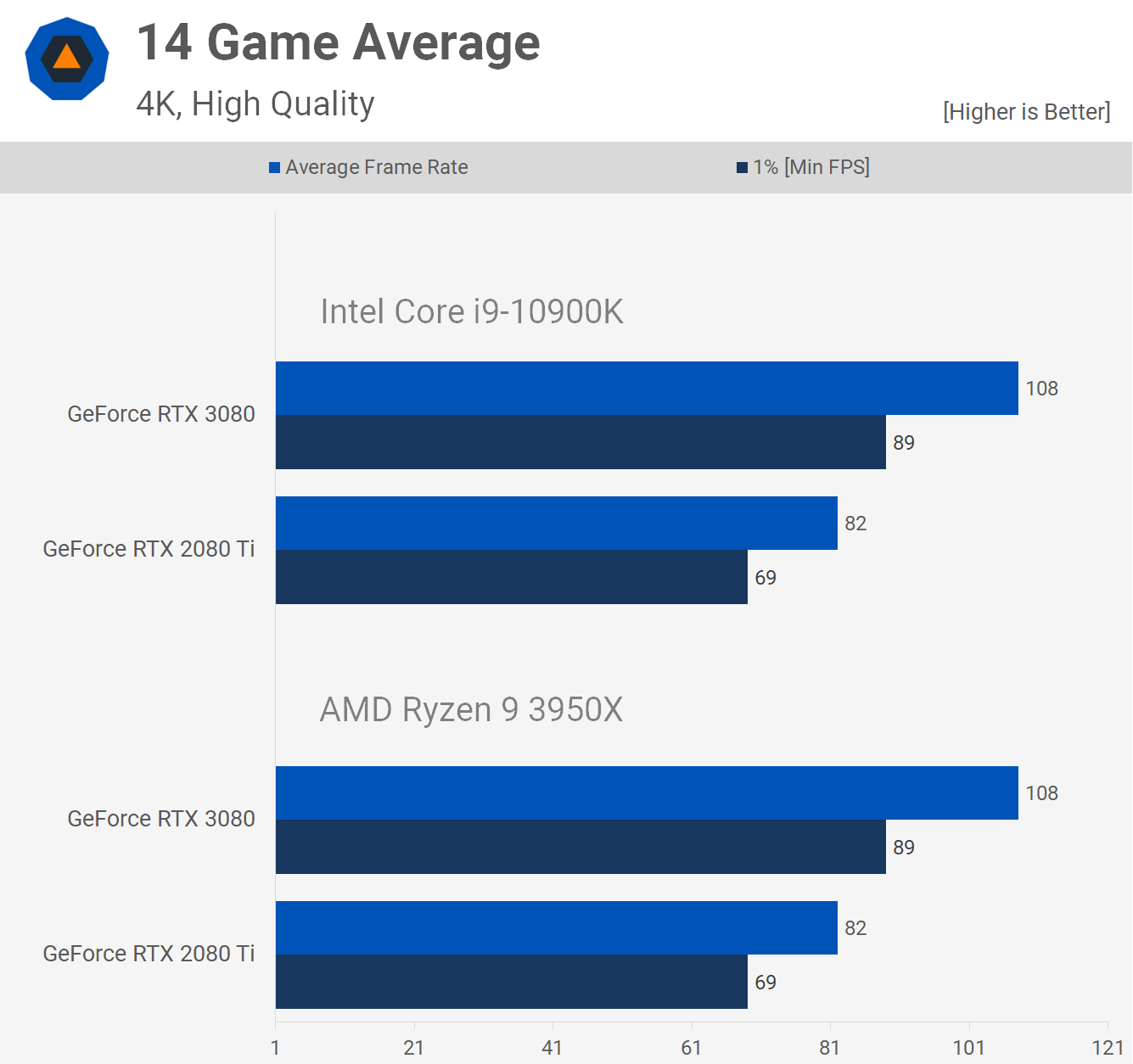

What I see here is an extremely modest increase of 23% at 1440p:

and a less modest increase of 31% at 4K:

Now, some could argue that the RTX 2080 Ti cost $1200 USD while the RTX 3080 costs only $700, and that's true. But just because the RTX 2080 Ti cost that much doesn't mean that it should have, because the top-tier Ti card was the $700 card as recently as the GTX 1080 Ti. It was a terrible price to pay and I'm amazed at just how many people were stupid enough to pay $1200 for that card. Now nVidia is using their past bad behaviour to make a normal price look amazing.
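To put rough numbers on that, here's a quick back-of-the-envelope sketch in Python. It only uses the MSRPs and the 31% 4K figure from this post. The $699 "what if" price for the 2080 Ti is my own assumption (what it would have cost if it had followed the 1080 Ti), and the function names are just made up for illustration.

```python
# MSRPs as quoted in this post (USD).
MSRP_2080_TI = 1199
MSRP_3080 = 699
# Assumption for illustration only: a 2080 Ti priced like the 1080 Ti was.
HYPOTHETICAL_2080_TI = 699

# Relative 4K performance, with the 2080 Ti as the baseline.
PERF_2080_TI = 1.00
PERF_3080 = 1.31  # the 31% 4K uplift mentioned above


def cost_per_perf(price, perf):
    """Dollars paid per unit of relative performance."""
    return price / perf


def improvement(old, new):
    """Percentage drop in cost per unit of performance from old to new."""
    return (old - new) / old * 100


new_cost = cost_per_perf(MSRP_3080, PERF_3080)

# Versus the 2080 Ti at the price nVidia actually charged.
actual = improvement(cost_per_perf(MSRP_2080_TI, PERF_2080_TI), new_cost)

# Versus the 2080 Ti at the hypothetical $699 price.
hypothetical = improvement(
    cost_per_perf(HYPOTHETICAL_2080_TI, PERF_2080_TI), new_cost
)

print(f"vs. $1199 2080 Ti: {actual:.1f}% cheaper per unit of performance")
print(f"vs. $699 2080 Ti:  {hypothetical:.1f}% cheaper per unit of performance")
```

Against the real $1199 price the 3080 looks roughly 55% cheaper per unit of performance. Against a $699 2080 Ti it would be closer to 24%, which is the kind of normal generational gain I'm talking about.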

It amazes me just how successfully nVidia has manipulated their customer base. Their first step was to release the 2080 at $700, which was barely a performance jump over the GTX 1080 Ti (which was also $700). Step two was to release the 2080 Ti at $1200, which got their customers so used to getting screwed over that by pricing the 3080 at $700 (where the 2080 Ti should have been in the first place, and where the 3080 Ti should be priced instead of the 3080), nVidia has made its customer base extremely happy to get screwed over.

Let's look at the pricing progression, shall we?

MSRP of GTX 280 $649

MSRP of GTX 480 $499

MSRP of GTX 580 $499

MSRP of GTX 680 $499

MSRP of GTX 780 $649

MSRP of GTX 780 Ti $699 <- Top-tier Ti line at $699

MSRP of GTX 980 Ti $699 <- Top-tier Ti line at $699

MSRP of GTX 1080 Ti $699 <- Top-tier Ti line at $699

MSRP of RTX 2080 $699 <- Top-tier NON-Ti line at $699

MSRP of RTX 2080 Ti $1199 <- Top-tier Ti line at $1200?!?!?!

MSRP of RTX 3080 $699 <- Top-tier NON-Ti line at $699 (just like RTX 20)

MSRP of RTX 3080 Ti $1199 <- Top-tier Ti line at $1199 (just like RTX 20)

MSRP of RTX 3090 $1499 <- Still way more expensive than the Ti

So, nVidia has conditioned the sheep to suddenly accept a $500 jump in price for the top-tier Ti line from $699 to $1199 and the non-Ti from $499 to $649. Don't tell me it's inflation because the cost of the top-tier card was between $500 and $649 for FIVE GENERATIONS (at LEAST ten years). Don't tell me that it's inflation because the IBM PC model 5150 was $2000 in 1984 and we're not paying $12000 for entire PCs that are not even in the same performance universe as that old IBM. Tech is supposed to get cheaper over time with new tech being the same price as the old was (or LESS not more). Here we have nVidia finding a way around that to charge people even more and more money when they shouldn't be (they don't have to after all) and people are CELEBRATING THIS? Seriously?

Nvidia is offering a new level of performance where it has no competition so far. At the end of the day, the price is related to supply and demand. There is a 1000 US$+ tier now and consumers are willing to pay.