Graphics cards have a lot more components than CPUs do. Both comprise a processor and primary packaging (the stuff that houses the chip and connects it to the pins/solder ball points), but graphics cards also have DRAM modules, VRMs, video output interfaces, secondary packaging (the ‘motherboard’ that houses all of that), and the cooling system (CPUs can be sold without one).

Just as CPU motherboards have become more expensive, partly because they carry a lot more current and route more electrical signals that require tighter tolerances, so have the boards for graphics cards.

DRAM prices have risen and fallen repeatedly over the years due to supply and demand (the manufacturing nodes used haven’t changed as frequently as they have for CPUs/GPUs), and cards are sporting more and/or larger modules. DRAM is cheap compared to the GPU die, but it's still a cost that has to be added in.

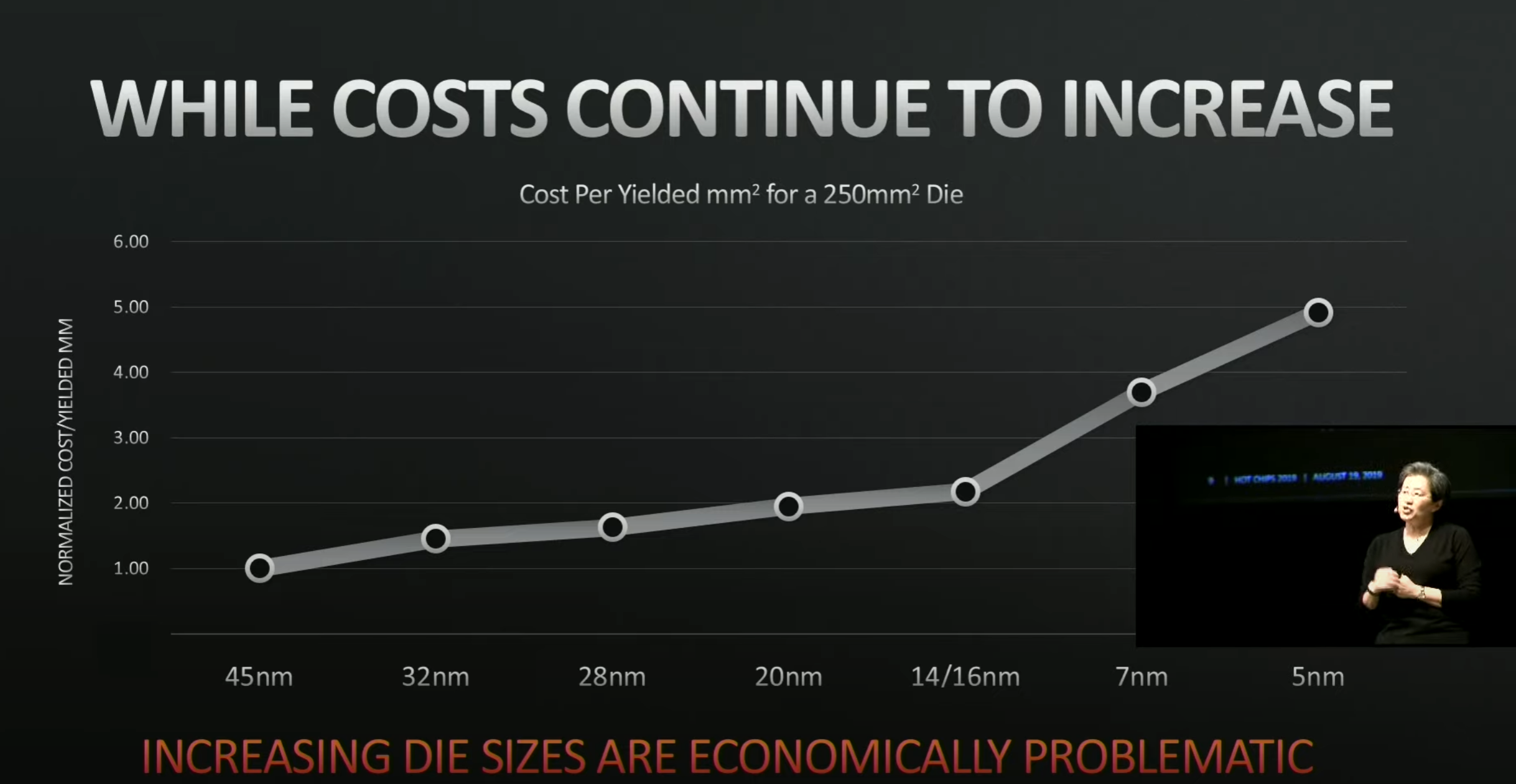

GPUs have also become increasingly complex and sophisticated internally. They're not as complex as a high-end CPU, but the costs to design them and then fabricate them on what's usually a brand-new process node have risen notably these past few generations.


[Source]

High-end graphics cards are never going to return to, say, 2016 prices. That's not just because of inflation, but also because the associated design and manufacturing costs have risen (and by more than inflation alone). But there is definitely scope for prices to come back down to something more sensible. It just depends on what one considers sensible.