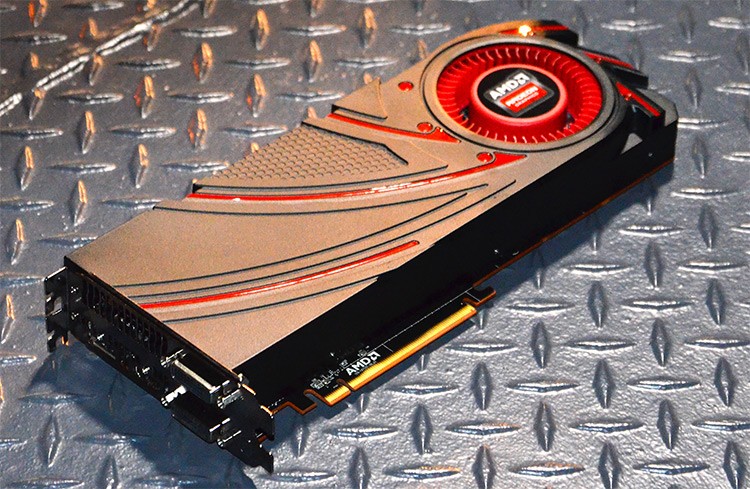

At last week's GPU14 Tech Day event in Hawaii, AMD revealed a new flagship graphics card: the Radeon R9 290X. With over six billion transistors on the GPU die, 4 GB of memory with 300 GB/s of bandwidth, and 5 TFLOPS of computing performance, the card is certainly going to be AMD's best performer and a true single-GPU competitor to the Nvidia GeForce GTX Titan.

AMD didn't reveal a release date or a price for the graphics card at the event, but pre-orders for the R9 290X have since opened at a range of online retailers. Newegg lists the MSI, Sapphire and XFX branded reference cards with the price shown as 'Coming Soon', but some quick digging into the page's HTML source code reveals the card's price as $729.99 excluding tax. With Newegg taking a small slice, it's likely AMD has set the MSRP at $699.

OverclockersUK are offering a pre-order deposit of £99 to secure a Radeon R9 290X Battlefield 4 Edition, an SKU that AMD announced at the GPU14 event but again failed to price or date. Although the site doesn't list a final price for the card, it expects to deliver its "several hundred units" on October 31st. Centrecom Australia is offering a similar pre-order system, where prospective buyers can put down $200 to be first in line.

Leaked specifications for the card indicate it features 2816 stream processors, 176 TMUs, 44 ROPs, a base clock of 800 MHz with a turbo clock of 1000 MHz, 4 GB of GDDR5 memory on a 512-bit bus clocked at 1250 MHz, and 8+6-pin power connectors, which we already revealed through pictures of the card. Like a number of other R9 and R7 series cards, the R9 290X will support DirectX 11.2, OpenGL 4.3, 'Mantle', and TrueAudio.
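The leaked numbers line up with AMD's headline figures. The back-of-the-envelope check below is a sketch under two assumptions: each stream processor retires one fused multiply-add (two floating-point operations) per clock, and GDDR5 moves four bits per pin per memory clock. The helper names `peak_fp32_tflops` and `gddr5_bandwidth_gbs` are purely illustrative and not from AMD.

```python
# Hypothetical helpers for illustration only; not an AMD tool or API.

def peak_fp32_tflops(stream_processors: int, clock_mhz: float, flops_per_clock: int = 2) -> float:
    """Theoretical single-precision peak in TFLOPS.

    Assumes each stream processor completes one fused multiply-add
    (counted as 2 FLOPs) every clock cycle.
    """
    return stream_processors * flops_per_clock * clock_mhz * 1e6 / 1e12


def gddr5_bandwidth_gbs(bus_width_bits: int, memory_clock_mhz: float, transfers_per_clock: int = 4) -> float:
    """Theoretical memory bandwidth in GB/s (1 GB = 10^9 bytes).

    Assumes GDDR5 transfers 4 bits per pin per memory clock, so a
    1250 MHz memory clock corresponds to 5 Gbps per pin.
    """
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * memory_clock_mhz * 1e6 * transfers_per_clock / 1e9


if __name__ == "__main__":
    # Leaked R9 290X figures: 2816 stream processors, 800/1000 MHz, 512-bit bus at 1250 MHz.
    print(f"Base clock:  {peak_fp32_tflops(2816, 800):.2f} TFLOPS")
    print(f"Turbo clock: {peak_fp32_tflops(2816, 1000):.2f} TFLOPS")
    print(f"Memory bandwidth: {gddr5_bandwidth_gbs(512, 1250):.0f} GB/s")
```

At the 1000 MHz turbo clock this works out to roughly 5.6 TFLOPS (about 4.5 TFLOPS at the 800 MHz base clock), and a 512-bit bus at 1250 MHz gives 320 GB/s, consistent with the 5 TFLOPS and 300 GB/s figures AMD quoted.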

Expect AMD to officially reveal the details surrounding this card in the coming weeks, and of course, look out for our reviews of all the new R9 and R7 series GPUs.