What just happened? We've seen plenty of melted GPU and cable connectors involving Nvidia graphics cards, but some have question marks over their legitimacy. However, the latest incident, involving an RTX 5090 and Wuchang: Fallen Feathers, happened to the editor-in-chief of a gaming publication, and he recorded everything in great detail.

John Papadopoulos of DSO Gaming writes that he was playing the new Soulslike Wuchang: Fallen Feathers yesterday when he noticed an unusual smell. Unable to find its source, he decided to open his PC to check if it had fallen victim to the dreaded cable-melting issues plaguing so many Nvidia card owners.

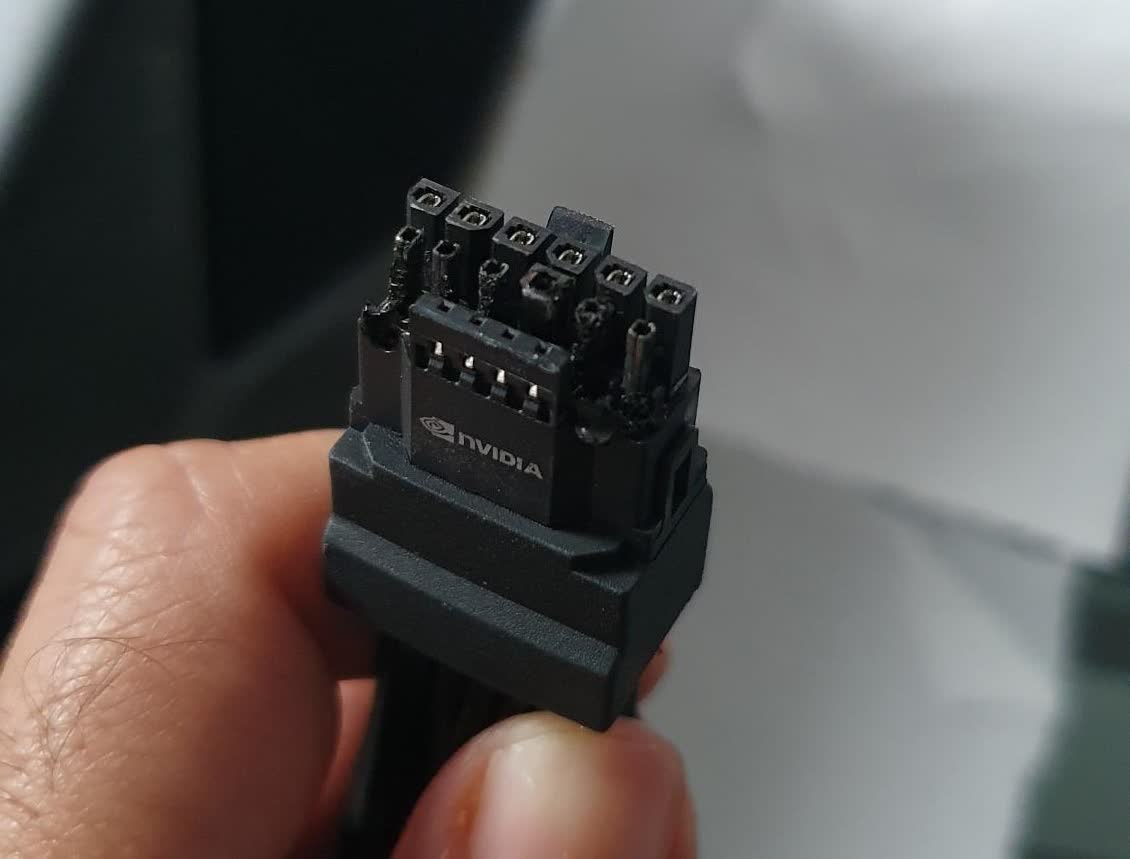

Unfortunately for Papadopoulos, he saw that the 12V-2×6 power cable connected to his RTX 5090 was burned and emitting smoke.

Being aware of the many reported melting incidents, Papadopoulos said he always makes sure to fully seat the power connector in the graphics card. He provided a picture of the connector plugged into the card taken before the incident as proof.

While some people have pointed to what looks like a tiny gap between the card and connector at the top, the editor insists the cable was pushed in as far as it would go. For further evidence of this, he says that the RTX 5090 ran for 20 minutes at 100% usage with no issues.

Papadopoulos also points to the fact that there was no damage on the top row of connectors, which he said would have burned if the cable wasn't fully seated – the damage is at the bottom row on both the cable connector and card socket.

Papadopoulos said that, as a test, he removed the RTX 5090, plugged it back in using the same burned power cable, and ran Wuchang for 20 minutes. The PC was stable, with no smoke coming from the connector.

There's an admission that this could still have been caused by user error – Papadopoulos says he doesn't remove the cable from the RTX 5090 when taking the card out of his PC case, so it could have come loose at some point. Others might argue that a cable "fully plugged in" should not work itself loose.

This is far from the first melting RTX 5090/cable that we've seen. One of the earliest was reported in February, but it's believed that an unofficial cable was the cause. There were two cases in April, including one involving MSI's "foolproof" yellow-tipped 12V-2×6 cable. And another incident involving MSI's colorful cable was reported in May.

Image credit: DSO Gaming, John Papadopoulos

RTX 5090 cable melts while playing Wuchang: Fallen Feathers, despite proper seating claims