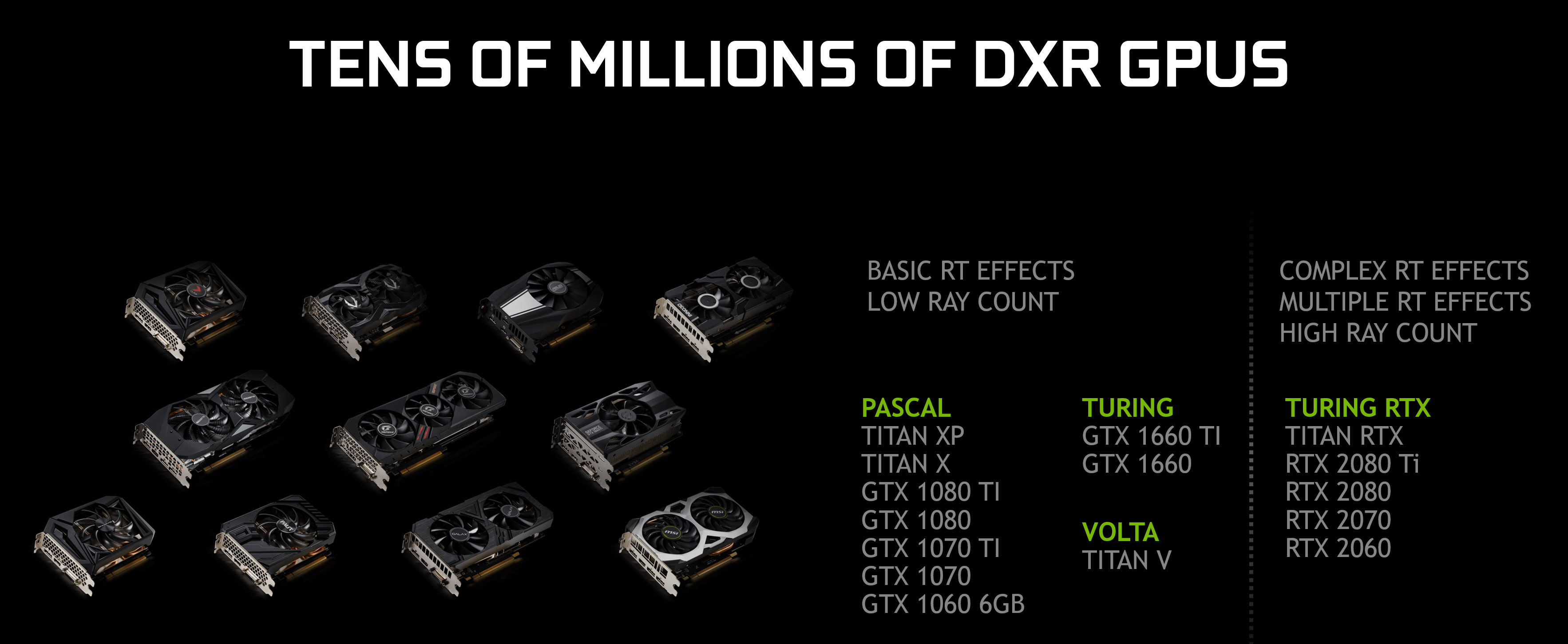

During this year's GDC, Nvidia announced that GTX graphics cards would be getting basic ray tracing support with a driver update. To put this test together, we took the most powerful Pascal GPU we had on hand, the Nvidia Titan X, and pitted it against Nvidia's RTX line-up in the three games that support ray tracing thus far.

https://www.techspot.com/review/1831-ray-tracing-geforce-gtx-benchmarks/