A hot potato: The use of autonomous weapons systems, aka killer robots, is a contentious subject—for obvious reasons. It’s proved particularly controversial for the US Army, which plans to use AI to help identify and engage targets. But the DoD says humans will always have the final say on whether the robots open fire.

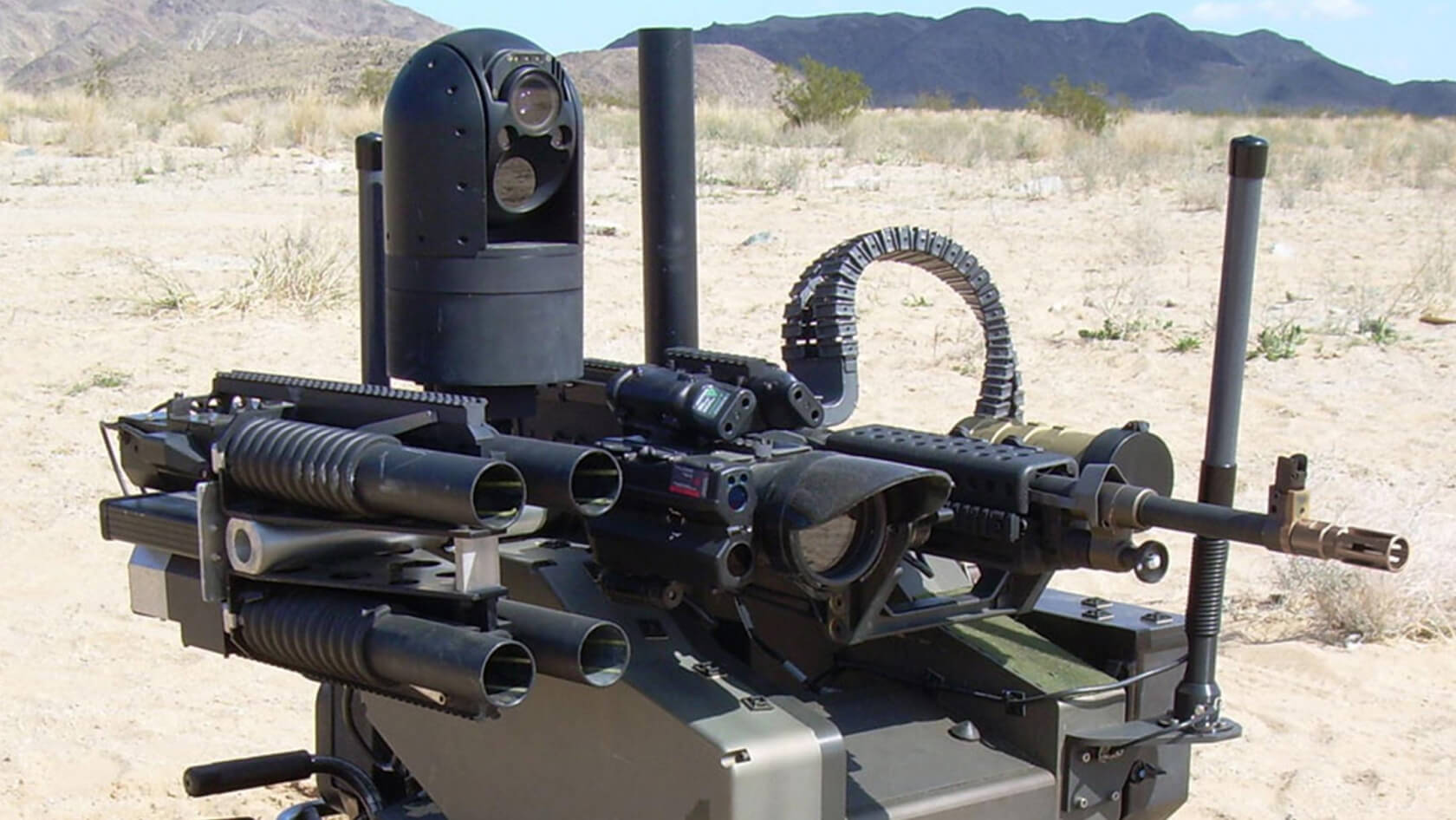

The Defense Department is holding an industry day this week to give industry and academia an overview of the Advanced Targeting and Lethality Automated System (Atlas), which is designed for ground combat vehicles, and an opportunity to help develop it.

The Army says it wants to use recent advances in AI and machine learning to develop “autonomous target acquisition technology, that will be integrated with fire control technology, aimed at providing ground combat vehicles with the capability to acquire, identify, and engage targets at least 3X faster than the current manual process.”

Back in 2017, Elon Musk was one of 116 experts calling for a ban on killer robots—the second letter of its kind. It arrived not long after a US general warned of the dangers posed by these machines.

The controversy prompted an update to the industry day document last week, emphasizing that the use of autonomous weapons is still subject to the guidelines set out in Department of Defense (DoD) Directive 3000.09. This directive states that “Semi-autonomous weapon systems that are onboard or integrated with unmanned platforms must be designed such that, in the event of degraded or lost communications, the system does not autonomously select and engage individual targets or specific target groups that have not been previously selected by an authorized human operator.”

Speaking to Defense One, an Army official said any upgrades to Atlas didn’t mean “we’re putting the machine in a position to kill anybody.”

While many are calling for an outright ban on killer robots, Russia has suggested it will not adhere to any international restrictions on autonomous weapons systems.

https://www.techspot.com/news/79129-us-army-addresses-controversial-killer-robot-program.html