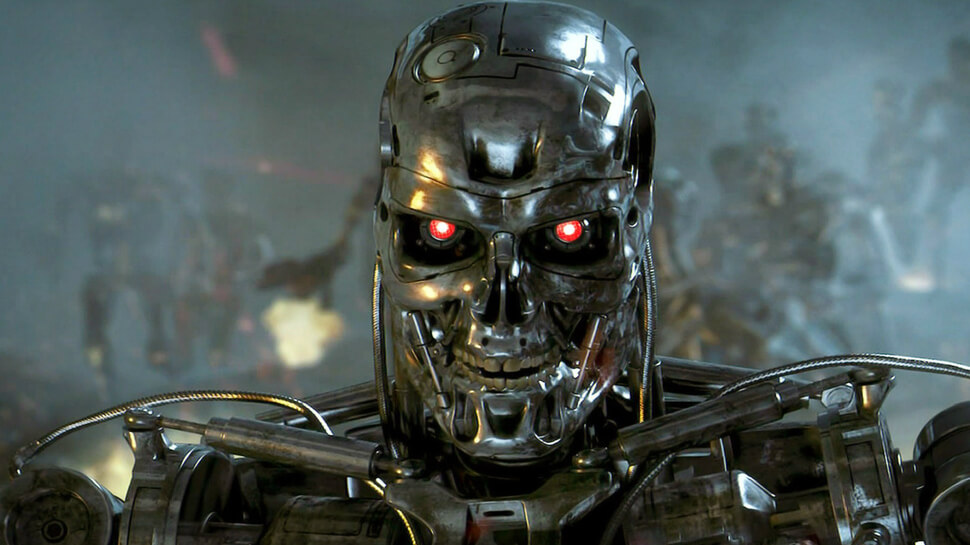

While artificial intelligence has brought countless benefits to humanity, the danger that we might hand too much control over to the machines remains worrying. It's something that Elon Musk, Bill Gates, and Stephen Hawking have long warned against. Now, the second-highest-ranking general in the US military has voiced his concerns over autonomous weapons systems.

Speaking at a Senate Armed Services Committee hearing yesterday, Gen. Paul Selva was answering a question about a Defense Department directive that requires human operators to be involved in the decision-making process when it comes to autonomous machines killing enemy combatants.

The general said it was important the military keep "the ethical rules of war in place lest we unleash on humanity a set of robots that we don't know how to control."

"I don't think it's reasonable for us to put robots in charge of whether or not we take a human life," Selva added.

The Hill reports that Senator Gary Peters asked about the directive's expiration later this year. He suggested that America's enemies would have no moral objections to using a machine that takes human thinking out of the equation when it comes to killing soldiers.

"I don't think it's reasonable for us to put robots in charge of whether or not we take a human life...[America should continue to] take our values to war," said the general.

"There will be a raucous debate in the department about whether or not we take humans out of the decision to take lethal action," he told Peters, stressing that he was in favor of "keeping that restriction."

The general did add, however, that even though the US won't go down the route of fully autonomous killing machines, it should still research ways of defending against the technology.

Tesla and SpaceX boss Elon Musk has been warning people about the dangers of AI for years. At the recent National Governors Association Summer Meeting in Rhode Island, he said: "Until people see robots going down the street killing people, they don't know how to react because it seems so ethereal."

"AI is a rare case where I think we need to be proactive in regulation instead of reactive. Because I think by the time we are reactive in AI regulation, it's too late."