One of the many remarkable aspects of generative AI and the impact it's having on the industry is how different it is compared to other recent big tech trends. Not only is it growing faster and more broadly than other buzzy technologies like blockchain and cryptocurrency, it's also taking organizations into a great deal of uncharted territory.

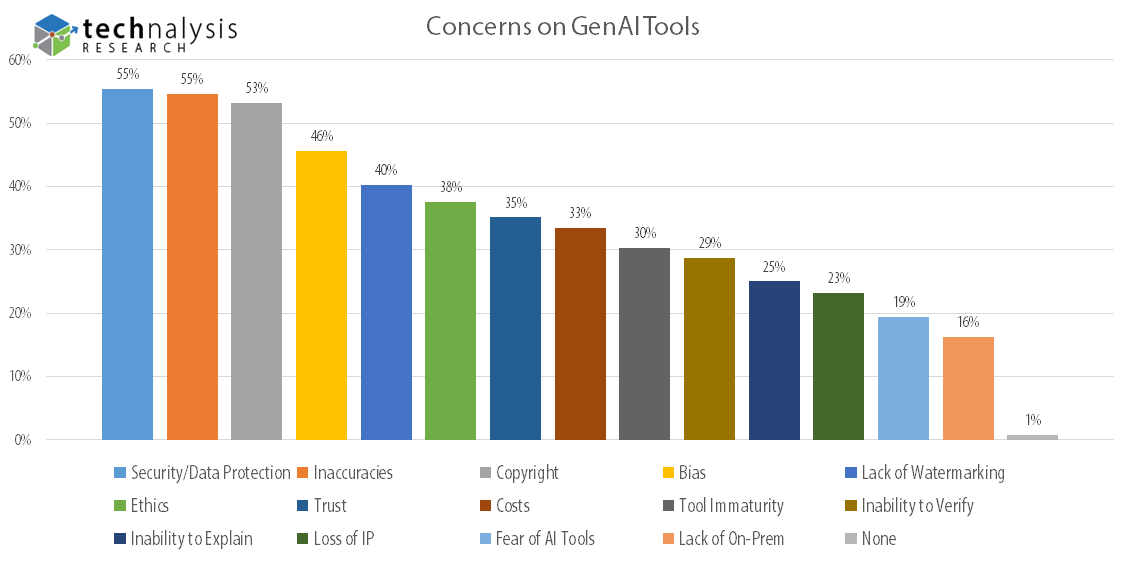

A recent survey by TECHnalysis Research, involving 1,000 IT decision-makers engaged in their companies' GenAI efforts, revealed that 99% of companies using the technology still encounter challenges. Notably, three primary concerns – data protection/security, inaccuracies, and copyright infringement – were highlighted by over half of these companies, as depicted in the graph below. Furthermore, on average, these companies identified five distinct concerns, indicating that potential issues are not confined to a narrow scope but are rather widespread.

Moreover, many are diving into GenAI without a comprehensive understanding of the technology's mechanics and its diverse deployment possibilities. While it's true that many enterprise-targeted technologies have been launched without a full grasp of their nuances or potential ramifications, GenAI seems to defy the conventional wisdom of waiting for a technology to mature before broad implementation.

Fig. 1

There are several reasons why this is the case, but most of them boil down to the fact that GenAI has taken on an aura of inevitability and necessity that's driving companies to start working with it sooner than they might otherwise.

The level of excitement around the technology – generally driven by some impressive early experiences with it – has served as a particularly effective accelerant to adoption. Indeed, the same survey showed that 95% of IT decision-makers believe that GenAI could have either a profound or at least potentially noticeable impact on their business. Not surprisingly, that's led to a very palpable sense that everyone is adopting the technology (again, the survey showed that 88% of companies have started using it).

It's also led companies to believe that they could find themselves at a serious competitive disadvantage if they don't move quickly – potential concerns and limited understanding be damned.

Of course, the tech industry is littered with new technologies that were initially expected to have a big impact on businesses. The difference with GenAI is that companies seem willing to overlook its potential problems because of the benefits it promises to unleash, as well as the sense of urgency around the technology.


Another significant challenge with GenAI is the confusion around the subject. While few are eager to confess their lack of understanding about a critical new innovation – especially those in the tech sector – it's evident that the ambiguity related to GenAI is both real and widespread. Even seemingly basic distinctions, such as understanding the role and significance of a GenAI-focused foundational model (like an LLM or Large Language Model) versus an application that utilizes this model, can be a source of misunderstanding.

This confusion arises for various reasons. First, in early discussions of and encounters with GenAI tools such as ChatGPT, the names of the foundational model and the application have often been used interchangeably. It's easy to assume that ChatGPT encompasses both the underlying model and the chat interface many of us have experienced. In reality, ChatGPT is the application, and it can run on different iterations of the GPT large language model, such as GPT-3.5 or GPT-4.

This layered approach of an underlying engine and an application built on top of it is common to most GenAI applications. On one hand, it offers a new degree of flexibility; on the other, it creates a much larger potential for confusion. With typical enterprise software applications like CRM or office productivity suites, for example, we've never had to think about the internal engine used to drive the functionality, nor have we had an option to change it. With GenAI, however, you have the possibility of using several different engines for the same basic application, or the same engine across a radically different set of applications. A single LLM, for example, can be used to create original text, summarize existing text, write software code and much more. As a result, the potential permutations can quickly become overwhelming.
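For readers who think in code, the engine-versus-application split can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `TextModel`, `StubModel`, `ChatApp` and `SummarizerApp` are hypothetical names invented here, and the stub simply echoes its prompt rather than calling any real LLM or vendor API.

```python
from dataclasses import dataclass
from typing import Protocol


class TextModel(Protocol):
    """Hypothetical engine interface: a prompt goes in, text comes out."""

    name: str

    def complete(self, prompt: str) -> str: ...


@dataclass
class StubModel:
    """Stand-in engine. A real one would call a hosted or local LLM."""

    name: str

    def complete(self, prompt: str) -> str:
        # Echo the prompt so the sketch runs offline and deterministically.
        return f"[{self.name}] {prompt}"


@dataclass
class ChatApp:
    """Application layer: owns the user experience, not the model."""

    model: TextModel

    def reply(self, message: str) -> str:
        return self.model.complete(f"Reply to the user: {message}")


@dataclass
class SummarizerApp:
    """A different application that can sit on the very same engine."""

    model: TextModel

    def summarize(self, document: str) -> str:
        return self.model.complete(f"Summarize: {document}")


engine_a = StubModel("engine-a")
engine_b = StubModel("engine-b")

# Same application, different engines.
print(ChatApp(engine_a).reply("hello"))
print(ChatApp(engine_b).reply("hello"))

# Same engine, different applications.
print(SummarizerApp(engine_a).summarize("quarterly report"))
```

Swapping `engine_a` for `engine_b` changes nothing about the application code, which is exactly the kind of choice traditional enterprise software never asked buyers to make.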

Another issue is that early offerings from different vendors are often described in relatively similar ways but may approach a problem or solve an issue in completely different ways. In other words, these are often apples-to-oranges comparisons, which only confuse the issue further.

This is one of the key reasons why educational efforts and clear, basic marketing messages are going to be so essential for the first few generations of GenAI tools. Even people with decades of IT experience are discovering that GenAI is a different animal, and most need to have things explained in a simple, straightforward manner (whether they're willing to admit it or not). With the added pressure of moving quickly to make important strategic decisions around GenAI, the need for clarity is even more important.

Regardless of these concerns, it's clear that the GenAI train isn't slowing down. In fact, when the GenAI-enabled versions of the key productivity suites from Microsoft and Google reach general availability – reported to be sometime this fall – I expect a new wave of GenAI buzz and activity. These are the types of applications in which organizations expect GenAI to have some of its biggest impact – and they also happen to be the tools that virtually everyone in every business uses. As a result, the real-world impact of what GenAI can achieve will become much clearer once a large number of people start using these tools on a regular basis.

The impact that I believe these tools could have won't make the challenges or educational concerns discussed earlier go away – rather, those concerns are going to become even more prominent. Nevertheless, organizations need to prepare, and vendors need to focus, in order to drive the kind of groundbreaking changes that GenAI is bound to create.

Bob O'Donnell is the founder and chief analyst of TECHnalysis Research, LLC, a technology consulting firm that provides strategic consulting and market research services to the technology industry and professional financial community. You can follow him on Twitter @bobodtech.

https://www.techspot.com/news/99812-why-generative-ai-unlike-other-big-tech-trends.html