In brief: Google DeepMind's AIs have spent years humiliating the champions of board and video games, but a new project from the research division is instead designed to interpret human instructions in games. The resulting AI displayed an impressive generalized gaming skillset, which researchers hope to apply to other environments.

Google DeepMind recently unveiled SIMA (Scalable Instructable Multiworld Agent) – an AI that learned to perform various actions in different video games based on human commands. The development is a sharp pivot from the company's other gaming-related AI research.

Unlike other game-playing AI, SIMA can't access anything human players can't. It can't read inputs or a game's code the way a CPU-controlled character can. Instead, it performs actions through keyboard and mouse inputs based on what's visible on the screen.

Like DeepMind's previous AIs, which learned to beat professional Go, chess, StarCraft II, and Quake III players by watching hundreds of thousands of matches, SIMA was trained on a massive amount of footage from nine games. However, instead of learning to win at each game in isolation, the new AI learned how to complete numerous tasks in all of them, transferring skills between titles.

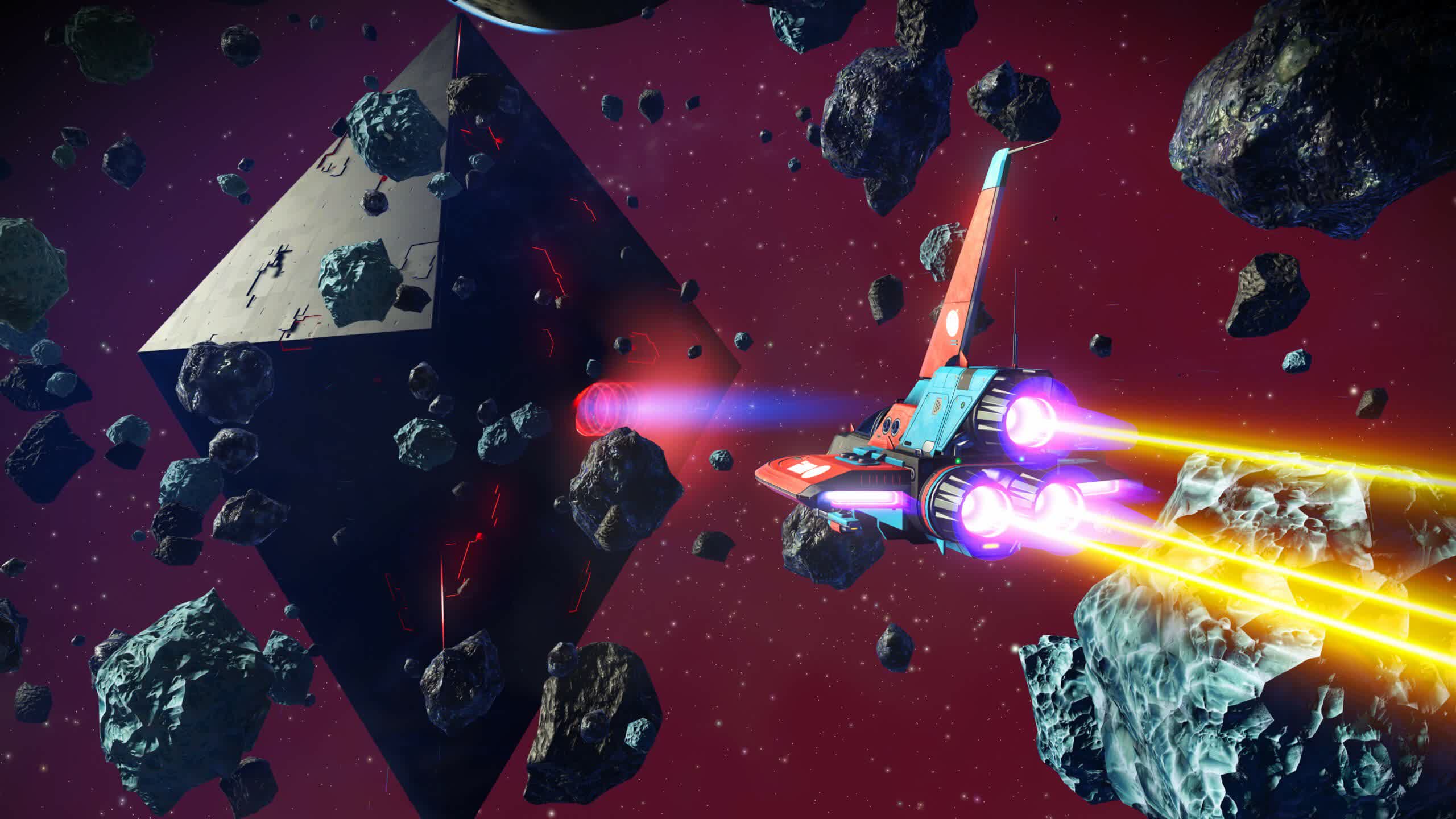

Collaborating with eight developers, Google used footage from solo and co-op sessions of No Man's Sky, Goat Simulator 3, Teardown, Satisfactory, Valheim, and other games. The company focused on less violent sandbox titles with varied gameplay mechanics to broaden the set of actions SIMA could learn.

Although the AI can currently accomplish only simple tasks, like using a ladder or navigating around an obstacle, it does so accurately in response to natural-language commands. Furthermore, when trained on all of the games except one, SIMA still quickly applied its general skills to that final title. Google researchers hope that it can eventually complete complex, multi-step procedures. The AI also showed promise in new environments that the researchers built in Unity, suggesting that in-game training could carry over to non-gaming tasks, which could impact the field of robotics.

Another recent gaming-related DeepMind project, Genie, can turn static images into playable 2D video game levels. Google trained the AI on hundreds of thousands of hours of public videos of 2D platform games, eventually teaching it to make games out of sketches, photos, and renders.