Forward-looking: A new chapter in human-computer interaction is unfolding at Meta, where researchers are exploring how the muscles in our arms could soon take the place of traditional keyboards, mice, and touchscreens. At their Reality Labs division, scientists have developed an experimental wristband that reads the electrical signals produced when a person intends to move their fingers. This allows users to control digital devices using only subtle hand and wrist gestures.

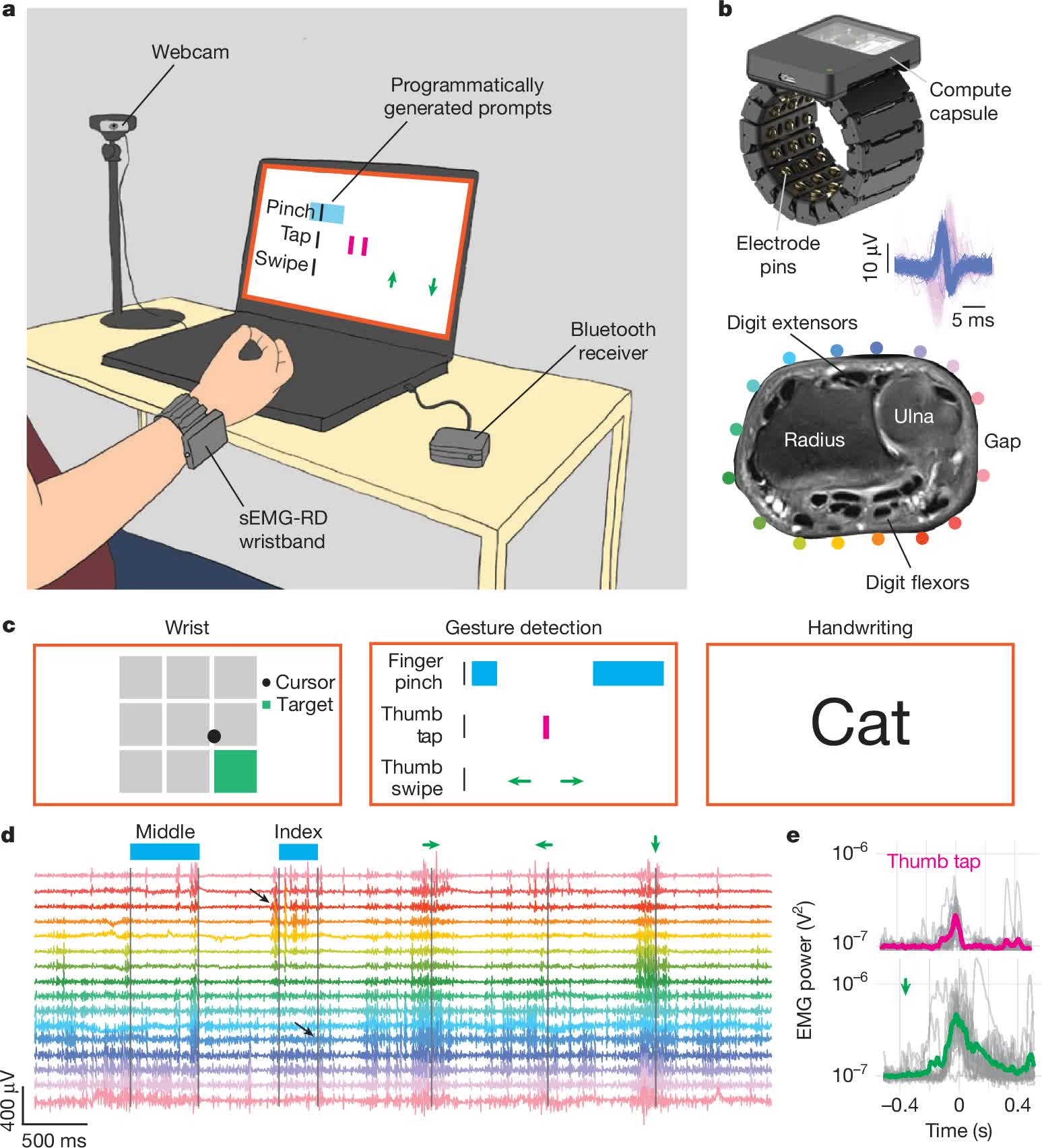

This technology draws on the field of electromyography, or EMG, which measures muscle activity by detecting the electrical signals generated as the brain sends commands to muscle fibers.

Typically, these signals have been used in medical settings, chiefly for enabling amputees to control prosthetic limbs. Meta's work, however, rekindles decades-old ideas by leveraging artificial intelligence to make EMG an intuitive interface for everyday computing.

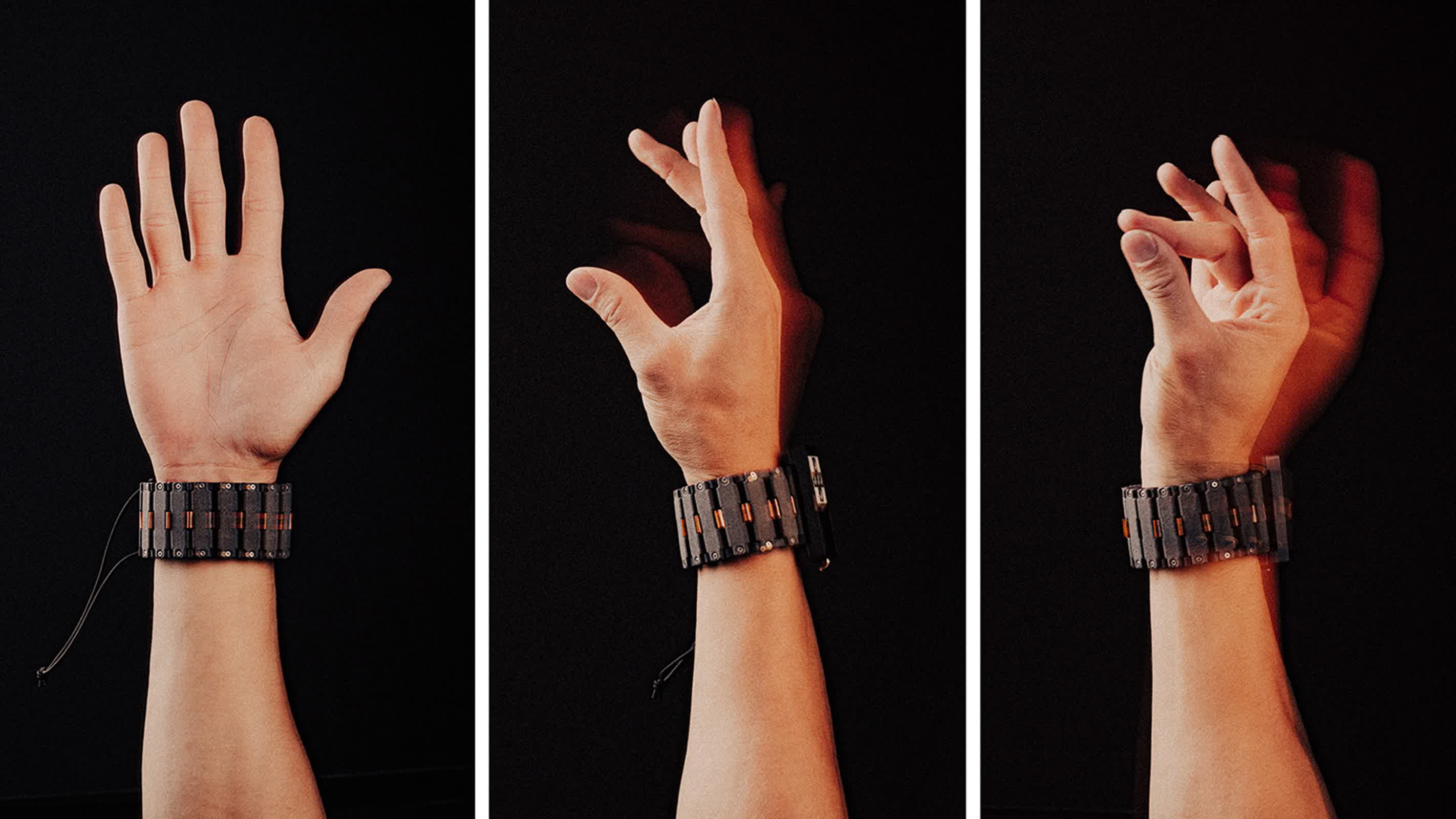

Since experiments began, the prototype wristband has evolved beyond its clunky early versions. Now, by detecting faint electrical pulses before any visible movement, the device allows actions like moving a laptop cursor, opening apps, or even writing in the air and seeing the text appear instantly on a screen.
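
For readers curious what "detecting faint electrical pulses" can look like in practice, here is a minimal sketch of a classic textbook approach to spotting the start of muscle activity: rectify the signal, smooth it into an envelope, and flag the first point where the envelope rises above a resting baseline. This is not Meta's method, which relies on deep learning models. The function names, parameter values, and synthetic data below are all assumptions made for illustration.

```python
import math
import random


def moving_average(values, window):
    """Trailing moving average; early samples average over what is available."""
    out = []
    running = 0.0
    for i, v in enumerate(values):
        running += v
        if i >= window:
            running -= values[i - window]
        out.append(running / min(i + 1, window))
    return out


def detect_onset(signal, window=20, baseline_len=100, k=3.0):
    """Return the first index where the smoothed envelope exceeds
    baseline mean + k * baseline std, or None if it never does.

    Baseline statistics come from the rectified (unsmoothed) samples
    in the first `baseline_len` samples, assumed to be at rest.
    """
    rectified = [abs(s) for s in signal]
    envelope = moving_average(rectified, window)

    base = rectified[:baseline_len]
    mean = sum(base) / len(base)
    std = math.sqrt(sum((b - mean) ** 2 for b in base) / len(base))
    threshold = mean + k * std

    for i in range(baseline_len, len(envelope)):
        if envelope[i] > threshold:
            return i
    return None


# Synthetic stand-in for one EMG channel (hypothetical data, not a real recording):
# 300 samples of low-amplitude resting noise, then 200 samples of a stronger burst.
rng = random.Random(0)
quiet = [rng.gauss(0.0, 0.05) for _ in range(300)]
burst = [rng.gauss(0.0, 1.0) for _ in range(200)]
signal = quiet + burst

onset = detect_onset(signal)
print("Estimated onset sample:", onset)
```

A fixed threshold like this only marks when activity starts. Telling one gesture from another, across many different wearers, is the harder problem that trained models are meant to solve.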

"You don't have to actually move," Thomas Reardon, Meta's vice president of research heading the project, told The New York Times. "You just have to intend the move." This distinction – where intent is enough to trigger action – is a leap forward that could outpace the very fingers and hands we've long relied upon.

Unlike some emerging neurotechnology approaches, Meta's solution is entirely noninvasive. Startups like Neuralink and Synchron are focused on brain-implanted or vascular devices that read thoughts directly, an approach that complicates adoption and raises risks. Meta sidesteps surgery: anyone can fasten this wristband and begin using it, thanks to advanced AI models trained on the muscle signals of thousands of volunteers.

"The breakthrough here is that Meta has used artificial intelligence to analyze very large amounts of data, from thousands of individuals, and make this technology robust. It now performs at a level it has never reached before," Dario Farina, a bioengineering professor at Imperial College, London, who has tested the system, told The New York Times.

With EMG data from 10,000 people, Meta engineered deep learning models capable of decoding intentions and gestures without user-specific training.

Patrick Kaifosh, director of research science at Reality Labs and a co-founder of Ctrl Labs, which was acquired by Meta in 2019, explained that "out of the box, it can work with a new user it has never seen data for." The same AI foundations that support this wristband also drive progress in handwriting recognition and gesture control, freeing users from physical keyboards and screens.

Reardon credits years of steady research, starting at Ctrl Labs, for bringing the technology to its current level. "We can see the electrical signal before your finger even moves," he said. "We can listen to a single neuron. We are working at the atomic level of the nervous system."

Beyond serving able-bodied users, the technology shows promise for those with limited mobility. At Carnegie Mellon University, researchers are already evaluating the wristband with people who have spinal cord injuries, allowing them to operate computers and smartphones despite impaired hand function. The system's ability to pick up faint traces of intention – sometimes before any visible movement – brings hope to those who have lost traditional motor abilities. "We can see their intention to type," said Douglas Weber, professor of mechanical engineering and neuroscience at Carnegie Mellon.

Meta aims to fold this technology into consumer products in the coming years, envisioning an era when everyday tasks – from typing to sending messages – are performed invisibly, with the flick of a muscle or the mere intent to act.

The company's recent study published in Nature reports that with continued AI personalization, accuracy in decoding gestures can exceed that of previous approaches, even keeping up with rapid muscle commands. The study also describes a public dataset containing over 100 hours of EMG recordings, inviting the scientific community to build on its findings and accelerate progress in neuromotor interfaces.

Reardon, widely recognized as the architect of Internet Explorer, now sees the challenge as making "technology robust and available to everyone." He adds: "It feels like the device is reading your mind, but it is not. It is just translating your intention. It sees what you are about to do."