Facebook on Thursday announced plans to open-source its latest Open Rack-compatible hardware design for AI computing, codenamed Big Sur.

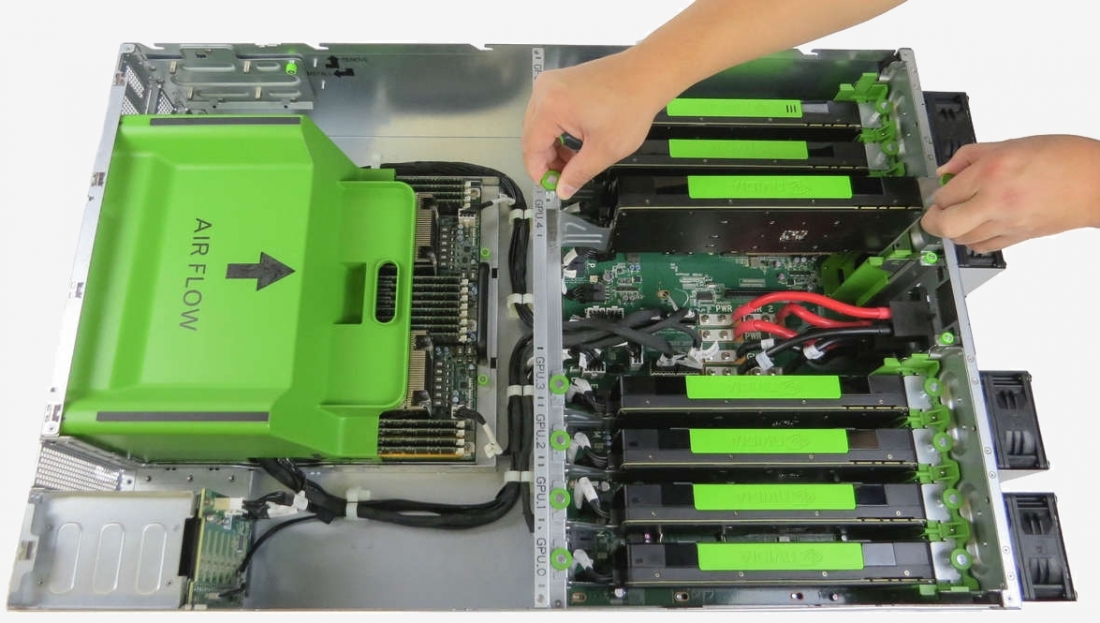

As Facebook staffers Kevin Lee and Serkan Piantino explain, Big Sur was built to house up to eight high-performance GPUs drawing up to 300 watts each. Leveraging Nvidia's Tesla Accelerated Computing Platform, the duo claim Big Sur is twice as fast as previous generations, which were built using off-the-shelf components and designs.

The speed increase means they can train neural networks twice as fast and explore networks that are twice as large as before (the extra speed is what makes the larger networks practical to train). What's more, because training can be distributed across eight GPUs, the size and speed of networks can be scaled by another factor of two.
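As a rough back-of-the-envelope sketch of the figures above: the function and constant names below are illustrative and do not come from Facebook's announcement, and the calculation assumes the two factors of two compound multiplicatively, which the announcement does not state outright. The power figure covers the GPUs only, not the rest of the system.

```python
# Figures quoted in the article; all names here are illustrative only.
MAX_GPUS = 8               # Big Sur holds up to eight GPUs
MAX_WATTS_PER_GPU = 300    # each GPU may draw up to 300 watts
GENERATION_SPEEDUP = 2.0   # Big Sur vs. the previous generation
MULTI_GPU_FACTOR = 2.0     # "another factor of two" from distributed training


def peak_gpu_power_watts(gpus: int = MAX_GPUS,
                         watts_per_gpu: int = MAX_WATTS_PER_GPU) -> int:
    """Upper bound on GPU power draw for a fully populated chassis."""
    return gpus * watts_per_gpu


def combined_scaling(generation: float = GENERATION_SPEEDUP,
                     multi_gpu: float = MULTI_GPU_FACTOR) -> float:
    """Overall scaling, ASSUMING the two quoted factors simply multiply."""
    return generation * multi_gpu


if __name__ == "__main__":
    print(peak_gpu_power_watts())  # 2400
    print(combined_scaling())      # 4.0
```

Under those assumptions, a fully loaded system tops out at 2,400 watts for the GPUs alone, and the combined gain over the previous generation works out to roughly a factor of four.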

It may seem foolish for a tech giant like Facebook to openly give away its AI secrets, but it's actually a strategic move that will help both the social network and the artificial intelligence community advance at a faster pace.

Think about it. If Facebook can hire employees who already have experience with its AI systems, that'll no doubt save the company time and money on training new staff. It's also not unheard of, as Google essentially did the same thing last month by making its artificial intelligence engine, TensorFlow, open source.

Facebook said it will submit the design materials to the Open Compute Project (OCP) but didn't say exactly when that will happen.