AMD is turning its GPU compute expertise toward the next big thing in the server market: machine learning and artificial intelligence. At its Tech Summit 2016 in California last week, the company announced a machine intelligence initiative called Radeon Instinct, which gives server designers and developers a combined hardware and software platform for machine learning workloads.

Radeon Instinct combines hardware and software to deliver a full machine intelligence platform. AMD hopes that industries such as financial services, life sciences, and cloud computing, all of which are moving quickly to machine learning, will choose Radeon Instinct for their compute requirements.

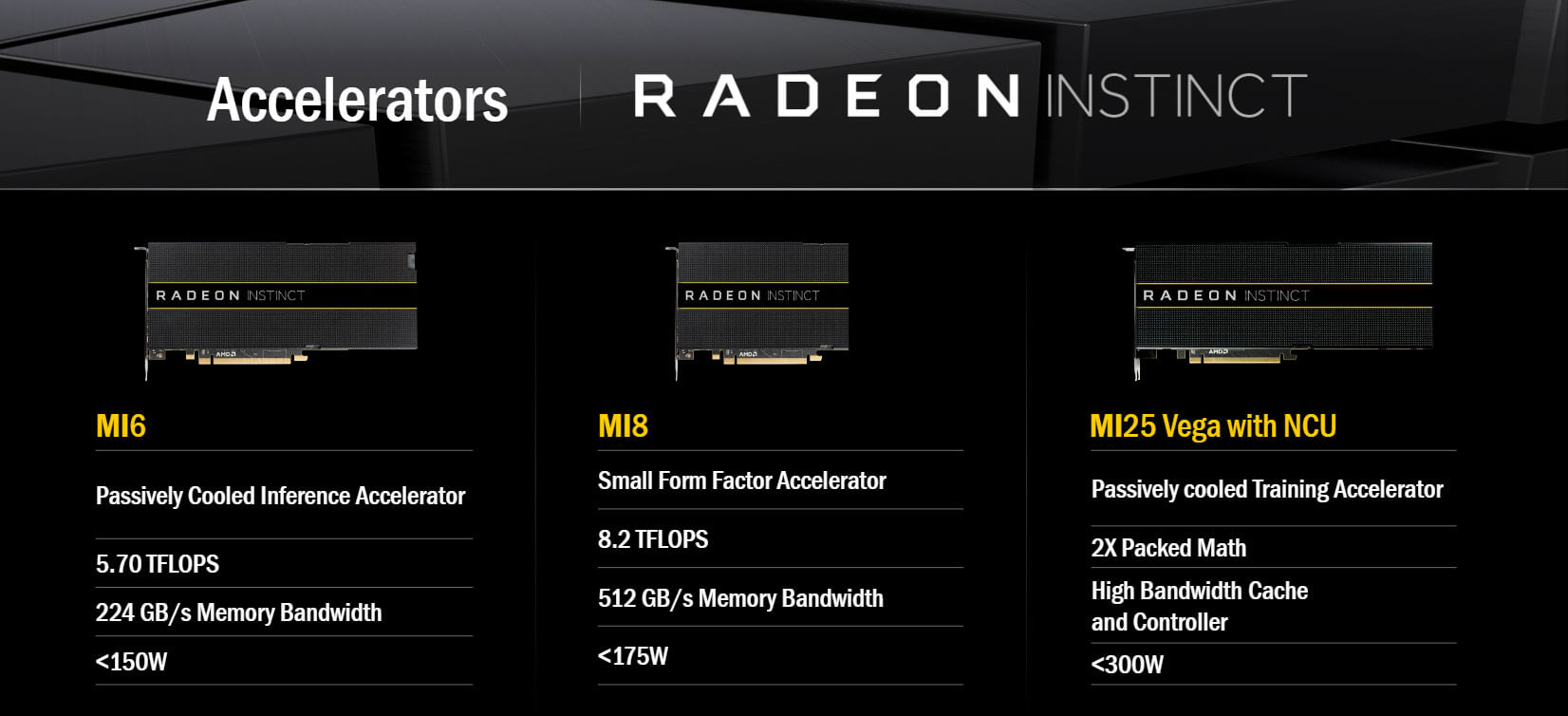

On the hardware front, AMD has developed a set of GPU accelerators for server farms, geared specifically toward machine intelligence workloads. The three Radeon Instinct Accelerators, set to be available in the first half of 2017, span three generations of AMD GPU architecture, including Vega, which is due in consumer-oriented cards in a similar time frame.

MI6 is the most basic of the three, and uses a Polaris GPU with 5.7 TFLOPs of compute performance along with 16GB of memory. MI8 steps up to a Fiji GPU with 8.2 TFLOPs of performance, and includes 4GB of HBM in a small form factor card similar to the Radeon R9 Nano.

MI25 is the most interesting of the three accelerators, as it uses Vega with AMD's next-generation compute units (AMD calls this NCU, although it's unclear whether the N actually stands for next-gen). AMD is keeping tight-lipped about the specifications of this accelerator, but judging by the card's name, MI25 should pack a huge 25 TFLOPs of FP16 compute in a sub-300W power envelope.

AMD lists these three accelerators as "passively cooled", although in reality they'll be slotted into servers with powerful (though external to the card) active cooling.

One of the most intriguing things announced by AMD was the combination of Radeon Instinct Accelerators with the upcoming Zen 'Naples' platform. With Naples, server designers will be able to pair many accelerators (in some cases up to 16) with a single CPU. Previous solutions required multi-socket CPU implementations and even PCIe expanders to achieve this functionality, but with Naples, GPU-heavy servers will be possible at a lower cost and footprint than before.

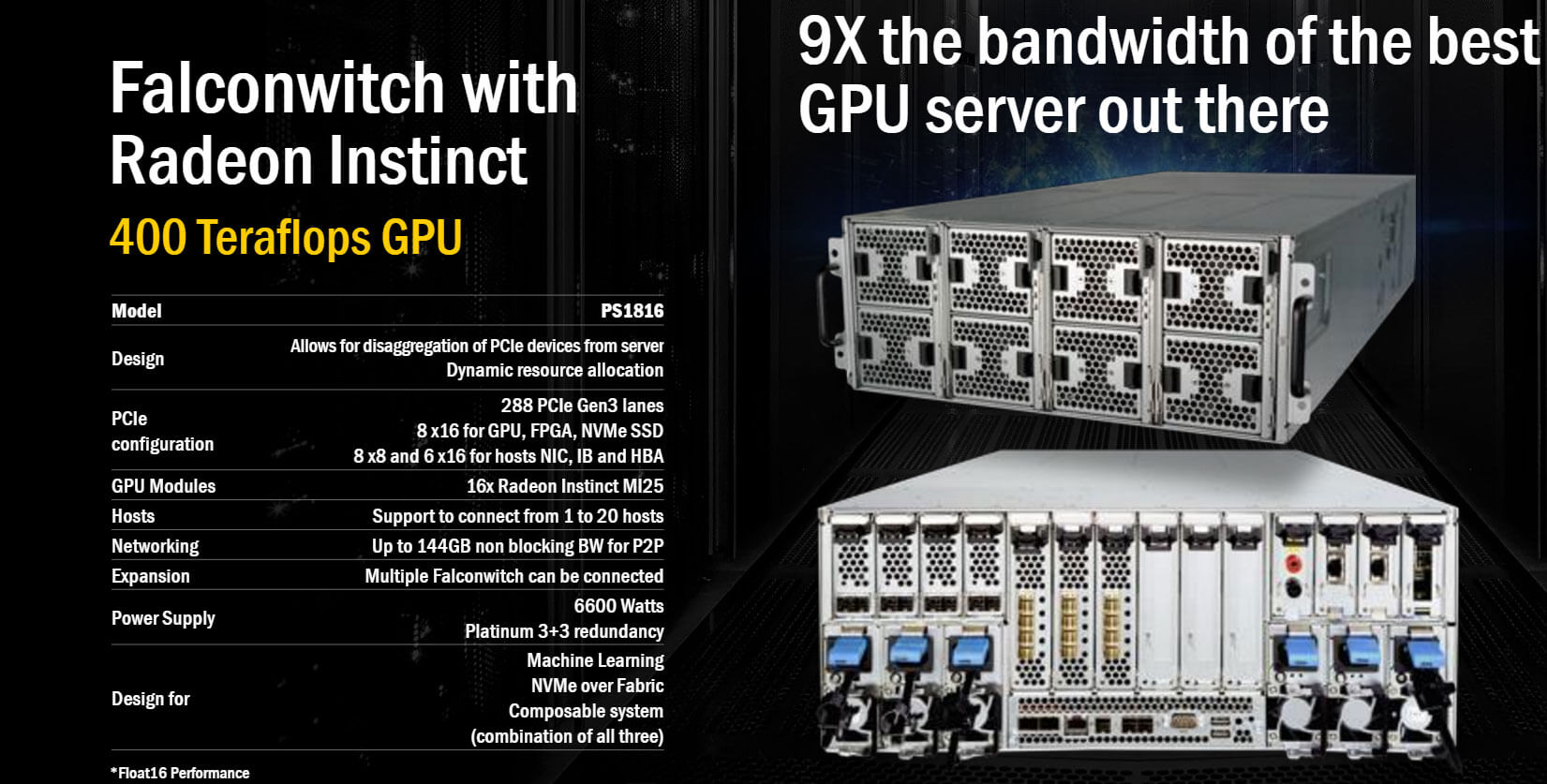

AMD showed off a number of server systems that will be possible thanks to Radeon Instinct. The most compelling was the Falconwitch with Radeon Instinct, a single unit with 16 MI25 accelerators inside, providing 400 teraflops of GPU compute. Using multiple units of this kind, Inventec will release a solution that produces 3 petaflops in a single rack.
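The headline figures above are simple peak-throughput arithmetic, and a short Python sketch makes the scaling explicit. It assumes the 25 TFLOPs per MI25 implied by the card's name (not a confirmed specification), perfect scaling across cards, and, purely for illustration, a rack built entirely from MI25 accelerators; the article does not state the rack's actual composition. The constant and function names are hypothetical.

```python
# Figures quoted in the article; names here are illustrative only.
MI25_TFLOPS_FP16 = 25        # implied by the MI25 name, not a confirmed spec
ACCELERATORS_PER_UNIT = 16   # Falconwitch configuration
RACK_TARGET_PFLOPS = 3       # quoted Inventec rack figure


def unit_tflops(per_card_tflops, cards):
    """Peak throughput of one server unit, assuming perfect scaling."""
    return per_card_tflops * cards


def cards_for_target(target_pflops, per_card_tflops):
    """Cards needed to reach a peak target, assuming perfect scaling."""
    return target_pflops * 1000 / per_card_tflops


falconwitch = unit_tflops(MI25_TFLOPS_FP16, ACCELERATORS_PER_UNIT)
rack_cards = cards_for_target(RACK_TARGET_PFLOPS, MI25_TFLOPS_FP16)

print(falconwitch)                          # 400 TFLOPs per unit
print(rack_cards)                           # 120.0 cards for 3 PFLOPs
print(rack_cards / ACCELERATORS_PER_UNIT)   # 7.5 Falconwitch-sized units
```

Under those assumptions, 3 petaflops works out to 120 MI25-class cards, or the equivalent of seven and a half fully populated 16-card units. These are peak numbers; sustained throughput on real workloads would be lower.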

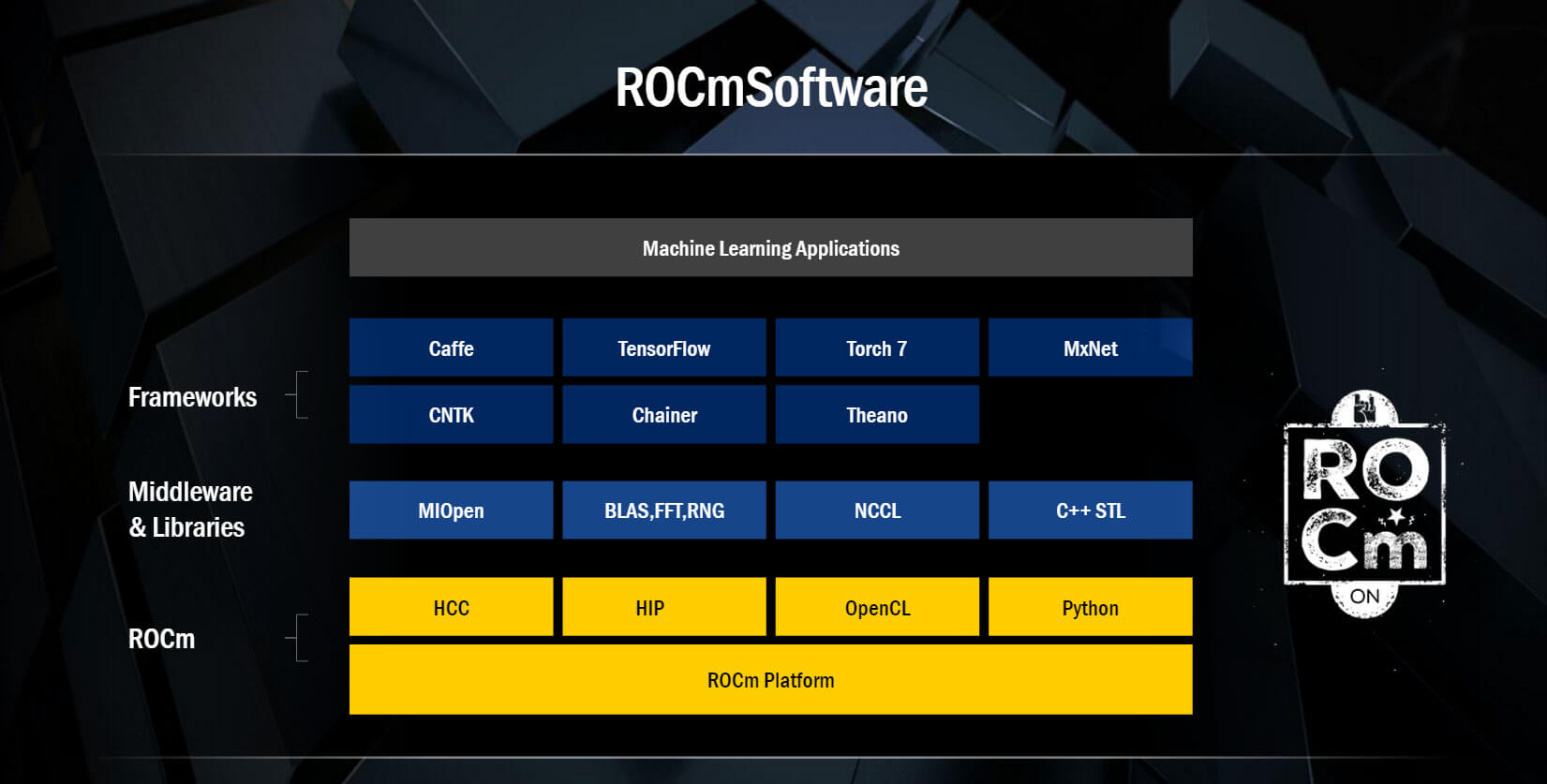

The Radeon Instinct platform will utilize several of AMD's compute software initiatives, including ROCm (Radeon Open Compute), the company's already-announced open-source compute platform. New at Tech Summit 2016 are ROCm optimizations for popular deep learning frameworks, including Caffe, Torch 7, and TensorFlow.

AMD has also extended its deep learning software platform with MIOpen, a new open-source library crafted specifically to improve machine intelligence performance on Radeon Instinct accelerators. MIOpen provides highly optimized implementations of common deep learning routines, and is a key pillar of Radeon Instinct.

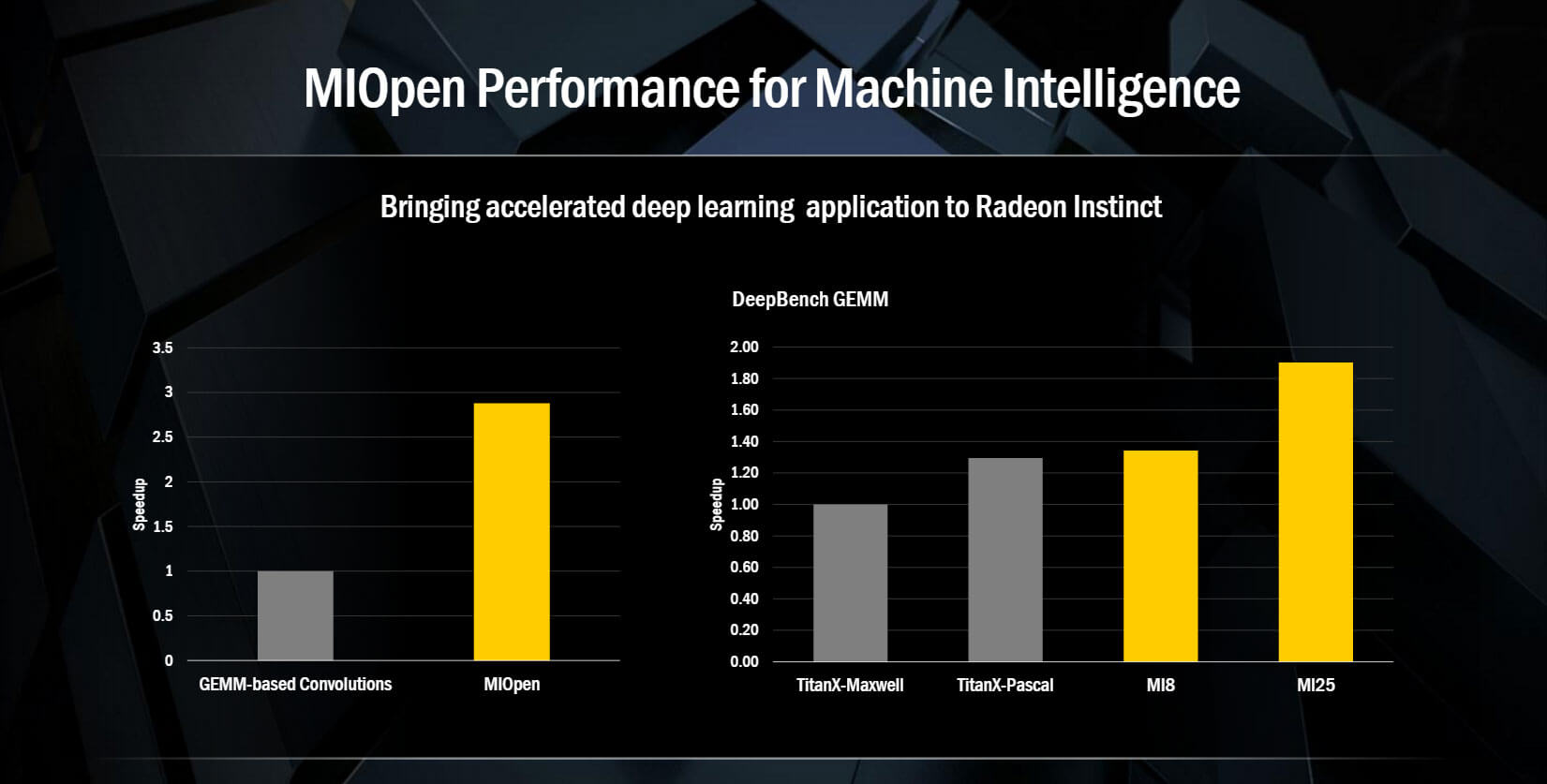

Using MIOpen, AMD achieved a near-3x improvement in deep learning convolution performance compared to a GEMM-based implementation. With just a few weeks of engineering, AMD also managed to narrowly beat Nvidia's Titan X (Pascal) in DeepBench using MIOpen and its MI8 accelerator.
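For readers unfamiliar with the term, a "GEMM-based" convolution usually means unrolling every input patch into a row of a matrix (often called im2col) and then running one general matrix multiply, instead of looping over the image directly. The pure-Python sketch below shows that the two approaches produce the same result on a tiny single-channel example. It is an illustration of the general technique only, not MIOpen's code or AMD's benchmark setup, and all function names are hypothetical.

```python
def direct_conv2d(image, kernel):
    """'Valid' 2D convolution (cross-correlation form) with explicit loops."""
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[y + dy][x + dx] * kernel[dy][dx]
                for dy in range(kh) for dx in range(kw))
            for x in range(ow)
        ]
        for y in range(oh)
    ]


def im2col(image, kh, kw):
    """Unroll every kh x kw patch of the image into one matrix row."""
    oh, ow = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [image[y + dy][x + dx] for dy in range(kh) for dx in range(kw)]
        for y in range(oh) for x in range(ow)
    ]


def matmul(a, b):
    """Plain matrix multiply standing in for an optimized GEMM routine."""
    return [
        [sum(a[i][p] * b[p][j] for p in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def gemm_conv2d(image, kernel):
    """Same convolution expressed as im2col followed by a single GEMM."""
    kh, kw = len(kernel), len(kernel[0])
    ow = len(image[0]) - kw + 1
    patches = im2col(image, kh, kw)                  # (oh*ow) x (kh*kw)
    weights = [[v] for row in kernel for v in row]   # (kh*kw) x 1
    flat = [row[0] for row in matmul(patches, weights)]
    return [flat[i:i + ow] for i in range(0, len(flat), ow)]


image = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
kernel = [[1, 0], [0, -1]]
print(direct_conv2d(image, kernel))  # [[-4, -4], [-4, -4]]
print(gemm_conv2d(image, kernel))    # [[-4, -4], [-4, -4]]
```

The GEMM route is popular because it reuses heavily tuned matrix-multiply kernels, but the unrolled patch matrix duplicates overlapping input values, which costs memory and bandwidth. That overhead is one plausible reason a purpose-built convolution library could pull ahead, though the article does not say where MIOpen's gains come from.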

The MIOpen library will be available in Q1 2017, while Radeon Instinct Accelerators will launch in the first half of 2017. Server platforms from partners should be available shortly thereafter, giving machine intelligence customers a complete hardware and software platform for the rapidly expanding deep learning market.