Online forums and message boards are meant to foster the sharing of ideas on a particular topic. But as anyone who has scrolled past the end of a story or video will tell you, things often turn from amicable to flat-out vicious in the blink of an eye.

The problem has only compounded over the years as more and more people make their way online, but it's not going unchallenged.

On Thursday, Google offshoot Jigsaw released to developers an API called Perspective that aims to keep online trolling in check.

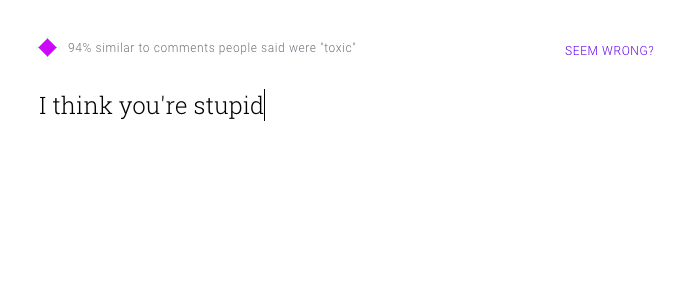

Perspective uses machine learning to determine the "toxicity" of online speech, thus allowing it to identify insults, harassment and abusive comments. As Wired highlights, the automatic moderation works much faster than a human moderator and is far more accurate than a simple keyword blacklist.

Those wanting to see Perspective in action are encouraged to check out the newly launched demonstration website. On it, you'll be able to enter phrases and observe how the tool rates them for toxicity.

A number of large publications, including The New York Times, are planning to use it as a first line of defense in their comments sections. The Guardian and The Economist, meanwhile, are said to be already experimenting with the API to see how it might improve their feedback platforms.
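To make the "first line of defense" idea concrete, here is a minimal sketch of how a publisher might route comments once each one has a toxicity score. It assumes the score is a number between 0 and 1; the function name `triage`, the two thresholds and the three outcome labels are hypothetical, chosen for illustration rather than taken from Jigsaw or any of the publications mentioned.

```python
# Hypothetical thresholds for illustration only; a real site would tune these.
APPROVE_BELOW = 0.3   # scores under this are published without review
HOLD_ABOVE = 0.9      # scores over this are held back automatically


def triage(toxicity: float,
           approve_below: float = APPROVE_BELOW,
           hold_above: float = HOLD_ABOVE) -> str:
    """Route a comment based on an assumed 0-to-1 toxicity score.

    Returns "publish", "hold", or "human_review" (labels are made up here).
    """
    if not 0.0 <= toxicity <= 1.0:
        raise ValueError("toxicity score is assumed to lie between 0 and 1")
    if toxicity < approve_below:
        return "publish"
    if toxicity > hold_above:
        return "hold"
    # Anything in the uncertain middle band goes to a human moderator.
    return "human_review"


if __name__ == "__main__":
    # Made-up scores, not real Perspective output.
    for score in (0.05, 0.5, 0.95):
        print(score, triage(score))
```

The point of the middle band is that the automated score only handles the clear-cut cases, leaving human moderators to judge the ambiguous ones.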

Of course, not everyone is on board with this level of censorship. Feminist writer Sady Doyle told Wired last summer, when the broader Conversation AI initiative launched, that people need to be able to talk in whatever register they talk.

What are your thoughts on the matter? Should Google and others be working to clean up the trolling and hateful nature of comments sections or should this organic bit of conversation be left unaltered?

Lead image via Kirill M, Shutterstock